
Why Scientists Are Linking More Diseases to Light at Night

This October, millions of Americans missed out on two of the most spectacular shows in the universe: the northern lights and a rare comet. Even if you were aware of them, light pollution made them difficult to see, unless you went to a dark area and let your eyes adjust.

It’s not getting any easier — the night sky over North America has been growing brighter by about 10% per year since 2011. More and more research is linking all that light pollution to a surprising range of health consequences: cancer, heart disease, diabetes, Alzheimer’s disease, and even low sperm quality, though the reasons for these troubling associations are not always clear.

“We’ve lost the contrast between light and dark, and we are confusing our physiology on a regular basis,” said John Hanifin, PhD, associate director of Thomas Jefferson University’s Light Research Program.

Our own galaxy is invisible to nearly 80% of people in North America. In 1994, an earthquake-triggered blackout in Los Angeles led to calls to the Griffith Observatory from people wondering about that hazy blob of light in the night sky. It was the Milky Way.

Glaring headlights, illuminated buildings, blazing billboards, and streetlights fill our urban skies with a glow that affects even rural residents. Indoors, we’ve kept our homes bright at night since the invention of the lightbulb. Now we’ve also added blue light-emitting devices (smartphones, television screens, tablets), which have been linked to sleep problems.

But outdoor light may matter for our health, too. “Every photon counts,” Hanifin said.

Bright Lights, Big Problems

For one 2024 study, researchers used satellite data to measure light pollution at the home addresses of more than 13,000 people. Those who lived in places with the brightest night skies had a 31% higher risk of high blood pressure. Another study, from Hong Kong, found a 29% higher risk of death from coronary heart disease, and yet another found a 17% higher risk of cerebrovascular disease, such as stroke or brain aneurysm.

Of course, urban areas also have air pollution, noise, and a lack of greenery. In some studies, scientists controlled for these factors, and the correlation remained strong (although air pollution with fine particulate matter appeared to be worse for heart health than outdoor light).

Research has found links between the nighttime glow outside and other diseases:

Breast cancer. “It’s a very strong correlation,” said Randy Nelson, PhD, a neuroscientist at West Virginia University. A study of more than 100,000 teachers in California revealed that women living in areas with the most light pollution had a 12% higher risk. That effect is comparable to increasing your intake of ultra-processed foods by 10%.

Alzheimer’s disease. In a study published this fall, outdoor light at night was more strongly linked to the disease than even alcohol misuse or obesity.

Diabetes. In one recent study, people living in the most illuminated areas had a 28% higher risk of diabetes than those living in much darker places. Across China, the scientists concluded, 9 million cases of diabetes could be linked to light pollution.

What Happens in Your Body When You’re Exposed to Light at Night

Much of the story centers on melatonin, the “hormone of darkness.” “Darkness is very important,” Hanifin said. When he and his colleagues began studying the effects of light on human physiology decades ago, “people thought we were borderline crazy,” he said.

Nighttime illumination affects the health and behavior of species as diverse as Siberian hamsters, zebra finches, mice, crickets, and mosquitoes. Like most creatures on Earth, humans have internal clocks that are synced to the 24-hour cycle of day and night. The master clock is in your hypothalamus, a diamond-shaped part of the brain, but every cell in your body has its own clock, too. Many physiological processes run on circadian rhythms (a term derived from a Latin phrase meaning “about a day”), from the sleep-wake cycle to hormone secretion, as well as processes involved in cancer progression, such as cell division.

“There are special photoreceptors in the eye that don’t deal with visual information. They just send light information,” Nelson said. “If you get light at the wrong time, you’re resetting the clocks.”

This internal clock “prepares the body for various recurrent challenges, such as eating,” said Christian Benedict, PhD, a sleep researcher at Uppsala University, Sweden. “Light exposure [at night] can mess up this very important system.” This could mean, for instance, that your insulin is released at the wrong time, Benedict said, causing “a jet lag-ish condition that will then impair the ability to handle blood sugar.” Animal studies show that exposure to light at night can reduce glucose tolerance and alter insulin secretion, both potential pathways to diabetes.

The hormone melatonin, which the brain’s pineal gland produces in darkness, is a key player in this modern struggle. Melatonin helps you sleep, synchronizes the body’s circadian rhythms, protects neurons from damage, regulates the immune system, and fights inflammation. But even a sliver of light at night can suppress its secretion: less than 30 lux, about the level of a pedestrian street at night, can cut melatonin by half.

When lab animals are exposed to nighttime light, they “show enormous neuroinflammation” — that is, inflammation of nervous tissue, Nelson said. In one experiment on humans, those who slept immersed in weak light had higher levels of C-reactive protein in their blood, a marker of inflammation.

Low melatonin has also been linked to cancer. It “allows the metabolic machinery of the cancer cells to be active,” Hanifin said. One of melatonin’s effects is stimulation of natural killer cells, which can recognize and destroy cancer cells. What’s more, when melatonin plunges, estrogen may go up, which could explain the link between light at night and breast cancer (estrogen fuels tumor growth in breast cancers).

Researchers concede that satellite data might be too coarse to estimate how much light people are actually exposed to while they sleep. Plus, many of us are staring at bright screens. “But the studies keep coming,” Nelson said, suggesting that outdoor light pollution does have an impact.

When researchers put wrist-worn light sensors on more than 80,000 people in Britain, they found that the more light a device registered between 12:30 a.m. and 6 a.m., the higher its wearer’s risk of developing diabetes several years later, regardless of how long the person had actually slept. According to the study’s authors, this supports the findings from satellite data.

A similar study, which used actigraphy (wrist-worn activity monitors) with built-in light sensors to measure whether people slept in complete darkness for at least five hours, found that light pollution raised the risk of heart disease by 74%.

What Can You Do About This?

Not everyone’s melatonin is affected by nighttime light to the same degree. “Some people are very much sensitive to very dim light, whereas others are not as sensitive and need far, far more light stimulation [to impact melatonin],” Benedict said. In one study, some volunteers needed 350 lux to cut their melatonin by half. For them, flipping on the bathroom light at night wouldn’t matter; for others, a mere 6 lux, which is dimmer than twilight, had the same effect.

You can protect yourself by keeping your bedroom lights off and your screens stashed away, but avoiding outdoor light pollution may be harder. You can invest in high-quality blackout curtains, of course, although some light may still seep inside. You can plant trees in front of your windows, reorient any motion-detector lights, and even petition your local government to reduce over-illumination of buildings and to choose better streetlights. You can support organizations, such as the International Dark-Sky Association, that work to preserve darkness.

Last but not least, you might want to change your habits. If you live in a particularly light-polluted area, such as the District of Columbia, which tops the nation for urban light pollution, you might reconsider late-night walks or drives around the neighborhood. Instead, Hanifin said, read a book in bed while keeping the light “as dim as you can.” It’s “a much better idea versus being outside in midtown Manhattan,” he said. According to recent recommendations published by Hanifin and his colleagues, there should be no more than 1 lux of illumination at eye level while you sleep, about as much as you’d get from a lit candle 1 meter away.

And if we manage to preserve outdoor darkness, and the stars reappear (including the breathtaking Milky Way), we could reap more benefits — some research suggests that stargazing can elicit positive emotions, a sense of personal growth, and “a variety of transcendent thoughts and experiences.”

A version of this article appeared on WebMD.com.

The Appendix: Is It ‘Useless,’ or a Safe House and Immune Training Ground?

When doctors and patients consider the appendix, it’s often with urgency. In cases of appendicitis, the clock could be ticking down to a life-threatening burst. Thus, despite recent research suggesting antibiotics could be an alternative therapy, appendectomy remains standard for uncomplicated appendicitis.

But what if removing the appendix could raise the risk for gastrointestinal (GI) diseases like irritable bowel syndrome and colorectal cancer? That’s what some emerging science suggests. And though the research is early and mixed, it’s enough to give some health professionals pause.

“If there’s no reason to remove the appendix, then it’s better to have one,” said Heather Smith, PhD, a comparative anatomist at Midwestern University, Glendale, Arizona. Preemptive removal is not supported by the evidence, she said.

To be fair, we’ve come a long way since 1928, when American physician Miles Breuer, MD, suggested that people with infected appendixes should be left to perish, so as to remove their inferior DNA from the gene pool (he called such people “uncivilized” and “candidates for extinction”). Charles Darwin, while less radical, believed the appendix was at best useless — a mere vestige of our ancestors switching diets from leaves to fruits.

What we know now is that the appendix isn’t just a troublesome piece of worthless flesh. Instead, it may act as a safe house for friendly gut bacteria and a training camp for the immune system. It also appears to play a role in several medical conditions, from ulcerative colitis and colorectal cancer to Parkinson’s disease and lupus. The roughly 300,000 Americans who undergo appendectomy each year should be made aware of this, some experts say. But the frustrating truth is, scientists are still trying to figure out in which cases having an appendix is protective and in which we may be better off without it.

A ‘Worm’ as Intestinal Protection

The appendix is a blind pouch (meaning its ending is closed off) that extends from the large intestine. Not all mammals have one; it’s been found in several species of primates and rodents, as well as in rabbits, wombats, and Florida manatees, among others (dogs and cats don’t have it). While a human appendix “looks like a little worm,” Dr. Smith said, these anatomical structures come in various sizes and shapes. Some are thick, as in a beaver, while others are long and spiraling, like a rabbit’s.

Comparative anatomy studies reveal that the appendix has evolved independently at least 29 times throughout mammalian evolution. This suggests that “it has some kind of an adaptive function,” Dr. Smith said. When French scientists analyzed data from 258 species of mammals, they discovered that those that possess an appendix live longer than those without one. A possible explanation, the researchers wrote, may lie with the appendix’s role in preventing diarrhea.

A 2023 study by the same team supported this hypothesis. Based on veterinary records of 45 different species of primates housed in a French zoo, the scientists established that primates with appendixes are far less likely to suffer severe diarrhea than those that don’t possess this organ. The appendix, it appears, might be our tiny weapon against bowel troubles.

For immunologist William Parker, PhD, a visiting scholar at the University of North Carolina at Chapel Hill, these data are “about as good as we could hope for” in support of the idea that the appendix might protect mammals from GI problems. An experiment on humans would be unethical, Dr. Parker said. But observational studies offer clues.

One study showed that compared with people with an intact appendix, young adults with a history of appendectomy have more than double the risk of developing a serious infection with non-typhoidal Salmonella of the kind that would require hospitalization.

A ‘Safe House’ for Bacteria

Such studies add weight to a theory that Dr. Parker and his colleagues developed back in 2007: that the appendix acts as a “safe house” for beneficial gut bacteria.

Think of the colon as a wide pipe, Dr. Parker said, that may become contaminated with a pathogen such as Salmonella. Diarrhea follows, and the pipe gets repeatedly flushed, wiping everything clean, including your friendly gut microbiome. Luckily, “you’ve got this little offshoot of that pipe,” where the flow can’t really get in “because it’s so constricted,” Dr. Parker said. The friendly gut microbes can survive inside the appendix and repopulate the colon once diarrhea is over. Dr. Parker and his colleagues found that the human appendix contains a thick layer of beneficial bacteria. “They were right where we predicted they would be,” he said.

This safe house hypothesis could explain why the gut microbiome may be different in people who no longer have an appendix. In one small study, people who’d had an appendectomy had a less diverse microbiome, with a lower abundance of beneficial strains such as Butyricicoccus and Barnesiella, than did those with intact appendixes.

The appendix likely has a second function, too, Dr. Smith said: It may serve as a training camp for the immune system. “When there is an invading pathogen in the gut, it helps the GI system to mount the immune response,” she said. The human appendix is rich in special cells known as M cells. These act as scouts, detecting and capturing invasive bacteria and viruses and presenting them to the body’s defense team, such as the T lymphocytes.

If the appendix shelters beneficial bacteria and boosts immune response, that may explain its links to various diseases. According to an epidemiological study from Taiwan, patients who underwent an appendectomy had a 46% higher risk of developing irritable bowel syndrome (IBS), a disease associated with a low abundance of Butyricicoccus bacteria. This is why, the study authors wrote, doctors should pay careful attention to people who’ve had their appendixes removed, monitoring them for potential symptoms of IBS.

The same database helped uncover other connections between appendectomy and disease. For one, there was type 2 diabetes: Within 3 years of the surgery, patients under 30 had double the risk of developing this disorder. Then there was lupus: While those who underwent appendectomy generally had higher risk for this autoimmune disease, women were particularly affected.

The Contentious Connections

The most heated scientific discussion surrounds the links between the appendix and conditions such as Parkinson’s disease, ulcerative colitis, and colorectal cancer. A small 2019 study showed, for example, that appendectomy may improve symptoms of certain forms of ulcerative colitis that don’t respond to standard medical treatments. A third of patients improved after their appendix was removed, and 17% fully recovered.

Why? According to Dr. Parker, appendectomy may work for ulcerative colitis because it’s “a way of suppressing the immune system, especially in the lower intestinal areas.” A 2023 meta-analysis found that people who’d had their appendix removed before being diagnosed with ulcerative colitis were less likely to need their colon removed later on.

Such a procedure may have a serious side effect, however: colorectal cancer. French scientists found that removing the appendix may reduce the numbers of certain immune cells, CD3+ and CD8+ T cells, weakening immune surveillance. As a result, tumor cells might escape detection.

Yet the links between appendix removal and cancer are far from clear. A recent meta-analysis found that although people who had undergone appendectomy generally had a higher risk for colorectal cancer, the association was not significant among Europeans. In fact, appendix removal appeared to protect European women from this form of cancer. Dr. Parker suggests such mixed results may stem from the wide variation in treatments and populations. The issue “may depend on complex social and medical factors,” he said.

Things also appear complicated with Parkinson’s disease — another condition linked to the appendix. A large epidemiological study showed that appendectomy is associated with a lower risk for Parkinson’s disease and a delayed age of Parkinson’s onset. It also found that a normal appendix contains α-synuclein, a protein that may accumulate in the brain and contribute to the development of Parkinson’s. “Although α-synuclein is toxic when in the brain, it appears to be quite normal when present in the appendix,” said Luis Vitetta, PhD, MD, a clinical epidemiologist at the University of Sydney, Camperdown, Australia. Yet, not all studies find that removing the appendix lowers the risk for Parkinson’s. In fact, some show the opposite results.

How Should Doctors View the Appendix?

Even with these mysteries and contradictions, Dr. Vitetta said, a healthy appendix in a healthy body appears to be protective. This is why, he said, when someone is diagnosed with appendicitis, careful assessment is essential before surgery is performed.

“Perhaps an antibiotic can actually help fix it,” he said. A 2020 study published in The New England Journal of Medicine showed that antibiotics may indeed be a good alternative to surgery for the treatment of appendicitis. “We don’t want necessarily to remove an appendix that could be beneficial,” Dr. Smith said.

The many links between the appendix and various diseases mean that doctors should be more vigilant when treating patients who’ve had this organ removed, Dr. Parker said. “When a patient loses an appendix, depending on their environment, there may be effects on infection and cancer. So they might need more regular checkups,” he said. This could include monitoring for IBS and colorectal cancer.

What’s more, Dr. Parker believes that research on the appendix puts even more emphasis on the need to protect the gut microbiome — such as taking probiotics with antibiotics. And while we are still a long way from understanding how exactly this worm-like structure affects various diseases, one thing appears quite certain: The appendix is not useless. “If Darwin had the information that we have, he would not have drawn these conclusions,” Dr. Parker said.

A version of this article first appeared on Medscape.com.

When doctors and patients consider the appendix, it’s often with urgency. In cases of appendicitis, the clock could be ticking down to a life-threatening burst. Thus, despite recent research suggesting antibiotics could be an alternative therapy, appendectomy remains standard for uncomplicated appendicitis.

But what if removing the appendix could raise the risk for gastrointestinal (GI) diseases like irritable bowel syndrome and colorectal cancer? That’s what some emerging science suggests. And though the research is early and mixed, it’s enough to give some health professionals pause.

“If there’s no reason to remove the appendix, then it’s better to have one,” said Heather Smith, PhD, a comparative anatomist at Midwestern University, Glendale, Arizona. Preemptive removal is not supported by the evidence, she said.

To be fair, we’ve come a long way since 1928, when American physician Miles Breuer, MD, suggested that people with infected appendixes should be left to perish, so as to remove their inferior DNA from the gene pool (he called such people “uncivilized” and “candidates for extinction”). Charles Darwin, while less radical, believed the appendix was at best useless — a mere vestige of our ancestors switching diets from leaves to fruits.

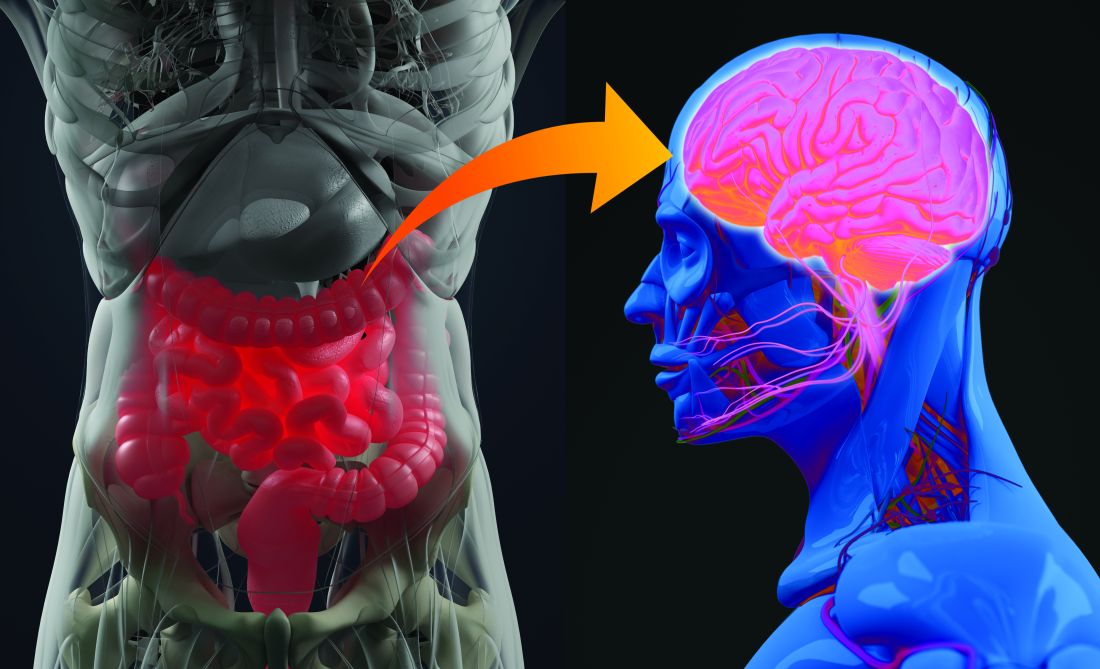

What we know now is that the appendix isn’t just a troublesome piece of worthless flesh. Instead, it may act as a safe house for friendly gut bacteria and a training camp for the immune system. It also appears to play a role in several medical conditions, from ulcerative colitis and colorectal cancer to Parkinson’s disease and lupus. The roughly 300,000 Americans who undergo appendectomy each year should be made aware of this, some experts say. But the frustrating truth is, scientists are still trying to figure out in which cases having an appendix is protective and in which we may be better off without it.

A ‘Worm’ as Intestinal Protection

The appendix is a blind pouch (meaning its ending is closed off) that extends from the large intestine. Not all mammals have one; it’s been found in several species of primates and rodents, as well as in rabbits, wombats, and Florida manatees, among others (dogs and cats don’t have it). While a human appendix “looks like a little worm,” Dr. Smith said, these anatomical structures come in various sizes and shapes. Some are thick, as in a beaver, while others are long and spiraling, like a rabbit’s.

Comparative anatomy studies reveal that the appendix has evolved independently at least 29 times throughout mammalian evolution. This suggests that “it has some kind of an adaptive function,” Dr. Smith said. When French scientists analyzed data from 258 species of mammals, they discovered that those that possess an appendix live longer than those without one. A possible explanation, the researchers wrote, may lie with the appendix’s role in preventing diarrhea.

Their 2023 study supported this hypothesis. Based on veterinary records of 45 different species of primates housed in a French zoo, the scientists established that primates with appendixes are far less likely to suffer severe diarrhea than those that don’t possess this organ. The appendix, it appears, might be our tiny weapon against bowel troubles.

For immunologist William Parker, PhD, a visiting scholar at the University of North Carolina at Chapel Hill, these data are “about as good as we could hope for” in support of the idea that the appendix might protect mammals from GI problems. An experiment on humans would be unethical, Dr. Parker said. But observational studies offer clues.

One study showed that compared with people with an intact appendix, young adults with a history of appendectomy have more than double the risk of developing a serious infection with non-typhoidal Salmonella of the kind that would require hospitalization.

A ‘Safe House’ for Bacteria

Such studies add weight to a theory that Dr. Parker and his colleagues developed back in 2007: That the appendix acts as a “safe house” for beneficial gut bacteria.

Think of the colon as a wide pipe, Dr. Parker said, that may become contaminated with a pathogen such as Salmonella. Diarrhea follows, and the pipe gets repeatedly flushed, wiping everything clean, including your friendly gut microbiome. Luckily, “you’ve got this little offshoot of that pipe,” where the flow can’t really get in “because it’s so constricted,” Dr. Parker said. The friendly gut microbes can survive inside the appendix and repopulate the colon once diarrhea is over. Dr. Parker and his colleagues found that the human appendix contains a thick layer of beneficial bacteria. “They were right where we predicted they would be,” he said.

This safe house hypothesis could explain why the gut microbiome may be different in people who no longer have an appendix. In one small study, people who’d had an appendectomy had a less diverse microbiome, with a lower abundance of beneficial strains such as Butyricicoccus and Barnesiella, than did those with intact appendixes.

The appendix likely has a second function, too, Dr. Smith said: It may serve as a training camp for the immune system. “When there is an invading pathogen in the gut, it helps the GI system to mount the immune response,” she said. The human appendix is rich in special cells known as M cells. These act as scouts, detecting and capturing invasive bacteria and viruses and presenting them to the body’s defense team, such as the T lymphocytes.

If the appendix shelters beneficial bacteria and boosts immune response, that may explain its links to various diseases. According to an epidemiological study from Taiwan,patients who underwent an appendectomy have a 46% higher risk of developing irritable bowel syndrome (IBS) — a disease associated with a low abundance of Butyricicoccus bacteria. This is why, the study authors wrote, doctors should pay careful attention to people who’ve had their appendixes removed, monitoring them for potential symptoms of IBS.

The same database helped uncover other connections between appendectomy and disease. For one, there was type 2 diabetes: Within 3 years of the surgery, patients under 30 had double the risk of developing this disorder. Then there was lupus: While those who underwent appendectomy generally had higher risk for this autoimmune disease, women were particularly affected.

The Contentious Connections

The most heated scientific discussion surrounds the links between the appendix and conditions such as Parkinson’s disease, ulcerative colitis, and colorectal cancer. A small 2019 study showed, for example, that appendectomy may improve symptoms of certain forms of ulcerative colitis that don’t respond to standard medical treatments. A third of patients improved after their appendix was removed, and 17% fully recovered.

Why? According to Dr. Parker, appendectomy may work for ulcerative colitis because it’s “a way of suppressing the immune system, especially in the lower intestinal areas.” A 2023 meta-analysis found that people who’d had their appendix removed before being diagnosed with ulcerative colitis were less likely to need their colon removed later on.

Such a procedure may have a serious side effect, however: Colorectal cancer. French scientists discovered that removing the appendix may reduce the numbers of certain immune cells called CD3+ and CD8+ T cells, causing a weakened immune surveillance. As a result, tumor cells might escape detection.

Yet the links between appendix removal and cancer are far from clear. A recent meta-analysis found that while people with appendectomies generally had a higher risk for colorectal cancer, for Europeans, these effects were insignificant. In fact, removal of the appendix actually protected European women from this particular form of cancer. For Parker, such mixed results may stem from the fact that treatments and populations vary widely. The issue “may depend on complex social and medical factors,” Dr. Parker said.

Things also appear complicated with Parkinson’s disease — another condition linked to the appendix. A large epidemiological study showed that appendectomy is associated with a lower risk for Parkinson’s disease and a delayed age of Parkinson’s onset. It also found that a normal appendix contains α-synuclein, a protein that may accumulate in the brain and contribute to the development of Parkinson’s. “Although α-synuclein is toxic when in the brain, it appears to be quite normal when present in the appendix,” said Luis Vitetta, PhD, MD, a clinical epidemiologist at the University of Sydney, Camperdown, Australia. Yet, not all studies find that removing the appendix lowers the risk for Parkinson’s. In fact, some show the opposite results.

How Should Doctors View the Appendix?

Even with these mysteries and contradictions, Dr. Vitetta said, a healthy appendix in a healthy body appears to be protective. This is why, he said, when someone is diagnosed with appendicitis, careful assessment is essential before surgery is performed.

“Perhaps an antibiotic can actually help fix it,” he said. A 2020 study published in The New England Journal of Medicine showed that antibiotics may indeed be a good alternative to surgery for the treatment of appendicitis. “We don’t want necessarily to remove an appendix that could be beneficial,” Dr. Smith said.

The many links between the appendix and various diseases mean that doctors should be more vigilant when treating patients who’ve had this organ removed, Dr. Parker said. “When a patient loses an appendix, depending on their environment, there may be effects on infection and cancer. So they might need more regular checkups,” he said. This could include monitoring for IBS and colorectal cancer.

What’s more, Dr. Parker believes that research on the appendix puts even more emphasis on the need to protect the gut microbiome — such as taking probiotics with antibiotics. And while we are still a long way from understanding how exactly this worm-like structure affects various diseases, one thing appears quite certain: The appendix is not useless. “If Darwin had the information that we have, he would not have drawn these conclusions,” Dr. Parker said.

A version of this article first appeared on Medscape.com.

MDMA therapy for loneliness? Researchers say it could work

Some call the drug “ecstasy” or “molly.” Researchers are calling it a potential tool to help treat loneliness.

As public health experts sound the alarm on a rising loneliness epidemic in the United States and across the globe, researchers are exploring whether MDMA could help.

In the latest study, MDMA “led to a robust increase in feelings of connection” among people socializing in a controlled setting. Participants were dosed with either MDMA or a placebo and asked to chat with a stranger. Afterward, those who took MDMA said their companion was more responsive and attentive, and that they had plenty in common. The drug also “increased participants’ ratings of liking their partners, feeling connected and finding the conversation enjoyable and meaningful.”

The study was small — just 18 participants — but its results “have implications for MDMA-assisted therapy,” the authors wrote. “This feeling of connectedness could help patients feel safe and trusting, thereby facilitating deeper emotional exploration.”

MDMA “really does seem to make people want to interact more with other people,” says Harriet de Wit, PhD, a neuropharmacologist at the University of Chicago and one of the study’s authors. The results echo those of earlier research using psychedelics like LSD or psilocybin.

It’s important to note that any intervention involving MDMA or psychedelics would be a drug-assisted therapy — that is, used in conjunction with the appropriate therapy and in a therapeutic setting. MDMA-assisted therapy has already drawn popular and scientific attention, as it recently cleared clinical trials for treating posttraumatic stress disorder (PTSD) and may be nearing approval by the US Food and Drug Administration (FDA).

According to Friederike Holze, PhD, a psychopharmacologist at the University of Basel in Switzerland, “there could be a place” for MDMA and psychedelics in treating chronic loneliness, but only under professional supervision.

There would have to be clear guidelines too, says Joshua Woolley, MD, PhD, a psychiatrist at the University of California, San Francisco.

MDMA and psychedelics “induce this plastic state, a state where people can change. They feel open, they feel like things are possible,” Dr. Woolley says. Then, with therapy, “you can help them change.”

Loneliness Can Impact Our Health

On top of the mental health ramifications, the physiologic effects of loneliness could have grave consequences over time. In observational studies, loneliness has been linked to higher risks for cancer and heart disease, and shorter lifespan. One third of Americans over 45 say they are chronically lonely.

Chronic loneliness changes how we think and behave, research shows. It makes us fear contact with others and see them in a more negative light, as more threatening and less trustworthy. Lonely people prefer to stand farther apart from strangers and avoid touch.

This is where MDMA-assisted therapies could potentially help, by easing these defensive tendencies, according to Dr. Woolley.

MDMA, Psychedelics, and Social Behavior

MDMA, or 3,4-methylenedioxymethamphetamine, is a hybrid between a stimulant and a psychedelic. In Dr. de Wit’s earlier experiments, volunteers given MDMA engaged more in communal activities, chatting, and playing games. They used more positive words during social encounters than those who had received a placebo. And after MDMA, people felt less rejected if they were slighted in Cyberball — a virtual ball-tossing game commonly used to measure the effects of social exclusion.

MDMA has been shown to reduce people’s responses to others’ negative emotions, diminishing activation of the amygdala (the brain’s fear center) while they look at pictures of angry faces.

This could be helpful. “If you perceive a person’s natural expression as being a little bit angry, if that disappears, then you might be more inclined to interact,” Dr. de Wit says.

However, there may be downsides, too. If a drug makes people more trusting and willing to connect, they could be taken advantage of. This is why, Dr. Woolley says, “psychedelics have been used in cults.”

MDMA may also make the experience of touch more pleasant. In a series of experiments in 2019, researchers gently stroked volunteers’ arms with a goat-hair brush, mimicking the comforting gestures one may receive from a loved one. At the same time, the scientists monitored the volunteers’ facial muscles. People on MDMA perceived gentle touch as more pleasant than those on placebo, and their smile muscles activated more.

MDMA and psychedelics boost social behaviors in animals, too — suggesting that their effects on relationships have a biological basis. Rats on MDMA are more likely to lie next to each other, and mice become more resilient to social stress. Even octopuses become more outgoing after a dose of MDMA, choosing to spend more time with other octopuses instead of a new toy. Classic psychedelics show similar effects — LSD, for example, makes mice more social.

Psychedelics can induce a sense of a “dissolution of the self-other boundary,” Dr. Woolley says. People who take them often say it’s “helped them feel more connected to themselves and other people.” LSD, first synthesized in 1938, may help increase empathy in some people.

Psilocybin, a compound found in over 200 species of mushrooms and used for centuries in Mesoamerican rituals, also seems to boost empathy, with effects persisting for at least seven days. In Cyberball, the online ball-throwing game, people who took psilocybin felt less socially rejected, an outcome reflected in their brain activation patterns in one study — the areas responsible for social-pain processing appeared to dim after a dose.

Making It Legal and Putting It to Use

In 2020, Oregon became the first state to establish a regulatory framework for psilocybin for therapeutic use, and Colorado followed suit in 2022. Such therapeutic applications of psilocybin could help fight loneliness as well, Dr. Woolley believes, because a “common symptom of depression is that people feel socially withdrawn and lack motivation,” he says. As mentioned above, MDMA-assisted therapy is also nearing FDA approval for PTSD.

What remain unclear are the exact mechanisms at play.

“MDMA releases oxytocin, and it does that through serotonin receptors,” Dr. de Wit says. Serotonin activates 5-HT1A receptors in the hypothalamus, releasing oxytocin into the bloodstream. In Dr. de Wit’s recent experiments, the more people felt connected after taking MDMA, the more oxytocin was found circulating in their bodies. (Another drug, methamphetamine, also upped the levels of oxytocin but did not increase feelings of connectedness.)

“It’s likely that both something in the serotonin system independent of oxytocin, and oxytocin itself, contribute,” Dr. de Wit says. Dopamine, a neurotransmitter involved in motivation, appears to increase as well.

The empathy-boosting effects of LSD also seem to be at least partly driven by oxytocin, experiments published in 2021 revealed. Studies in mice, meanwhile, suggest that glutamate, a chemical messenger in the brain, may be behind some of LSD’s prosocial effects.

Scientists are fairly certain which receptors these drugs bind to and which neurotransmitters they affect. “How that gets translated into these higher-order things like empathy and feeling connected to the world, we don’t totally understand,” Dr. Woolley says.

Challenges and the Future

Although MDMA and psychedelics are largely considered safe when taken in a legal, medically controlled setting, there is reason to be cautious.

“They have relatively low impact on the body, like heart rate increase or blood pressure increase. But they might leave some disturbing psychological effects,” says Dr. Holze. Scientists routinely screen study volunteers for their risk of psychiatric disorders.

Although the risk of addiction is low with both MDMA and psychedelics, there is always some risk of misuse. MDMA “can produce feelings of well-being, and then people might use it repeatedly,” Dr. de Wit says. “That doesn’t seem to be a problem for really a lot of people, but it could easily happen.”

Still, possibilities remain for MDMA in the fight against loneliness.

“[People] feel open, they feel like things are possible, they feel like they’re unstuck,” Dr. Woolley says. “You can harness that in psychotherapy.”

A version of this article appeared on Medscape.com.

Some call the drug “ecstasy” or “molly.” Researchers are calling it a potential tool to help treat loneliness.

Public health experts are sounding the alarm on a rising loneliness epidemic in the United States and across the globe.

In the latest study, MDMA “led to a robust increase in feelings of connection” among people socializing in a controlled setting. Participants were dosed with either MDMA or a placebo and asked to chat with a stranger. Afterward, those who took MDMA said their companion was more responsive and attentive, and that they had plenty in common. The drug also “increased participants’ ratings of liking their partners, feeling connected and finding the conversation enjoyable and meaningful.”

The study was small — just 18 participants — but its results “have implications for MDMA-assisted therapy,” the authors wrote. “This feeling of connectedness could help patients feel safe and trusting, thereby facilitating deeper emotional exploration.”

MDMA “really does seem to make people want to interact more with other people,” says Harriet de Wit, PhD, a neuropharmacologist at the University of Chicago and one of the study’s authors. The results echo those of earlier research using psychedelics like LSD or psilocybin.

It’s important to note that any intervention involving MDMA or psychedelics would be a drug-assisted therapy — that is, used in conjunction with the appropriate therapy and in a therapeutic setting. MDMA-assisted therapy has already drawn popular and scientific attention, as it recently cleared clinical trials for treating posttraumatic stress disorder (PTSD) and may be nearing approval by the US Food and Drug Administration (FDA).

According to Friederike Holze, PhD, a psychopharmacologist at the University of Basel, in Switzerland, “there could be a place” for MDMA and psychedelics in treating chronic loneliness, but only under professional supervision.

There would have to be clear guidelines too, says Joshua Woolley, MD, PhD, a psychiatrist at the University of California, San Francisco.

MDMA and psychedelics “induce this plastic state, a state where people can change. They feel open, they feel like things are possible,” Dr. Woolley says. Then, with therapy, “you can help them change.”

Loneliness Can Impact Our Health

On top of the mental health ramifications, the physiologic effects of loneliness could have grave consequences over time. In observational studies, loneliness has been linked to higher risks for cancer and heart disease, and shorter lifespan. One third of Americans over 45 say they are chronically lonely.

Chronic loneliness changes how we think and behave, research shows. It makes us fear contact with others and see them in a more negative light, as more threatening and less trustworthy. Lonely people prefer to stand farther apart from strangers and avoid touch.

This is where MDMA-assisted therapies could potentially help, by easing these defensive tendencies, according to Dr. Woolley.

MDMA, Psychedelics, and Social Behavior

MDMA, or 3,4-methylenedioxymethamphetamine, is a hybrid between a stimulant and a psychedelic. In Dr. de Wit’s earlier experiments, volunteers given MDMA engaged more in communal activities, chatting, and playing games. They used more positive words during social encounters than those who had received a placebo. And after MDMA, people felt less rejected if they were slighted in Cyberball — a virtual ball-tossing game commonly used to measure the effects of social exclusion.

MDMA has been shown to reduce people’s response to others’ negative emotions, diminishing activation of the amygdala (the brain’s fear center) while they look at pictures of angry faces.

This could be helpful. “If you perceive a person’s natural expression as being a little bit angry, if that disappears, then you might be more inclined to interact,” Dr. de Wit says.

However, there may be downsides, too. If a drug makes people more trusting and willing to connect, they could be taken advantage of. This is why, Dr. Woolley says, “psychedelics have been used in cults.”
