Can GLP-1s Reduce Alzheimer’s Disease Risk?

Tina is a lovely 67-year-old woman who was recently found to be a carrier of the APOE ε4 allele (a gene variant associated with an increased risk of developing Alzheimer’s disease as well as an earlier age of disease onset), with diffuse amyloid deposition in her brain.

Her neuropsychiatric testing was consistent with mild cognitive impairment. Although Tina is not a doctor herself, her entire family consists of doctors, and she came to me on their advice to consider semaglutide (Ozempic) for early Alzheimer’s disease prevention.

This would usually be a straightforward request, but in Tina’s case there was a complicating factor: At 5 feet tall and 90 pounds, she was already considerably underweight, and the weight loss that semaglutide typically causes put her at risk of becoming severely undernourished.
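Assuming her stated height of exactly 5 feet (60 inches) and weight of 90 pounds, the standard imperial body mass index (BMI) formula gives a rough sense of how far below the conventional adult underweight cutoff of 18.5 kg/m² she sits:

$$
\text{BMI} = \frac{703 \times 90\ \text{lb}}{(60\ \text{in})^2} = \frac{63{,}270}{3{,}600} \approx 17.6\ \text{kg/m}^2
$$

BMI is only a crude screening measure, but a value of about 17.6 falls in the underweight range, which illustrates why further drug-induced weight loss was a concern.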

To understand the potential role of glucagon-like peptide-1 (GLP-1) receptor agonists such as Ozempic in prevention, a quick primer on Alzheimer’s disease is necessary.

The exact cause of Alzheimer’s disease remains elusive, but it is probably due to a combination of factors, including:

- Buildup of abnormal amyloid and tau proteins around brain cells

- Brain shrinkage, with subsequent damage to blood vessels and mitochondria, and inflammation

- Genetic predisposition

- Lifestyle factors, including obesity, high blood pressure, high cholesterol, and diabetes.

Once in the brain, GLP-1 receptor agonists can reduce inflammation and improve neuronal function. In early rodent studies, these drugs led to reduced amyloid and tau aggregation, downregulation of inflammation, and improved memory.

In 2021, multiple studies showed that liraglutide, an earlier-generation GLP-1 receptor agonist, improved cognitive function and brain volume on MRI in patients with Alzheimer’s disease.

A study recently published in Alzheimer’s & Dementia analyzed data from 1 million people with type 2 diabetes and no prior Alzheimer’s disease diagnosis. The authors compared Alzheimer’s disease occurrence in patients taking various diabetes medications, including insulin, metformin, and GLP-1 receptor agonists. The study found that participants taking semaglutide had up to a 70% reduction in Alzheimer’s risk. The results were consistent across gender, age, and weight.

Given the reassuring safety profile of GLP-1 receptor agonists and the lack of other effective treatments or prophylaxis for Alzheimer’s disease, I agreed to start her on dulaglutide (Trulicity). My rationale was twofold:

1. In studies, dulaglutide has shown the highest uptake into brain tissue of the drugs in this class, at 68%. By contrast, semaglutide (Ozempic/Wegovy) and tirzepatide (Mounjaro/Zepbound) show virtually no uptake into brain tissue. Because this class of drugs exerts its effects in brain tissue, I wanted to give her a GLP-1 receptor agonist with high brain uptake.

2. Trulicity produces minimal weight loss compared with the newer-generation GLP-1 receptor agonists. Even so, I referred Tina to my dietitian to ensure that she would follow a high-protein, high-calorie diet.

Tina has now been taking Trulicity for 6 months. Although it is certainly too early to draw firm conclusions about the efficacy of her treatment, she has not lost any weight and, according to her neurologist, is cognitively stable.

The phase 3 EVOKE and EVOKE+ trials are currently underway to evaluate semaglutide for the treatment of mild cognitive impairment and early Alzheimer’s disease in amyloid-positive patients. Results are expected in 2025, but in the meantime, I am comforted knowing that Tina is receiving a potentially beneficial and low-risk treatment.

Dr Messer, Clinical Assistant Professor, Mount Sinai School of Medicine; Associate Professor, Hofstra School of Medicine, New York, NY, has disclosed no relevant financial relationships.

A version of this article appeared on Medscape.com.

Donepezil Shows Promise in TBI Recovery

TOPLINE:

Donepezil was associated with improved verbal memory and enhanced recall and processing speed, compared with placebo, in patients with severe traumatic brain injury (TBI), with a favorable safety profile despite mild to moderate gastrointestinal side effects.

METHODOLOGY:

- A four-site, randomized, parallel-group, double-blind, placebo-controlled, 10-week clinical trial (MEMRI-TBI-D) was conducted between 2013 and 2019 to evaluate the efficacy of donepezil for verbal memory impairments following severe TBI.

- Overall, 75 adults (75% men; mean age, 37 years) who had sustained a complicated mild, moderate, or severe nonpenetrating TBI at least 6 months before study entry were randomly assigned to receive donepezil (n = 37) or placebo (n = 38).

- Participants received 5 mg donepezil daily or matching placebo for 2 weeks, then donepezil at 10 mg daily or matching placebo for 8 weeks; treatment was discontinued at 10 weeks, with an additional 4-week observation period.

- Verbal memory was assessed using the Hopkins Verbal Learning Test–Revised (HVLT-R). The primary outcome measure was verbal learning, evaluated through the HVLT-R total recall (ie, Total Trials 1-3) score.

TAKEAWAY:

- Compared with placebo, donepezil was associated with significantly greater improvements in verbal learning in both modified intent-to-treat and per-protocol analyses (P = .034 and .036, respectively).

- Treatment-responder rates were significantly higher in the donepezil group than in the placebo group (42% vs 18%; P = .03), with donepezil responders showing significant improvements in delayed recall and processing speed.

- There were no serious adverse events in either group, but treatment-emergent adverse events were significantly more common with donepezil than with placebo (46% vs 8%; P < .001).

- Diarrhea and nausea were significantly more common in the donepezil group than in the placebo group (Fisher’s exact test: diarrhea, P = .03; nausea, P = .01).

IN PRACTICE:

“This study demonstrates the efficacy of donepezil on severe, persistent verbal memory impairments after predominantly severe TBI, with significant benefit for a subset of persons with such injuries, as well as a relatively favorable safety and tolerability profile,” the investigators wrote.

SOURCE:

The study was led by David B. Arciniegas, MD, University of Colorado School of Medicine, Aurora. It was published online in The Journal of Neuropsychiatry and Clinical Neurosciences.

LIMITATIONS:

The study included a relatively small sample with predominantly severe TBI requiring hospitalization and inpatient rehabilitation. The sample characteristics limit the generalizability of the findings to persons with other severities of TBI, other types of memory impairments, or more complex neuropsychiatric presentations. The study population had an average of 14 years of education, making generalizability to individuals with lower education levels uncertain. Additionally, while measures of information processing speed and immediate auditory attention were included, specific measures of sustained or selective attention were not, making it difficult to rule out improvements in higher-level attention as potential contributors to the observed verbal memory performance improvements.

DISCLOSURES:

The study was funded by the National Institute on Disability, Independent Living, and Rehabilitation Research, with in-kind support from TIRR Memorial Hermann. Four authors disclosed various financial and professional affiliations, including advisory roles with pharmaceutical and diagnostic companies, support from institutional awards, and involvement in programs funded by external organizations. One author served as the editor of The Journal of Neuropsychiatry and Clinical Neurosciences, with an independent editor overseeing the review and publication process for this article.

This article was created using several editorial tools, including artificial intelligence, as part of the process. Human editors reviewed this content before publication. A version of this article appeared on Medscape.com.

Simufilam: Just Another Placebo

An Alzheimer’s drug trial failing is, unfortunately, nothing new. This one, however, had more baggage behind it than most.

Like all of these things, it was worth a try. It’s an interesting molecule with a reasonable mechanism of action.

But questions have surrounded the trials for a few years, with allegations of misconduct against the drug’s co-discoverer, Hoau-Yan Wang. He has been indicted for defrauding the National Institutes of Health of $16 million in grants related to the drug. There have also been concerns over doctored images and other not-so-minor issues in the effort to move simufilam forward. Cassava Sciences, the drug’s developer, agreed in 2024 to pay $40 million to settle Securities and Exchange Commission charges that it had misled investors.

Yet, like an innocent child with criminal parents, many of us hoped that the drug would work, regardless of the ethical shenanigans behind it. On the front lines we deal with a tragic disease that robs people of what makes them human and robs the families who have to live with it.

As the wheels started to come off the bus, I told a friend, “It would be really sad if this drug is THE ONE and it never gets to finish trials because of everything else.”

Now we know it isn’t. Regardless of the controversy, the final data show that simufilam is just another placebo, joining the ranks of many others in the Alzheimer’s development graveyard.

Yes, there is a vague sense of jubilation behind it. I believe in fair play, and it’s good to know that those who misled investors and falsified data were wrong and will never have their day in the sun.

At the same time, however, I’m disappointed. I’m happy that the drug at least got a chance to prove itself, but when it’s all said and done, it doesn’t do anything.

I feel bad for the innocent people in the company, who had nothing to do with the scheming and were just hoping the drug would go somewhere. The majority, if not all, of them will likely lose their jobs. Like me, they have families, bills, and mortgages.

But I’m even more disappointed for the patients and families who only wanted an effective treatment for Alzheimer’s disease, and were hoping that, regardless of its dirty laundry, simufilam would work.

They’re the ones that I, and many other neurologists, have to face every day when they ask, “Is there anything new out?” and we sadly shake our heads.

Dr. Block has a solo neurology practice in Scottsdale, Arizona.

Urinary Metals Linked to Increased Dementia Risk

TOPLINE:

METHODOLOGY:

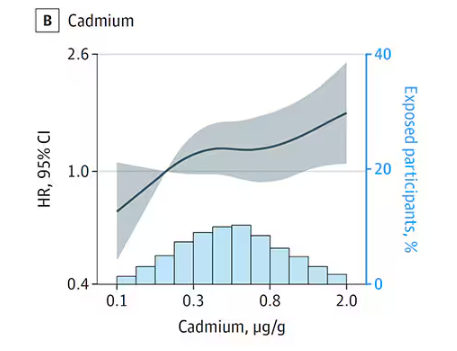

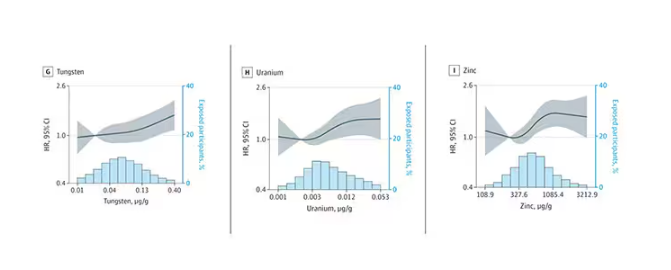

- This multicenter prospective cohort study enrolled 6303 participants at six US study centers between 2000 and 2002 and followed them through 2018.

- Participants were aged 45-84 years (median age at baseline, 60 years; 52% women) and were free of diagnosed cardiovascular disease.

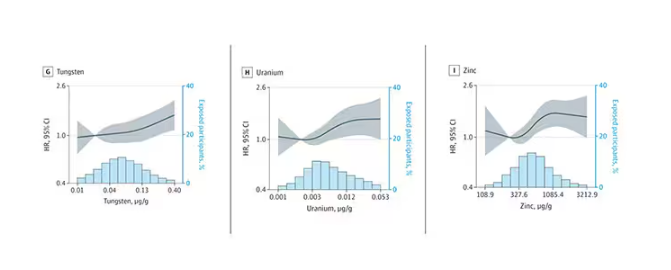

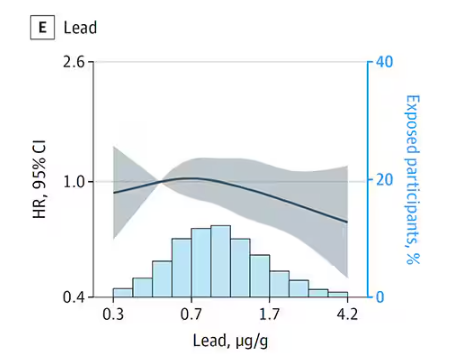

- Researchers measured urinary levels of arsenic, cadmium, cobalt, copper, lead, manganese, tungsten, uranium, and zinc.

- Neuropsychological assessments included the Digit Symbol Coding, Cognitive Abilities Screening Instrument, and Digit Span tests.

- The median follow-up duration was 11.7 years for participants with dementia and 16.8 years for those without; 559 cases of dementia were identified during the study.

TAKEAWAY:

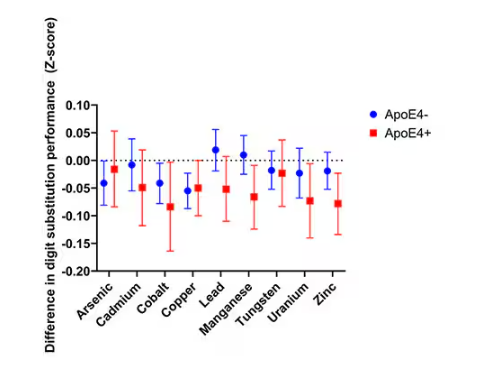

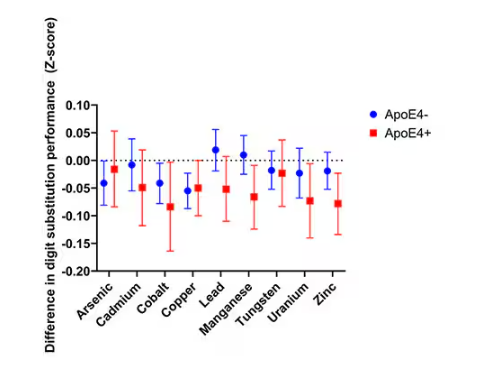

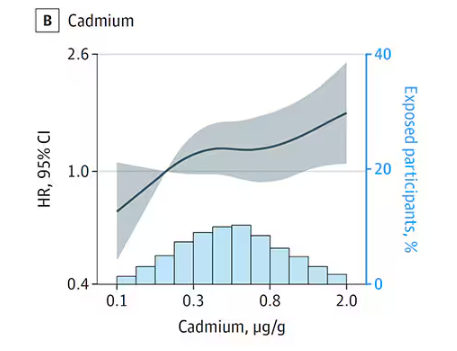

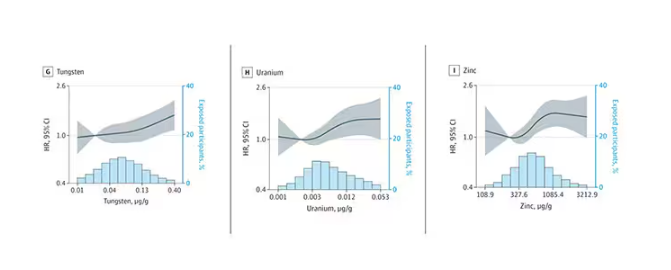

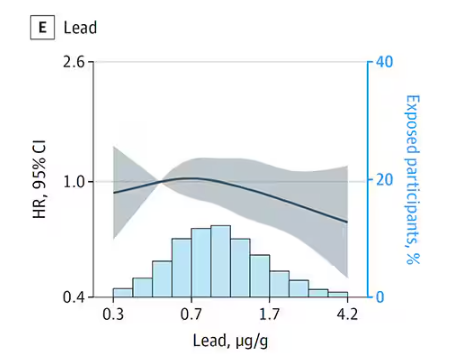

- Lower Digit Symbol Coding scores were associated with higher urinary concentrations of arsenic (mean difference [MD] in score per interquartile range [IQR] increase, –0.03), cobalt (MD per IQR increase, –0.05), copper (MD per IQR increase, –0.05), uranium (MD per IQR increase, –0.04), and zinc (MD per IQR increase, –0.03).

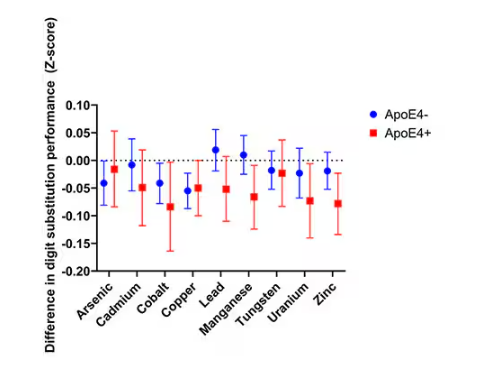

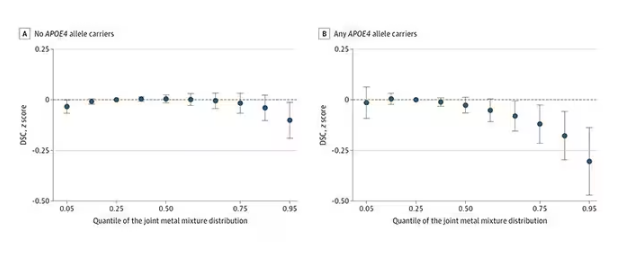

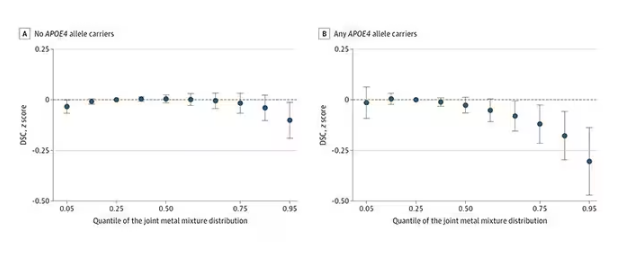

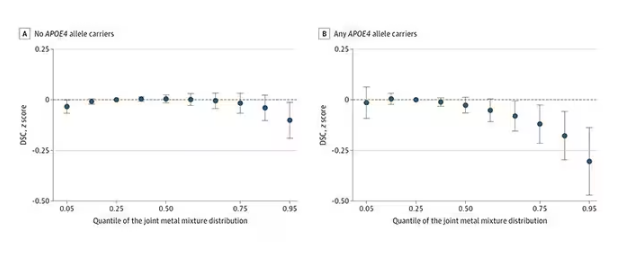

- Effects for cobalt, uranium, and zinc were stronger in apolipoprotein E epsilon 4 allele (APOE4) carriers than in noncarriers.

- Higher urinary copper levels were associated with lower Digit Span scores (MD, –0.043), and elevated copper (MD, –0.028) and zinc (MD, –0.024) levels were associated with lower global cognitive scores.

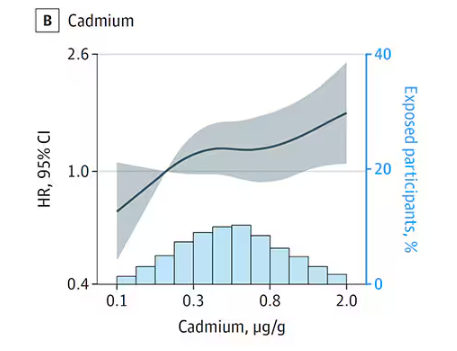

- Individuals with urinary levels of the nine-metal mixture at the 95th percentile had a 71% higher risk for dementia compared to those with levels at the 25th percentile, with the risk more pronounced in APOE4 carriers than in noncarriers (MD, –0.30 vs –0.10, respectively).

IN PRACTICE:

“We found an inverse association of essential and nonessential metals in urine, both individually and as a mixture, with the speed of mental operations, as well as a positive association of urinary metal levels with dementia risk. As metal exposure and levels in the body are modifiable, these findings could inform early screening and precision interventions for dementia prevention based on individuals’ metal exposure and genetic profiles,” the investigators wrote.

SOURCE:

The study was led by Arce Domingo-Relloso, PhD, Columbia University Mailman School of Public Health, New York City. It was published online in JAMA Network Open.

LIMITATIONS:

Data may have been missed for patients with dementia who were never hospitalized, died, or were lost to follow-up. The dementia diagnosis included nonspecific International Classification of Diseases codes, potentially leading to false-positive reports. In addition, the sample size was not sufficient to evaluate the associations between metal exposure and cognitive test scores for carriers of two APOE4 alleles.

DISCLOSURES:

The study was supported by the National Heart, Lung, and Blood Institute. Several authors reported receiving grants from the National Institutes of Health and consulting fees, editorial stipends, teaching fees, or unrelated grant funding from various sources, which are fully listed in the original article.

This article was created using several editorial tools, including AI, as part of the process. Human editors reviewed this content before publication. A version of this article appeared on Medscape.com.

Common Gut Infection Tied to Alzheimer’s Disease

Researchers are gaining new insight into the relationship between human cytomegalovirus (HCMV), a common herpesvirus found in the gut, and the immune response associated with CD83-positive microglia in some individuals with Alzheimer’s disease (AD).

Using tissue samples from deceased donors with AD, the study showed CD83-positive (CD83+) microglia in the superior frontal gyrus (SFG) are significantly associated with elevated immunoglobulin gamma 4 (IgG4) and HCMV in the transverse colon (TC), increased anti-HCMV IgG4 in the cerebrospinal fluid (CSF), and both HCMV and IgG4 in the SFG and vagus nerve.

“Our results indicate a complex, cross-tissue interaction between HCMV and the host adaptive immune response associated with CD83+ microglia in persons with AD,” noted the investigators, including Benjamin P. Readhead, MBBS, research associate professor, ASU-Banner Neurodegenerative Disease Research Center, Arizona State University, Tempe.

The results suggest antiviral therapy in patients with biomarker evidence of HCMV, IgG4, or CD83+ microglia might ward off dementia.

“We’re preparing to conduct a clinical trial to evaluate whether careful use of existing antivirals might be clinically helpful in preventing or slowing progression of CD83+ associated Alzheimer’s disease,” Readhead said in an interview.

The study was published on December 19, 2024, in Alzheimer’s & Dementia.

Vagus Nerve a Potential Pathway?

CMV is a common virus. In the United States, nearly one in three children are already infected with CMV by age 5 years, and over half of adults have been infected by age 40 years, the Centers for Disease Control and Prevention reported.

It is typically passed through bodily fluids and spread only when the virus is active. It’s not considered a sexually transmitted disease.

Compared with other IgG subclasses, IgG4 is believed to drive a less inflammatory, and therefore less damaging, immune response. But this response may be less effective at clearing infections and may allow HCMV to invade the brain.

The researchers previously found a CD83+ microglial subtype in the SFG of 47% of brain donors with AD vs 25% of unaffected control individuals. They reported this subtype is associated with increased IgG4 in the TC.

The current analysis extends investigations of the potential etiology and clinicopathologic relevance of CD83+ microglia in the context of AD.

Researchers conducted experiments using donated tissue samples from deceased patients with AD and control individuals. The samples came from two sources: the Banner cohort, for which CD83+ microglia classifications were available along with tissue samples from the SFG, TC, and vagus nerve; and the Religious Orders Study and Rush Memory and Aging Project (ROSMAP), in which participants without known dementia are evaluated annually.

From the Banner cohort, researchers completed immunohistochemistry (IHC) studies on 34 SFG samples (21 AD and 13 control individuals) and included 25 TC samples (13 AD and 12 control individuals) and 8 vagal nerve samples (6 AD and 2 control individuals) in the study. From the ROSMAP cohort, they completed IHC studies on 27 prefrontal cortex samples from individuals with AD.

They carefully selected these samples to ensure matching for critical factors such as postmortem interval, age, and sex, as well as other relevant covariates, said the authors.

The study confirmed that CD83+ microglia are associated with IgG4 and HCMV in the TC, showing a significant association between CD83+ microglia and IgG4 immunoreactivity there.

Investigators confirmed HCMV positivity in all nine CD83+ TC samples evaluated and in one CD83– TC sample, indicating a strong positive association between HCMV within the TC and CD83+ microglia within the SFG.

HCMV IgG seropositivity is common, varies by age and comorbidity, and is present in 79% of 85-year-olds, the investigators noted. “Despite this, we note that HCMV presence in the TC was not ubiquitous and was significantly associated with CD83+ microglia and HCMV in the SFG,” they wrote.

This observation, they added, “may help reconcile how a common pathogen might contribute to a disease that most individuals do not develop.”

The experiments also uncovered increased anti-HCMV IgG4 in the CSF and evidence of HCMV and IgG4 in the vagus nerve.

“Overall, the histochemical staining patterns observed in TC, SFG, and vagus nerve of CD83+ subjects are consistent with active HCMV infection,” the investigators wrote. “Taken together, these results indicate a multiorgan presence of IgG4 and HCMV in subjects with CD83+ microglia within the SFG,” they added.

Accelerated AD Pathology

The team showed HCMV infection accelerates production of two pathologic features of AD — amyloid beta (Abeta) and tau — and causes neuronal death. “We observed high, positive correlations between the abundance of HCMV, and both Abeta42 and pTau-212,” they wrote.

As HCMV histochemistry is consistent with an active HCMV infection, the findings “may indicate an opportunity for the administration of antiviral therapy in subjects with AD and biomarker evidence of HCMV, IgG4, or CD83+ microglia,” they added.

In addition to planning a clinical trial of existing antivirals, the research team is developing a blood test that can help identify patients with an active HCMV infection who might benefit from such an intervention, said Readhead.

But he emphasized that the research is still in its infancy. “Our study is best understood as a series of interesting scientific findings that warrant further exploration, replication, and validation in additional study populations.”

Although it’s too early for the study to impact practice, “we’re motivated to understand whether these findings have implications for clinical care,” he added.

Tipping the Balance

A number of experts have weighed in on the research via the Science Media Centre, an independent forum that shares expert views on science news.

Andrew Doig, PhD, professor, Division of Neuroscience, University of Manchester in England, said the new work supports the hypothesis that HCMV might be a trigger that tips the balance from a healthy brain to one with dementia. “If so, antiviral drugs against HCMV might be beneficial in reducing the risk of AD.”

Doig noted newly approved drugs for AD are expensive, provide only a small benefit, and have significant risks, such as causing brain hemorrhages. “Antiviral drugs are an attractive alternative that are well worth exploring.”

Richard Oakley, PhD, associate director of research and innovation, Alzheimer’s Society, cautioned that the study only established a connection and didn’t directly show that the virus leads to AD. “Also, the virus is not found in the brain of everyone with Alzheimer’s disease, the most common form of dementia.”

The significance of the new findings is “far from clear,” commented William McEwan, PhD, group leader at the UK Dementia Research Institute at Cambridge, England. “The study does not address how common this infection is in people without Alzheimer’s and therefore cannot by itself suggest that HCMV infection, or the associated immune response, is a driver of disease.”

The experts agreed follow-up research is needed to confirm these new findings and understand what they mean.

The study received support from the National Institute on Aging, National Institutes of Health, Global Lyme Alliance, National Institute of Neurological Disorders and Stroke, Arizona Alzheimer’s Consortium, The Benter Foundation, and NOMIS Stiftung. Readhead is a coinventor on a patent application for an IgG4-based peripheral biomarker for the detection of CD83+ microglia. Doig is a founder, director, and consultant for PharmaKure, which works on AD drugs and diagnostics, although not on viruses. He has cowritten a review on Viral Involvement in Alzheimer’s Disease. McEwan reported receiving research funding from Takeda Pharmaceuticals and is a founder and consultant to Trimtech Therapeutics.

A version of this article first appeared on Medscape.com.

Researchers are gaining new insight into the relationship between the human cytomegalovirus (HCMV), a common herpes virus found in the gut, and the immune response associated with CD83 antibody in some individuals with Alzheimer’s disease (AD).

Using tissue samples from deceased donors with AD, the study showed CD83-positive (CD83+) microglia in the superior frontal gyrus (SFG) are significantly associated with elevated immunoglobulin gamma 4 (IgG4) and HCMV in the transverse colon (TC), increased anti-HCMV IgG4 in the cerebrospinal fluid (CSF), and both HCMV and IgG4 in the SFG and vagus nerve.

“Our results indicate a complex, cross-tissue interaction between HCMV and the host adaptive immune response associated with CD83+ microglia in persons with AD,” noted the investigators, including Benjamin P. Readhead, MBBS, research associate professor, ASU-Banner Neurodegenerative Disease Research Center, Arizona State University, Tempe.

The results suggest antiviral therapy in patients with biomarker evidence of HCMV, IgG4, or CD83+ microglia might ward off dementia.

“We’re preparing to conduct a clinical trial to evaluate whether careful use of existing antivirals might be clinically helpful in preventing or slowing progression of CD83+ associated Alzheimer’s disease,” Readhead said in an interview.

The study was published on December 19, 2024, in Alzheimer’s & Dementia.

Vagus Nerve a Potential Pathway?

CMV is a common virus. In the United States, nearly one in three children are already infected with CMV by age 5 years. Over half the adults have been infected with CMV by age 40 years, the Centers for Disease Control and Prevention reported.

It is typically passed through bodily fluids and spread only when the virus is active. It’s not considered a sexually transmitted disease.

Compared with other IgG subclasses, IgG4 is believed to be a less inflammatory, and therefore less damaging, immune response. But this response may be less effective at clearing infections and allow invasion of HCMV into the brain.

The researchers previously found a CD83+ microglial subtype in the SFG of 47% of brain donors with AD vs 25% of unaffected control individuals. They reported this subtype is associated with increased IgG4 in the TC.

The current analysis extends investigations of the potential etiology and clinicopathologic relevance of CD83+ microglia in the context of AD.

Researchers conducted experiments using donated tissue samples from deceased patients with AD and control individuals. Sources for these samples included the Banner cohort, for whom classifications for the presence of CD83+ microglia were available, as were tissue samples from the SFG, TC, and vagus nerve, and the Religious Orders Study and Rush Memory and Aging Project (ROSMAP), in which participants without known dementia are evaluated annually.

From the Banner cohort, researchers completed immunohistochemistry (IHC) studies on 34 SFG samples (21 AD and 13 control individuals) and included 25 TC samples (13 AD and 12 control individuals) and 8 vagal nerve samples (6 AD and 2 control individuals) in the study. From the ROSMAP cohort, they completed IHC studies on 27 prefrontal cortex samples from individuals with AD.

They carefully selected these samples to ensure matching for critical factors such as postmortem interval, age, and sex, as well as other relevant covariates, said the authors.

The study verified that CD83+ microglia are associated with IgG4 and HCMV in the TC and showed a significant association between CD83+ microglia and IgG4 immunoreactivity in the TC.

Investigators confirmed HCMV positivity in all nine CD83+ TC samples evaluated and in one CD83– TC sample, indicating a strong positive association between HCMV within the TC and CD83+ microglia within the SFG.

HCMV IgG seroprevalence is common, varies by age and comorbidity, and is present in 79% of 85-year-olds, the investigators noted. “Despite this, we note that HCMV presence in the TC was not ubiquitous and was significantly associated with CD83+ microglia and HCMV in the SFG,” they wrote.

This observation, they added, “may help reconcile how a common pathogen might contribute to a disease that most individuals do not develop.”

The experiments also uncovered increased anti-HCMV IgG4 in the CSF and evidence of HCMV and IgG4 in the vagus nerve.

“Overall, the histochemical staining patterns observed in TC, SFG, and vagus nerve of CD83+ subjects are consistent with active HCMV infection,” the investigators wrote. “Taken together, these results indicate a multiorgan presence of IgG4 and HCMV in subjects with CD83+ microglia within the SFG,” they added.

Accelerated AD Pathology

The team showed HCMV infection accelerates production of two pathologic features of AD — amyloid beta (Abeta) and tau — and causes neuronal death. “We observed high, positive correlations between the abundance of HCMV, and both Abeta42 and pTau-212,” they wrote.

As HCMV histochemistry is consistent with an active HCMV infection, the findings “may indicate an opportunity for the administration of antiviral therapy in subjects with AD and biomarker evidence of HCMV, IgG4, or CD83+ microglia,” they added.

In addition to planning a clinical trial of existing antivirals, the research team is developing a blood test that can help identify patients with an active HCMV infection who might benefit from such an intervention, said Readhead.

But he emphasized that the research is still in its infancy. “Our study is best understood as a series of interesting scientific findings that warrant further exploration, replication, and validation in additional study populations.”

Although it’s too early for the study to impact practice, “we’re motivated to understand whether these findings have implications for clinical care,” he added.

Tipping the Balance

A number of experts have weighed in on the research via the Science Media Center, an independent forum featuring the voices and views on science news from experts in the field.

Andrew Doig, PhD, professor, Division of Neuroscience, University of Manchester in England, said the new work supports the hypothesis that HCMV might be a trigger that tips the balance from a healthy brain to one with dementia. “If so, antiviral drugs against HCMV might be beneficial in reducing the risk of AD.”

Doig noted newly approved drugs for AD are expensive, provide only a small benefit, and have significant risks, such as causing brain hemorrhages. “Antiviral drugs are an attractive alternative that are well worth exploring.”

Richard Oakley, PhD, associate director of research and innovation, Alzheimer’s Society, cautioned the study only established a connection and didn’t directly show the virus leads to AD. “Also, the virus is not found in the brain of everyone with Alzheimer’s disease, the most common form of dementia.”

The significance of the new findings is “far from clear,” commented William McEwan, PhD, group leader at the UK Dementia Research Institute at Cambridge, England. “The study does not address how common this infection is in people without Alzheimer’s and therefore cannot by itself suggest that HCMV infection, or the associated immune response, is a driver of disease.”

The experts agreed follow-up research is needed to confirm these new findings and understand what they mean.

The study received support from the National Institute on Aging, National Institutes of Health, Global Lyme Alliance, National Institute of Neurological Disorders and Stroke, Arizona Alzheimer’s Consortium, The Benter Foundation, and NOMIS Stiftung. Readhead is a coinventor on a patent application for an IgG4-based peripheral biomarker for the detection of CD83+ microglia. Doig is a founder, director, and consultant for PharmaKure, which works on AD drugs and diagnostics, although not on viruses. He has cowritten a review on Viral Involvement in Alzheimer’s Disease. McEwan reported receiving research funding from Takeda Pharmaceuticals and is a founder and consultant to Trimtech Therapeutics.

A version of this article first appeared on Medscape.com.

Researchers are gaining new insight into the relationship between the human cytomegalovirus (HCMV), a common herpes virus found in the gut, and the immune response associated with CD83 antibody in some individuals with Alzheimer’s disease (AD).

Using tissue samples from deceased donors with AD, the study showed CD83-positive (CD83+) microglia in the superior frontal gyrus (SFG) are significantly associated with elevated immunoglobulin gamma 4 (IgG4) and HCMV in the transverse colon (TC), increased anti-HCMV IgG4 in the cerebrospinal fluid (CSF), and both HCMV and IgG4 in the SFG and vagus nerve.

“Our results indicate a complex, cross-tissue interaction between HCMV and the host adaptive immune response associated with CD83+ microglia in persons with AD,” noted the investigators, including Benjamin P. Readhead, MBBS, research associate professor, ASU-Banner Neurodegenerative Disease Research Center, Arizona State University, Tempe.

The results suggest antiviral therapy in patients with biomarker evidence of HCMV, IgG4, or CD83+ microglia might ward off dementia.

“We’re preparing to conduct a clinical trial to evaluate whether careful use of existing antivirals might be clinically helpful in preventing or slowing progression of CD83+ associated Alzheimer’s disease,” Readhead said in an interview.

The study was published on December 19, 2024, in Alzheimer’s & Dementia.

Vagus Nerve a Potential Pathway?

CMV is a common virus. In the United States, nearly one in three children are already infected with CMV by age 5 years. Over half the adults have been infected with CMV by age 40 years, the Centers for Disease Control and Prevention reported.

It is typically passed through bodily fluids and spread only when the virus is active. It’s not considered a sexually transmitted disease.

Compared with other IgG subclasses, IgG4 is believed to be a less inflammatory, and therefore less damaging, immune response. But this response may be less effective at clearing infections and allow invasion of HCMV into the brain.

The researchers previously found a CD83+ microglial subtype in the SFG of 47% of brain donors with AD vs 25% of unaffected control individuals. They reported this subtype is associated with increased IgG4 in the TC.

The current analysis extends investigations of the potential etiology and clinicopathologic relevance of CD83+ microglia in the context of AD.

Researchers conducted experiments using donated tissue samples from deceased patients with AD and control individuals. Sources for these samples included the Banner cohort, for whom classifications for the presence of CD83+ microglia were available, as were tissue samples from the SFG, TC, and vagus nerve, and the Religious Orders Study and Rush Memory and Aging Project (ROSMAP), in which participants without known dementia are evaluated annually.

From the Banner cohort, researchers completed immunohistochemistry (IHC) studies on 34 SFG samples (21 AD and 13 control individuals) and included 25 TC samples (13 AD and 12 control individuals) and 8 vagal nerve samples (6 AD and 2 control individuals) in the study. From the ROSMAP cohort, they completed IHC studies on 27 prefrontal cortex samples from individuals with AD.

They carefully selected these samples to ensure matching for critical factors such as postmortem interval, age, and sex, as well as other relevant covariates, said the authors.

The study verified that CD83+ microglia are associated with IgG4 and HCMV in the TC and showed a significant association between CD83+ microglia and IgG4 immunoreactivity in the TC.

Investigators confirmed HCMV positivity in all nine CD83+ TC samples evaluated and in one CD83– TC sample, indicating a strong positive association between HCMV within the TC and CD83+ microglia within the SFG.

HCMV IgG seroprevalence is common, varies by age and comorbidity, and is present in 79% of 85-year-olds, the investigators noted. “Despite this, we note that HCMV presence in the TC was not ubiquitous and was significantly associated with CD83+ microglia and HCMV in the SFG,” they wrote.

This observation, they added, “may help reconcile how a common pathogen might contribute to a disease that most individuals do not develop.”

The experiments also uncovered increased anti-HCMV IgG4 in the CSF and evidence of HCMV and IgG4 in the vagus nerve.

“Overall, the histochemical staining patterns observed in TC, SFG, and vagus nerve of CD83+ subjects are consistent with active HCMV infection,” the investigators wrote. “Taken together, these results indicate a multiorgan presence of IgG4 and HCMV in subjects with CD83+ microglia within the SFG,” they added.

Accelerated AD Pathology

The team showed HCMV infection accelerates production of two pathologic features of AD — amyloid beta (Abeta) and tau — and causes neuronal death. “We observed high, positive correlations between the abundance of HCMV, and both Abeta42 and pTau-212,” they wrote.

As HCMV histochemistry is consistent with an active HCMV infection, the findings “may indicate an opportunity for the administration of antiviral therapy in subjects with AD and biomarker evidence of HCMV, IgG4, or CD83+ microglia,” they added.

In addition to planning a clinical trial of existing antivirals, the research team is developing a blood test that can help identify patients with an active HCMV infection who might benefit from such an intervention, said Readhead.

But he emphasized that the research is still in its infancy. “Our study is best understood as a series of interesting scientific findings that warrant further exploration, replication, and validation in additional study populations.”

Although it’s too early for the study to impact practice, “we’re motivated to understand whether these findings have implications for clinical care,” he added.

Tipping the Balance

A number of experts have weighed in on the research via the Science Media Center, an independent forum featuring the voices and views on science news from experts in the field.

Andrew Doig, PhD, professor, Division of Neuroscience, University of Manchester in England, said the new work supports the hypothesis that HCMV might be a trigger that tips the balance from a healthy brain to one with dementia. “If so, antiviral drugs against HCMV might be beneficial in reducing the risk of AD.”

Doig noted that newly approved drugs for AD are expensive, provide only a small benefit, and carry significant risks, such as brain hemorrhages. “Antiviral drugs are an attractive alternative that are well worth exploring.”

Richard Oakley, PhD, associate director of research and innovation, Alzheimer’s Society, cautioned that the study only established a connection and did not directly show that the virus leads to AD. “Also, the virus is not found in the brain of everyone with Alzheimer’s disease, the most common form of dementia.”

The significance of the new findings is “far from clear,” commented William McEwan, PhD, group leader at the UK Dementia Research Institute at Cambridge, England. “The study does not address how common this infection is in people without Alzheimer’s and therefore cannot by itself suggest that HCMV infection, or the associated immune response, is a driver of disease.”

The experts agreed follow-up research is needed to confirm these new findings and understand what they mean.

The study received support from the National Institute on Aging, National Institutes of Health, Global Lyme Alliance, National Institute of Neurological Disorders and Stroke, Arizona Alzheimer’s Consortium, The Benter Foundation, and NOMIS Stiftung. Readhead is a coinventor on a patent application for an IgG4-based peripheral biomarker for the detection of CD83+ microglia. Doig is a founder, director, and consultant for PharmaKure, which works on AD drugs and diagnostics, although not on viruses. He has cowritten a review on Viral Involvement in Alzheimer’s Disease. McEwan reported receiving research funding from Takeda Pharmaceuticals and is a founder and consultant to Trimtech Therapeutics.

A version of this article first appeared on Medscape.com.

FROM ALZHEIMER’S & DEMENTIA

Common Herbicide a Player in Neurodegeneration?

Researchers found that glyphosate exposure, even at regulated levels, was associated with increased neuroinflammation and accelerated Alzheimer’s disease–like pathology in mice, an effect that persisted through a 6-month recovery period after exposure was stopped.

“More research is needed to understand the consequences of glyphosate exposure to the brain in humans and to understand the appropriate dose of exposure to limit detrimental outcomes,” said co–senior author Ramon Velazquez, PhD, with Arizona State University, Tempe.

The study was published online in the Journal of Neuroinflammation.

Persistent Accumulation Within the Brain

Glyphosate is the most heavily applied herbicide in the United States, with roughly 300 million pounds used annually in agricultural communities across the country. It is also used for weed control in parks, residential areas, and personal gardens.

The Environmental Protection Agency (EPA) has determined that glyphosate poses no risks to human health when used as directed. But the World Health Organization’s International Agency for Research on Cancer disagrees, classifying the herbicide as “probably carcinogenic to humans.”

In addition to the possible cancer risk, multiple reports have also suggested potential harmful effects of glyphosate exposure on the brain.

In earlier work, Velazquez and colleagues showed that glyphosate crosses the blood-brain barrier and infiltrates the brains of mice, contributing to neuroinflammation and other detrimental effects on brain function.

In their latest study, they examined the long-term effects of glyphosate exposure on neuroinflammation and Alzheimer’s disease–like pathology using a mouse model.

They dosed 4.5-month-old mice genetically predisposed to Alzheimer’s disease and non-transgenic control mice with 0, 50, or 500 mg/kg of glyphosate daily for 13 weeks, followed by a 6-month recovery period.

The high dose is similar to levels used in earlier research, and the low dose is close to the limit used to establish the current EPA acceptable dose in humans.

Glyphosate’s metabolite, aminomethylphosphonic acid, was detectable and persisted in mouse brain tissue even 6 months after exposure ceased, the researchers reported.

Additionally, hippocampal and cortical brain tissue from glyphosate-exposed mice showed significant increases in soluble and insoluble fractions of amyloid-beta (Abeta), in Abeta42 plaque load and plaque size, and in tau phosphorylated at threonine 181 and serine 396, “highlighting an exacerbation of hallmark Alzheimer’s disease–like proteinopathies,” they noted.

Glyphosate exposure was also associated with significant elevations in both pro- and anti-inflammatory cytokines and chemokines in brain tissue of transgenic and normal mice and in peripheral blood plasma of transgenic mice.

Glyphosate-exposed transgenic mice also showed heightened anxiety-like behaviors and reduced survival.

“These findings highlight that many chemicals we regularly encounter, previously considered safe, may pose potential health risks,” co–senior author Patrick Pirrotte, PhD, with the Translational Genomics Research Institute, Phoenix, Arizona, said in a statement.

“However, further research is needed to fully assess the public health impact and identify safer alternatives,” Pirrotte added.

Funding for the study was provided by the National Institute on Aging, the National Cancer Institute, and the Arizona State University (ASU) Biodesign Institute. The authors have declared no relevant conflicts of interest.

A version of this article first appeared on Medscape.com.

FROM THE JOURNAL OF NEUROINFLAMMATION

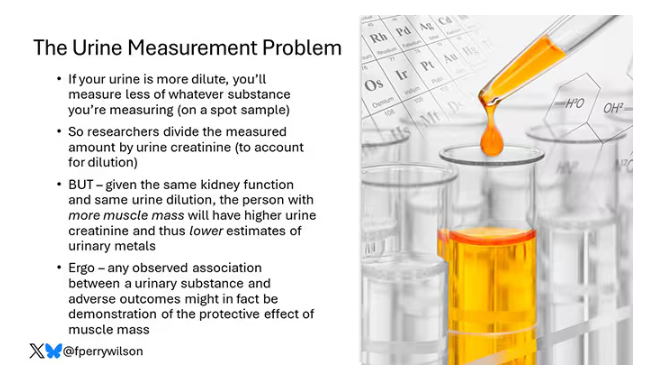

How Metals Affect the Brain

This transcript has been edited for clarity.

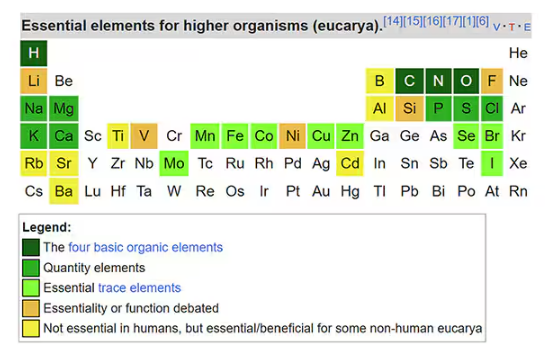

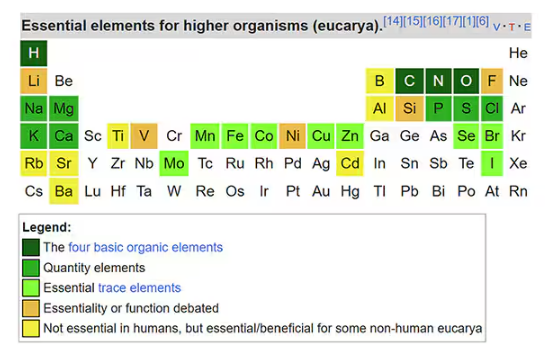

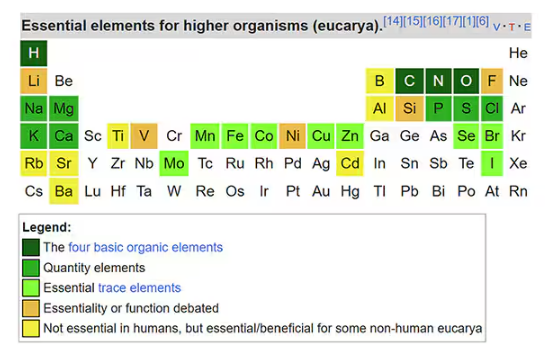

It has always amazed me that our bodies require these tiny amounts of incredibly rare substances to function. Sure, we need oxygen. We need water. But we also need molybdenum, which makes up just 1.2 parts per million of the Earth’s crust.

Without adequate molybdenum intake, we develop seizures, developmental delays, death. Fortunately, we need so little molybdenum that true molybdenum deficiency is incredibly rare — seen only in people on total parenteral nutrition without supplementation or those with certain rare genetic conditions. But still, molybdenum is necessary for life.

Many metals are. Figure 1 colors the essential minerals on the periodic table. You can see that to stay alive, we humans need not only things like sodium but also selenium, bromine, zinc, copper, and cobalt.

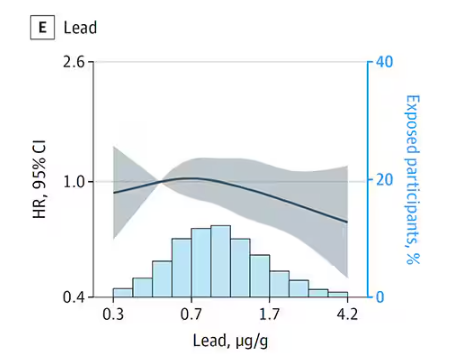

Some metals are very clearly not essential; we can all do without lead and mercury, and probably should.

But just because something is essential for life does not mean that more is better. The dose is the poison, as they say. And this week, we explore whether metals — even essential metals — might be adversely affecting our brains.