Clinical Psychiatry News is the online destination and multimedia properties of Clinical Psychiatry News, the independent news publication for psychiatrists. Since 1971, Clinical Psychiatry News has been the leading source of news and commentary about clinical developments in psychiatry as well as health care policy and regulations that affect the physician's practice.

Measurement-Based Treatment to Target Approaches

Clinical Scenario

Lilly is a 15-year-old girl in her sophomore year of high school. Over the course of a month, after a romantic breakup and then a friend-group breakup, her parents have become concerned about her daily tearfulness and her retreat from activities she once enjoyed, which she now avoids to escape social interaction. She has been missing more and more school, saying that she can’t bear to go, and staying in bed during the day, even on weekends. You start her on an SSRI and recommend psychotherapy in the form of CBT offered through your office. She returns for an appointment with you in 2 weeks and again 2 weeks after that. She and her parents tell you, “I thought she would be better by now.” You feel stuck with how to proceed in the visit. You have correctly identified the problem as depression and started the recommended evidence-based treatments, but the parents and Lilly are looking to you for something more or different. There are few other local resources. When and how do you all determine what “better” looks and feels like? Where do you go from here?

Metrics Can Guide Next Steps

This clinical scenario is not uncommon. As a psychiatrist consultant in primary care, I often encounter the following comment and question: “Someone isn’t feeling better. I have them taking an SSRI and doing psychotherapy. What is the next thing to do?” In discussions with supervisees and in training residents, I often say that you will know that your consultations have made a real impact on providers’ practices when these questions shift from “what’s the next medication or treatment” to a more robust baseline and follow-up inventory of symptoms via common and available metrics (PHQ9A, PSC-17 or 30, SCARED) shared with you at the start, the middle, and at other times of treatment. Such metrics can more meaningfully guide your collaborative clinical discussions and decisions.

Tracking baseline metrics and following them through treatment interventions is a transformative approach to clinical care. In primary care, however, mental health concerns often do not receive the same robust screening and symptom tracking that can more thoughtfully guide decision-making, even though such tracking is routine in other areas of patient care that rely on more objective data.

Measurement-based treatment to target approaches are well studied but not always implemented. They involve obtaining a baseline metric (PHQ9A, Pediatric Symptom Checklist 17 or 30, GAD7, or SCARED) and tracking that metric for response over time, using specific scores as decision points.

An Alternative Clinical Scenario

Consider the following alternative scenario for the above patient using a measurement-based treatment to target approach:

Lilly is a 15-year-old girl in her sophomore year of high school with symptoms concerning for depression. A PHQ9A is administered in your appointment, and she scores 20 out of 27, exceeding the threshold score of 11 for depression. You start her on an SSRI and recommend psychotherapy in the form of CBT offered through your office. She returns for an appointment with you in 2 weeks and again 2 weeks after that. You obtain a PHQ9A at each appointment and track the change with her and her parents over time.

You share with her and the family that fluctuations in measurements are common, and you know that a score change on the PHQ9A greater than 7 is considered a clinically significant, reliable change. So, a PHQ9A score reduction from 20 to 13 would be meaningful progress. While the goal remains a score within the normal, non-clinical range, progress can be tracked in a way that allows more robust monitoring of treatment response. If the scores do not improve, you can see that and act accordingly. Using metrics this way shifts the conversation from “how are you feeling now and today” to tracking symptoms more broadly and following individual symptoms over time, some of which may improve and some of which may be trickier to target.
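As a rough illustration only, the score tracking described in this scenario could be sketched in a few lines of Python. The cutoff of 11 and the baseline and week-4 scores (20 and 13) come from the scenario; the week-2 score of 17, the names `Visit` and `summarize`, and the status labels are hypothetical. The text describes a reliable change as greater than 7 while its own example is a 7-point drop, so this sketch assumes that a drop of 7 points or more counts. It is not a validated scoring tool.

```python
from dataclasses import dataclass

# Assumed values taken from the scenario in the text, not from a validated
# scoring manual; confirm both before any clinical use.
CLINICAL_CUTOFF = 11   # PHQ9A threshold score cited in the scenario
RELIABLE_CHANGE = 7    # point change treated here as a reliable change


@dataclass
class Visit:
    week: int    # weeks since the baseline appointment
    score: int   # PHQ9A total recorded at that visit


def summarize(visits, cutoff=CLINICAL_CUTOFF, reliable_change=RELIABLE_CHANGE):
    """Compare the latest score with the baseline score and label the response."""
    baseline, latest = visits[0], visits[-1]
    drop = baseline.score - latest.score
    if latest.score < cutoff:
        status = "below clinical threshold"
    elif drop >= reliable_change:
        status = "reliable improvement, still above threshold"
    elif drop <= -reliable_change:
        status = "reliable worsening"
    else:
        status = "no reliable change"
    return {
        "baseline": baseline.score,
        "latest": latest.score,
        "drop": drop,
        "status": status,
    }


# Baseline 20 and week-4 score 13 follow the scenario; 17 at week 2 is made up.
lilly = [Visit(0, 20), Visit(2, 17), Visit(4, 13)]
print(summarize(lilly))
```

With these inputs the summary reports a 7-point drop and labels it a reliable improvement that is still above the threshold, which matches the point in the scenario that progress can be meaningful before scores reach the non-clinical range.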

Tracking common mental health symptoms this way, with data at baseline and throughout treatment, allows a provider to change or adapt interventions rather than chase something that can feel ephemeral, such as “feeling happy or looking better.”

For additional information on the measurement-based treatment to target approach, the following resources share in more depth the research informing it, along with broader, practical ways to integrate these practices into your own visits:

- Is Treatment Working? Detecting Real Change in the Treatment of Child and Adolescent Depression

- AACAP Clinical Update: Collaborative Mental Health Care for Children and Adolescents in Primary Care

Pawlowski is a child and adolescent consulting psychiatrist. She is a division chief at the University of Vermont Medical Center where she focuses on primary care mental health integration within primary care pediatrics, internal medicine, and family medicine.

Common Herbicide a Player in Neurodegeneration?

new research showed.

Researchers found that glyphosate exposure, even at regulated levels, was associated with increased neuroinflammation and accelerated Alzheimer’s disease–like pathology in mice, an effect that persisted after a 6-month recovery period during which exposure was stopped.

“More research is needed to understand the consequences of glyphosate exposure to the brain in humans and to understand the appropriate dose of exposure to limit detrimental outcomes,” said co–senior author Ramon Velazquez, PhD, with Arizona State University, Tempe.

The study was published online in The Journal of Neuroinflammation.

Persistent Accumulation Within the Brain

Glyphosate is the most heavily applied herbicide in the United States, with roughly 300 million pounds used annually in agricultural communities nationwide. It is also used for weed control in parks, residential areas, and personal gardens.

The Environmental Protection Agency (EPA) has determined that glyphosate poses no risks to human health when used as directed. But the World Health Organization’s International Agency for Research on Cancer disagrees, classifying the herbicide as “possibly carcinogenic to humans.”

In addition to the possible cancer risk, multiple reports have also suggested potential harmful effects of glyphosate exposure on the brain.

In earlier work, Velazquez and colleagues showed that glyphosate crosses the blood-brain barrier and infiltrates the brains of mice, contributing to neuroinflammation and other detrimental effects on brain function.

In their latest study, they examined the long-term effects of glyphosate exposure on neuroinflammation and Alzheimer’s disease–like pathology using a mouse model.

They dosed 4.5-month-old mice genetically predisposed to Alzheimer’s disease and non-transgenic control mice with either 0, 50, or 500 mg/kg of glyphosate daily for 13 weeks followed by a 6-month recovery period.

The high dose is similar to levels used in earlier research, and the low dose is close to the limit used to establish the current EPA acceptable dose in humans.

Glyphosate’s metabolite, aminomethylphosphonic acid, was detectable and persisted in mouse brain tissue even 6 months after exposure ceased, the researchers reported.

Additionally, there was a significant increase in soluble and insoluble fractions of amyloid-beta (Abeta), Abeta42 plaque load and plaque size, and phosphorylated tau at Threonine 181 and Serine 396 in hippocampus and cortex brain tissue from glyphosate-exposed mice, “highlighting an exacerbation of hallmark Alzheimer’s disease–like proteinopathies,” they noted.

Glyphosate exposure was also associated with significant elevations in both pro- and anti-inflammatory cytokines and chemokines in brain tissue of transgenic and normal mice and in peripheral blood plasma of transgenic mice.

Glyphosate-exposed transgenic mice also showed heightened anxiety-like behaviors and reduced survival.

“These findings highlight that many chemicals we regularly encounter, previously considered safe, may pose potential health risks,” co–senior author Patrick Pirrotte, PhD, with the Translational Genomics Research Institute, Phoenix, Arizona, said in a statement.

“However, further research is needed to fully assess the public health impact and identify safer alternatives,” Pirrotte added.

Funding for the study was provided by the National Institute on Aging, the National Cancer Institute, and the Arizona State University (ASU) Biodesign Institute. The authors have declared no relevant conflicts of interest.

A version of this article first appeared on Medscape.com.

FROM THE JOURNAL OF NEUROINFLAMMATION

Internet Use May Boost Mental Health in Later Life

TOPLINE:

and better self-reported health among adults aged 50 years or older across 23 countries than nonuse, a new cohort study suggests.

METHODOLOGY:

- Data were examined for more than 87,000 adults aged 50 years or older across 23 countries and from six aging cohorts.

- Researchers examined the potential association between internet use and mental health outcomes, including depressive symptoms, life satisfaction, and self-reported health.

- Polygenic scores were used for subset analysis to stratify participants from England and the United States according to their genetic risk for depression.

- Participants were followed up for a median of 6 years.

TAKEAWAY:

- Internet use was linked to consistent benefits across countries, including lower depressive symptoms (pooled average marginal effect [AME], –0.09; 95% CI, –0.12 to –0.07), higher life satisfaction (pooled AME, 0.07; 95% CI, 0.05-0.10), and better self-reported health (pooled AME, 0.15; 95% CI, 0.12-0.17).

- Frequent internet users showed better mental health outcomes than nonusers, and daily internet users showed significant improvements in depressive symptoms and self-reported health in England and the United States.

- Each additional wave of internet use was associated with reduced depressive symptoms (pooled AME, –0.06; 95% CI, –0.09 to –0.04) and improved life satisfaction (pooled AME, 0.05; 95% CI, 0.03-0.07).

- Benefits of internet use were observed across all genetic risk categories for depression in England and the United States, suggesting potential utility regardless of genetic predisposition.

IN PRACTICE:

“Our findings are relevant to public health policies and practices in promoting mental health in later life through the internet, especially in countries with limited internet access and mental health services,” the investigators wrote.

SOURCE:

The study was led by Yan Luo, Department of Data Science, City University of Hong Kong, Hong Kong, China. It was published online November 18 in Nature Human Behaviour.

LIMITATIONS:

The possibility of residual confounding and reverse causation prevented the establishment of direct causality between internet use and mental health. Selection bias may have also existed due to differences in baseline characteristics between the analytic samples and entire populations. Internet use was assessed through self-reported items, which could have led to recall and information bias. Additionally, genetic data were available for participants only from England and the United States.

DISCLOSURES:

The study was funded in part by the National Natural Science Foundation of China. The investigators reported no conflicts of interest.

This article was created using several editorial tools, including artificial intelligence, as part of the process. Human editors reviewed this content before publication. A version of this article first appeared on Medscape.com.

GLP-1s Hold Promise for Addiction but Questions Remain

Glucagon-like peptide 1 receptor agonist (GLP-1) prescriptions for diabetes and obesity treatment are soaring, as is the interest in their potential for treating an array of other conditions. One area in particular is addiction, which, like obesity and diabetes, has been increasing, both in terms of case numbers and deaths from drug overdose, excessive alcohol use, and tobacco/e-cigarettes.

“The evidence is very preliminary and very exciting,” said Nora D. Volkow, MD, director of the National Institute on Drug Abuse (NIDA). “The studies have been going on for more than a decade looking at the effects of GLP medications, mostly first generation and predominantly in rodents,” she said.

GLP “drugs like exenatide and liraglutide all reduced consumption of nicotine, of alcohol, of cocaine, and response to opioids,” Volkow said.

Clinical, Real-World Data Promising

Second-generation agents like semaglutide appear to hold greater promise than their first-generation counterparts. Volkow noted that semaglutide is not only a “much more potent drug” but has also been linked in recent findings to significant declines in heavy drinking days among patients with alcohol use disorder (AUD).

At the Research Society on Alcohol’s annual meeting in June, researchers from the University of North Carolina at Chapel Hill presented findings of a 2-month, phase 2, randomized clinical trial comparing two low doses (0.25 mg/wk, 0.5 mg/wk) of semaglutide with placebo in 48 participants reporting symptoms of AUD. Though preliminary and unpublished, the data showed a reduction in drinking quantity and heavy drinking in the semaglutide vs placebo groups.

Real-world evidence from electronic health records has also underscored the potential benefit of semaglutide in AUD. In a 12-month retrospective cohort analysis of the records of patients with obesity and no prior AUD diagnosis who were prescribed semaglutide (n = 45,797) or non-GLP-1 anti-obesity medications (naltrexone, topiramate; n = 38,028), semaglutide was associated with a 50% lower risk for a recurrent AUD diagnosis and a significantly lower (56%) risk for an incident AUD diagnosis. The association held across gender, age group, and race, and in patients with and without type 2 diabetes.

Likewise, another cohort analysis followed 1306 treatment-naive patients with type 2 diabetes and no prior AUD diagnosis who were prescribed semaglutide or non-GLP-1 anti-diabetes medications for 12 months. Compared with people prescribed non-GLP-1 diabetes medications, those who took semaglutide had a 42% lower risk for recurrent alcohol use diagnosis, a finding that was consistent across gender, age group, and race, and regardless of whether the person had been diagnosed with obesity.

However, AUD is not the only addiction for which semaglutide appears to have potential benefit. Cohort studies conducted by Volkow and her colleagues have suggested as much as a 78% reduced risk of opioid overdose (in patients with comorbid obesity and type 2 diabetes) and a 44% reduction in cannabis use disorder in patients with type 2 diabetes and no prior cannabis use disorder history.

Unclear Mechanisms, Multiple Theories

It’s not entirely clear how semaglutide provides a path for addicts to reduce their cravings or which patients might benefit most.

Preclinical studies have suggested that GLP-1 receptors are expressed throughout the mesolimbic dopamine system and transmit dopamine directly to reward centers in the forebrain, for example, the nucleus accumbens. The drugs appear to reduce dopamine release and transmission to these reward centers, as well as to areas that are responsible for impulse control.

“What we’re seeing is counteracting mechanisms that allow you to self-regulate are also involved in addiction, but I don’t know to what extent these medications could help strengthen that,” said Volkow.

Henry Kranzler, MD, professor of psychiatry and director of the Center for Studies of Addiction at the University of Pennsylvania’s Perelman School of Medicine in Philadelphia, has a paper in press looking at genetic correlation between body mass index (BMI) and AUD. “Genetic analysis showed that many of the same genes are working in both disorders but in opposite directions,” he said.

The bottom line is that “they share genetics, but by no means are they the same; this gives us reason to believe that the GLP-1s could be beneficial in obesity but not nearly as beneficial for treating addiction,” said Kranzler.

Behind Closed Doors

Like many people with overweight or obesity who are on semaglutide, Bridget Pilloud, a writer who divides her time between Washington State and Arizona, no longer has any desire to drink.

“I used to really enjoy sitting and slowly sipping an Old Fashioned. I used to really enjoy specific whiskeys. Now, I don’t even like the flavor; the pleasure of drinking is gone,” she said.

Inexplicably, Pilloud said that she’s also given up compulsive shopping; “The hunt and acquisition of it was always really delicious to me,” she said.

Pilloud’s experience is not unique. Angela Fitch, MD, an obesity medicine specialist, co-founder and CMO of knownwell health, and former president of the Obesity Medicine Association, has had patients on semaglutide tell her that they’re not shopping as much.

But self-reports about alcohol consumption are far more common.

A 2023 analysis of social media posts reinforced that the experience is quite common, albeit self-reported.

Researchers used machine learning attribution mapping of 68,250 posts related to GLP-1 or GLP-1/glucose-dependent insulinotropic polypeptide agonists on the Reddit platform. Among the 1580 alcohol-related posts, 71% (1134/1580) of users of either drug said they had reduced cravings and decreased desire to drink. In a remote companion study of 153 people with obesity taking semaglutide (n = 56), tirzepatide (n = 50), or neither (n = 47), there appeared to be a suppression of the desire to consume alcohol, with users reporting fewer drinks and binge episodes than control individuals.

Self-reports also underscored the association between either of the medications and less stimulating/sedative effects of alcohol, compared both with before starting the medications and with controls.

Behind closed doors, there appears to be as much chatter about the potential of these agents for AUD and other addiction disorders as there are questions about factors like treatment duration, safety of long-term, chronic use, and dosage.

“We don’t have data around people with normal weight and how much risk that is to them if they start taking these medications for addiction and reduce their BMI as low as 18,” said Fitch.

There’s also the question of when and how to wean patients off the medications, a consideration that is quite important for patients with addiction problems, said Volkow.

“What happens when you become addicted to drugs is that you start to degrade social support systems needed for well-being,” she explained. “The big difference with drugs versus foods is that you can live happily with no drugs at all, whereas you die if you don’t eat. So, there are greater challenges in the ability to change the environment (eg, help stabilize everyday life so people have alternative reinforcers) when you remove the reward.”

Additional considerations range from overuse and the development of treatment-resistant obesity to the need to ensure that patients on these drugs receive ongoing management and, of course, access, noted Fitch.

Still, the NIDA coffers are open. “We’re waiting for proposals,” said Volkow.

Fitch is cofounder and CMO of knownwell health. Volkow reported no relevant financial relationships. Kranzler is a member of advisory boards for Altimmune, Clearmind Medicine, Dicerna Pharmaceuticals, Enthion Pharmaceuticals, Eli Lilly and Company, and Sophrosyne Pharmaceuticals; a consultant to Sobrera Pharma and Altimmune; the recipient of research funding and medication supplies for an investigator-initiated study from Alkermes; a member of the American Society of Clinical Psychopharmacology’s Alcohol Clinical Trials Initiative, which was supported in the past 3 years by Alkermes, Dicerna Pharmaceuticals, Ethypharm, Imbrium, Indivior, Kinnov, Eli Lilly, Otsuka, and Pear; and a holder of US patent 10,900,082 titled: “Genotype-guided dosing of opioid agonists,” issued on January 26, 2021.

A version of this article appeared on Medscape.com.

How Metals Affect the Brain

This transcript has been edited for clarity.

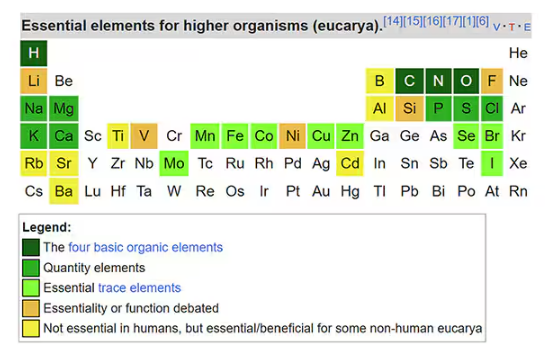

It has always amazed me that our bodies require these tiny amounts of incredibly rare substances to function. Sure, we need oxygen. We need water. But we also need molybdenum, which makes up just 1.2 parts per million of the Earth’s crust.

Without adequate molybdenum intake, we develop seizures, developmental delays, death. Fortunately, we need so little molybdenum that true molybdenum deficiency is incredibly rare — seen only in people on total parenteral nutrition without supplementation or those with certain rare genetic conditions. But still, molybdenum is necessary for life.

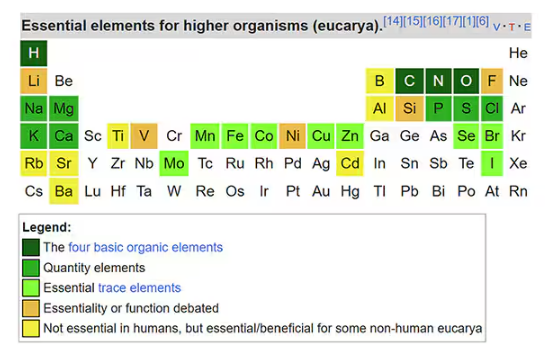

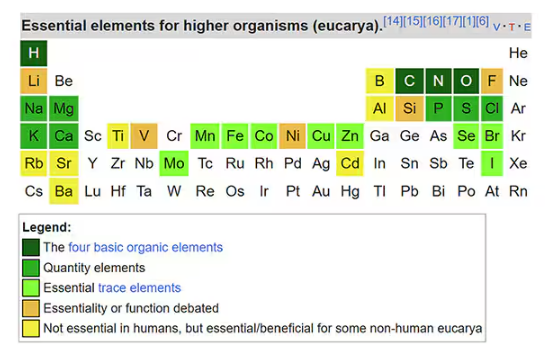

Many metals are. Figure 1 colors the essential minerals on the periodic table. You can see that to stay alive, we humans need not only things like sodium, but selenium, bromine, zinc, copper, and cobalt.

Some metals are very clearly not essential; we can all do without lead and mercury, and probably should.

But just because something is essential for life does not mean that more is better. The dose is the poison, as they say. And this week, we explore whether metals — even essential metals — might be adversely affecting our brains.

It’s not a stretch to think that metal intake could have weird effects on our nervous system. Lead exposure, primarily due to leaded gasoline, has been blamed for an average reduction of about 3 points in our national IQ, for example. But not all metals are created equal. Researchers set out to find out which might be more strongly associated with performance on cognitive tests and dementia, and reported their results in this study in JAMA Network Open.

To do this, they leveraged the MESA cohort study. This is a longitudinal study of a relatively diverse group of 6300 adults who were enrolled from 2000 to 2002 around the United States. At enrollment, they gave a urine sample and took a variety of cognitive tests. Important for this study was the digit symbol substitution test, where participants are provided a code and need to replace a list of numbers with symbols as per that code. Performance on this test worsens with age, depression, and cognitive impairment.

Participants were followed for more than a decade, and over that time, 559 (about 9%) were diagnosed with dementia.

Those baseline urine samples were assayed for a variety of metals — some essential, some very much not, as you can see in Figure 2.

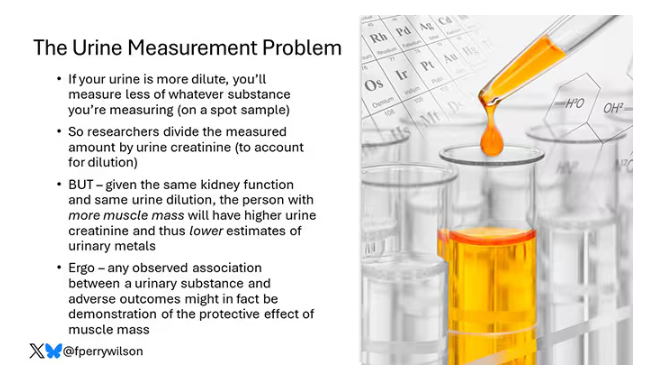

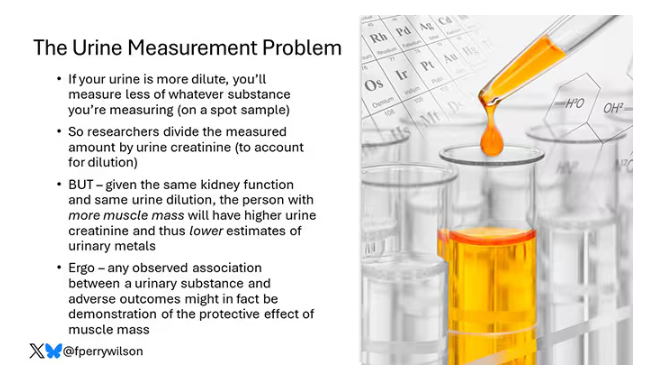

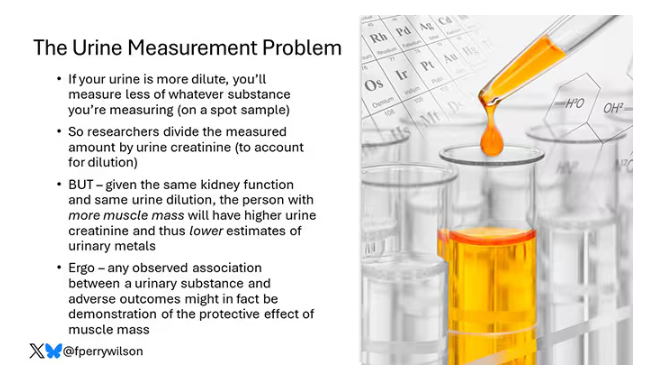

Now, I have to put my kidney doctor hat on for a second and talk about urine measurement ... of anything. The problem with urine is that the concentration can change a lot — by more than 10-fold, in fact — based on how much water you drank recently. Researchers correct for this, and in the case of this study, they do what a lot of researchers do: divide the measured concentration by the urine creatinine level.

This introduces a bit of a problem. Take two people with exactly the same kidney function, who drank exactly the same water, whose urine is exactly the same concentration. The person with more muscle mass will have more creatinine in that urine sample, since creatinine is a byproduct of muscle metabolism. Because people with more muscle mass are generally healthier, when you divide your metal concentration by urine creatinine, you get a lower number, which might lead you to believe that lower levels of the metal in the urine are protective. But in fact, what you’re seeing is that higher levels of creatinine are protective. I see this issue all the time and it will always color results of studies like this.
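To make the arithmetic concrete, here is a minimal sketch with made-up numbers. The function name and every value are assumptions chosen for illustration; none of them come from the study. Both hypothetical people have the same measured metal concentration and the same urine dilution, and differ only in how much creatinine their muscles produce.

```python
def creatinine_corrected(metal_ug_per_l, creatinine_g_per_l):
    """Return the metal level in micrograms per gram of creatinine."""
    if creatinine_g_per_l <= 0:
        raise ValueError("urine creatinine must be positive")
    return metal_ug_per_l / creatinine_g_per_l


# Hypothetical values: identical measured metal concentration in both people.
metal_ug_per_l = 2.0

# Hypothetical urine creatinine: the person with more muscle mass excretes more.
low_muscle = creatinine_corrected(metal_ug_per_l, creatinine_g_per_l=1.0)
high_muscle = creatinine_corrected(metal_ug_per_l, creatinine_g_per_l=2.0)

print(low_muscle)   # 2.0 ug per g creatinine
print(high_muscle)  # 1.0 ug per g creatinine
```

The person with more muscle appears to have half the metal level even though the measured concentration is identical. If that person is also healthier, the lower corrected value will look protective when the real driver is muscle mass.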

Okay, I am doffing my kidney doctor hat now to show you the results.

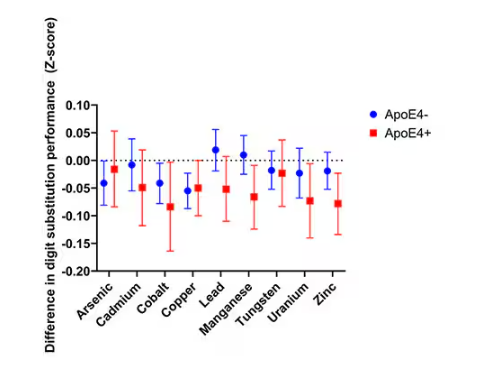

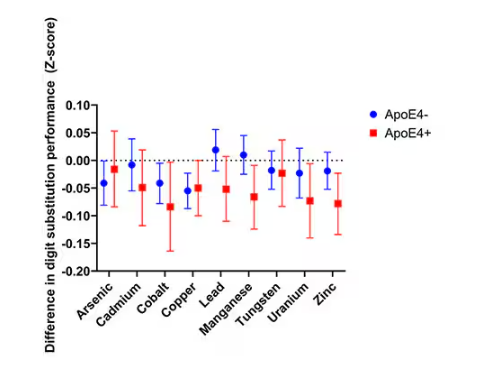

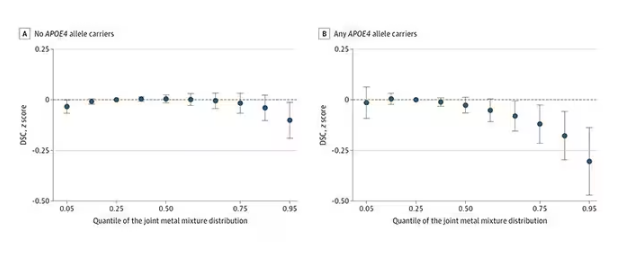

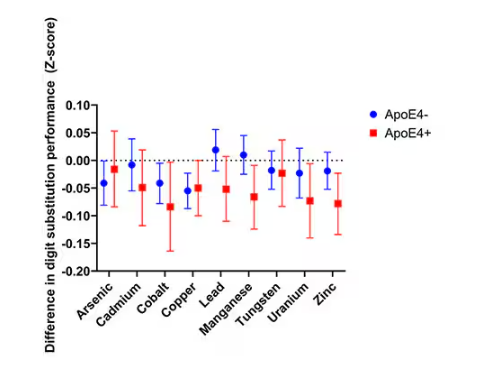

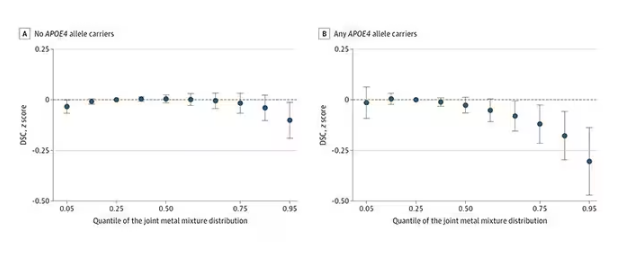

The researchers first looked at the relationship between metal concentrations in the urine and performance on cognitive tests. The results were fairly equivocal, save for the digit symbol substitution test, which is shown in Figure 4.

Even these results don’t ring major alarm bells for me. What you’re seeing here is the change in scores on the digit substitution test for each 25-percentile increase in urinary metal level — a pretty big change. And yet, you see really minor changes in the performance on the test. The digit substitution test is not an IQ test; but to give you a feeling for the magnitude of this change, if we looked at copper level, moving from the 25th to the 50th percentile would be associated with a loss of nine tenths of an IQ point.

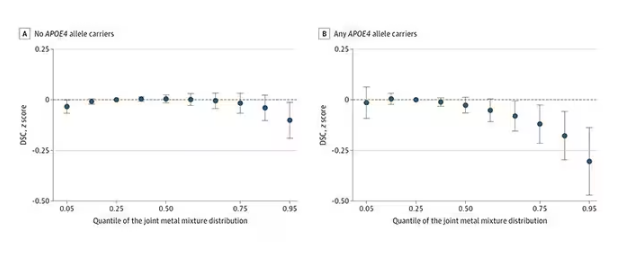

You see two colors on the Figure 4 graph, by the way. That’s because the researchers stratified their findings based on whether the individual carried the ApoE4 gene allele, which is a risk factor for the development of dementia. There are reasons to believe that neurotoxic metals might be worse in this population, and I suppose you do see generally more adverse effects on scores in the red lines compared with the blue lines. But still, we’re not talking about a huge effect size here.

Let’s look at the relationship between these metals and the development of dementia itself, a clearly more important outcome than how well you can replace numeric digits with symbols. I’ll highlight a few of the results that are particularly telling.

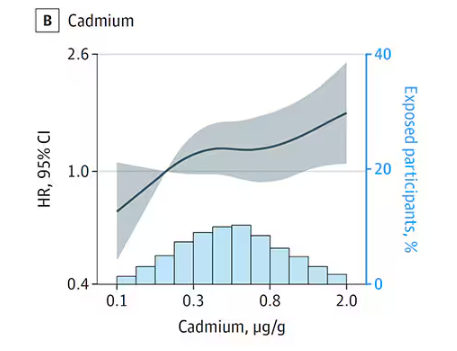

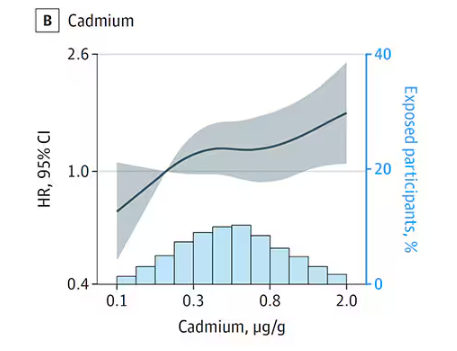

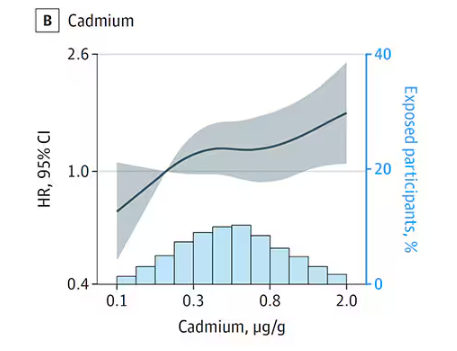

First, consider the nonessential mineral cadmium, which displays the type of relationship we would expect if the metal were neurotoxic: a clear, roughly linear increase in risk for dementia as urinary concentration increases.

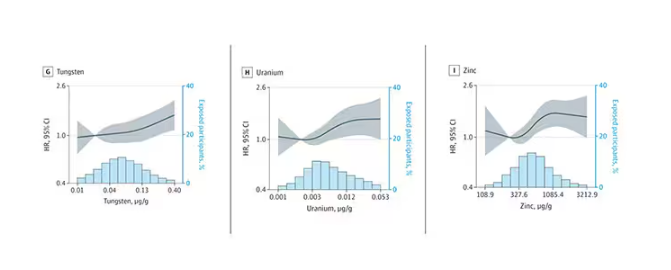

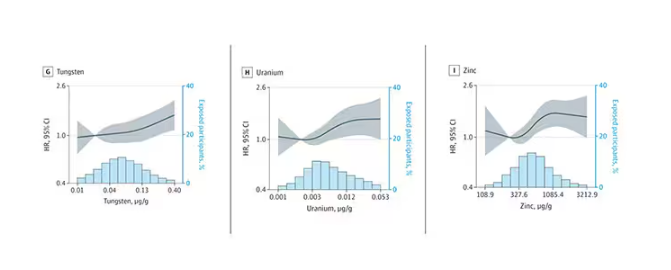

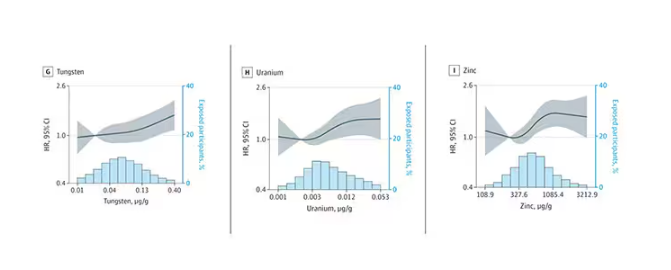

We see roughly similar patterns with the nonessential minerals tungsten and uranium, and the essential mineral zinc (beloved of respiratory-virus avoiders everywhere).

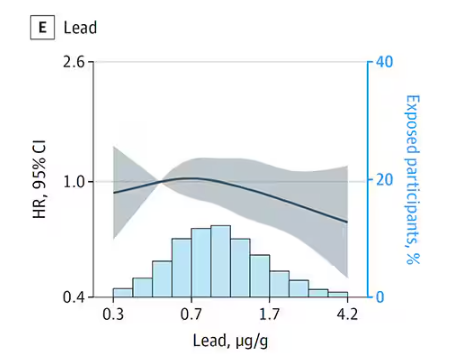

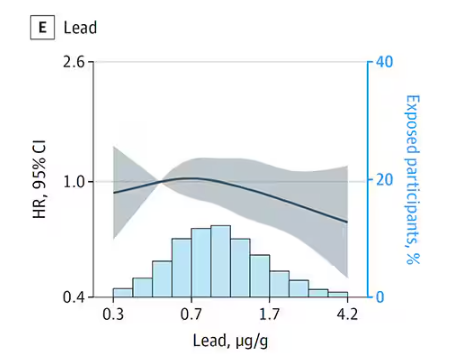

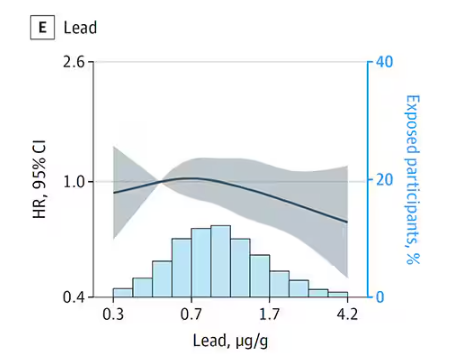

But it is very much not what we see for all metals. Strangest of all, look at lead, which shows basically no relationship with dementia.

This concerns me a bit. Earlier, I discussed the issue of measuring stuff in urine and how standardizing levels to the urine creatinine level introduces a bias due to muscle mass. One way around this is to standardize urine levels to some other marker of urine dilution, like osmolality. But more fundamental than that, I like to see positive and negative controls in studies like this. For example, lead strikes me as a good positive control here. If the experimental framework were valid, I would think we’d see a relationship between lead level and dementia.

For a negative control? Well, something we are quite sure is not neurotoxic — something like sulfur, which is relatively ubiquitous, used in a variety of biological processes, and efficiently eliminated. We don’t have that in this study.

The authors close their case by creating a model that combines all the metal levels, asking whether higher levels of metals in the urine in general worsen cognitive scores. And they find that the relationship exists, as you can see in Figure 8, both in carriers and noncarriers of ApoE4. But, to me, this is an even stronger argument for the creatinine problem. If it’s not a specific metal but just the general concentration of all metals, the risk for confounding by muscle mass is even higher.

So should we worry about ingesting metals? I suppose the answer is ... kind of.

I am sure we should be avoiding lead, despite the results of this study. It’s probably best to stay away from uranium too.

As for the essential metals, I’m sure there is some toxic dose; there’s a toxic dose for everything at some point. But I don’t see evidence in this study to make me worry that a significant chunk of the population is anywhere close to that.

Dr. Wilson is associate professor of medicine and public health and director of the Clinical and Translational Research Accelerator at Yale University, New Haven, Connecticut. He has disclosed no relevant financial relationships.

A version of this article appeared on Medscape.com.

This transcript has been edited for clarity.
