Mortality post perioperative CPR climbs with patient frailty

The frailer patients were going into surgery, according to their scores on an established frailty index, the greater their adjusted mortality risk at 30 days and the likelier they were to be discharged to a location other than their home.

The findings are based on more than 3,000 patients in an American College of Surgeons (ACS) quality improvement registry who underwent CPR during or soon after noncardiac surgery, about one-fourth of whom scored at least 40 on the revised Risk Analysis Index (RAI). The frailty index accounts for the patient’s comorbidities, cognition, functional and nutritional status, and other factors as predictors of postoperative mortality risk.

Such CPR for perioperative cardiac arrest “should not be considered futile just because a patient is frail, but neither should cardiac arrest be considered as ‘reversible’ in this population, as previously thought,” lead author Matthew B. Allen, MD, of Brigham and Women’s Hospital, Boston, said in an interview.

“We know that patients who are frail have higher risk of complications and mortality after surgery, and recent studies have demonstrated that frailty is associated with very poor outcomes following CPR in nonsurgical settings,” said Dr. Allen, an attending physician in the department of anesthesiology, perioperative, and pain medicine at his center.

Although cardiac arrest is typically regarded as being “more reversible” in the setting of surgery and anesthesia than elsewhere in the hospital, he observed, there’s very little data on whether that is indeed the case for frail patients.

The current analysis provides “a heretofore absent base of evidence to guide decision-making regarding CPR in patients with frailty who undergo surgery,” states the report, published in JAMA Network Open.

The 3,058 patients in the analysis, from the ACS National Surgical Quality Improvement database, received CPR for cardiac arrest during or soon after noncardiac surgery. Their mean age was 71 and 44% were women.

Their RAI scores ranged from 14 to 71 and averaged 37.7; one-fourth of the patients had scores of 40 or higher, the study’s threshold for identifying patients as “frail.”

Overall in the cohort, more cardiac arrests occurred during surgeries that entailed low-to-moderate physiologic stress (an Operative Stress Score of 1 to 3) than in the setting of emergency surgery: 67.9% vs. 39.1%, respectively.

During emergency surgeries, a greater proportion of frail than nonfrail patients experienced cardiac arrest, 42% and 38%, respectively. The same relationship was observed during low-to-moderate stress surgeries: 76.6% of frail patients and 64.8% of nonfrail patients. General anesthesia was used in about 93% of procedures for both frail and nonfrail patients, the report states.

The primary endpoint, 30-day mortality, was 58.6% overall, 67.4% in frail patients, and 55.6% for nonfrail patients. Frailty and mortality were positively associated, with an adjusted odds ratio (AOR) of 1.35 (95% confidence interval [CI], 1.11-1.65, P = .003) in multivariate analysis.
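To put the adjusted figure in context, the unadjusted (crude) odds ratio can be worked out from the two reported mortality rates alone. The sketch below is only that arithmetic; the function and variable names are illustrative, and it does not reproduce the study's multivariate model, whose covariates are not listed here.

```python
def odds(p):
    """Convert a probability (0 < p < 1) into odds."""
    return p / (1.0 - p)


def crude_odds_ratio(p_group_a, p_group_b):
    """Unadjusted odds ratio of group A relative to group B."""
    return odds(p_group_a) / odds(p_group_b)


# 30-day mortality rates as reported in the article.
frail_mortality = 0.674
nonfrail_mortality = 0.556

crude_or = crude_odds_ratio(frail_mortality, nonfrail_mortality)
print(f"Crude odds ratio, frail vs. nonfrail: {crude_or:.2f}")
```

The crude ratio comes out at roughly 1.65, higher than the reported AOR of 1.35, which is what one would expect if part of the raw difference is explained by the other factors included in the adjusted model.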

Of the cohort’s 1,164 patients who had been admitted from home and survived to discharge, 38.6% were discharged to a destination other than home; the corresponding rates for frail and nonfrail patients were 59.3% and 33.9%, respectively. Frailty and nonhome discharge were positively correlated with an AOR of 1.85 (95% CI, 1.31-2.62, P < .001).

“There is no such thing as a low-risk procedure in patients who are frail,” Dr. Allen said in an interview. “Frail patients should be medically optimized prior to undergoing surgery and anesthesia, and plans should be tailored to patients’ vulnerabilities to reduce the risk of complications and facilitate rapid recognition and treatment when they occur.”

Moreover, he said, management of clinical decompensation in the perioperative period should be a part of the shared decision-making process “to establish a plan aligned with the patients’ priorities whenever possible.”

The current study quantifies risk associated with frailty in the surgical setting, and “this quantification can help providers, patients, and insurers better grasp the growing frailty problem,” Balachundhar Subramaniam, MD, MPH, of Harvard Medical School, Boston, said in an interview.

Universal screening for frailty is “a must in all surgical patients” to help identify those who are high-risk and reduce their chances for perioperative adverse events, said Dr. Subramaniam, who was not involved in the study.

“Prehabilitation with education, nutrition, physical fitness, and psychological support offer the best chance of significantly reducing poor outcomes” in frail patients, he said, along with “continuous education” in the care of frail patients.

University of Colorado surgeon Joseph Cleveland, MD, not part of the current study, said that it “provides a framework for counseling patients” regarding their do-not-resuscitate status.

“We can counsel patients with frailty with this information,” he said, “that if their heart should stop or go into an irregular rhythm, their chances of surviving are not greater than 50% and they have a more than 50% chance of not being discharged home.”

Dr. Allen reported receiving a clinical translational starter grant from Brigham and Women’s Hospital Department of Anesthesiology; disclosures for the other authors are in the original article. Dr. Subramaniam disclosed research funding from Masimo and Merck and serving as an education consultant for Masimo. Dr. Cleveland reported no relevant financial relationships.

A version of this article appeared on Medscape.com.

FROM JAMA NETWORK OPEN

Aspirin not the best antiplatelet for CAD secondary prevention in meta-analysis

such as clopidogrel or ticagrelor rather than aspirin, suggests a patient-level meta-analysis of seven randomized trials.

The more than 24,000 patients in the meta-analysis, called PANTHER, had documented stable CAD, prior myocardial infarction (MI), or recent or remote surgical or percutaneous coronary revascularization.

About half of patients in each antiplatelet monotherapy trial received clopidogrel or ticagrelor, and the other half received aspirin. Follow-ups ranged from 6 months to 3 years.

Those taking a P2Y12 inhibitor showed a 12% reduction in risk (P = .012) for the primary efficacy outcome, a composite of cardiovascular (CV) death, MI, and stroke, over a median of about 1.35 years. The difference was driven primarily by a 23% reduction in risk for MI (P < .001); mortality seemed unaffected by antiplatelet treatment assignment.

Although the P2Y12 inhibitor and aspirin groups were similar with respect to risk of major bleeding, the P2Y12 inhibitor group showed significant reductions in risk for gastrointestinal (GI) bleeding, definite stent thrombosis, and hemorrhagic stroke; rates of hemorrhagic stroke were well under 1% in both groups.

The treatment effects were consistent across patient subgroups, including whether the aspirin comparison was with clopidogrel or ticagrelor.

“Taken together, our data challenge the central role of aspirin in secondary prevention and support a paradigm shift toward P2Y12 inhibitor monotherapy as long-term antiplatelet strategy in the sizable population of patients with coronary atherosclerosis,” Felice Gragnano, MD, PhD, said in an interview. “Given [their] superior efficacy and similar overall safety, P2Y12 inhibitors may be preferred [over] aspirin for the prevention of cardiovascular events in patients with CAD.”

Dr. Gragnano, of the University of Campania Luigi Vanvitelli, Caserta, Italy, who called PANTHER “the largest and most comprehensive synthesis of individual patient data from randomized trials comparing P2Y12 inhibitor monotherapy with aspirin monotherapy,” is lead author of the study, which was published online in the Journal of the American College of Cardiology.

Current guidelines recommend aspirin for antiplatelet monotherapy for patients with established CAD, Dr. Gragnano said, but “the primacy of aspirin in secondary prevention is based on historical trials conducted in the 1970s and 1980s and may not apply to contemporary practice.”

Moreover, later trials that compared P2Y12 inhibitors with aspirin for secondary prevention produced “inconsistent results,” possibly owing to their heterogeneous populations of patients with coronary, cerebrovascular, or peripheral vascular disease, he said. Study-level meta-analyses in this area “provide inconclusive evidence” because they haven’t evaluated treatment effects exclusively in patients with established CAD.

Most of the seven trials’ 24,325 participants had a history of MI, and some had peripheral artery disease (PAD); the rates were 56.2% and 9.1%, respectively. Coronary revascularization, either percutaneous or surgical, had been performed for about 70%. Most (61%) had presented with acute coronary syndromes, and the remainder had presented with chronic CAD.

About 76% of the combined cohorts were from Europe or North America; the rest were from Asia. The mean age of the patients was 64 years, and about 22% were women.

In all, 12,175 had been assigned to P2Y12 inhibitor monotherapy (62% received clopidogrel and 38% received ticagrelor); 12,147 received aspirin at dosages ranging from 75 mg to 325 mg daily.

The hazard ratio (HR) for the primary efficacy outcome, P2Y12 inhibitors vs. aspirin, was significantly reduced, at 0.88 (95% confidence interval [CI], 0.79-0.97; P = .012); the number needed to treat (NNT) to prevent one primary event over 2 years was 121, the report states.

The corresponding HR for MI was 0.77 (95% CI, 0.66-0.90; P < .001), for an NNT benefit of 136. For net adverse clinical events, the HR was 0.89 (95% CI, 0.81-0.98; P = .020), for an NNT benefit of 121.
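The relationship between these figures can be sketched with two simple conversions: a hazard ratio read as an approximate relative risk reduction (1 minus HR), and an NNT read back as the absolute risk reduction it implies (1 divided by NNT). This is a back-of-the-envelope illustration with hypothetical function names; the absolute event rates behind the reported NNTs are not given in the article, so the code only inverts the published numbers.

```python
def relative_reduction_pct(hazard_ratio):
    """Approximate relative risk reduction, in percent, implied by a hazard ratio."""
    return (1.0 - hazard_ratio) * 100.0


def implied_arr_pct(nnt):
    """Absolute risk reduction, in percentage points, implied by a number needed to treat."""
    return 100.0 / nnt


# (hazard ratio, NNT over 2 years) as reported in the article.
reported = {
    "primary composite": (0.88, 121),
    "myocardial infarction": (0.77, 136),
}

for outcome, (hr, nnt) in reported.items():
    print(
        f"{outcome}: about {relative_reduction_pct(hr):.0f}% relative reduction, "
        f"about {implied_arr_pct(nnt):.2f} percentage points absolute reduction"
    )
```

Read this way, the 12% and 23% relative reductions correspond to absolute differences of well under 1 percentage point over 2 years (about 0.83 and 0.74 points, respectively).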

Risk for major bleeding was not significantly different (HR, 0.87; 95% CI, 0.70-1.09; P = .23), nor were risks for stroke (HR, 0.84; 95% CI, 0.70-1.02; P = .076) or cardiovascular death (HR, 1.02; 95% CI, 0.86-1.20; P = .82).

Still, the P2Y12 inhibitor group showed significant risk reductions for the following:

- GI bleeding: HR, 0.75 (95% CI, 0.57-0.97; P = .027)

- Definite stent thrombosis: HR, 0.42 (95% CI, 0.19-0.97; P = .028)

- Hemorrhagic stroke: HR, 0.43 (95% CI, 0.23-0.83; P = .012)

The current findings are “hypothesis-generating but not definitive,” Dharam Kumbhani, MD, University of Texas Southwestern, Dallas, said in an interview.

It remains unclear “whether aspirin or P2Y12 inhibitor monotherapy is better for long-term maintenance use among patients with established CAD. Aspirin has historically been the agent of choice for this indication,” said Dr. Kumbhani, who with James A. de Lemos, MD, of the same institution, wrote an editorial accompanying the PANTHER report.

“It certainly would be appropriate to consider P2Y12 monotherapy preferentially for patients with prior or currently at high risk for GI or intracranial bleeding, for instance,” Dr. Kumbhani said. For the remainder, aspirin and P2Y12 inhibitors are both “reasonable alternatives.”

In their editorial, Dr. Kumbhani and Dr. de Lemos call the PANTHER meta-analysis “a well-done study with potentially important clinical implications.” The findings “make biological sense: P2Y12 inhibitors are more potent antiplatelet agents than aspirin and have less effect on gastrointestinal mucosal integrity.”

But for now, they wrote, “both aspirin and P2Y12 inhibitors remain viable alternatives for prevention of atherothrombotic events among patients with established CAD.”

Dr. Gragnano had no disclosures; potential conflicts for the other authors are in the report. Dr. Kumbhani reports no relevant relationships; Dr. de Lemos has received honoraria for participation in data safety monitoring boards from Eli Lilly, Novo Nordisk, AstraZeneca, and Janssen.

A version of this article first appeared on Medscape.com.

such as clopidogrel or ticagrelor rather than aspirin, suggests a patient-level meta-analysis of seven randomized trials.

The more than 24,000 patients in the meta-analysis, called PANTHER, had documented stable CAD, prior myocardial infarction (MI), or recent or remote surgical or percutaneous coronary revascularization.

About half of patients in each antiplatelet monotherapy trial received clopidogrel or ticagrelor, and the other half received aspirin. Follow-ups ranged from 6 months to 3 years.

Those taking a P2Y12 inhibitor showed a 12% reduction in risk (P = .012) for the primary efficacy outcome, a composite of cardiovascular (CV) death, MI, and stroke, over a median of about 1.35 years. The difference was driven primarily by a 23% reduction in risk for MI (P < .001); mortality seemed unaffected by antiplatelet treatment assignment.

Although the P2Y12 inhibitor and aspirin groups were similar with respect to risk of major bleeding, the P2Y12 inhibitor group showed significant reductions in risk for gastrointestinal (GI) bleeding, definite stent thrombosis, and hemorrhagic stroke; rates of hemorrhagic stroke were well under 1% in both groups.

The treatment effects were consistent across patient subgroups, including whether the aspirin comparison was with clopidogrel or ticagrelor.

“Taken together, our data challenge the central role of aspirin in secondary prevention and support a paradigm shift toward P2Y12 inhibitor monotherapy as long-term antiplatelet strategy in the sizable population of patients with coronary atherosclerosis,” Felice Gragnano, MD, PhD, said in an interview. “Given [their] superior efficacy and similar overall safety, P2Y12 inhibitors may be preferred [over] aspirin for the prevention of cardiovascular events in patients with CAD.”

Dr. Gragnano, of the University of Campania Luigi Vanvitelli, Caserta, Italy, who called PANTHER “the largest and most comprehensive synthesis of individual patient data from randomized trials comparing P2Y12 inhibitor monotherapy with aspirin monotherapy,” is lead author of the study, which was published online in the Journal of the American College of Cardiology.

Current guidelines recommend aspirin for antiplatelet monotherapy for patients with established CAD, Dr. Gragnano said, but “the primacy of aspirin in secondary prevention is based on historical trials conducted in the 1970s and 1980s and may not apply to contemporary practice.”

Moreover, later trials that compared P2Y12 inhibitors with aspirin for secondary prevention produced “inconsistent results,” possibly owing to their heterogeneous populations of patients with coronary, cerebrovascular, or peripheral vascular disease, he said. Study-level meta-analyses in this area “provide inconclusive evidence” because they haven’t evaluated treatment effects exclusively in patients with established CAD.

Most of the seven trials’ 24,325 participants had a history of MI, and some had peripheral artery disease (PAD); the rates were 56.2% and 9.1%, respectively. Coronary revascularization, either percutaneous or surgical, had been performed for about 70%. Most (61%) had presented with acute coronary syndromes, and the remainder had presented with chronic CAD.

About 76% of the combined cohorts were from Europe or North America; the rest were from Asia. The mean age of the patients was 64 years, and about 22% were women.

In all, 12,175 had been assigned to P2Y12 inhibitor monotherapy (62% received clopidogrel and 38% received ticagrelor); 12,147 received aspirin at dosages ranging from 75 mg to 325 mg daily.

The hazard ratio (HR) for the primary efficacy outcome, P2Y12 inhibitors vs. aspirin, was significantly reduced, at 0.88 (95% confidence interval [CI], 0.79-0.97; P = .012); the number needed to treat (NNT) to prevent one primary event over 2 years was 121, the report states.

The corresponding HR for MI was 0.77 (95% CI, 0.66-0.90; P < .001), for an NNT benefit of 136. For net adverse clinical events, the HR was 0.89 (95% CI, 0.81-0.98; P = .020), for an NNT benefit of 121.
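For readers who want to translate those NNT figures back into absolute terms, the short sketch below applies the standard relationship NNT = 1 / absolute risk reduction (ARR). It is illustrative only: the function name is hypothetical, and the report may have derived its NNTs from time-to-event estimates rather than a simple reciprocal, so the implied ARRs are approximations.

```python
def implied_arr(nnt: float) -> float:
    """Return the absolute risk reduction (as a fraction) implied by an NNT.

    Uses the textbook identity NNT = 1 / ARR; this is an assumption about
    how to read the figures, not the report's own calculation.
    """
    if nnt <= 0:
        raise ValueError("NNT must be positive")
    return 1.0 / nnt


# NNT values quoted in the article.
reported_nnt = {"primary efficacy outcome": 121, "myocardial infarction": 136}

for outcome, nnt in reported_nnt.items():
    arr_pct = 100 * implied_arr(nnt)
    print(f"{outcome}: NNT {nnt} -> ARR of about {arr_pct:.2f} percentage points")
```

By this reading, an NNT of 121 corresponds to an absolute risk reduction of roughly 0.8 percentage points, which puts the modest size of the benefit in context alongside the relative reduction expressed by the hazard ratio.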

Risk for major bleeding was not significantly different (HR, 0.87; 95% CI, 0.70-1.09; P = .23), nor were risks for stroke (HR, 0.84; 95% CI, 0.70-1.02; P = .076) or cardiovascular death (HR, 1.02; 95% CI, 0.86-1.20; P = .82).

Still, the P2Y12 inhibitor group showed significant risk reductions for the following:

- GI bleeding: HR, 0.75 (95% CI, 0.57-0.97; P = .027)

- Definite stent thrombosis: HR, 0.42 (95% CI, 0.19-0.97; P = .028)

- Hemorrhagic stroke: HR, 0.43 (95% CI, 0.23-0.83; P = .012)

The current findings are “hypothesis-generating but not definitive,” Dharam Kumbhani, MD, University of Texas Southwestern, Dallas, said in an interview.

It remains unclear “whether aspirin or P2Y12 inhibitor monotherapy is better for long-term maintenance use among patients with established CAD. Aspirin has historically been the agent of choice for this indication,” said Dr. Kumbhani, who with James A. de Lemos, MD, of the same institution, wrote an editorial accompanying the PANTHER report.

“It certainly would be appropriate to consider P2Y12 monotherapy preferentially for patients with prior or currently at high risk for GI or intracranial bleeding, for instance,” Dr. Kumbhani said. For the remainder, aspirin and P2Y12 inhibitors are both “reasonable alternatives.”

In their editorial, Dr. Kumbhani and Dr. de Lemos call the PANTHER meta-analysis “a well-done study with potentially important clinical implications.” The findings “make biological sense: P2Y12 inhibitors are more potent antiplatelet agents than aspirin and have less effect on gastrointestinal mucosal integrity.”

But for now, they wrote, “both aspirin and P2Y12 inhibitors remain viable alternatives for prevention of atherothrombotic events among patients with established CAD.”

Dr. Gragnano had no disclosures; potential conflicts for the other authors are in the report. Dr. Kumbhani reports no relevant relationships; Dr. de Lemos has received honoraria for participation in data safety monitoring boards from Eli Lilly, Novo Nordisk, AstraZeneca, and Janssen.

A version of this article first appeared on Medscape.com.

FROM JACC

Expanded coverage of carotid stenting in CMS draft proposal

The new memo follows a national coverage analysis for CAS that was initiated in January 2023 and considers 193 public comments received in the ensuing month.

That analysis followed a request from the Multispecialty Carotid Alliance (MSCA) to make the existing guidelines less restrictive.

The decision proposal would expand coverage for CAS “to standard surgical risk patients by removing the limitation of coverage to only high surgical risk patients.” It would limit coverage to patients for whom CAS is considered “reasonable and necessary” and who are either symptomatic with carotid stenosis of 50% or greater or asymptomatic with carotid stenosis of at least 70%.
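The stenosis thresholds in the proposal can be written as a simple rule. The sketch below is a hypothetical illustration, not CMS logic: the function name and inputs are assumptions, and it encodes only the two numeric cutoffs described above, not the “reasonable and necessary” determination or any other coverage condition.

```python
def meets_proposed_stenosis_threshold(symptomatic: bool, stenosis_pct: float) -> bool:
    """Check the stenosis cutoffs described in the draft proposal.

    Symptomatic patients: carotid stenosis of 50% or greater.
    Asymptomatic patients: carotid stenosis of at least 70%.
    Other coverage conditions are deliberately not modeled here.
    """
    threshold = 50.0 if symptomatic else 70.0
    return stenosis_pct >= threshold


# Hypothetical cases, for illustration only.
print(meets_proposed_stenosis_threshold(symptomatic=True, stenosis_pct=55))
print(meets_proposed_stenosis_threshold(symptomatic=False, stenosis_pct=55))
```

The same degree of stenosis can therefore fall on either side of the line depending on whether the patient is symptomatic.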

The proposal would require practitioners to “engage in a formal shared decision-making interaction with the beneficiary” that involves use of a “validated decision-making tool.” The conversation must include discussion of all treatment options and their risks and benefits and cover information from the clinical guidelines, as well as “incorporate the patient’s personal preferences and priorities.”

Many of the proposed coverage criteria resemble recommendations from several societies that offered comments in response to the Jan. 12 CMS statement that led to the current draft proposal. They include, along with MSCA, the American Association of Neurological Surgeons and the Congress of Neurological Surgeons, and jointly the American College of Cardiology and the American Heart Association.

Carotid stenting, commented the ACC/AHA, “was first introduced in 1994, and the field has matured in the last 3 decades.” The procedure “is a well-established treatment option.” The groups declared support for “removal of the facility and operator requirement for CAS consistent with the current state of the published literature and standard clinical practice.”

The current CMS draft proposal acknowledges the publication of five major randomized controlled trials and a number of “large, prospective registry-based studies” since 2009 that support its proposed coverage criteria.

Collectively, it states, the evidence “suffices to demonstrate that CAS and [carotid endarterectomy] are similarly effective” with respect to the clinical primary endpoints of recent trials “in patients with either standard or high surgical risk and who are symptomatic with carotid artery stenosis ≥ 50% or asymptomatic with stenosis ≥ 70%.”

A version of this article appeared on Medscape.com.

Peripartum cardiomyopathy raises risks at future pregnancy despite LV recovery

, a new study suggests.

Researchers looked at the long-term outcomes in a cohort of women who had developed PPCM and became pregnant again several years later, comparing those with LV function that had “normalized” in the interim against those with persisting LV dysfunction.

In their analysis, adverse maternal outcomes 5 years after an index pregnancy were significantly worse among those in whom LV dysfunction had persisted, compared with those with recovered LV function. The risk of relapsed PPCM persisted out to 8 years. Mortality remained high in both groups through the follow-up.

The study suggests that “women with PPCM need long-term follow-up by cardiology, as mortality does not abate over time,” Kalgi Modi, MD, Louisiana State University, Shreveport, said in an interview.

Women with a history of PPCM, she said, need “multidisciplinary and shared decision-making for family planning, because normalization of left ventricular function after index pregnancy does not guarantee a favorable outcome in the subsequent pregnancies.”

Dr. Modi is senior author on the study published online in the Journal of the American College of Cardiology.

The current findings are important to women with a history of PPCM who are “contemplating future pregnancy,” Afshan Hameed, MD, a maternal-fetal medicine specialist and cardiologist at the University of California, Irvine, said in an interview. The investigators suggest that “complete recovery of cardiac function after PPCM does not guarantee a favorable outcome in future pregnancy,” agreed Dr. Hameed, who was not involved in the current study. Future pregnancies must therefore “be highly discouraged or considered with caution even in patients who have recovered their cardiac function.”

To investigate the impact of PPCM on risk at subsequent pregnancies, the researchers studied 45 patients with PPCM who had gone on to have at least one more pregnancy, the first a median of 28 months later. Their mean age was 27 and 80% were Black; they were followed for a median of 8 years.

Peripartum cardiomyopathy, defined as idiopathic heart failure with LV ejection fraction (LVEF) 45% or less in the last month of pregnancy through the following 5 months, was diagnosed post partum in 93.3% and antepartum in the remaining 6.7% (mean time of diagnosis, 6 weeks post partum).

The mean LVEF fell from 45.1% at the index pregnancy to 41.2% (P = .009) at subsequent pregnancies. The “recovery group” included the 30 women with LVEF recovery to 50% or higher after the index pregnancy, and the remaining 15 with persisting LV dysfunction – defined as LVEF < 50% – made up the “nonrecovery group.”

Recovery of LVEF was associated with a reduced risk of persisting LV dysfunction, the report states, at a hazard ratio of 0.08 (95% CI, 0.01-0.64; P = .02) after adjustment for hypertension, diabetes, and history of preeclampsia. But that risk went up sharply in association with illicit drug use, similarly adjusted, with an HR of 9.08 (95% CI, 1.38-59.8; P = .02).

The nonrecovery group, compared with the recovery group, experienced significantly higher rates of adverse maternal outcomes (53.3% vs. 20.0%; P = .04) – a composite endpoint that included relapse PPCM (33.3% vs. 3.3%; P = .01), HF (53.3% vs. 20.0%; P = .03), cardiogenic shock, thromboembolic events, and death – at 5 years. However, all-cause mortality at 5 years did not differ significantly between the two groups (13.3% vs. 3.3%; P = .25).

At a median of 8 years, all-cause mortality still did not differ significantly between the two groups (20.0% vs. 20.0%; P = 1.00), and the difference in overall adverse maternal outcomes had gone from significant to nonsignificant (53.3% vs. 33.3%; P = .20). The difference in relapse PPCM between groups remained significant after 8 years (53.3% vs. 23.3%; P = .04).

The study is limited by its retrospective nature, a relatively small population, and lack of racial diversity, the report notes.

Indeed, most of the study’s subjects were Black, and previous studies have demonstrated a “different phenotypic presentation and outcome in African American women with PPCM, compared with non–African American women,” an accompanying editorial states.

Therefore, applicability of its findings to other populations “needs to be examined by urgently needed national prospective registries with long-term follow-up,” writes Uri Elkayam, MD, University of Southern California, Los Angeles.

Moreover, the study questions “whether the reverse remodeling and improvement of [LVEF] in women with PPCM represent a true recovery.” Prior studies “have shown an impaired contractile reserve as well as abnormal myocardial strain and reduced exercise capacity and even mortality in women with PPCM after RLV,” Dr. Elkayam notes.

It’s therefore possible – as with other forms of dilated cardiomyopathy – that LVEF normalization “does not represent a true recovery but a new steady state with subclinical myocardial dysfunction that is prone to development of recurrent [LV dysfunction] and clinical deterioration in response to various triggers such as long-standing hypertension, obesity, diabetes, illicit drug use,” and, “more importantly,” subsequent pregnancies.

The study points to “the need for a close long-term follow-up of women with PPCM” and provides “a rationale for early initiation of guideline-directed medical therapy after the diagnosis of PPCM and possible continuation even after improvement of LVEF.”

No funding source was reported. Dr. Modi and coauthors, Dr. Elkayam, and Dr. Hameed declare no relevant financial relationships.

A version of this article first appeared on Medscape.com.

FROM THE JOURNAL OF THE AMERICAN COLLEGE OF CARDIOLOGY

New definition for iron deficiency in CV disease proposed

with implications that may extend to cardiovascular disease in general.

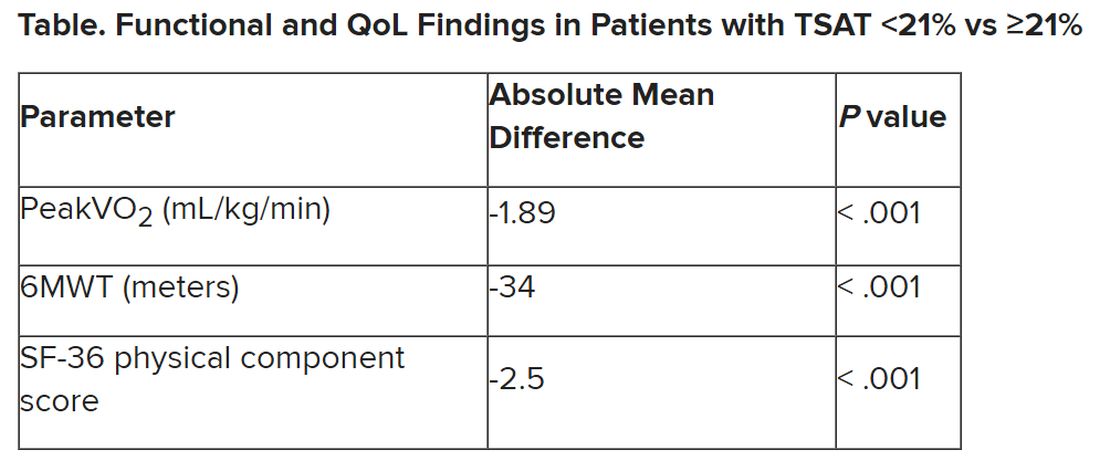

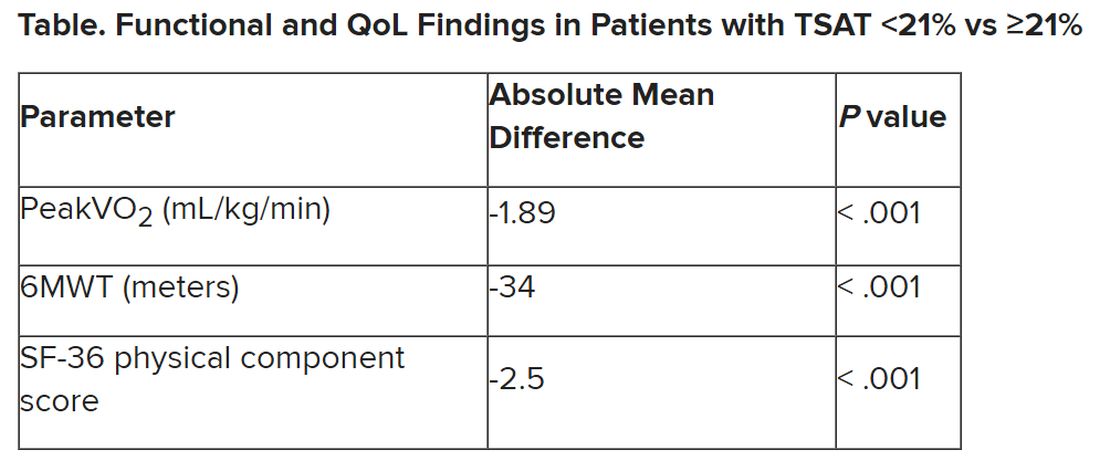

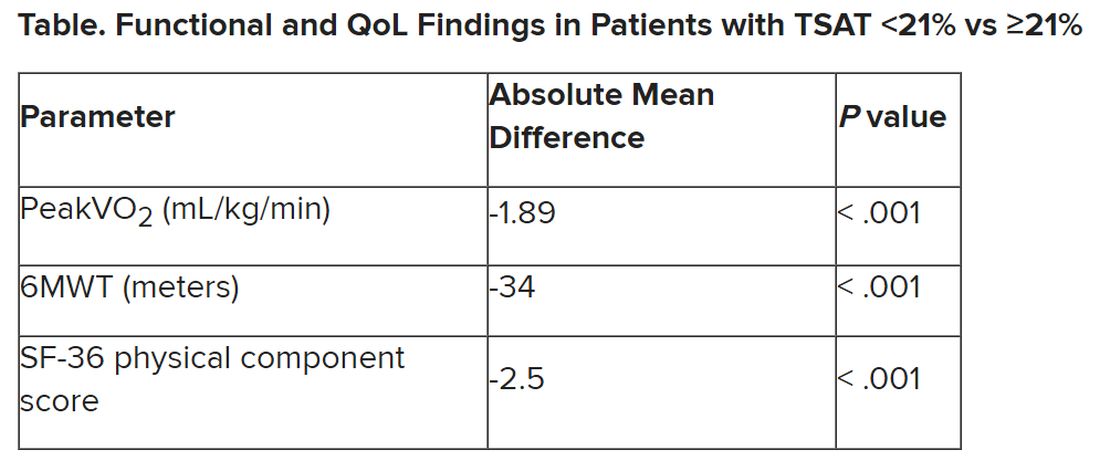

In the study involving more than 900 patients with PH, investigators at seven U.S. centers determined the prevalence of iron deficiency by two separate definitions and assessed its associations with functional measures and quality of life (QoL) scores.

An iron deficiency definition used conventionally in heart failure (HF) – ferritin less than 100 ng/mL or 100-299 ng/mL with transferrin saturation (TSAT) less than 20% – failed to discriminate patients with reduced peak oxygen consumption (peakVO2), 6-minute walk test (6MWT) results, and QoL scores on the 36-item Short Form Survey (SF-36).

But an alternative definition for iron deficiency, simply a TSAT less than 21%, did predict such patients with reduced peakVO2, 6MWT, and QoL. It was also associated with an increased mortality risk. The study was published in the European Heart Journal.

“A low TSAT, less than 21%, is key in the pathophysiology of iron deficiency in pulmonary hypertension” and is associated with those important clinical and functional characteristics, lead author Pieter Martens, MD, PhD, said in an interview. The study “underscores the importance of these criteria in future intervention studies in the field of pulmonary hypertension testing iron therapies.”

A broader implication is that “we should revise how we define iron deficiency in heart failure and cardiovascular disease in general and how we select patients for iron therapies,” said Dr. Martens, of the Heart, Vascular & Thoracic Institute of the Cleveland Clinic.

Iron’s role in pulmonary vascular disease

“Iron deficiency is associated with an energetic deficit, especially in high energy–demanding tissue, leading to early skeletal muscle acidification and diminished left and right ventricular (RV) contractile reserve during exercise,” the published report states. It can lead to “maladaptive RV remodeling,” which is a “hallmark feature” predictive of morbidity and mortality in patients with pulmonary vascular disease (PVD).

Some studies have suggested that iron deficiency is a common comorbidity in patients with PVD, their estimates of its prevalence ranging widely due in part to the “absence of a uniform definition,” write the authors.

Dr. Martens said the current study was conducted partly in response to the increasingly common observation that the HF-associated definition of iron deficiency “has limitations.” Yet, “without validation in the field of pulmonary hypertension, the 2022 pulmonary hypertension guidelines endorse this definition.”

As iron deficiency is a causal risk factor for HF progression, Dr. Martens added, the HF field has “taught us the importance of using validated definitions for iron deficiency when selecting patients for iron treatment in randomized controlled trials.”

Moreover, some evidence suggests that iron deficiency by some definitions may be associated with diminished exercise capacity and QoL in patients with PVD, which are associations that have not been confirmed in large studies, the report notes.

Therefore, it continues, the study sought to “determine and validate” the optimal definition of iron deficiency in patients with PVD; document its prevalence; and explore associations between iron deficiency and exercise capacity, QoL, and cardiac and pulmonary vascular remodeling.

Evaluating definitions of iron deficiency

The prospective study, called PVDOMICS, entered 1,195 subjects with available iron levels. After exclusion of 38 patients with sarcoidosis, myeloproliferative disease, or hemoglobinopathy, there remained 693 patients with “overt” PH, 225 with a milder form of PH who served as PVD comparators, and 90 age-, sex-, and race/ethnicity-matched “healthy” adults who served as controls.

According to the conventional HF definition of iron deficiency – that is, ferritin less than 100 ng/mL, or ferritin 100-299 ng/mL with TSAT less than 20% – the prevalence was 74% in patients with overt PH and 72% in those “across the PVD spectrum.”

But by that definition, iron-deficient and non-iron-deficient patients didn’t differ significantly in peakVO2, 6MWT distance, or SF-36 physical component scores.

In contrast, patients meeting the alternative definition of iron deficiency of TSAT less than 21% showed significantly reduced functional and QoL measures, compared with those with TSAT greater than or equal to 21%.
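The two definitions being compared can be written as simple threshold rules. The following is a minimal sketch for illustration only, not code from the study: the function names are hypothetical, the HF rule follows the criteria as this article describes them (ferritin less than 100 ng/mL, or 100-299 ng/mL with TSAT less than 20%), and treating the upper ferritin bound as "below 300" is an assumption.

```python
def iron_deficient_hf(ferritin_ng_ml: float, tsat_pct: float) -> bool:
    """Conventional HF definition as summarized in this article (hypothetical helper).

    Iron deficient if ferritin < 100 ng/mL, or if ferritin is 100-299 ng/mL
    and TSAT < 20%. The range 100-299 is modeled as 100 <= ferritin < 300,
    which is an assumption about how fractional values are handled.
    """
    if ferritin_ng_ml < 100:
        return True
    return 100 <= ferritin_ng_ml < 300 and tsat_pct < 20


def iron_deficient_tsat(tsat_pct: float) -> bool:
    """Alternative definition evaluated in the study: TSAT < 21%."""
    return tsat_pct < 21


# Two made-up patients showing how the definitions can disagree.
low_ferritin_normal_tsat = {"ferritin_ng_ml": 80.0, "tsat_pct": 30.0}
high_ferritin_low_tsat = {"ferritin_ng_ml": 350.0, "tsat_pct": 15.0}

for patient in (low_ferritin_normal_tsat, high_ferritin_low_tsat):
    print(
        patient,
        "HF definition:", iron_deficient_hf(**patient),
        "TSAT definition:", iron_deficient_tsat(patient["tsat_pct"]),
    )
```

In this sketch, the first made-up patient is flagged only by the HF definition and the second only by the TSAT definition, which shows how the two rules can select different groups of patients.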

The group with TSAT less than 21% also showed significantly more RV remodeling at cardiac MRI, compared with those who had TSAT greater than or equal to 21%, but their invasively measured pulmonary vascular resistance was comparable.

Of note, those with TSAT less than 21% also showed significantly increased all-cause mortality (hazard ratio, 1.63; 95% confidence interval, 1.13-2.34; P = .009) after adjustment for age, sex, hemoglobin, and natriuretic peptide levels.

“Proper validation of the definition of iron deficiency is important for prognostication,” the published report states, “but also for providing a working definition that can be used to identify suitable patients for inclusion in randomized controlled trials” of drugs for iron deficiency.

Additionally, the finding that TSAT less than 21% points to patients with diminished functional and exercise capacity is “consistent with more recent studies in the field of heart failure” that suggest “functional abnormalities and adverse cardiac remodeling are worse in patients with a low TSAT.” Indeed, the report states, such treatment effects have been “the most convincing” in HF trials.

Broader implications

An accompanying editorial agrees that the study’s implications apply well beyond PH. It highlights that iron deficiency is common in PH, and that the problem in PH is “not substantially different from the problem in patients with heart failure, chronic kidney disease, and cardiovascular disease in general,” lead editorialist John G.F. Cleland, MD, PhD, University of Glasgow, said in an interview. “It’s also common as people get older, even in those without these diseases.”

Dr. Cleland said the anemia definition currently used in cardiovascular research and practice is based on a hemoglobin concentration below the 5th percentile for age and sex in primarily young, healthy people, and not on its association with clinical outcomes.

“We recently analyzed data on a large population in the United Kingdom with a broad range of cardiovascular diseases and found that unless anemia is severe, [other] markers of iron deficiency are usually not measured,” he said. Low hemoglobin and low TSAT, but not low ferritin levels, are associated with a worse prognosis.

Dr. Cleland agreed that the HF-oriented definition is “poor,” with profound implications for the conduct of clinical trials. “If the definition of iron deficiency lacks specificity, then clinical trials will include many patients without iron deficiency who are unlikely to benefit from and might be harmed by IV iron.” Inclusion of such patients may also “dilute” any benefit that might emerge and render the outcome inaccurate.

But if the definition of iron deficiency lacks sensitivity, “then in clinical practice, many patients with iron deficiency may be denied a simple and effective treatment.”

Measuring serum iron could potentially be useful, but it’s usually not done in randomized trials “especially since taking an iron tablet can give a temporary ‘blip’ in serum iron,” Dr. Cleland said. “So TSAT is a reasonable compromise.” He said he “looks forward” to any further data on serum iron as a way of assessing iron deficiency and anemia.

Half full vs. half empty

Dr. Cleland likened the question of whom to treat with iron supplementation to a “glass half full versus half empty” clinical dilemma. “One approach is to give iron to everyone unless there’s evidence that they’re overloaded,” he said, “while the other is to withhold iron from everyone unless there’s evidence that they’re iron depleted.”

Recent evidence from the IRONMAN trial suggested that its patients with HF who received intravenous iron were less likely to be hospitalized for infections, particularly COVID-19, than a usual-care group. The treatment may also help reduce frailty.

“So should we be offering IV iron specifically to people considered iron deficient, or should we be ensuring that everyone over age 70 get iron supplements?” Dr. Cleland mused rhetorically. On a cautionary note, he added, perhaps iron supplementation will be harmful if it’s not necessary.

Dr. Cleland proposed “focusing for the moment on people who are iron deficient but investigating the possibility that we are being overly restrictive and should be giving iron to a much broader population.” That course, however, would require large population-based studies.

“We need more experience,” Dr. Cleland said, “to make sure that the benefits outweigh any risks before we can just give iron to everyone.”

Dr. Martens has received consultancy fees from AstraZeneca, Abbott, Bayer, Boehringer Ingelheim, Daiichi Sankyo, Novartis, Novo Nordisk, and Vifor Pharma. Dr. Cleland declares grant support, support for travel, and personal honoraria from Pharmacosmos and Vifor. Disclosures for other authors are in the published report and editorial.

A version of this article first appeared on Medscape.com.

with implications that may extend to cardiovascular disease in general.

In the study involving more than 900 patients with PH, investigators at seven U.S. centers determined the prevalence of iron deficiency by two separate definitions and assessed its associations with functional measures and quality of life (QoL) scores.

An iron deficiency definition used conventionally in heart failure (HF) – ferritin less than 100 ng/mL or 100-299 ng/mL with transferrin saturation (TSAT) less than 20% – failed to discriminate patients with reduced peak oxygen consumption (peakVO2), 6-minute walk test (6MWT) results, and QoL scores on the 36-item Short Form Survey (SF-36).

But an alternative definition for iron deficiency, simply a TSAT less than 21%, did identify patients with reduced peakVO2, 6MWT, and QoL. It was also associated with an increased mortality risk. The study was published in the European Heart Journal.

“A low TSAT, less than 21%, is key in the pathophysiology of iron deficiency in pulmonary hypertension” and is associated with those important clinical and functional characteristics, lead author Pieter Martens, MD, PhD, said in an interview. The study “underscores the importance of these criteria in future intervention studies in the field of pulmonary hypertension testing iron therapies.”

A broader implication is that “we should revise how we define iron deficiency in heart failure and cardiovascular disease in general and how we select patients for iron therapies,” said Dr. Martens, of the Heart, Vascular & Thoracic Institute of the Cleveland Clinic.

Iron’s role in pulmonary vascular disease

“Iron deficiency is associated with an energetic deficit, especially in high energy–demanding tissue, leading to early skeletal muscle acidification and diminished left and right ventricular (RV) contractile reserve during exercise,” the published report states. It can lead to “maladaptive RV remodeling,” which is a “hallmark feature” predictive of morbidity and mortality in patients with pulmonary vascular disease (PVD).
