Teaching Quality Improvement to Internal Medicine Residents to Address Patient Care Gaps in Ambulatory Quality Metrics

ABSTRACT

Objective: To teach internal medicine residents quality improvement (QI) principles in an effort to improve resident knowledge and comfort with QI, as well as address quality care gaps in resident clinic primary care patient panels.

Design: A QI curriculum was implemented for all residents rotating through a primary care block over a 6-month period. Residents completed Institute for Healthcare Improvement (IHI) modules, participated in a QI workshop, and received panel data reports, ultimately completing a plan-do-study-act (PDSA) cycle to improve colorectal cancer screening and hypertension control.

Setting and participants: This project was undertaken at Tufts Medical Center Primary Care, Boston, Massachusetts, the primary care teaching practice for all 75 internal medicine residents at Tufts Medical Center. All internal medicine residents were included, with 55 (73%) of the 75 residents completing the presurvey, and 39 (52%) completing the postsurvey.

Measurements: We administered a 10-question pre- and postintervention survey assessing resident attitudes toward and comfort with QI and familiarity with their panel data, and we measured rates of colorectal cancer screening and hypertension control in resident panels.

Results: There was an increase in the proportions of residents who had performed a PDSA cycle (P = .002), had completed outreach based on their panel data (P = .02), and felt comfortable both creating aim statements and designing and implementing PDSA cycles (P < .0001). Residents’ knowledge of their panel data increased significantly. There was no significant improvement in hypertension control, but colorectal cancer screening rates increased (P < .0001).

Conclusion: Providing panel data and performing targeted QI interventions can improve resident comfort with QI, translating to improvement in patient outcomes.

Keywords: quality improvement, resident education, medical education, care gaps, quality metrics.

As quality improvement (QI) has become an integral part of clinical practice, residency training programs have continued to evolve in how best to teach QI. The Accreditation Council for Graduate Medical Education (ACGME) Common Program Requirements mandate that the core competencies of residency programs include practice-based learning and improvement and systems-based practice.1 Residents should receive education in QI, receive data on quality metrics and benchmarks related to their patient population, and participate in QI activities. The Clinical Learning Environment Review (CLER) program was established to provide feedback to institutions on 6 focus areas, including patient safety and health care quality. During visits to institutions across the United States, CLER committees found that many residents had limited knowledge of QI concepts and limited access to data on quality metrics and benchmarks.2

There are many barriers to implementing a QI curriculum in residency programs, and creating and maintaining successful strategies has proven challenging.3 Many QI curricula for internal medicine residents have been described in the literature, but the results of many of these studies focus on resident self-assessment of QI knowledge and numbers of projects rather than on patient outcomes.4-13 As there is some evidence suggesting that patients treated by residents have worse outcomes on ambulatory quality measures when compared with patients treated by staff physicians,14,15 it is important to also look at patient outcomes when evaluating a QI curriculum. Experts in education recommend the following to optimize learning: exposure to both didactic and experiential opportunities, connection to health system improvement efforts, and assessment of patient outcomes in addition to learner feedback.16,17 A study also found that providing panel data to residents could improve quality metrics.18

In this study, we sought to investigate the effects of a resident QI intervention during an ambulatory block on both residents’ self-assessed QI knowledge and attitudes and patient quality metrics.

Methods

Curriculum

We implemented this educational initiative at Tufts Medical Center Primary Care, Boston, Massachusetts, the primary care teaching practice for all 75 internal medicine residents at Tufts Medical Center. Co-located with the 415-bed academic medical center in downtown Boston, the practice serves more than 40,000 patients, approximately 7000 of whom are cared for by resident primary care physicians (PCPs). The internal medicine residents rotate through the primary care clinic as part of continuity clinic during ambulatory or elective blocks. In addition to continuity clinic, the residents have 2 dedicated 3-week primary care rotations during the course of an academic year. Primary care rotations consist of 5 clinic sessions a week as well as structured teaching sessions. Each resident inherits a panel of patients from an outgoing senior resident, with an average panel size of 96 patients per resident.

Prior to this study intervention, our primary care curriculum included no formal QI teaching, and panel management had focused mainly on chart reviews of patients whom residents perceived to be at higher risk. Residents from all 3 postgraduate years were included in the intervention. From January 2018 to June 2018, we taught a QI curriculum during the 3-week primary care rotation; it consisted of the following components:

- Institute for Healthcare Improvement (IHI) module QI 102 completed independently online.

- A 2-hour QI workshop led by 1 of 2 primary care faculty with backgrounds in QI, during which residents were taught basic principles of QI, including how to craft aim statements and design plan-do-study-act (PDSA) cycles, and participated in a hands-on QI activity designed to model rapid cycle improvement (the Paper Airplane Factory19).

- Distribution by email, at the start of the primary care block, of individualized patient panel reports detailing each resident’s overall panel rates of colorectal cancer screening and hypertension (HTN) control, along with the average resident panel rates and the average attending panel rates. The reports also listed all of the resident’s patients who were overdue for colorectal cancer screening or whose last blood pressure (BP) was uncontrolled (systolic BP ≥ 140 mm Hg or diastolic BP ≥ 90 mm Hg). The reports were originally designed by our practice’s QI team and were run and exported monthly in Microsoft Excel format by our information technology (IT) administrator.

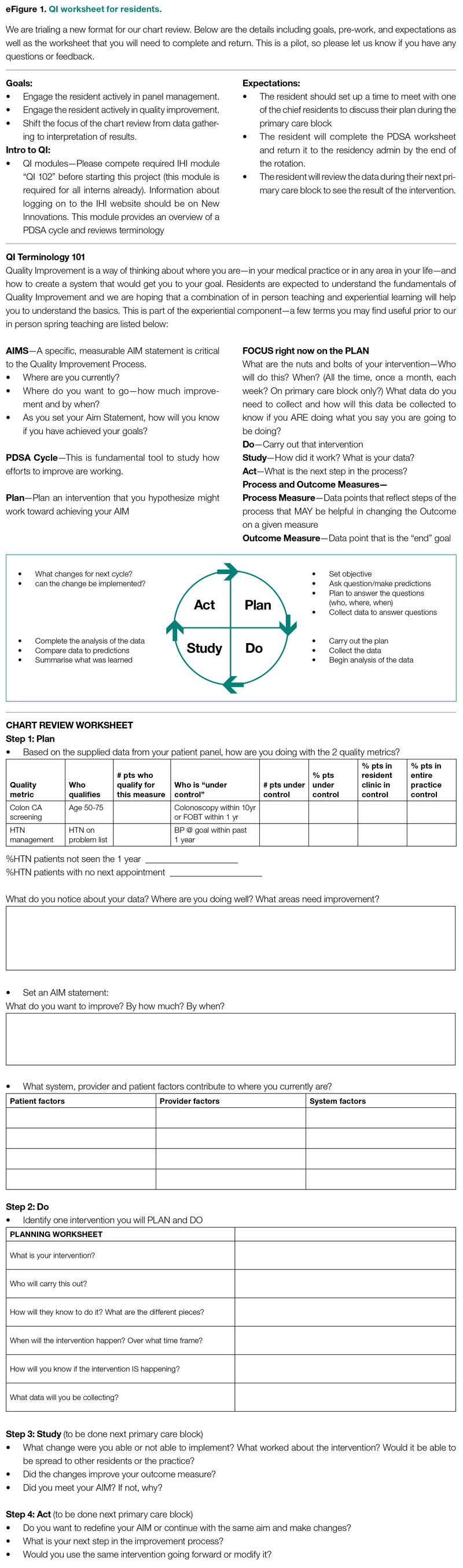

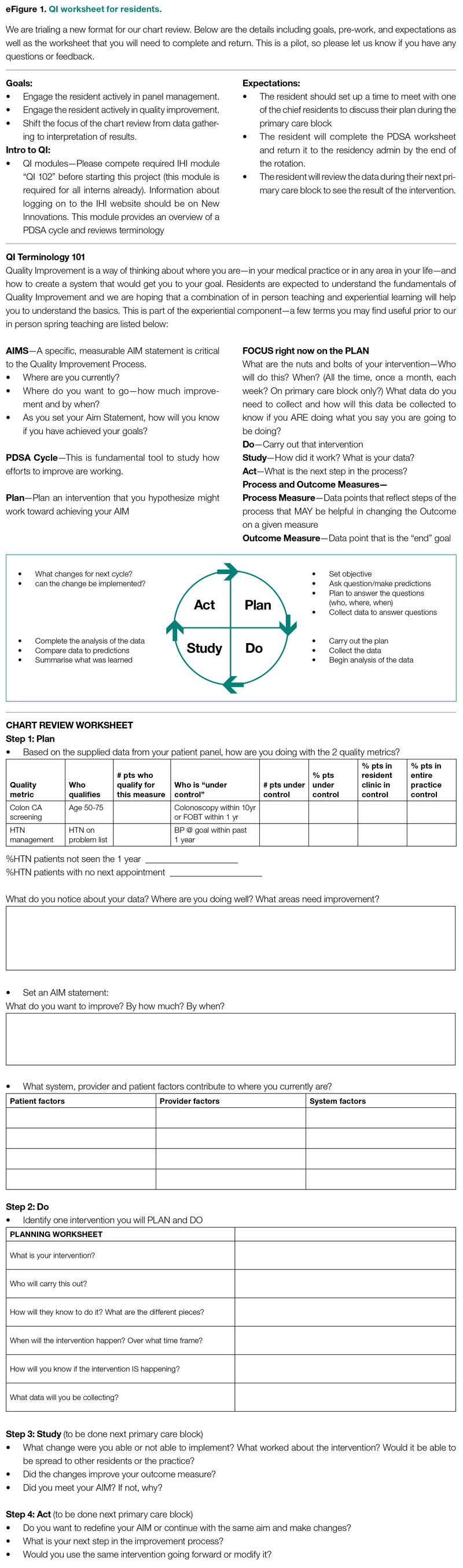

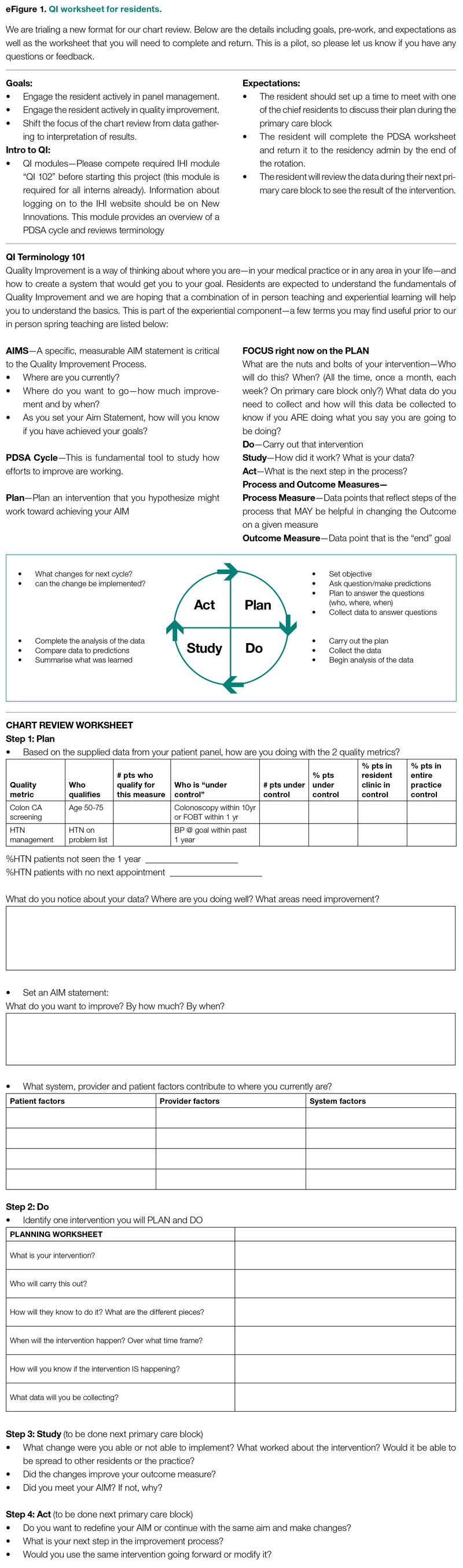

- Group instruction on aim statements, after which each resident was expected to write an individualized aim statement based on their own panel rates and to implement a PDSA cycle during the remainder of the primary care rotation, focusing on improving colorectal cancer screening and HTN control (see supplementary eFigure 1 online for the workshop worksheet).

- Residents were held accountable for their interventions through several check-ins. At the end of the primary care block, residents were required to submit completed worksheets showing the intervention they had undertaken and when it was performed. Approximately 1 to 2 months after submission, the 2 primary care attendings primarily responsible for QI education reviewed each resident’s work. They then sent the resident personalized feedback on whether the intervention had been completed or had succeeded, as evidenced by chart documentation such as direct patient outreach by phone, letter, or portal; outreach to the resident coordinator; a scheduled follow-up appointment; or booking or completion of colorectal cancer screening. This feedback also included suggestions for next steps, and resident preceptors were copied on these emails to reinforce the goals and plans. Finally, preceptors supported accountability by reviewing the residents’ worksheets and patient panel metrics with them during biannual evaluations.
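
The panel-report logic described above (panel-level screening and HTN control rates, plus outreach lists using the systolic ≥ 140 or diastolic ≥ 90 mm Hg cutoff) can be sketched as follows. This is a minimal illustration under stated assumptions, not the practice’s actual Excel report: the `Patient` record, its field names, and the choice to compute HTN control and BP flags only among patients with an HTN diagnosis are all hypothetical.

```python
from dataclasses import dataclass

# Cutoffs from the report definition: last BP is uncontrolled if
# systolic >= 140 mm Hg OR diastolic >= 90 mm Hg.
SBP_CUTOFF = 140
DBP_CUTOFF = 90


@dataclass
class Patient:
    # Hypothetical record layout; the real reports came from the EHR via IT.
    mrn: str
    has_htn: bool          # assumed denominator for the HTN control rate
    last_sbp: int          # most recent systolic BP, mm Hg
    last_dbp: int          # most recent diastolic BP, mm Hg
    crc_eligible: bool     # assumed denominator for colorectal screening
    crc_up_to_date: bool


def bp_uncontrolled(p: Patient) -> bool:
    """Apply the report's uncontrolled-BP rule to the last recorded reading."""
    return p.last_sbp >= SBP_CUTOFF or p.last_dbp >= DBP_CUTOFF


def panel_report(panel: list) -> dict:
    """Summarize one resident panel: two rates plus outreach lists."""
    htn = [p for p in panel if p.has_htn]
    crc = [p for p in panel if p.crc_eligible]
    controlled = sum(1 for p in htn if not bp_uncontrolled(p))
    screened = sum(1 for p in crc if p.crc_up_to_date)
    return {
        # None when a panel has no patients in the denominator.
        "htn_control_rate": controlled / len(htn) if htn else None,
        "crc_screening_rate": screened / len(crc) if crc else None,
        # Outreach lists analogous to those included in the emailed reports.
        "uncontrolled_bp": [p.mrn for p in htn if bp_uncontrolled(p)],
        "overdue_crc": [p.mrn for p in crc if not p.crc_up_to_date],
    }
```

Comparing a resident’s rates with the resident and attending averages, as the reports did, would only require running `panel_report` over every panel in each group and averaging the resulting rates.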

Evaluation

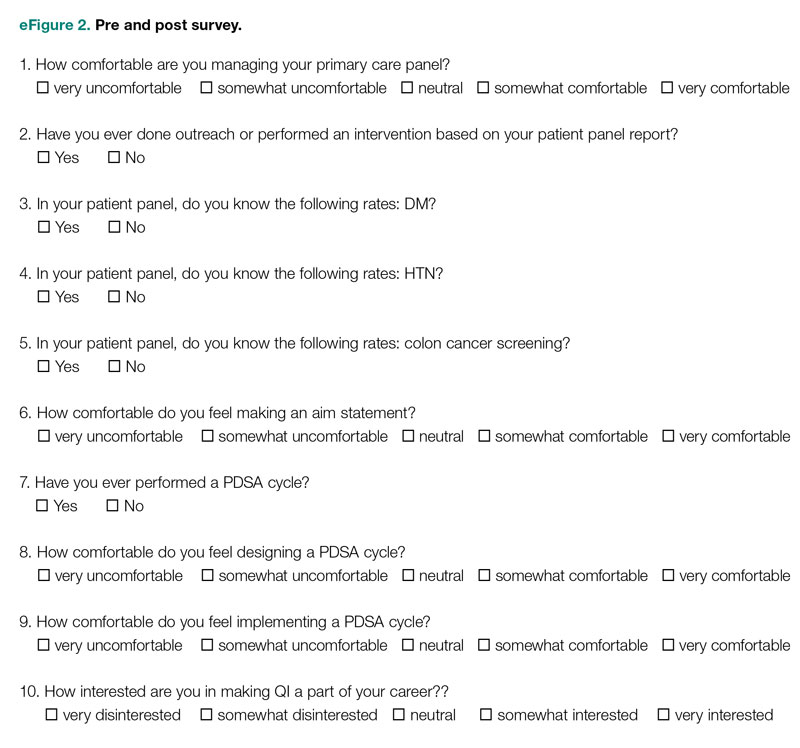

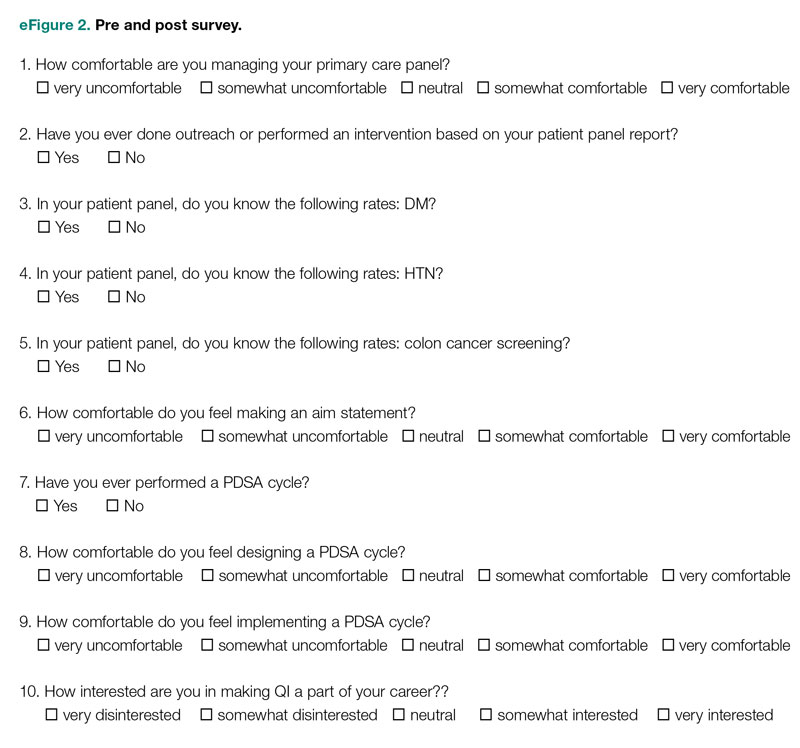

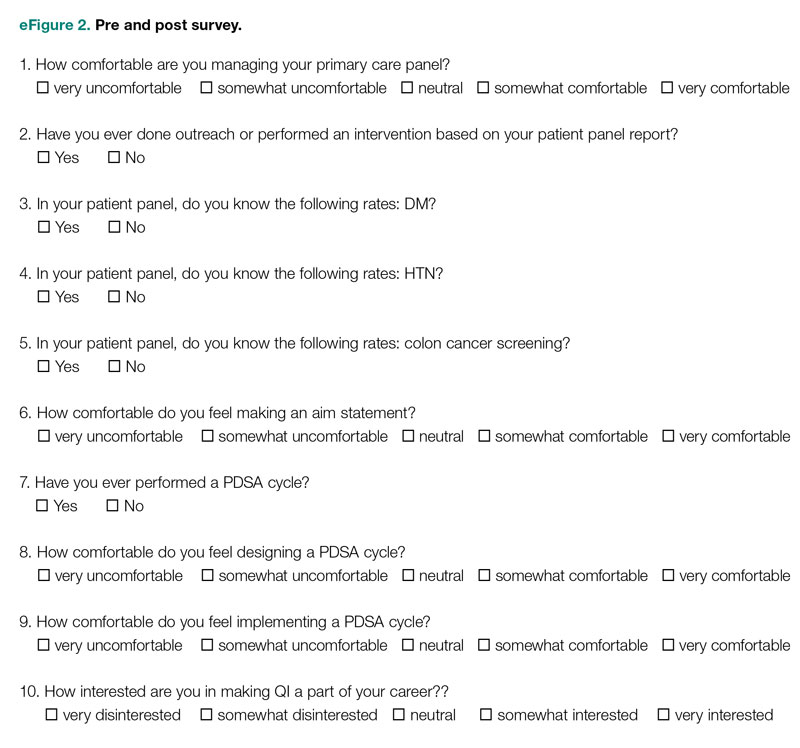

Residents were surveyed with a 10-item questionnaire before and after the intervention regarding their attitudes toward QI, understanding of QI principles, and familiarity with their patient panel data. Surveys were anonymous and distributed via the SurveyMonkey platform (see supplementary eFigure 2 online). Residents were asked whether they had ever performed a PDSA cycle, performed patient outreach, or performed an intervention, and whether they knew the rates of diabetes, HTN, and colorectal cancer screening in their patient panels. Questions rated on a 5-point Likert scale assessed comfort with panel management, developing an aim statement, and designing and implementing a PDSA cycle, as well as interest in pursuing QI as a career. For analysis, the comfort questions were dichotomized into “somewhat comfortable” and “very comfortable” vs “neutral,” “somewhat uncomfortable,” and “very uncomfortable.” Similarly, we dichotomized the question about interest in QI as a career into “somewhat interested” and “very interested” vs “neutral,” “somewhat disinterested,” and “very disinterested.” Because the surveys were anonymous, we could not pair pre- and postintervention responses; instead, we used a chi-square test to evaluate the association between survey timing (pre vs post intervention) and a positive vs negative response to each question.
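
Because the pre and post surveys were unpaired, each question reduces to a 2 × 2 table (survey wave × positive/negative response). The sketch below shows that dichotomization and an uncorrected Pearson chi-square test in plain Python. It assumes no continuity correction (the text does not say which chi-square variant SAS reported), and the counts in the usage lines are hypothetical, chosen only to be roughly 45% of 55 and 77% of 39 respondents.

```python
import math

# Dichotomization described in the Evaluation section: the two favorable
# Likert levels count as positive; neutral and both unfavorable levels as negative.
COMFORT_POSITIVE = {"somewhat comfortable", "very comfortable"}
INTEREST_POSITIVE = {"somewhat interested", "very interested"}


def dichotomize(responses, positive_labels):
    """Return (positive, negative) counts for one survey wave."""
    pos = sum(1 for r in responses if r in positive_labels)
    return pos, len(responses) - pos


def chi_square_2x2(pre, post):
    """Pearson chi-square without continuity correction for a 2 x 2 table.

    pre and post are (positive, negative) counts from two independent,
    unpaired survey waves. Returns (statistic, p value) with 1 degree of
    freedom. Raises ZeroDivisionError if any row or column total is zero.
    """
    a, b = pre
    c, d = post
    n = a + b + c + d
    stat = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # For 1 degree of freedom, P(X >= stat) = erfc(sqrt(stat / 2)).
    return stat, math.erfc(math.sqrt(stat / 2))


# Hypothetical counts for "Have you ever performed a PDSA cycle?"
pre_counts = (25, 30)   # illustrative: about 45% of 55 respondents
post_counts = (30, 9)   # illustrative: about 77% of 39 respondents
stat, p = chi_square_2x2(pre_counts, post_counts)  # stat ≈ 9.31, p ≈ 0.002
```

If SciPy is available, `scipy.stats.chi2_contingency` runs the same test on a 2 × 2 array, but note that it applies Yates’ continuity correction by default (`correction=True`), so its statistic will be smaller than the uncorrected one above.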

We also examined rates of HTN control and colorectal cancer screening in all 75 resident panels before and after the intervention, using a paired t-test to determine whether the mean within-panel change from pre to post intervention was significant. SAS 9.4 (SAS Institute Inc.) was used for all analyses. The study was granted exemption by the Tufts Medical Center Institutional Review Board. No funding was received for this study.
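
The panel-level comparison pairs each resident panel with itself, so the test operates on per-panel differences. A minimal standard-library sketch of the paired t statistic follows; the rates in the usage lines are hypothetical, and a p value would come from a t distribution with n − 1 degrees of freedom (for example, `scipy.stats.ttest_rel(post, pre)` returns the same statistic together with a two-sided p value).

```python
import math
import statistics


def paired_t(pre_rates, post_rates):
    """Paired t statistic for per-panel rates measured before and after.

    Position i in both lists must refer to the same resident panel.
    Returns (mean change, t statistic, degrees of freedom).
    """
    if len(pre_rates) != len(post_rates) or len(pre_rates) < 2:
        raise ValueError("need two equal-length lists covering at least 2 panels")
    diffs = [post - pre for pre, post in zip(pre_rates, post_rates)]
    mean_change = statistics.mean(diffs)
    sd_change = statistics.stdev(diffs)  # sample SD, n - 1 denominator
    # Divide by the standard error of the mean difference; this raises
    # ZeroDivisionError if every panel changed by exactly the same amount.
    t = mean_change / (sd_change / math.sqrt(len(diffs)))
    return mean_change, t, len(diffs) - 1


# Hypothetical screening rates for three panels (not study data).
pre = [0.30, 0.40, 0.50]
post = [0.35, 0.48, 0.52]
mean_change, t, df = paired_t(pre, post)
```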

Results

Respondents

Of the 75 residents, 55 (73%) completed the survey prior to the intervention, and 39 (52%) completed the survey after the intervention.

Panel Knowledge and Intervention

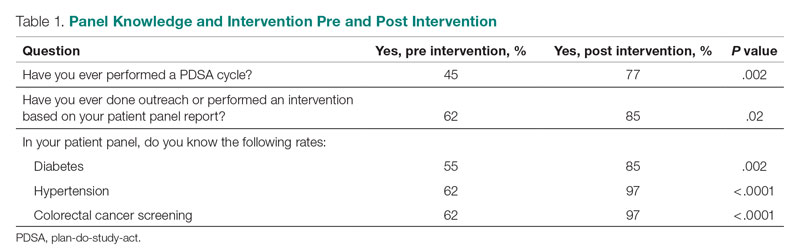

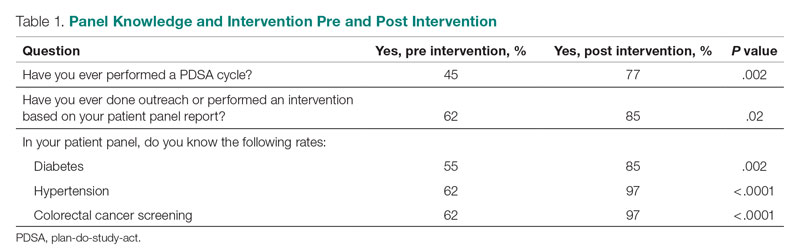

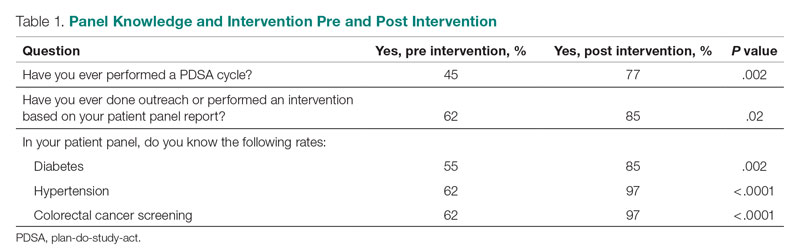

Prior to the intervention, 45% of residents had performed a PDSA cycle, compared with 77% post intervention, a significant increase (P = .002) (Table 1). Sixty-two percent of residents had performed outreach or an intervention based on their patient panel reports prior to the intervention, compared with 85% post intervention, also a significant increase (P = .02). The postintervention rate did not reach 100% because some residents either missed the initial workshop or did not follow through with their planned intervention. Common interventions included residents giving their coordinators a list of patients to call to schedule appointments, enlisting fellow team members (eg, pharmacists, social workers) for targeted patient outreach, or calling patients themselves to reestablish a connection.

In terms of knowledge of their patient panels, prior to the intervention, 55%, 62%, and 62% of residents knew the rates of patients in their panel with diabetes, HTN, and colorectal cancer screening, respectively. After the intervention, the residents’ knowledge of these rates increased significantly, to 85% for diabetes (P = .002), 97% for HTN (P < .0001), and 97% for colorectal cancer screening (P < .0001).

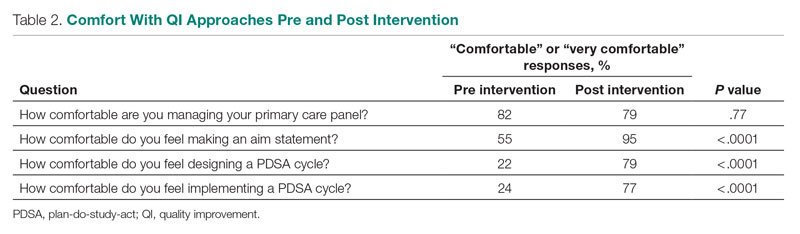

Comfort With QI Approaches

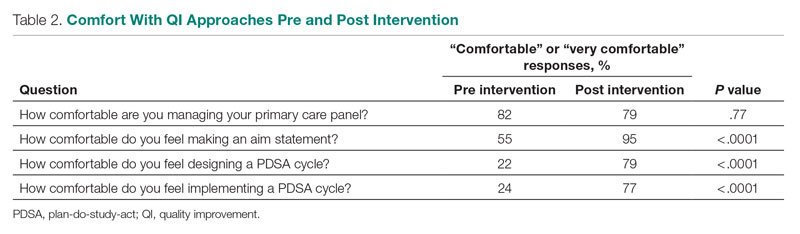

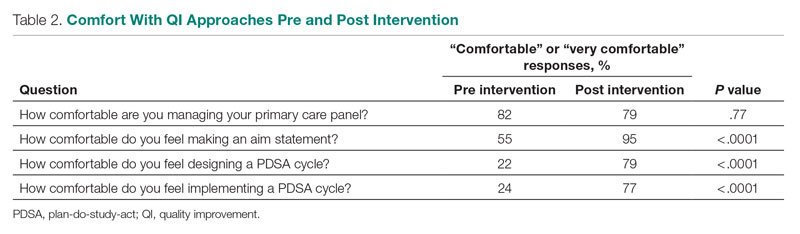

Prior to the intervention, 82% of residents were comfortable managing their primary care panel, which did not change significantly post intervention (Table 2). The residents’ comfort with designing an aim statement did significantly increase, from 55% to 95% (P < .0001). The residents also had a significant increase in comfort with both designing and implementing a PDSA cycle. Prior to the intervention, 22% felt comfortable designing a PDSA cycle, which increased to 79% (P < .0001) post intervention, and 24% felt comfortable implementing a PDSA cycle, which increased to 77% (P < .0001) post intervention.

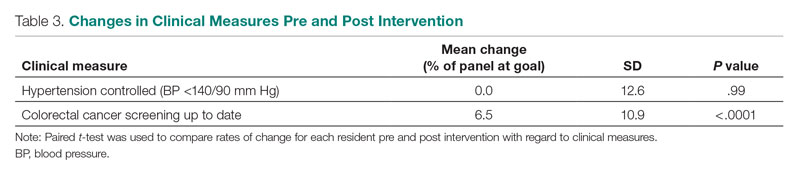

Patient Outcome Measures

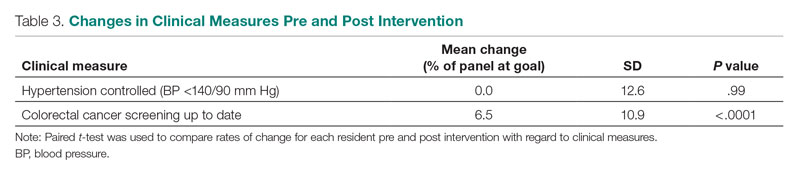

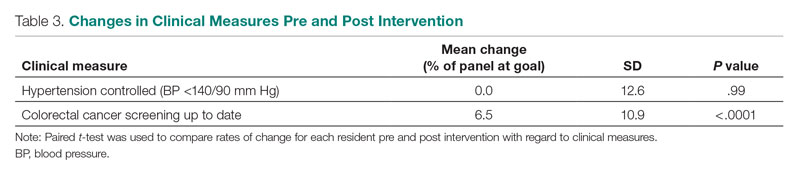

The rate of HTN control in the residents’ patient panels did not change significantly from pre to post intervention (Table 3). The proportion of residents’ patients who were up to date with colorectal cancer screening increased by 6.5% post intervention (P < .0001).

Interest in QI as a Career

As part of the survey, residents were asked how interested they were in making QI a part of their career. Fifty percent of residents indicated an interest in QI pre intervention, and 54% indicated an interest post intervention, which was not a significant difference (P = .72).

Discussion

In this study, we found that integration of a QI curriculum into a primary care rotation improved both residents’ knowledge of their patient panels and comfort with QI approaches, which translated to improvement in patient outcomes. Several previous studies have found improvements in resident self-assessment or knowledge after implementation of a QI curriculum.4-13 Liao et al implemented a longitudinal curriculum including both didactic and experiential components and found an improvement in both QI confidence and knowledge.3 Similarly, Duello et al8 found that a curriculum including both didactic lectures and QI projects improved subjective QI knowledge and comfort. Interestingly, Fok and Wong9 found that resident knowledge could be sustained post curriculum after completion of a QI project, suggesting that experiential learning may be helpful in maintaining knowledge.

Studies have also looked at providing performance data to residents. Hwang et al18 found that audit and feedback in the form of individual panel performance data compared with practice targets led to statistically significant improvement in cancer screening rates and composite quality score, suggesting considerable potential in providing residents with their data. Although the ACGME requires that residents receive data on their quality metrics, many residents interviewed during CLER visits reported limited access to data on their metrics and benchmarks.1,2

Though previous studies have individually examined teaching QI concepts, providing panel data, or targeting select metrics, our study was unique in that it assessed both self-reported resident outcomes and actual patient outcomes. In addition to increased knowledge of patient panels and comfort with QI approaches, we found a significant increase in colorectal cancer screening rates post intervention. We consider this finding particularly important given evidence that residents’ patients have worse outcomes on quality metrics than patients cared for by staff physicians.14,15 Because having a resident physician as a PCP has been associated with failure to meet quality measures, it is especially important to target quality improvement initiatives to this patient population to reduce disparities in care.

We found that residents had improved knowledge of their patient panels as a result of this initiative. Residents showed greater knowledge of their HTN and colorectal cancer screening rates than of their diabetes metrics. We suspect this is because residents receive multiple diabetes-related metrics, including process measures such as A1c testing and outcome measures such as A1c control, which may make it harder for them to determine exactly how they are doing with their patients with diabetes; HTN control and colorectal cancer screening, by contrast, each have only 1 associated metric. Interestingly, even though HTN and colorectal cancer screening were the 2 measures targeted in the study, residents’ knowledge of the diabetes rates in their panels also improved significantly. This suggests that receiving data alone may be valuable, and that a better baseline understanding of their panels may translate into better outcomes. We believe that our intervention was successful because it included both didactic and experiential components, as well as individual panel performance data.

Our study had several limitations. It was performed at a single institution with a small sample size. Our data analysis was limited because the anonymous survey design prevented us from pairing pre- and postintervention responses. Not all residents completed the postintervention survey, which may have biased the results in favor of high performers. Finally, residents’ knowledge of their patient panels was assessed by self-report.

This study required a 2-hour workshop every 3 weeks led by a faculty member trained in QI. Given the time the curriculum demands, it may be difficult to replicate at other institutions, especially where faculty with interest or training in QI are not available. Because residents’ knowledge of their patient panels increased after they received panel metrics, simply providing data with the goal of smaller, focused interventions may be easier to implement.

At our institution, we have since discontinued the longer 2-hour QI workshops designed to teach QI approaches more broadly. We continue to provide individualized panel data to all residents during their primary care rotations and conduct half-hour small group workshops with the interns that focus on drafting aim statements and planning interventions. All residents are required to submit worksheets at the end of their primary care blocks listing their current rates for each predetermined metric and laying out their aim statements and planned interventions. Residents also continue to receive feedback afterward from our faculty with QI expertise on their plans and on evidence of follow-through in the chart, with their preceptors copied on the feedback emails. Even without the larger QI workshop, this approach has continued to be successful and well received, and the improvement in colorectal cancer screening appears to have been sustained over several years. Over our study period, the colorectal cancer screening rate among resident patients rose from 34% to 43%, and during the 2021-2022 academic year, it rose further, from 46% to 50%.
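
Screening figures such as 34% to 43% can be read as absolute or relative gains. The short helper below, a sketch with a hypothetical function name, makes the arithmetic explicit for the two intervals reported in the text: 34% to 43% is a 9 percentage point (about 26% relative) increase, and 46% to 50% is a 4 percentage point (about 9% relative) increase.

```python
def rate_change(before_pct, after_pct):
    """Return (absolute change in percentage points, relative change in %)."""
    absolute = after_pct - before_pct
    relative = 100 * absolute / before_pct
    return absolute, relative


study_period = rate_change(34, 43)  # (9, about 26.5)
ay_2021_2022 = rate_change(46, 50)  # (4, about 8.7)
```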

Given that the resident clinic patient population is at higher risk overall, targeted outreach and quality improvement approaches must continue. Future research should examine which interventions (QI curriculum, provision of panel data, or required panel management interventions) translate into the greatest improvements in patient outcomes in this vulnerable population.

Conclusion

Our study showed that a dedicated QI curriculum for residents and access to quality metric data improved both resident knowledge and comfort with QI approaches. Beyond resident-centered outcomes, these gains also translated into improved patient outcomes, with a significant increase in colorectal cancer screening rates post intervention.

Corresponding author: Kinjalika Sathi, MD, 800 Washington St., Boston, MA 02111; ksathi@tuftsmedicalcenter.org

Disclosures: None reported.

1. Accreditation Council for Graduate Medical Education. ACGME Common Program Requirements (Residency). Approved June 13, 2021. Updated July 1, 2022. Accessed December 29, 2022. https://www.acgme.org/globalassets/pfassets/programrequirements/cprresidency_2022v3.pdf

2. Koh NJ, Wagner R, Newton RC, et al; on behalf of the CLER Evaluation Committee and the CLER Program. CLER National Report of Findings 2021. Accreditation Council for Graduate Medical Education; 2021. Accessed December 29, 2022. https://www.acgme.org/globalassets/pdfs/cler/2021clernationalreportoffindings.pdf

3. Liao JM, Co JP, Kachalia A. Providing educational content and context for training the next generation of physicians in quality improvement. Acad Med. 2015;90(9):1241-1245. doi:10.1097/ACM.0000000000000799

4. Johnson KM, Fiordellisi W, Kuperman E, et al. X + Y = time for QI: meaningful engagement of residents in quality improvement during the ambulatory block. J Grad Med Educ. 2018;10(3):316-324. doi:10.4300/JGME-D-17-00761.1

5. Kesari K, Ali S, Smith S. Integrating residents with institutional quality improvement teams. Med Educ. 2017;51(11):1173. doi:10.1111/medu.13431

6. Ogrinc G, Cohen ES, van Aalst R, et al. Clinical and educational outcomes of an integrated inpatient quality improvement curriculum for internal medicine residents. J Grad Med Educ. 2016;8(4):563-568. doi:10.4300/JGME-D-15-00412.1

7. Malayala SV, Qazi KJ, Samdani AJ, et al. A multidisciplinary performance improvement rotation in an internal medicine training program. Int J Med Educ. 2016;7:212-213. doi:10.5116/ijme.5765.0bda

8. Duello K, Louh I, Greig H, et al. Residents’ knowledge of quality improvement: the impact of using a group project curriculum. Postgrad Med J. 2015;91(1078):431-435. doi:10.1136/postgradmedj-2014-132886

9. Fok MC, Wong RY. Impact of a competency based curriculum on quality improvement among internal medicine residents. BMC Med Educ. 2014;14:252. doi:10.1186/s12909-014-0252-7

10. Wilper AP, Smith CS, Weppner W. Instituting systems-based practice and practice-based learning and improvement: a curriculum of inquiry. Med Educ Online. 2013;18:21612. doi:10.3402/meo.v18i0.21612

11. Weigel C, Suen W, Gupte G. Using lean methodology to teach quality improvement to internal medicine residents at a safety net hospital. Am J Med Qual. 2013;28(5):392-399. doi:10.1177/1062860612474062

12. Tomolo AM, Lawrence RH, Watts B, et al. Pilot study evaluating a practice-based learning and improvement curriculum focusing on the development of system-level quality improvement skills. J Grad Med Educ. 2011;3(1):49-58. doi:10.4300/JGME-D-10-00104.1

13. Djuricich AM, Ciccarelli M, Swigonski NL. A continuous quality improvement curriculum for residents: addressing core competency, improving systems. Acad Med. 2004;79(10 Suppl):S65-S67. doi:10.1097/00001888-200410001-00020

14. Essien UR, He W, Ray A, et al. Disparities in quality of primary care by resident and staff physicians: is there a conflict between training and equity? J Gen Intern Med. 2019;34(7):1184-1191. doi:10.1007/s11606-019-04960-5

15. Amat M, Norian E, Graham KL. Unmasking a vulnerable patient care process: a qualitative study describing the current state of resident continuity clinic in a nationwide cohort of internal medicine residency programs. Am J Med. 2022;135(6):783-786. doi:10.1016/j.amjmed.2022.02.007

16. Wong BM, Etchells EE, Kuper A, et al. Teaching quality improvement and patient safety to trainees: a systematic review. Acad Med. 2010;85(9):1425-1439. doi:10.1097/ACM.0b013e3181e2d0c6

17. Armstrong G, Headrick L, Madigosky W, et al. Designing education to improve care. Jt Comm J Qual Patient Saf. 2012;38:5-14. doi:10.1016/s1553-7250(12)38002-1

18. Hwang AS, Harding AS, Chang Y, et al. An audit and feedback intervention to improve internal medicine residents’ performance on ambulatory quality measures: a randomized controlled trial. Popul Health Manag. 2019;22(6):529-535. doi:10.1089/pop.2018.0217

19. Institute for Healthcare Improvement. Open school. The paper airplane factory. Accessed December 29, 2022. https://www.ihi.org/education/IHIOpenSchool/resources/Pages/Activities/PaperAirplaneFactory.aspx

ABSTRACT

Objective: To teach internal medicine residents quality improvement (QI) principles in an effort to improve resident knowledge and comfort with QI, as well as address quality care gaps in resident clinic primary care patient panels.

Design: A QI curriculum was implemented for all residents rotating through a primary care block over a 6-month period. Residents completed Institute for Healthcare Improvement (IHI) modules, participated in a QI workshop, and received panel data reports, ultimately completing a plan-do-study-act (PDSA) cycle to improve colorectal cancer screening and hypertension control.

Setting and participants: This project was undertaken at Tufts Medical Center Primary Care, Boston, Massachusetts, the primary care teaching practice for all 75 internal medicine residents at Tufts Medical Center. All internal medicine residents were included, with 55 (73%) of the 75 residents completing the presurvey, and 39 (52%) completing the postsurvey.

Measurements: We administered a 10-question pre- and postsurvey looking at resident attitudes toward and comfort with QI and familiarity with their panel data as well as measured rates of colorectal cancer screening and hypertension control in resident panels.

Results: There was an increase in the numbers of residents who performed a PDSA cycle (P = .002), completed outreach based on their panel data (P = .02), and felt comfortable in both creating aim statements and designing and implementing PDSA cycles (P < .0001). The residents’ knowledge of their panel data significantly increased. There was no significant improvement in hypertension control, but there was an increase in colorectal cancer screening rates (P < .0001).

Conclusion: Providing panel data and performing targeted QI interventions can improve resident comfort with QI, translating to improvement in patient outcomes.

Keywords: quality improvement, resident education, medical education, care gaps, quality metrics.

As quality improvement (QI) has become an integral part of clinical practice, residency training programs have continued to evolve in how best to teach QI. The Accreditation Council for Graduate Medical Education (ACGME) Common Program requirements mandate that core competencies in residency programs include practice-based learning and improvement and systems-based practice.1 Residents should receive education in QI, receive data on quality metrics and benchmarks related to their patient population, and participate in QI activities. The Clinical Learning Environment Review (CLER) program was established to provide feedback to institutions on 6 focused areas, including patient safety and health care quality. In visits to institutions across the United States, the CLER committees found that many residents had limited knowledge of QI concepts and limited access to data on quality metrics and benchmarks.2

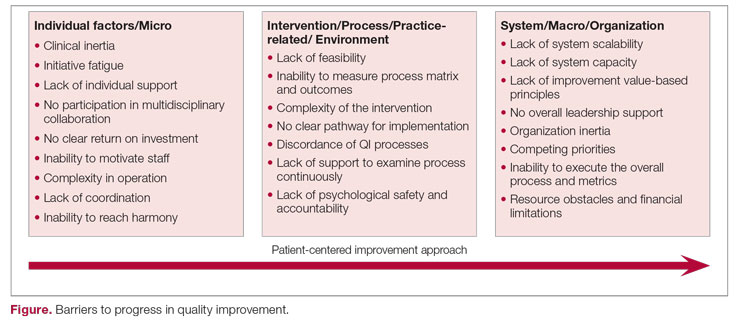

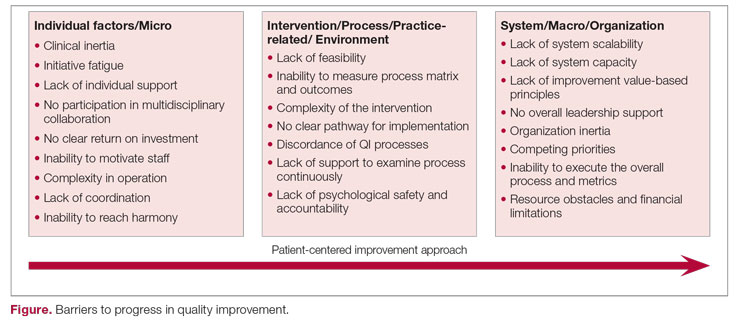

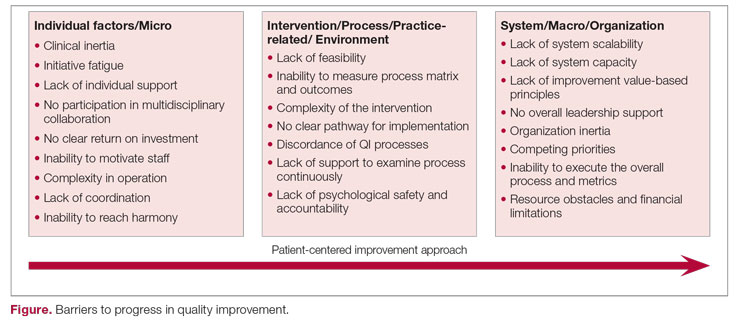

There are many barriers to implementing a QI curriculum in residency programs, and creating and maintaining successful strategies has proven challenging.3 Many QI curricula for internal medicine residents have been described in the literature, but the results of many of these studies focus on resident self-assessment of QI knowledge and numbers of projects rather than on patient outcomes.4-13 As there is some evidence suggesting that patients treated by residents have worse outcomes on ambulatory quality measures when compared with patients treated by staff physicians,14,15 it is important to also look at patient outcomes when evaluating a QI curriculum. Experts in education recommend the following to optimize learning: exposure to both didactic and experiential opportunities, connection to health system improvement efforts, and assessment of patient outcomes in addition to learner feedback.16,17 A study also found that providing panel data to residents could improve quality metrics.18

In this study, we sought to investigate the effects of a resident QI intervention during an ambulatory block on both residents’ self-assessments of QI knowledge and attitudes as well as on patient quality metrics.

Methods

Curriculum

We implemented this educational initiative at Tufts Medical Center Primary Care, Boston, Massachusetts, the primary care teaching practice for all 75 internal medicine residents at Tufts Medical Center. Co-located with the 415-bed academic medical center in downtown Boston, the practice serves more than 40,000 patients, approximately 7000 of whom are cared for by resident primary care physicians (PCPs). The internal medicine residents rotate through the primary care clinic as part of continuity clinic during ambulatory or elective blocks. In addition to continuity clinic, the residents have 2 dedicated 3-week primary care rotations during the course of an academic year. Primary care rotations consist of 5 clinic sessions a week as well as structured teaching sessions. Each resident inherits a panel of patients from an outgoing senior resident, with an average panel size of 96 patients per resident.

Prior to this study intervention, we did not do any formal QI teaching to our residents as part of their primary care curriculum, and previous panel management had focused more on chart reviews of patients whom residents perceived to be higher risk. Residents from all 3 years were included in the intervention. We taught a QI curriculum to our residents from January 2018 to June 2018 during the 3-week primary care rotation, which consisted of the following components:

- Institute for Healthcare Improvement (IHI) module QI 102 completed independently online.

- A 2-hour QI workshop led by 1 of 2 primary care faculty with backgrounds in QI, during which residents were taught basic principles of QI, including how to craft aim statements and design plan-do-study-act (PDSA) cycles, and participated in a hands-on QI activity designed to model rapid cycle improvement (the Paper Airplane Factory19).

- Distribution of individualized reports of residents’ patient panel data by email at the start of the primary care block that detailed patients’ overall rates of colorectal cancer screening and hypertension (HTN) control, along with the average resident panel rates and the average attending panel rates. The reports also included a list of all residents’ patients who were overdue for colorectal cancer screening or whose last blood pressure (BP) was uncontrolled (systolic BP ≥ 140 mm Hg or diastolic BP ≥ 90 mm Hg). These reports were originally designed by our practice’s QI team and run and exported in Microsoft Excel format monthly by our information technology (IT) administrator.

- Instruction on aim statements as a group, followed by the expectation that each resident create an individualized aim statement tailored to each resident’s patient panel rates, with the PDSA cycle to be implemented during the remainder of the primary care rotation, focusing on improvement of colorectal cancer screening and HTN control (see supplementary eFigure 1 online for the worksheet used for the workshop).

- Residents were held accountable for their interventions by various check-ins. At the end of the primary care block, residents were required to submit their completed worksheets showing the intervention they had undertaken and when it was performed. The 2 primary care attendings primarily responsible for QI education would review the resident’s work approximately 1 to 2 months after they submitted their worksheets describing their intervention. These attendings sent the residents personalized feedback based on whether the intervention had been completed or successful as evidenced by documentation in the chart, including direct patient outreach by phone, letter, or portal; outreach to the resident coordinator; scheduled follow-up appointment; or booking or completion of colorectal cancer screening. Along with this feedback, residents were also sent suggestions for next steps. Resident preceptors were copied on the email to facilitate reinforcement of the goals and plans. Finally, the resident preceptors also helped with accountability by going through the residents’ worksheets and patient panel metrics with the residents during biannual evaluations.

Evaluation

Residents were surveyed pre and post intervention with a 10-item questionnaire about their attitudes toward QI, understanding of QI principles, and familiarity with their patient panel data. Surveys were anonymous and distributed via the SurveyMonkey platform (see supplementary eFigure 2 online). Residents were asked whether they had ever performed a PDSA cycle, performed patient outreach, or performed an intervention, and whether they knew the rates of diabetes, HTN, and colorectal cancer screening in their patient panels. Questions rated on a 5-point Likert scale assessed comfort with panel management, developing an aim statement, and designing and implementing a PDSA cycle, as well as interest in pursuing QI as a career. For analysis, the comfort questions were dichotomized into “somewhat comfortable” and “very comfortable” vs “neutral,” “somewhat uncomfortable,” and “very uncomfortable.” Similarly, we dichotomized the question about interest in QI as a career into “somewhat interested” and “very interested” vs “neutral,” “somewhat disinterested,” and “very disinterested.” Because the surveys were anonymous, we could not pair pre- and postintervention responses; we therefore treated the 2 survey rounds as independent samples and used a chi-square test to evaluate whether the proportion of positive responses to each question differed between the pre- and postintervention surveys.
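The dichotomization and chi-square comparison described above can be sketched in standard-library Python. This is not the authors’ SAS code; it assumes the uncorrected Pearson statistic for a 2 × 2 table and uses the identity that, for 1 degree of freedom, the upper-tail probability is erfc(√(x/2)). The counts in the usage note are approximate, back-calculated from the reported percentages (45% of 55 and 77% of 39 residents reporting a PDSA cycle), and item-level nonresponse could make the true counts differ.

```python
import math
from typing import Iterable, Set, Tuple

COMFORT_POSITIVE = {"somewhat comfortable", "very comfortable"}
INTEREST_POSITIVE = {"somewhat interested", "very interested"}


def dichotomize(responses: Iterable[str], positive: Set[str]) -> Tuple[int, int]:
    """Collapse 5-point Likert answers into (positive, neutral-or-negative) counts."""
    answers = list(responses)
    pos = sum(1 for r in answers if r in positive)
    return pos, len(answers) - pos


def chi_square_2x2(pre: Tuple[int, int], post: Tuple[int, int]) -> Tuple[float, float]:
    """Pearson chi-square (no continuity correction) for a 2x2 table.

    Rows are the pre- and postintervention surveys, treated as independent
    samples because anonymous responses could not be paired; columns are
    (positive, other). Returns (statistic, p_value) with 1 degree of freedom.
    """
    table = [pre, post]
    row_totals = [sum(row) for row in table]
    col_totals = [pre[j] + post[j] for j in range(2)]
    if 0 in row_totals or 0 in col_totals:
        raise ValueError("every row and column total must be positive")
    n = sum(row_totals)
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            stat += (table[i][j] - expected) ** 2 / expected
    # Upper tail of chi-square with 1 df: P(X > x) = erfc(sqrt(x / 2)).
    return stat, math.erfc(math.sqrt(stat / 2))
```

With those approximate counts, `chi_square_2x2((25, 30), (30, 9))` gives a statistic of about 9.31 and P ≈ .002, in line with the value reported for PDSA cycles in the Results. Each survey question would be dichotomized and tested separately in the same way.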

We also examined rates of HTN control and colorectal cancer screening in all 75 resident panels pre and post intervention and used a paired t-test to determine whether the mean change from pre to post intervention was significant. SAS 9.4 (SAS Institute Inc.) was used for all analyses. The Tufts Medical Center Institutional Review Board granted an exemption for this study, and the study received no funding.
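The paired comparison of panel-level rates can be illustrated the same way. The sketch below assumes per-panel rates expressed as percentages, with one entry per resident panel listed in the same order before and after the intervention; it computes the mean change and the paired t statistic with n - 1 degrees of freedom. It stops short of the p-value, which requires the Student t distribution; if SciPy is available, `scipy.stats.ttest_rel(post, pre)` returns both the statistic and a two-sided p-value. The 4-panel data in the usage note are invented for illustration.

```python
import math
import statistics
from typing import Sequence, Tuple


def paired_t(pre: Sequence[float], post: Sequence[float]) -> Tuple[float, float, int]:
    """Paired t statistic for per-panel rates measured before and after.

    Index i in both sequences must refer to the same resident panel.
    Returns (mean_change, t_statistic, degrees_of_freedom).
    """
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length sequences with at least 2 panels")
    diffs = [b - a for a, b in zip(pre, post)]  # post minus pre for each panel
    n = len(diffs)
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)  # sample SD of the differences (n - 1 denominator)
    if sd_d == 0:
        raise ValueError("differences have zero variance; t is undefined")
    t = mean_d / (sd_d / math.sqrt(n))  # mean change divided by its standard error
    return mean_d, t, n - 1
```

For example, `paired_t([30, 40, 50, 35], [36, 44, 58, 38])` returns a mean change of 5.25 percentage points, t ≈ 4.74, and 3 degrees of freedom. Pairing by panel removes between-panel variation in baseline rates, which is why this test fits the panel data even though the anonymous surveys could not be paired.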

Results

Respondents

Of the 75 residents, 55 (73%) completed the survey prior to the intervention, and 39 (52%) completed the survey after the intervention.

Panel Knowledge and Intervention

Prior to the intervention, 45% of residents had performed a PDSA cycle, compared with 77% post intervention, a significant increase (P = .002) (Table 1). Sixty-two percent of residents had performed outreach or an intervention based on their patient panel reports prior to the intervention, compared with 85% post intervention, also a significant increase (P = .02). The postintervention rate did not reach 100% because some residents either missed the initial workshop or did not follow through with their planned intervention. Common interventions included residents giving their coordinators a list of patients to call to schedule appointments, enlisting other team members (eg, pharmacists, social workers) for targeted patient outreach, or calling patients themselves to reestablish a connection.

In terms of knowledge of their patient panels, prior to the intervention, 55%, 62%, and 62% of residents knew the rates of patients in their panel with diabetes, HTN, and colorectal cancer screening, respectively. After the intervention, the residents’ knowledge of these rates increased significantly, to 85% for diabetes (P = .002), 97% for HTN (P < .0001), and 97% for colorectal cancer screening (P < .0001).

Comfort With QI Approaches

Prior to the intervention, 82% of residents were comfortable managing their primary care panel, which did not change significantly post intervention (Table 2). The residents’ comfort with designing an aim statement did significantly increase, from 55% to 95% (P < .0001). The residents also had a significant increase in comfort with both designing and implementing a PDSA cycle. Prior to the intervention, 22% felt comfortable designing a PDSA cycle, which increased to 79% (P < .0001) post intervention, and 24% felt comfortable implementing a PDSA cycle, which increased to 77% (P < .0001) post intervention.

Patient Outcome Measures

The rate of HTN control in the residents’ patient panels did not change significantly from pre to post intervention (Table 3). The rate of resident patients who were up to date with colorectal cancer screening increased by 6.5% post intervention (P < .0001).

Interest in QI as a Career

As part of the survey, residents were asked how interested they were in making QI a part of their career. Fifty percent of residents indicated an interest in QI pre intervention, and 54% indicated an interest post intervention, which was not a significant difference (P = .72).

Discussion

In this study, we found that integrating a QI curriculum into a primary care rotation improved both residents’ knowledge of their patient panels and their comfort with QI approaches, and this was accompanied by an improvement in patient outcomes, specifically colorectal cancer screening rates. Several previous studies have found improvements in resident self-assessment or knowledge after implementation of a QI curriculum.4-13 Liao et al3 implemented a longitudinal curriculum including both didactic and experiential components and found an improvement in both QI confidence and knowledge. Similarly, Duello et al8 found that a curriculum including both didactic lectures and QI projects improved subjective QI knowledge and comfort. Interestingly, Fok and Wong9 found that resident knowledge could be sustained after the curriculum when residents completed a QI project, suggesting that experiential learning may help maintain knowledge.

Studies have also examined providing performance data to residents. Hwang et al18 found that providing audit and feedback in the form of individual panel performance data, compared against practice targets, led to statistically significant improvements in cancer screening rates and a composite quality score, suggesting considerable potential in giving residents their own data. Although the ACGME requires that residents receive data on their quality metrics, many residents interviewed during CLER visits reported limited access to data on their metrics and benchmarks.1,2

Though previous studies have individually looked at teaching QI concepts, providing panel data, or targeting select metrics, our study was unique in that it combined these elements and reviewed both self-reported resident outcomes and actual patient outcomes. In addition to finding increased knowledge of patient panels and comfort with QI approaches, we found a significant increase in colorectal cancer screening rates post intervention. This finding is particularly important given some evidence that residents’ patients have worse outcomes on quality metrics than patients cared for by staff physicians.14,15 Because having a resident physician as a PCP has been associated with failing to meet quality measures, it is especially important to target quality improvement initiatives to this patient population to reduce disparities in care.

We found that residents had improved knowledge of their patient panels as a result of this initiative. More residents knew their HTN and colorectal cancer screening rates than knew their diabetes metrics. We suspect this is because residents receive multiple diabetes-related metrics, including process measures such as A1c testing and outcome measures such as A1c control, which may make it harder for them to determine exactly how they are doing with their patients with diabetes, whereas HTN control and colorectal cancer screening each have only 1 associated metric. Interestingly, even though HTN and colorectal cancer screening were the 2 measures targeted in the study, residents’ knowledge of the rates of diabetes in their panels also improved significantly. This suggests that simply receiving data is valuable, and that a better baseline understanding of their panels may translate into better outcomes. We believe that our intervention was successful because it included both a didactic and an experiential component, as well as the use of individual panel performance data.

There were several limitations to our study. It was performed at a single institution with a small sample size. Because the survey was anonymous, we could not pair pre- and postintervention responses, which limited our analysis. Not all residents completed the postintervention survey, which may have biased the results in favor of high performers. Finally, the questions about residents’ knowledge of their patient panels relied on self-report.

This study required a 2-hour workshop every 3 weeks led by a faculty member trained in QI. Given the time the curriculum requires, it may be difficult to replicate at other institutions, especially where faculty with interest or training in QI are not available. Given our finding that residents’ knowledge of their patient panels increased after they received panel metrics, simply providing data with the goal of smaller, focused interventions may be easier to implement. At our institution, we discontinued the longer 2-hour QI workshops designed to teach QI approaches more broadly. We continue to provide individualized panel data to all residents during their primary care rotations and conduct half-hour small-group workshops with the interns that focus on drafting aim statements and planning interventions. All residents are required to submit worksheets at the end of their primary care blocks listing their current rate for each predetermined metric and laying out their aim statements and planned interventions. Afterward, faculty with expertise in QI continue to give residents feedback on their plans and on evidence of follow-through in the chart, with preceptors copied on the feedback emails. Even without the larger QI workshop, this approach has remained successful and well received, and the improvement in colorectal cancer screening appears to have been sustained over several years: by the end of our study period, the colorectal cancer screening rate among resident patients had risen from 34% to 43%, and during the 2021-2022 academic year it rose further, from 46% to 50%.

Given that the resident clinic patient population is at higher risk overall, targeted outreach and other approaches to improve quality must continue. Future research should examine which interventions (a QI curriculum, provision of panel data, or required panel management interventions) translate to the greatest improvements in patient outcomes in this vulnerable population.

Conclusion

Our study showed that a dedicated QI curriculum for residents, combined with access to quality metric data, improved both resident knowledge and comfort with QI approaches. Beyond these resident-centered outcomes, the intervention was also associated with improved patient outcomes, with a significant increase in colorectal cancer screening rates post intervention.

Corresponding author: Kinjalika Sathi, MD, 800 Washington St., Boston, MA 02111; ksathi@tuftsmedicalcenter.org

Disclosures: None reported.

1. Accreditation Council for Graduate Medical Education. ACGME Common Program Requirements (Residency). Approved June 13, 2021. Updated July 1, 2022. Accessed December 29, 2022. https://www.acgme.org/globalassets/pfassets/programrequirements/cprresidency_2022v3.pdf

2. Koh NJ, Wagner R, Newton RC, et al; on behalf of the CLER Evaluation Committee and the CLER Program. CLER National Report of Findings 2021. Accreditation Council for Graduate Medical Education; 2021. Accessed December 29, 2022. https://www.acgme.org/globalassets/pdfs/cler/2021clernationalreportoffindings.pdf

3. Liao JM, Co JP, Kachalia A. Providing educational content and context for training the next generation of physicians in quality improvement. Acad Med. 2015;90(9):1241-1245. doi:10.1097/ACM.0000000000000799

4. Johnson KM, Fiordellisi W, Kuperman E, et al. X + Y = time for QI: meaningful engagement of residents in quality improvement during the ambulatory block. J Grad Med Educ. 2018;10(3):316-324. doi:10.4300/JGME-D-17-00761.1

5. Kesari K, Ali S, Smith S. Integrating residents with institutional quality improvement teams. Med Educ. 2017;51(11):1173. doi:10.1111/medu.13431

6. Ogrinc G, Cohen ES, van Aalst R, et al. Clinical and educational outcomes of an integrated inpatient quality improvement curriculum for internal medicine residents. J Grad Med Educ. 2016;8(4):563-568. doi:10.4300/JGME-D-15-00412.1

7. Malayala SV, Qazi KJ, Samdani AJ, et al. A multidisciplinary performance improvement rotation in an internal medicine training program. Int J Med Educ. 2016;7:212-213. doi:10.5116/ijme.5765.0bda

8. Duello K, Louh I, Greig H, et al. Residents’ knowledge of quality improvement: the impact of using a group project curriculum. Postgrad Med J. 2015;91(1078):431-435. doi:10.1136/postgradmedj-2014-132886

9. Fok MC, Wong RY. Impact of a competency based curriculum on quality improvement among internal medicine residents. BMC Med Educ. 2014;14:252. doi:10.1186/s12909-014-0252-7

10. Wilper AP, Smith CS, Weppner W. Instituting systems-based practice and practice-based learning and improvement: a curriculum of inquiry. Med Educ Online. 2013;18:21612. doi:10.3402/meo.v18i0.21612

11. Weigel C, Suen W, Gupte G. Using lean methodology to teach quality improvement to internal medicine residents at a safety net hospital. Am J Med Qual. 2013;28(5):392-399. doi:10.1177/1062860612474062

12. Tomolo AM, Lawrence RH, Watts B, et al. Pilot study evaluating a practice-based learning and improvement curriculum focusing on the development of system-level quality improvement skills. J Grad Med Educ. 2011;3(1):49-58. doi:10.4300/JGME-D-10-00104.1

13. Djuricich AM, Ciccarelli M, Swigonski NL. A continuous quality improvement curriculum for residents: addressing core competency, improving systems. Acad Med. 2004;79(10 Suppl):S65-S67. doi:10.1097/00001888-200410001-00020

14. Essien UR, He W, Ray A, et al. Disparities in quality of primary care by resident and staff physicians: is there a conflict between training and equity? J Gen Intern Med. 2019;34(7):1184-1191. doi:10.1007/s11606-019-04960-5

15. Amat M, Norian E, Graham KL. Unmasking a vulnerable patient care process: a qualitative study describing the current state of resident continuity clinic in a nationwide cohort of internal medicine residency programs. Am J Med. 2022;135(6):783-786. doi:10.1016/j.amjmed.2022.02.007

16. Wong BM, Etchells EE, Kuper A, et al. Teaching quality improvement and patient safety to trainees: a systematic review. Acad Med. 2010;85(9):1425-1439. doi:10.1097/ACM.0b013e3181e2d0c6

17. Armstrong G, Headrick L, Madigosky W, et al. Designing education to improve care. Jt Comm J Qual Patient Saf. 2012;38:5-14. doi:10.1016/s1553-7250(12)38002-1

18. Hwang AS, Harding AS, Chang Y, et al. An audit and feedback intervention to improve internal medicine residents’ performance on ambulatory quality measures: a randomized controlled trial. Popul Health Manag. 2019;22(6):529-535. doi:10.1089/pop.2018.0217

19. Institute for Healthcare Improvement. Open school. The paper airplane factory. Accessed December 29, 2022. https://www.ihi.org/education/IHIOpenSchool/resources/Pages/Activities/PaperAirplaneFactory.aspx

Diagnostic Errors in Hospitalized Patients

Abstract

Diagnostic errors in hospitalized patients are a leading cause of preventable morbidity and mortality. Significant challenges in defining and measuring diagnostic errors and underlying process failure points have led to considerable variability in reported rates of diagnostic errors and adverse outcomes. In this article, we explore the diagnostic process and its discrete components, emphasizing the centrality of the patient in decision-making as well as the continuous nature of the process. We review the incidence of diagnostic errors in hospitalized patients and different methodological approaches that have been used to arrive at these estimates. We discuss different but interdependent provider- and system-related process-failure points that lead to diagnostic errors. We examine specific challenges related to measurement of diagnostic errors and describe traditional and novel approaches that are being used to obtain the most precise estimates. Finally, we examine various patient-, provider-, and organizational-level interventions that have been proposed to improve diagnostic safety in hospitalized patients.

Keywords: diagnostic error, hospital medicine, patient safety.

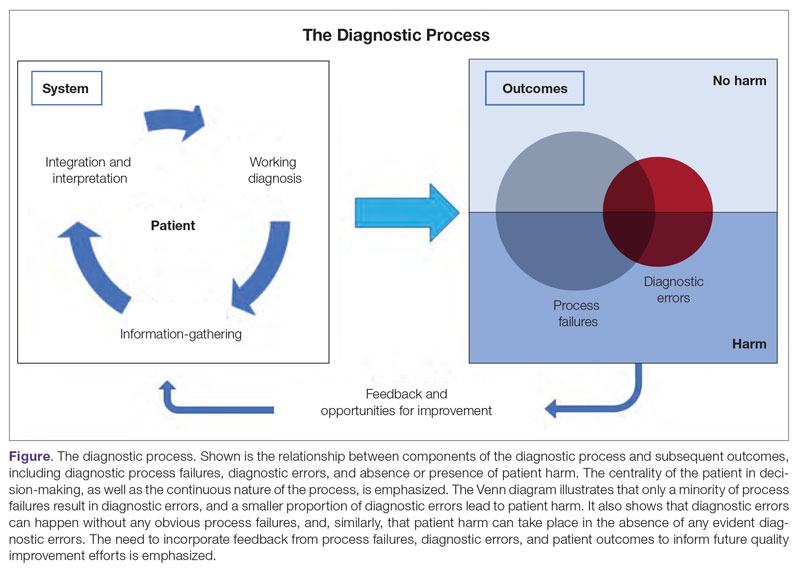

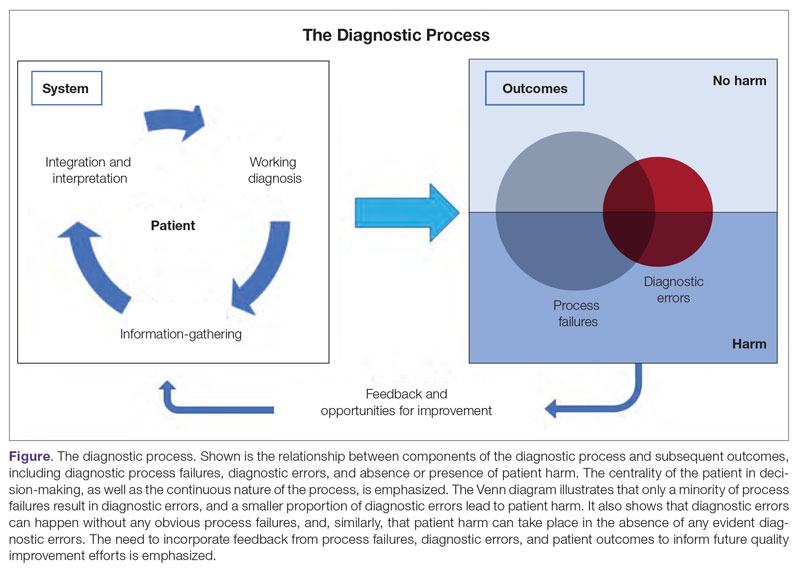

Diagnosis is defined as a “pre-existing set of categories agreed upon by the medical profession to designate a specific condition.”1 The diagnostic process involves obtaining a clinical history, performing a physical examination, conducting diagnostic testing, and consulting with other clinical providers to gather data that are relevant to understanding the underlying disease processes. This exercise involves generating hypotheses and updating prior probabilities as more information and evidence become available. Throughout this process of information gathering, integration, and interpretation, there is an ongoing assessment of whether sufficient and necessary knowledge has been obtained to make an accurate diagnosis and provide appropriate treatment.2
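The step of "updating prior probabilities as more information and evidence become available" is often formalized with Bayes' theorem in odds form: posterior odds = prior odds × likelihood ratio. The sketch below is illustrative only. The helper names (`probability_to_odds`, `odds_to_probability`, `update_probability`) and the example pretest probability and likelihood ratios are hypothetical values chosen for demonstration. They are not drawn from this article or any cited study.

```python
def probability_to_odds(p: float) -> float:
    """Convert a probability strictly between 0 and 1 to odds."""
    if not 0.0 < p < 1.0:
        raise ValueError("probability must be strictly between 0 and 1")
    return p / (1.0 - p)


def odds_to_probability(odds: float) -> float:
    """Convert non-negative odds back to a probability."""
    return odds / (1.0 + odds)


def update_probability(pretest_p: float, likelihood_ratio: float) -> float:
    """Hypothetical helper: post-test probability after one finding.

    Applies Bayes' theorem in odds form: posterior odds = prior odds * LR.
    """
    if likelihood_ratio < 0:
        raise ValueError("likelihood ratio must be non-negative")
    return odds_to_probability(probability_to_odds(pretest_p) * likelihood_ratio)


if __name__ == "__main__":
    # Hypothetical example: 20% pretest probability, then a positive finding
    # with LR = 6, then a second finding with LR = 0.5 (assumed independent).
    p = 0.20
    for lr in (6.0, 0.5):
        p = update_probability(p, lr)
        print(f"after LR {lr}: {p:.3f}")  # prints 0.600, then 0.429
```

Chaining updates this way assumes the findings are conditionally independent given the diagnosis. Correlated findings, such as two tests that measure the same abnormality, would overstate how far the probability should move.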

Diagnostic error is defined as a missed opportunity to make a timely diagnosis as part of this iterative process, including failure to communicate the diagnosis to the patient in a timely manner.3 It can be categorized as a missed, delayed, or incorrect diagnosis based on the evidence available at the time. Establishing the correct diagnosis has important implications. A timely and precise diagnosis gives the patient the highest probability of a positive health outcome, one that reflects an appropriate understanding of the underlying disease processes and is consistent with the patient’s overall goals of care.3 When diagnostic errors occur, they can cause patient harm. Adverse events due to medical errors, including diagnostic errors, are estimated to be the third leading cause of death in the United States.4 Most people will experience at least 1 diagnostic error in their lifetime. In the 2015 National Academy of Medicine report Improving Diagnosis in Health Care, diagnostic errors were identified as a major hazard as well as an opportunity to improve patient outcomes.2

Diagnostic errors during hospitalizations are especially concerning, as they are more likely to be associated with a wider spectrum of harm, including permanent disability and death. These errors have become even more relevant for hospital medicine physicians and other clinical providers, who face increasing cognitive and administrative workloads, rising dissatisfaction and burnout, and unique obstacles such as nighttime scheduling.5

Incidence of Diagnostic Errors in Hospitalized Patients

Several methodological approaches have been used to estimate the incidence of diagnostic errors in hospitalized patients. These include retrospective reviews of samples of all hospital admissions, evaluations of selected adverse outcomes (including autopsy studies), patient and provider surveys, and analyses of malpractice claims. Laboratory testing audits and secondary reviews in other diagnostic subspecialties (eg, radiology, pathology, and microbiology) are also essential to improving diagnostic performance in these specialized fields, which in turn affects overall hospital diagnostic error rates.6-8 Each of these approaches offers distinct insight into how much of the potential harm, ranging from temporary impairment to permanent disability and death, is attributable to different failure points in the diagnostic process.

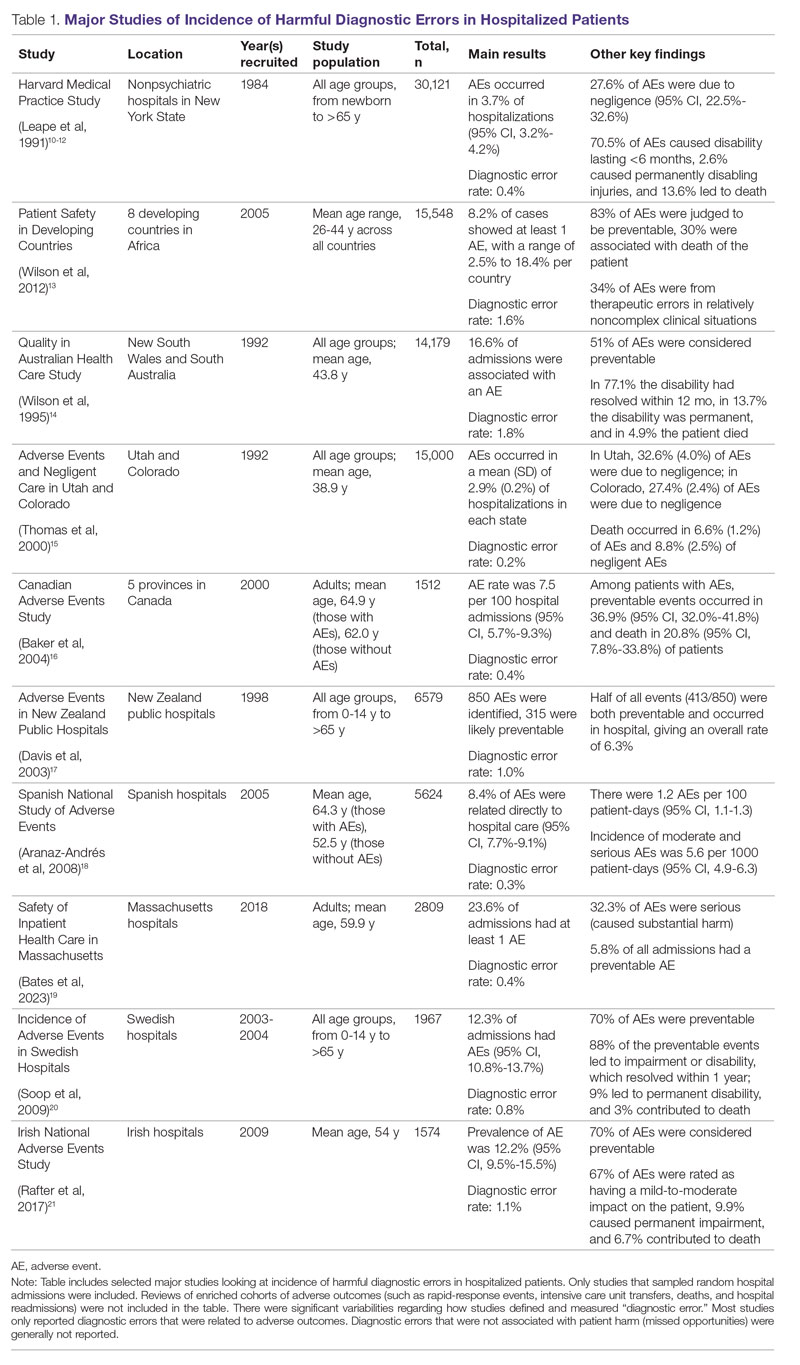

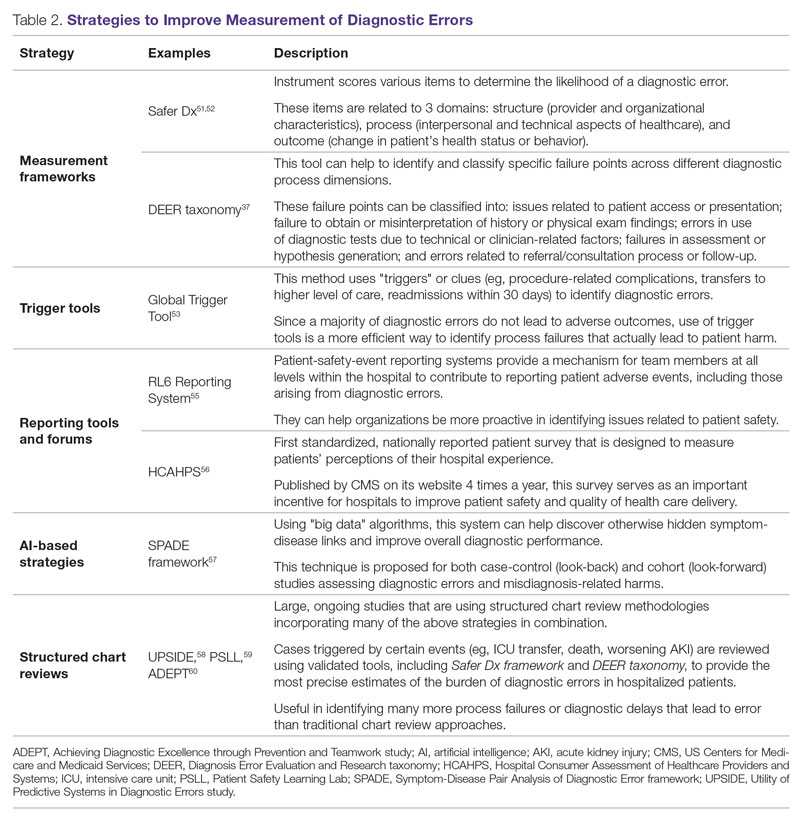

Large retrospective chart reviews of random hospital admissions remain the most accurate way to determine the overall incidence of diagnostic errors in hospitalized patients.9 The Harvard Medical Practice Study, published in 1991, laid the groundwork for measuring the incidence of adverse events in hospitalized patients and assessing their relation to medical error, negligence, and disability. In a review of 30,121 randomly selected records from 51 randomly selected acute care hospitals in New York State, the study found that adverse events occurred in 3.7% of hospitalizations, that diagnostic errors accounted for 13.8% of these events, and that these errors were likely attributable to negligence in 74.7% of cases. The study not only outlined individual-level process failures but also drew attention to some of the systemic causes, setting the agenda for quality improvement research in hospital-based care for years to come.10-12 A recent systematic review and meta-analysis of 22 hospital admission studies found a pooled rate of 0.7% (95% CI, 0.5%-1.1%) for harmful diagnostic errors.9 It found significant variation in the rates of adverse events and diagnostic errors and in the range of diagnoses that were missed. This variation was attributed primarily to differences in the pretest probability of detecting diagnostic errors in the specific cohorts studied and to heterogeneity in study definitions and methodologies, especially in how “diagnostic error” was defined and measured. The analysis, however, did not account for diagnostic errors that were not associated with patient harm (missed opportunities); therefore, it likely substantially underestimated the true incidence of diagnostic errors in these study populations. Table 1 summarizes some of the key studies that have examined the incidence of harmful diagnostic errors in hospitalized patients.9-21