Does Extended Postop Follow-Up Improve Survival in Gastric Cancer?

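TOPLINE:

Among patients with gastric cancer who were disease free 5 years after gastrectomy, regular surveillance beyond 5 years was associated with better overall and postrecurrence survival.
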
METHODOLOGY:

- Currently, postgastrectomy cancer surveillance typically lasts 5 years, although some centers now monitor patients beyond this point.

- To investigate the potential benefit of extended surveillance, researchers used Korean National Health Insurance claims data to identify 40,468 patients with gastric cancer who were disease free 5 years after gastrectomy — 14,294 received extended regular follow-up visits and 26,174 did not.

- The extended regular follow-up group was defined as having endoscopy or abdominopelvic CT between 2 months and 2 years before diagnosis of late recurrence or gastric remnant cancer and having two or more examinations between 5.5 and 8.5 years after gastrectomy. Late recurrence was defined as recurrence diagnosed more than 5 years after gastrectomy.

- Researchers used Cox proportional hazards regression to evaluate the independent association of extended regular follow-up with overall and postrecurrence survival.

TAKEAWAY:

- Among patients who were disease free at 5 years, the overall incidence of late recurrence or gastric remnant cancer was 7.8%: 4.0% between 5 and 10 years after gastrectomy (1610 of 40,468 patients) and 9.4% after 10 years (1528 of 16,287 patients).

- Regular follow-up beyond 5 years was associated with a significant reduction in overall mortality, from 49.4% to 36.9% at 15 years (P < .001). Survival after late recurrence or gastric remnant cancer was also significantly better with extended regular follow-up: the 5-year postrecurrence survival rate was 71.1% with it vs 32.7% without it (P < .001).

- Combining endoscopy with abdominopelvic CT was associated with the highest 5-year postrecurrence survival rate (74.5%), compared with endoscopy alone (54.5%) or CT alone (47.1%).

- An interval of more than 2 years between the previous endoscopy or abdominopelvic CT and the diagnosis of late recurrence or gastric remnant cancer was associated with significantly worse postrecurrence survival (hazard ratio [HR], 1.72 for endoscopy; HR, 1.48 for abdominopelvic CT).
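
As a quick arithmetic check (not part of the study itself), the percentages above can be reproduced from the reported counts. This minimal Python sketch assumes that the 7.8% overall figure pools both event counts over the full 40,468-patient cohort, which matches the reported value but is not stated in the summary. The mortality and survival contrasts are crude differences between the reported rates, not the study's Cox-adjusted estimates.

```python
# Counts and rates as reported in the TAKEAWAY bullets above.
EVENTS_5_TO_10_YEARS = 1610   # late recurrence or remnant cancer, years 5-10
COHORT_AT_5_YEARS = 40_468    # patients disease free 5 years after gastrectomy
EVENTS_AFTER_10_YEARS = 1528  # late recurrence or remnant cancer after year 10
COHORT_AT_10_YEARS = 16_287   # denominator reported for the after-10-years figure


def pct(numerator: float, denominator: float) -> float:
    """Return numerator/denominator as a percentage rounded to one decimal place."""
    return round(100 * numerator / denominator, 1)


incidence_5_to_10 = pct(EVENTS_5_TO_10_YEARS, COHORT_AT_5_YEARS)      # 4.0
incidence_after_10 = pct(EVENTS_AFTER_10_YEARS, COHORT_AT_10_YEARS)   # 9.4
# Assumption: the overall figure pools both event counts over the full cohort.
incidence_overall = pct(EVENTS_5_TO_10_YEARS + EVENTS_AFTER_10_YEARS, COHORT_AT_5_YEARS)  # 7.8

# Crude (unadjusted) contrasts of the reported rates, not Cox-model estimates.
mortality_15y_without, mortality_15y_with = 49.4, 36.9
absolute_drop = round(mortality_15y_without - mortality_15y_with, 1)   # 12.5 percentage points
relative_drop = round(100 * absolute_drop / mortality_15y_without, 1)  # 25.3 percent
postrecurrence_gain = round(71.1 - 32.7, 1)                            # 38.4 percentage points

print(incidence_5_to_10, incidence_after_10, incidence_overall)
print(absolute_drop, relative_drop, postrecurrence_gain)
```

Read this way, the 15-year mortality figures correspond to a crude absolute difference of about 12.5 percentage points, or roughly a one-quarter relative reduction, before any adjustment for confounding.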

IN PRACTICE:

“These findings suggest that extended regular follow-up after 5 years post gastrectomy should be implemented clinically and that current practice and value of follow-up protocols in postoperative care of patients with gastric cancer be reconsidered,” the authors concluded.

The authors of an accompanying commentary cautioned that, while the study “successfully establishes groundwork for extending surveillance of gastric cancer in high-risk populations, more work is needed to strategically identify those who would benefit most from extended surveillance.”

SOURCE:

The study, with first author Ju-Hee Lee, MD, PhD, Department of Surgery, Hanyang University College of Medicine, Seoul, South Korea, and accompanying commentary were published online on June 18 in JAMA Surgery.

LIMITATIONS:

Recurrent cancer and gastric remnant cancer could not be distinguished from each other because clinical records were not analyzed. The claims database lacked detailed clinical information on individual patients, including cancer stages, and a separate analysis of tumor markers could not be performed.

DISCLOSURES:

The study was funded by a grant from the Korean Gastric Cancer Association. The study authors and commentary authors reported no conflicts of interest.

A version of this article appeared on Medscape.com.

New Vitamin D Recs: Testing, Supplementing, Dosing

This transcript has been edited for clarity.

I’m Dr. Neil Skolnik, and today I’m going to talk about the Endocrine Society Guideline on Vitamin D. Questions about whom to test for vitamin D, when to test, and when to prescribe it come up frequently. There have been a lot of studies, and many people I know have opinions about this, but I haven’t seen much clear, evidence-based guidance. This much-needed guideline provides that guidance, though I’m not sure that everyone is going to be happy with the recommendations. That said, the society conducted a comprehensive assessment and systematic review of the evidence that was impressive and well done. For our discussion, I will focus on the recommendations for nonpregnant adults.

All of the recommendations assume that individuals are already getting the Institute of Medicine’s recommended amount of vitamin D, which is 600 IU daily for adults up to 70 years of age and 800 IU daily for those older than 70 years.

For adults aged 18-74 years who do not have prediabetes, the guidelines suggest against routinely testing for vitamin D deficiency and recommend against routine supplementation. For the older part of this cohort, adults aged 50-74 years, abundant randomized trial evidence shows little to no effect of vitamin D supplementation on fracture, cancer, cardiovascular disease, kidney stones, or mortality. While supplementation is safe, there does not appear to be any benefit to routine supplementation or testing. It is important to note that the trials were done in populations that were meeting the recommended daily intake of vitamin D and did not have low vitamin D levels at baseline, so individuals who may not be meeting the recommended daily intake through their diet or sun exposure may consider vitamin D supplementation.

For adults with prediabetes, vitamin D supplementation is recommended to reduce the risk for progression from prediabetes to diabetes. Prediabetes affects about 1 in 3 adults in the United States. A number of trials have looked at vitamin D supplementation for adults with prediabetes in addition to lifestyle modification (diet and exercise). Vitamin D decreases the risk for progression from prediabetes to diabetes by approximately 10%-15%. The effect may be greater in those who are older than 60 years and who have lower initial vitamin D levels.

Vitamin D in older adults (aged 75 or older) has a separate recommendation. In this age group, low vitamin D levels are common, with up to 20% of older adults having low levels. The guidelines suggest against testing vitamin D levels in adults aged 75 or older and recommend empiric vitamin D supplementation for all adults in this age group. While observational studies have shown a relationship between low vitamin D levels in this age group and adverse outcomes, including falls, fractures, and respiratory infections, evidence from randomized placebo-controlled trials of vitamin D supplementation has been inconsistent regarding benefit. That said, a meta-analysis has shown that vitamin D supplementation slightly lowers mortality compared with placebo, with a relative risk of 0.96 (confidence interval, 0.93-1.00). There was no difference in effect according to setting (community vs nursing home), vitamin D dosage, or baseline vitamin D level.

Low-dose vitamin D supplementation appeared to reduce fall risk, whereas high-dose supplementation was possibly associated with greater fall risk. No significant effect on fracture rate was seen with vitamin D supplementation alone, although fractures decreased when vitamin D was combined with calcium. In these studies, the median dose of calcium was 1000 mg per day.

Based on the probability of a “slight decrease in all-cause mortality” and its safety, as well as possible benefit to decrease falls, the recommendation is for supplementation for all adults aged 75 or older. Since there was not a consistent difference by vitamin D level, testing is not necessary.
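
The age- and prediabetes-based recommendations described above reduce to a small decision rule. The Python sketch below is a simplified, hypothetical encoding of this transcript's summary for nonpregnant adults who already meet the recommended daily intake; it is not an implementation of the full guideline. The class, function, and field names are illustrative, and `None` marks a testing question that the summary above does not address.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class VitaminDAdvice:
    # Hypothetical fields summarizing the transcript, not guideline terminology.
    empiric_supplementation: bool
    routine_testing: Optional[bool]  # None: not addressed in the summary above


def summarize_advice(age: int, has_prediabetes: bool) -> VitaminDAdvice:
    """Encode the transcript's summary for nonpregnant adults (hypothetical helper)."""
    if age < 18:
        raise ValueError("the summary covers adults aged 18 years or older only")
    if age >= 75:
        # Empiric supplementation for everyone 75 or older; testing not needed.
        return VitaminDAdvice(empiric_supplementation=True, routine_testing=False)
    if has_prediabetes:
        # Supplementation to reduce progression from prediabetes to diabetes.
        return VitaminDAdvice(empiric_supplementation=True, routine_testing=None)
    # Adults 18-74 without prediabetes: no routine testing or supplementation.
    return VitaminDAdvice(empiric_supplementation=False, routine_testing=False)


if __name__ == "__main__":
    for age, prediabetes in [(45, False), (60, True), (80, False)]:
        print(age, prediabetes, summarize_advice(age, prediabetes))
```

The age check comes first because, as summarized above, the 75-and-older recommendation applies regardless of prediabetes status.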

Let’s now discuss dosage. The guidelines recommend daily lower-dose vitamin D over nondaily higher-dose vitamin D. Unfortunately, the guideline does not recommend a specific dose. The supplementation dose used in trials of adults aged 75 or older ranged from 400 to 3333 IU daily, with an average of 900 IU daily, so it seems to me that 1000-2000 IU daily is a reasonable choice for older adults. The prediabetes trials used a higher average dose, with a mean of 3500 IU daily, so a higher dose might make sense in this group.
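
Many supplement labels list vitamin D in micrograms (µg) as well as, or instead of, IU. The standard conversion for vitamin D is 40 IU per microgram, so the doses mentioned above translate as in this short Python sketch. It is only a unit conversion; the dose labels are taken from the paragraph above, and the helper name is illustrative.

```python
IU_PER_MICROGRAM = 40  # standard vitamin D conversion: 1 µg = 40 IU


def iu_to_micrograms(iu: float) -> float:
    """Convert a vitamin D dose from international units to micrograms."""
    return iu / IU_PER_MICROGRAM


# Daily doses mentioned in the discussion above, in IU.
doses_iu = {
    "trial minimum, age 75+": 400,
    "trial average, age 75+": 900,
    "suggested range, low end": 1000,
    "suggested range, high end": 2000,
    "trial maximum, age 75+": 3333,
    "prediabetes trial mean": 3500,
}

for label, iu in doses_iu.items():
    print(f"{label}: {iu} IU = {iu_to_micrograms(iu):.1f} µg")
```

For example, the suggested 1000-2000 IU daily range corresponds to 25-50 µg daily.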

Dr. Skolnik is a professor in the Department of Family Medicine, Sidney Kimmel Medical College of Thomas Jefferson University, Philadelphia, and associate director, Department of Family Medicine, Abington Jefferson Health, Abington, Pennsylvania. He disclosed ties with AstraZeneca, Bayer, Teva, Eli Lilly, Boehringer Ingelheim, Sanofi, Sanofi Pasteur, GlaxoSmithKline, and Merck.

A version of this article first appeared on Medscape.com.

Let ’em Play: In Defense of Youth Football

Over the last couple of decades, I have become increasingly uncomfortable watching American-style football on television. Lax refereeing coupled with over-juiced players who can generate g-forces previously attainable only on a NASA rocket sled has resulted in a spate of injuries I find unacceptable. The revolving door of transfers from college to college has made the term “scholar-athlete” a relic that can be applied to only a handful of players at the smallest, uncompetitive schools.

Many of you who are regular readers of Letters from Maine have probably tired of my boasting that when I played football in high school we wore leather helmets. I enjoyed playing football and continued playing in college for a couple of years until it became obvious that “bench” was going to be my usual position. But I would not want my grandson to play college football, certainly not at the elite college level. Were he to do so, he would be putting himself at risk for significant injury by participating in what I no longer view as an appealing activity. Let me add that I am not including chronic traumatic encephalopathy among my concerns, because I think its association with football injuries is far from settled. My concern is more about spinal cord injuries, which, although infrequent, are almost always devastating.

I should also make it perfectly clear that my lack of enthusiasm for college and professional football does not place me among the increasingly vocal throng calling for the elimination of youth football. For 5- to 12-year-olds, putting on pads and a helmet and scrambling around on a grassy field bumping shoulders and heads with their peers is a wonderful way to burn off energy and to satisfy a need for roughhousing that comes naturally to most young boys (and many girls). The chance that any one of those kids playing youth football will reach the elite college or professional level is extremely small. Other activities and the realization that football is not in their future weed the field during adolescence.

Although there have been some studies suggesting that starting football at an early age is associated with increased injury risk, a recent and well-controlled study published in the journal Sports Medicine has found no such association in professional football players. This finding makes some sense when you consider that most of the children in this age group are not mustering g-forces anywhere close to those a college or professional athlete can generate.

Another recent study published in the Journal of Pediatrics offers more evidence to consider before one passes judgment on youth football. When reviewing the records of nearly 1500 patients in a specialty-care concussion setting at the Children’s Hospital of Philadelphia, investigators found that recreation-related concussions and non–sport- or recreation-related concussions were more prevalent than sports-related concussions. The authors propose that “less supervision at the time of injury and less access to established concussion healthcare following injury” may explain their observations.

Of course, as a card-carrying AARP old fogey, I long for the good old days when youth sports were organized by the kids themselves in backyards and on playgrounds. There we learned to pick teams and to deal with the disappointment of not being a first-round pick and the embarrassment of being a last-rounder. We settled out-of-bounds calls and arguments about ball possession without adults’ assistance, or video replays for that matter. But those days are gone and are unlikely to return, with parental anxiety running at record highs. We must accept that youth sports organized for kids by adults are the way things are going to be for the foreseeable future.

As long as the program is organized with an emphasis on fun and is not structured as a fast track to elite play, it will be healthier for the kids than sitting on the couch at home watching the carnage on TV.

Dr. Wilkoff practiced primary care pediatrics in Brunswick, Maine, for nearly 40 years. He has authored several books on behavioral pediatrics, including “How to Say No to Your Toddler.” Other than a Littmann stethoscope he accepted as a first-year medical student in 1966, Dr. Wilkoff reports having nothing to disclose. Email him at pdnews@mdedge.com.


Many of you who are regular readers of Letters from Maine have probably tired of my boasting that when I played football in high school we wore leather helmets. I enjoyed playing football and continued playing in college for a couple of years until it became obvious that “bench” was going to be my usual position. But, I would not want my grandson to play college football. Certainly, not at the elite college level. Were he to do so, he would be putting himself at risk for significant injury by participating in what I no longer view as an appealing activity. Let me add that I am not including chronic traumatic encephalopathy among my concerns, because I think its association with football injuries is far from settled. My concern is more about spinal cord injuries, which, although infrequent, are almost always devastating.

I should also make it perfectly clear that my lack of enthusiasm for college and professional football does not place me among the increasingly vocal throng calling for the elimination of youth football. For the 5- to 12-year-olds, putting on pads and a helmet and scrambling around on a grassy field bumping shoulders and heads with their peers is a wonderful way to burn off energy and satisfies a need for roughhousing that comes naturally to most young boys (and many girls). The chance of anyone of those kids playing youth football reaching the elite college or professional level is extremely unlikely. Other activities and the realization that football is not in their future weeds the field during adolescence.

Although there have been some studies suggesting that starting football at an early age is associated with increased injury risk, a recent and well-controlled study published in the journal Sports Medicine has found no such association in professional football players. This finding makes some sense when you consider that most of the children in this age group are not mustering g-forces anywhere close to those a college or professional athlete can generate.

Another recent study published in the Journal of Pediatrics offers more evidence to consider before one passes judgment on youth football. When reviewing the records of nearly 1500 patients in a specialty-care concussion setting at the Children’s Hospital of Philadelphia, investigators found that recreation-related concussions and non–sport- or recreation-related concussions were more prevalent than sports-related concussions. The authors propose that “less supervision at the time of injury and less access to established concussion healthcare following injury” may explain their observations.

Of course as a card-carrying AARP old fogey, I long for the good old days when youth sports were organized by the kids in backyards and playgrounds. There we learned to pick teams and deal with the disappointment of not being a first-round pick and the embarrassment of being a last rounder. We settled out-of-bounds calls and arguments about ball possession without adults’ assistance — or video replays for that matter. But those days are gone and likely never to return, with parental anxiety running at record highs. We must accept youth sports organized for kids by adults is the way it’s going to be for the foreseeable future.

As long as the program is organized with the emphasis on fun nor structured as a fast track to elite play it will be healthier for the kids than sitting on the couch at home watching the carnage on TV.

Dr. Wilkoff practiced primary care pediatrics in Brunswick, Maine, for nearly 40 years. He has authored several books on behavioral pediatrics, including “How to Say No to Your Toddler.” Other than a Littman stethoscope he accepted as a first-year medical student in 1966, Dr. Wilkoff reports having nothing to disclose. Email him at pdnews@mdedge.com.

Night Owl or Lark? The Answer May Affect Cognition

new research suggests.

“Rather than just being personal preferences, these chronotypes could impact our cognitive function,” said study investigator Raha West, MBChB, with Imperial College London, London, England, in a statement.

But the researchers also urged caution when interpreting the findings.

“It’s important to note that this doesn’t mean all morning people have worse cognitive performance. The findings reflect an overall trend where the majority might lean toward better cognition in the evening types,” Dr. West added.

In addition, across the board, getting the recommended 7-9 hours of nightly sleep was best for cognitive function, and sleeping for less than 7 or more than 9 hours had detrimental effects on brain function regardless of whether an individual was a night owl or lark.

The study was published online in BMJ Public Health.

A UK Biobank Cohort Study

The findings are based on a cross-sectional analysis of 26,820 adults aged 53-86 years from the UK Biobank database, who were categorized into two cohorts.

Cohort 1 had 10,067 participants (56% women) who completed four cognitive tests measuring fluid intelligence/reasoning, pairs matching, reaction time, and prospective memory. Cohort 2 had 16,753 participants (56% women) who completed two cognitive assessments (pairs matching and reaction time).

Participants self-reported sleep duration, chronotype, and quality. Cognitive test scores were evaluated against sleep parameters and health and lifestyle factors, including sex, age, vascular and cardiac conditions, diabetes, alcohol use, smoking habits, and body mass index.

The results revealed a positive association between normal sleep duration (7-9 hours) and cognitive scores in Cohort 1 (beta, 0.0567), while extended sleep duration negatively impacted scores in both Cohorts 1 and 2 (beta, –0.188 and beta, –0.2619, respectively).

An individual’s preference for evening or morning activity correlated strongly with their test scores. In particular, night owls consistently performed better on cognitive tests than early birds.

“While understanding and working with your natural sleep tendencies is essential, it’s equally important to remember to get just enough sleep, not too long or too short,” Dr. West noted. “This is crucial for keeping your brain healthy and functioning at its best.”

Contrary to some previous findings, the study did not find a significant relationship between sleep, sleepiness/insomnia, and cognitive performance. This may be because specific aspects of insomnia, such as severity and chronicity, as well as comorbid conditions, need to be considered, the investigators wrote.

They added that age and diabetes consistently emerged as negative predictors of cognitive functioning across both cohorts, in line with previous research.

Limitations of the study include the cross-sectional design, which limits causal inferences; the possibility of residual confounding; and reliance on self-reported sleep data.

Also, the study did not adjust for educational attainment, a factor potentially influential on cognitive performance and sleep patterns, because of incomplete data. The study also did not factor in depression and social isolation, which have been shown to increase the risk for cognitive decline.

No Real-World Implications

Several outside experts offered their perspective on the study in a statement from the UK nonprofit Science Media Centre.

The study provides “interesting insights” into the difference in memory and thinking in people who identify themselves as a “morning” or “evening” person, Jacqui Hanley, PhD, with Alzheimer’s Research UK, said in the statement.

However, without a detailed picture of what is going on in the brain, it’s not clear whether being a morning or evening person affects memory and thinking or whether a decline in cognition is causing changes to sleeping patterns, Dr. Hanley added.

Roi Cohen Kadosh, PhD, CPsychol, professor of cognitive neuroscience, University of Surrey, Guildford, England, cautioned that there are “multiple potential reasons” for these associations.

“Therefore, there are no implications in my view for the real world. I fear that the general public will not be able to understand that and will change their sleep pattern, while this study does not give any evidence that this will lead to any benefit,” Dr. Cohen Kadosh said.

Jessica Chelekis, PhD, MBA, a sleep expert from Brunel University London, Uxbridge, England, said that the “main takeaway should be that the cultural belief that early risers are more productive than ‘night owls’ does not hold up to scientific scrutiny.”

“While everyone should aim to get good-quality sleep each night, we should also try to be aware of what time of day we are at our (cognitive) best and work in ways that suit us. Night owls, in particular, should not be shamed into fitting a stereotype that favors an ‘early to bed, early to rise’ practice,” Dr. Chelekis said.

Funding for the study was provided by the Korea Institute of Oriental Medicine in collaboration with Imperial College London. Dr. Hanley, Dr. Cohen Kadosh, and Dr. Chelekis have no relevant disclosures.

A version of this article first appeared on Medscape.com.

FROM BMJ PUBLIC HEALTH

EMA Warns of Anaphylactic Reactions to MS Drug

Such reactions could occur even years after the start of treatment, the European Medicines Agency (EMA) warned.

Glatiramer acetate is a disease-modifying therapy (DMT) for relapsing MS that is given by injection.

The drug has been used for treating MS for more than 20 years, during which time it has had a good safety profile. Common side effects are known to include vasodilation, arthralgia, anxiety, hypertonia, palpitations, and lipoatrophy.

A meeting of the EMA’s Pharmacovigilance Risk Assessment Committee (PRAC), held on July 8-11, considered evidence from an EU-wide review of all available data concerning anaphylactic reactions with glatiramer acetate. As a result, the committee concluded that the medicine is associated with a risk for anaphylactic reactions, which may occur shortly after administration or even months or years later.

Risk for Delays to Treatment

Cases involving the use of glatiramer acetate with a fatal outcome have been reported, PRAC noted.

The committee cautioned that because the initial symptoms could overlap with those of a postinjection reaction, there was a risk for delay in identifying an anaphylactic reaction.

PRAC has sanctioned a direct healthcare professional communication (DHPC) to inform healthcare professionals about the risk. Patients and caregivers should be advised of the signs and symptoms of an anaphylactic reaction and the need to seek emergency care if this should occur, the committee added. In the event of such a reaction, treatment with glatiramer acetate must be discontinued, PRAC stated.

Once adopted, the DHPC for glatiramer acetate will be disseminated to healthcare professionals by the marketing authorization holders.

Anaphylactic reactions associated with the use of glatiramer acetate have been noted in medical literature for some years. A letter by members of the department of neurology at Albert Ludwig University Freiburg, Freiburg im Breisgau, Germany, published in the journal European Neurology in 2011, detailed six cases of anaphylactoid or anaphylactic reactions in patients while they were undergoing treatment with glatiramer acetate.

The authors highlighted that in one of the cases, a grade 1 anaphylactic reaction occurred 3 months after treatment with the drug was initiated.

A version of this article first appeared on Medscape.com.

Factors Linked to Complete Response, Survival in Pancreatic Cancer

TOPLINE:

a multicenter cohort study found. Several factors, including treatment type and tumor features, influenced the outcomes.

METHODOLOGY:

- Preoperative chemo(radio)therapy is increasingly used in patients with localized pancreatic adenocarcinoma and may improve the chance of a pathologic complete response. Achieving a pathologic complete response is associated with improved overall survival.

- However, the evidence on pathologic complete response is based on large national databases or small single-center series. Multicenter studies with in-depth data about complete response are lacking.

- In the current analysis, researchers investigated the incidence of, and factors associated with, pathologic complete response after preoperative chemo(radio)therapy among 1758 patients (mean age, 64 years; 50% men) with localized pancreatic adenocarcinoma who underwent resection after two or more cycles of chemotherapy (with or without radiotherapy).

- Patients were treated at 19 centers in eight countries. The median follow-up was 19 months. Pathologic complete response was defined as the absence of vital tumor cells in the patient’s sampled pancreas specimen after resection.

- Factors associated with overall survival and pathologic complete response were investigated with Cox proportional hazards and logistic regression models, respectively.

TAKEAWAY:

- Researchers found that the rate of pathologic complete response was 4.8% in patients who received chemo(radio)therapy before pancreatic cancer resection.

- Having a pathologic complete response was associated with a 54% lower risk for death (hazard ratio, 0.46). At 5 years, the overall survival rate was 63% in patients with a pathologic complete response vs 30% in patients without one.

- Patients with a pathologic complete response were more likely than those without one to have received preoperative modified FOLFIRINOX (58.8% vs 44.7%). Other factors associated with pathologic complete response included tumors located in the pancreatic head (odds ratio [OR], 2.51), tumors > 40 mm at diagnosis (OR, 2.58), partial or complete radiologic response (OR, 13.0), and normal(ized) serum carbohydrate antigen 19-9 after preoperative therapy (OR, 3.76).

- Preoperative radiotherapy (OR, 2.03) and preoperative stereotactic body radiotherapy (OR, 8.91) were also associated with a pathologic complete response; however, preoperative radiotherapy did not improve overall survival, and preoperative stereotactic body radiotherapy was independently associated with worse overall survival. These findings suggest that a pathologic complete response might not always reflect an optimal disease response.

IN PRACTICE:

Although pathologic complete response does not reflect cure, it is associated with better overall survival, the authors wrote. Factors associated with a pathologic complete response may inform treatment decisions.

SOURCE:

The study, with first author Thomas F. Stoop, MD, University of Amsterdam, the Netherlands, was published online on June 18 in JAMA Network Open.

LIMITATIONS:

The study had several limitations. The sample size and the limited number of events precluded comparative subanalyses, as well as a more detailed stratification for preoperative chemotherapy regimens. Information about patients’ race and the presence of BRCA germline mutations, both of which seem to be relevant to the chance of achieving a major pathologic response, was not collected or available.

DISCLOSURES:

No specific funding was noted. Several coauthors have industry relationships outside of the submitted work.

A version of this article first appeared on Medscape.com.

Managing Cancer in Pregnancy: Improvements and Considerations

Introduction: Tremendous Progress on Cancer Extends to Cancer in Pregnancy

The biomedical research enterprise that took shape in the United States after World War II has had numerous positive effects, including significant progress made during the past 75-plus years in the diagnosis, prevention, and treatment of cancer.

In 1944, President Franklin D. Roosevelt asked Dr. Vannevar Bush, director of the then Office of Scientific Research and Development, to organize a program that would advance and apply scientific knowledge in times of peace, just as it had been advanced and applied in times of war. The effort culminated in a historic report, Science – The Endless Frontier. Presented in 1945 to President Harry S. Truman, this report helped fuel decades of broad, bold, and coordinated government-sponsored biomedical research aimed at addressing disease and improving the health of the American people (National Science Foundation, 1945).

Discoveries made from research in basic and translational sciences deepened our knowledge of the cellular and molecular underpinnings of cancer, leading to advances in chemotherapy, radiotherapy, and other treatment approaches as well as continual refinements in their application. Similarly, our diagnostic armamentarium has significantly improved.

As a result, we have reduced both the incidence and mortality of cancer. Today, some cancers can be prevented. Others can be reversed or put in remission. Granted, progress has been variable, with some cancers such as ovarian cancer still having relatively low survival rates. Much more needs to be done. Overall, however, the positive effects of the U.S. biomedical research enterprise on cancer are evident. According to the National Cancer Institute’s most recent report on the status of cancer, death rates from cancer fell 1.9% per year on average in females from 2015 to 2019 (Cancer. 2022 Oct 22. doi: 10.1002/cncr.34479).
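
The Python sketch below shows what a 1.9% average annual decline implies over the period cited. It assumes simple compounding, with the 1.9% applied multiplicatively to each of the four year-to-year steps from 2015 to 2019. The report's average annual percent change is a fitted summary, so the resulting cumulative figure of roughly 7.4% is an approximation and is not taken from the report. The function name is hypothetical.

```python
def cumulative_decline(annual_pct: float, years: int) -> float:
    """Cumulative percent decline after `years` steps of a constant annual decline."""
    # Fraction of the starting rate that remains after compounding the annual decline
    remaining = (1.0 - annual_pct / 100.0) ** years
    return (1.0 - remaining) * 100.0


# 2015 -> 2019 spans four year-to-year intervals
print(f"Approximate cumulative decline: {cumulative_decline(1.9, 4):.1f}%")
```

Compounding gives a slightly smaller total than adding the yearly figures (4 × 1.9% = 7.6%), because each year's decline applies to an already-reduced rate.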

It is not only patients whose cancer occurs outside of pregnancy who have benefited. When treatment is appropriately selected and carefully timed, patients whose cancer is diagnosed during pregnancy, and their children, can have good outcomes.

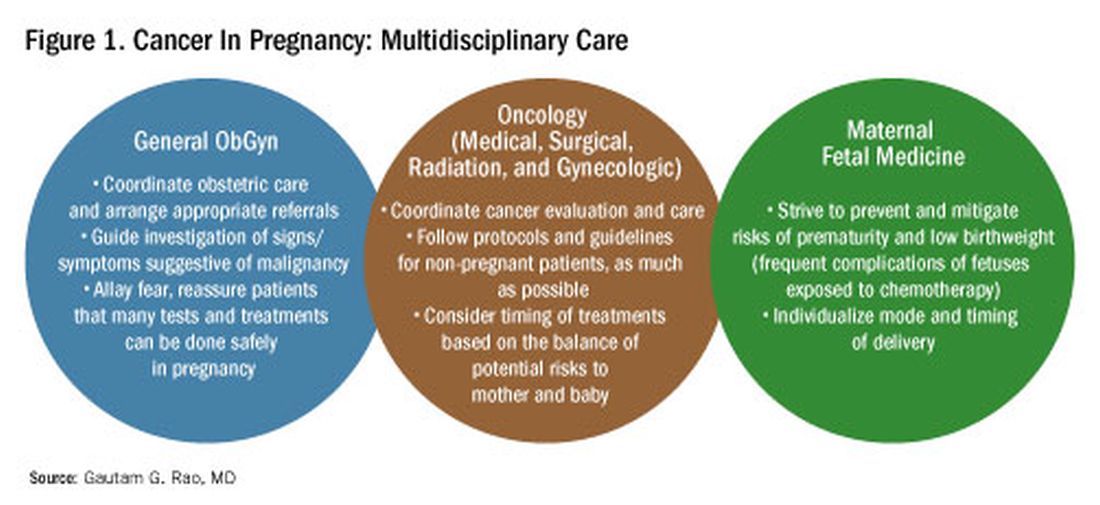

To explain how the management of cancer in pregnancy has improved, we have invited Gautam G. Rao, MD, gynecologic oncologist and associate professor of obstetrics, gynecology, and reproductive sciences at the University of Maryland School of Medicine, to write this installment of the Master Class in Obstetrics. As Dr. Rao explains, radiation is not as dangerous to the fetus as once thought, and the safety of many chemotherapeutic regimens in pregnancy has been documented. Obstetricians can and should counsel patients, he notes, about the likelihood of good maternal and fetal outcomes.

E. Albert Reece, MD, PhD, MBA, a maternal-fetal medicine specialist, is dean emeritus of the University of Maryland School of Medicine and a former university executive vice president. He is currently the endowed professor and director of the Center for Advanced Research Training and Innovation (CARTI) and a senior scientist in the Center for Birth Defects Research. Dr. Reece reported no relevant disclosures. He is the medical editor of this column. Contact him at obnews@mdedge.com.

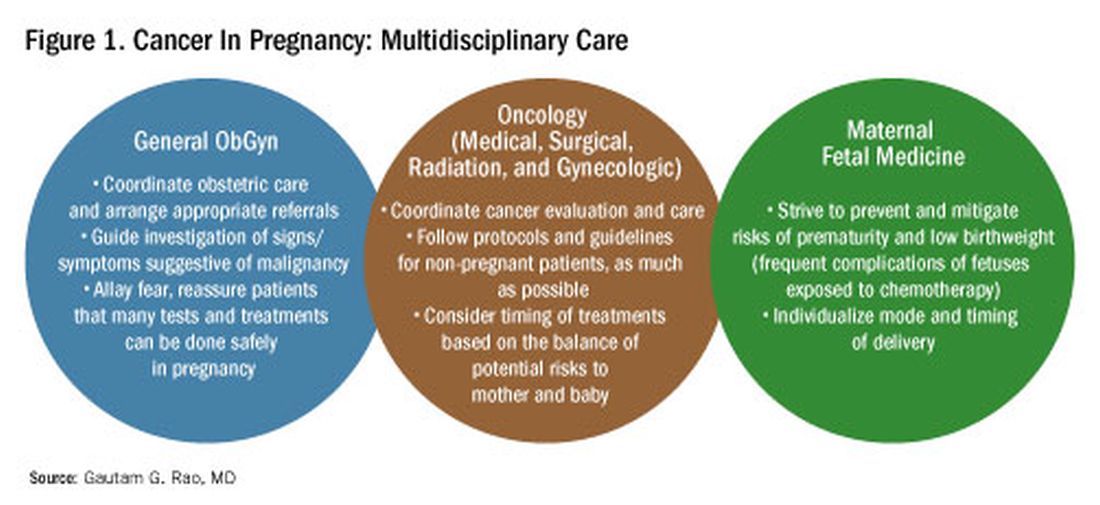

Managing Cancer in Pregnancy

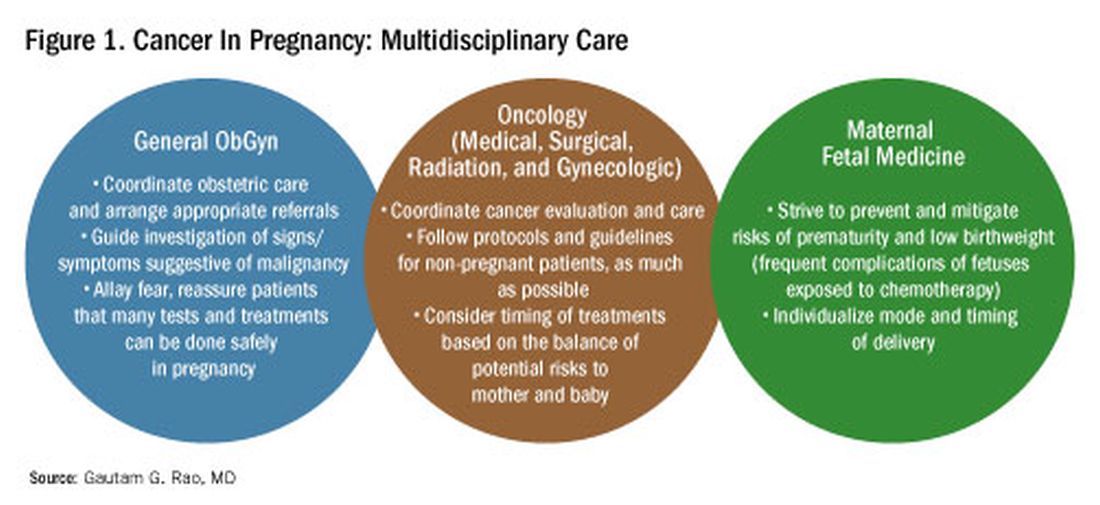

Cancer can cause fear and distress for any patient, but when cancer is diagnosed during pregnancy, an expectant mother fears not only for her own health but for the health of her unborn child. Fortunately, ob.gyn.s and multidisciplinary teams have good reason to reassure patients about the likelihood of good outcomes.

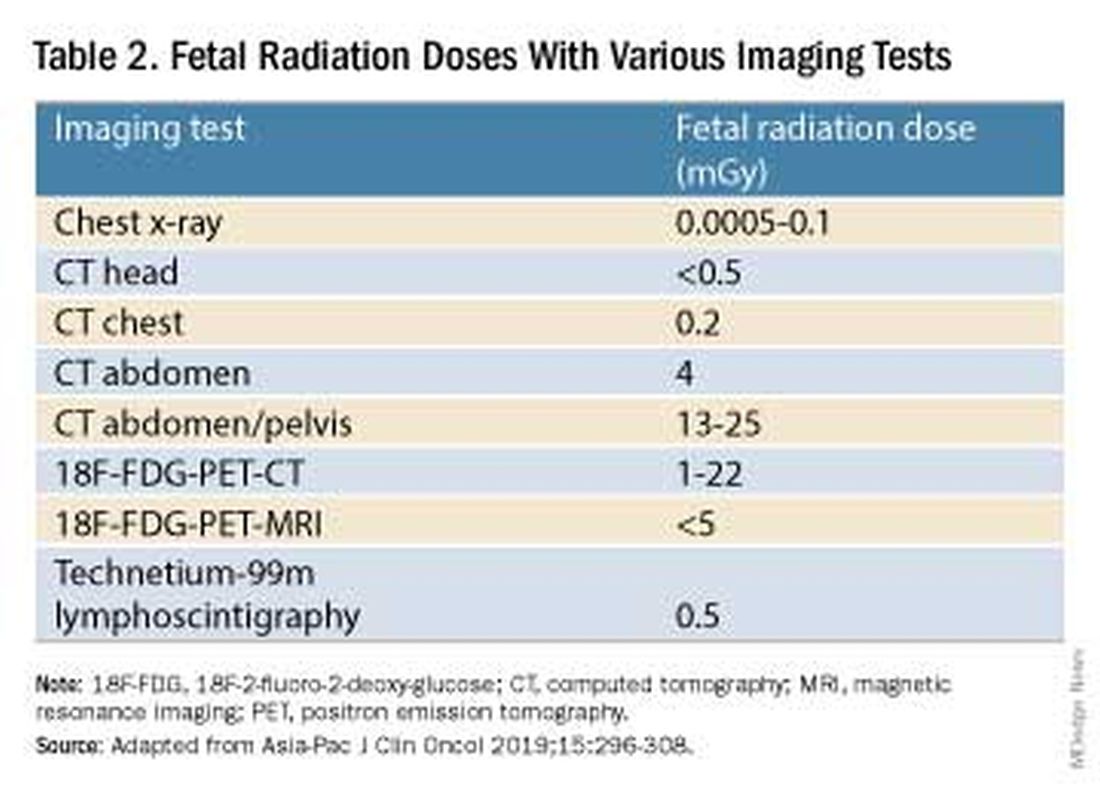

Cancer treatment in pregnancy has improved with advances in imaging and chemotherapy. Maternal and fetal outcomes of prenatal cancer treatment are not well reported. However, evidence from case series and retrospective studies in recent years shows that most imaging studies and procedural diagnostic tests, as well as many treatments, can be performed safely in pregnancy.

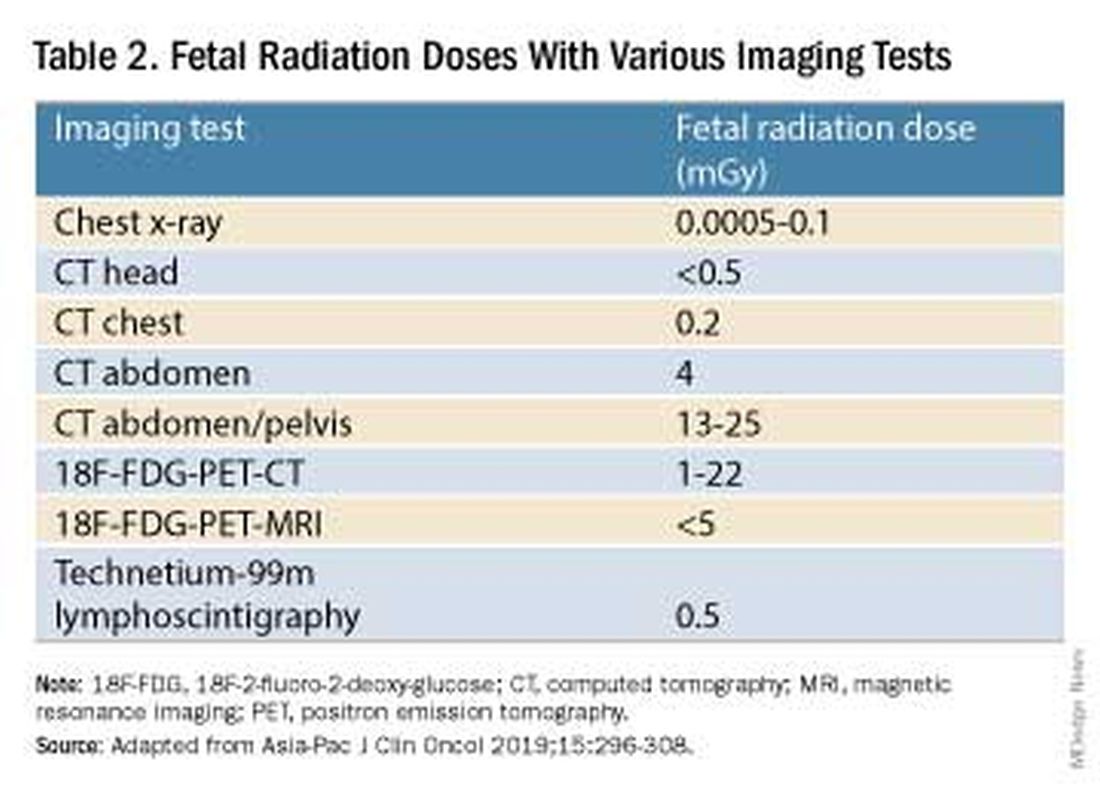

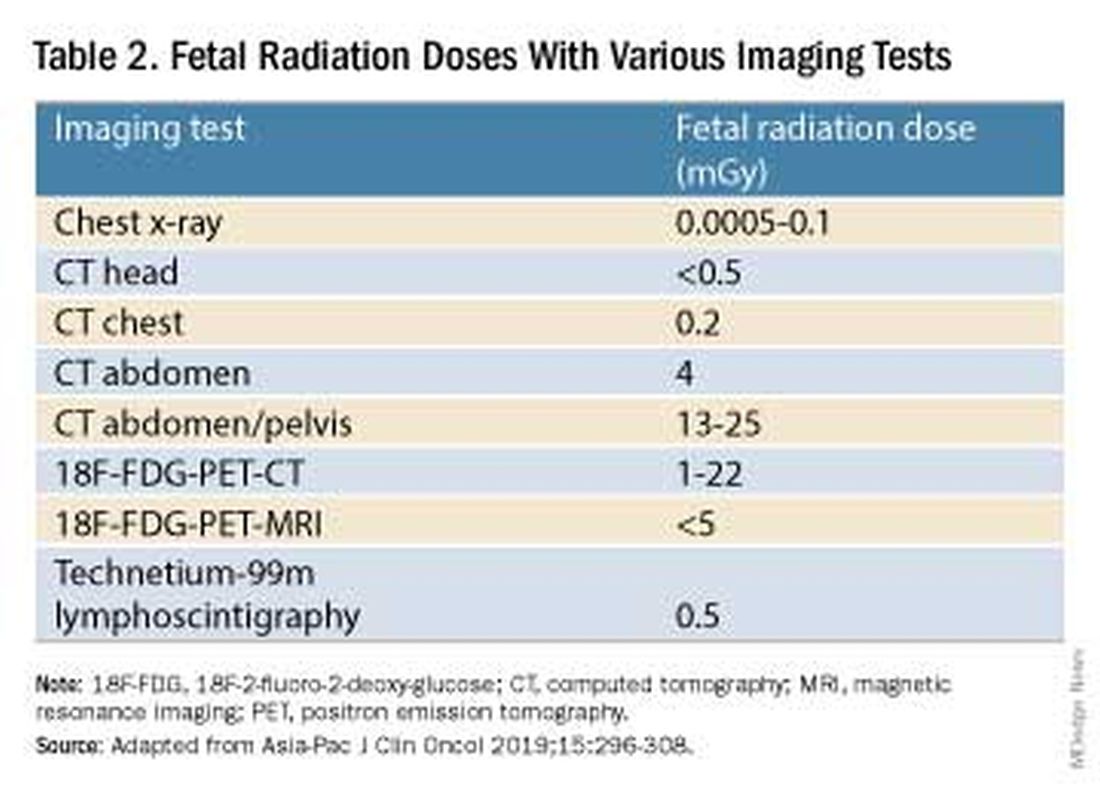

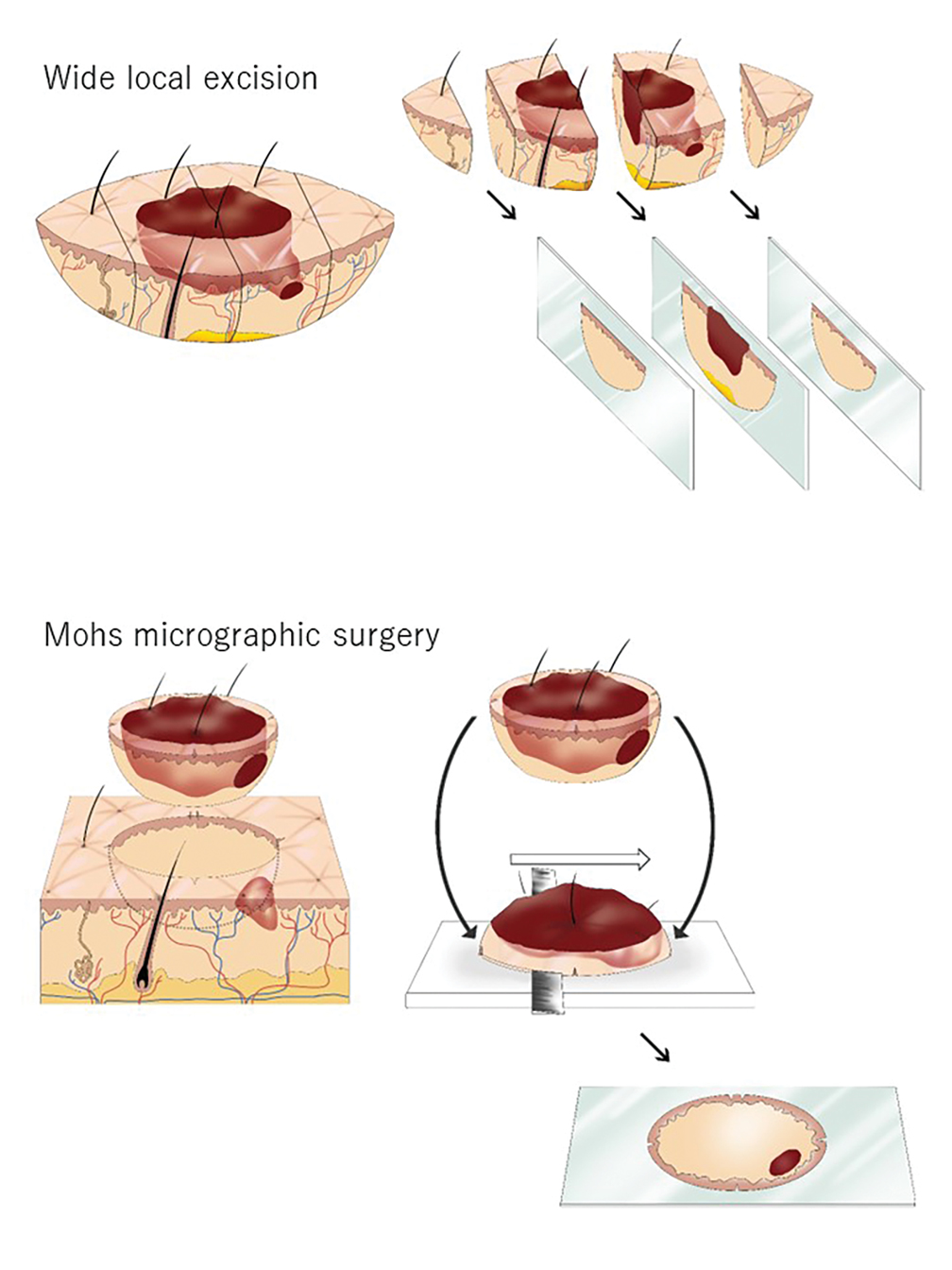

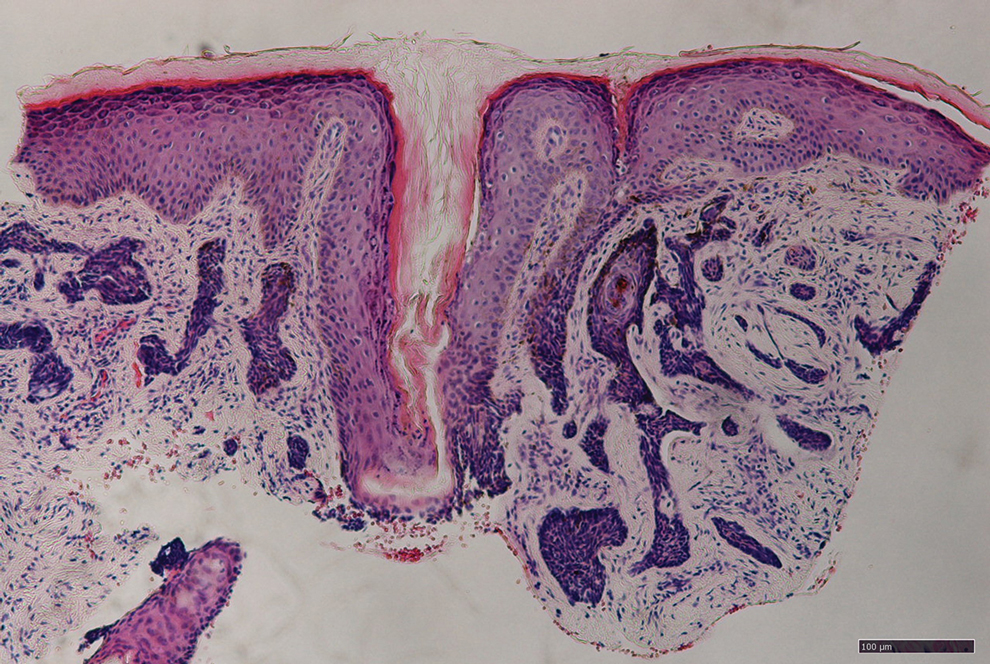

Decades ago, we avoided CT scans during pregnancy because of concerns about radiation exposure to the fetus, leaving some patients without accurate staging of their cancer. Today, we have evidence that a CT scan is generally safe in pregnancy. Similarly, the safety of many chemotherapeutic regimens in pregnancy has been documented in recent decades, and the use of chemotherapy during pregnancy has increased progressively. Radiation is also commonly used in the management of cancers that may occur during pregnancy, such as breast cancer.1

Timing is often central to decision-making. Chemotherapy and radiotherapy, for instance, are generally avoided in the first trimester to prevent structural fetal anomalies, and delaying cancer treatment is often warranted when the patient is a few weeks away from delivery. On occasion, iatrogenic preterm birth is considered when the risks to the mother of delaying a necessary cancer treatment outweigh the risks to the fetus of prematurity.1

Pregnancy termination is rarely indicated, however, and information gathered over the past 2 decades suggests that fetal and placental metastases are rare.1 There is broad agreement that prenatal treatment of cancer in pregnancy should adhere as much as possible to protocols and guidelines for nonpregnant patients and that treatment delays driven by fear of fetal anomalies and miscarriage are unnecessary.

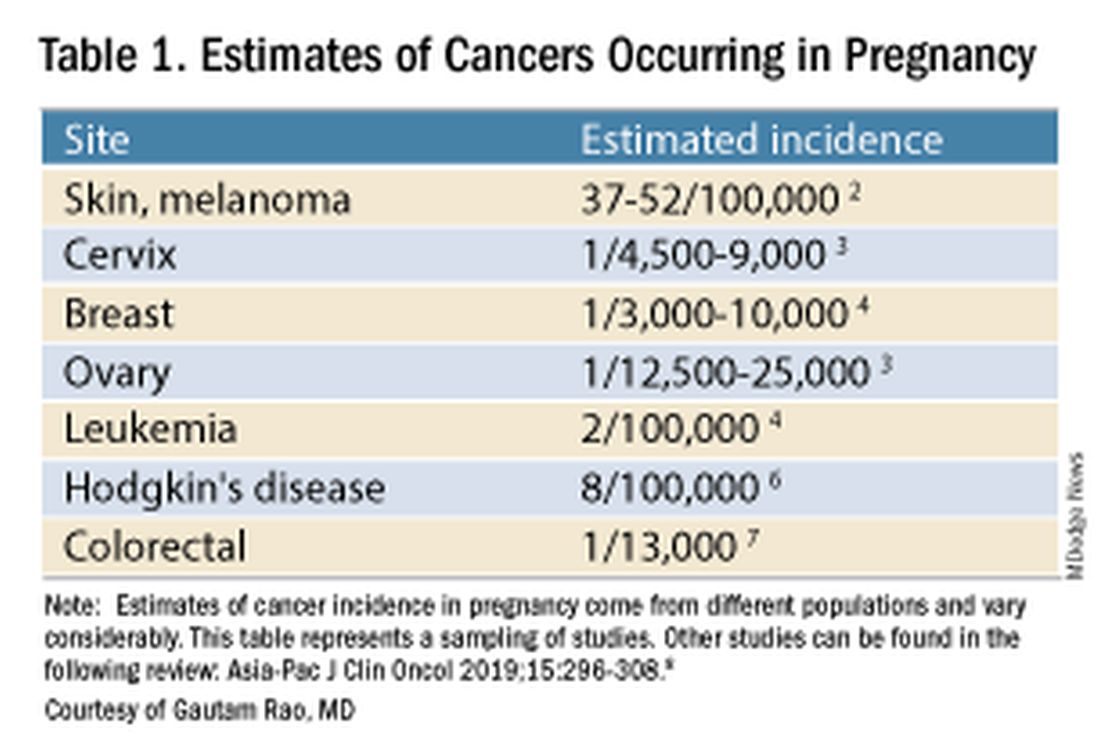

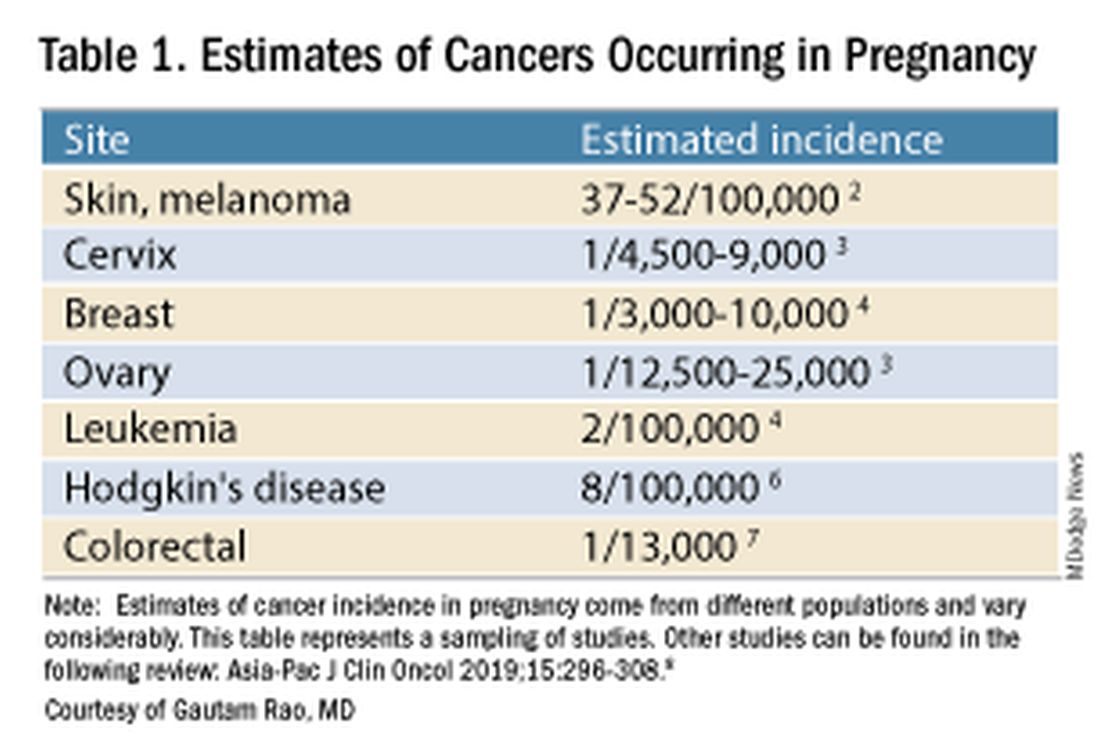

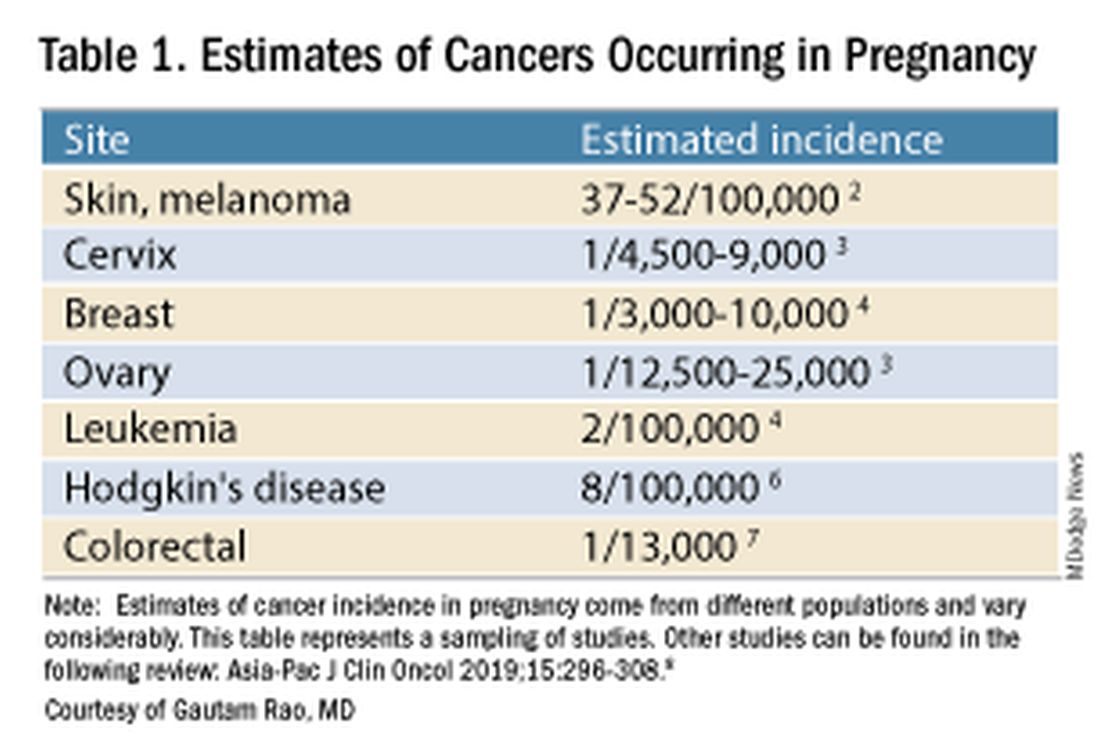

Cancer Incidence, Use of Diagnostic Imaging

Data on the incidence of cancer in pregnancy come from population-based cancer registries. Unfortunately, these data are not standardized and are often incomplete. Many studies include cancer diagnosed up to 1 year after pregnancy, and some include preinvasive disease. Estimates therefore vary considerably (see Table 1 for a sampling of estimated incidences).