Examining the Interfacility Variation of Social Determinants of Health in the Veterans Health Administration

Social determinants of health (SDoH) are social, economic, environmental, and occupational factors that are known to influence an individual’s health care utilization and clinical outcomes.1,2 Because the Veterans Health Administration (VHA) is charged with addressing both the medical and nonmedical needs of the veteran population, it is increasingly interested in the impact SDoH have on veteran care.3,4 To combat the adverse impact of such factors, the VHA has implemented several large-scale programs across the US that focus on prevalent SDoH, such as homelessness, substance abuse, and alcohol use disorders.5,6 Although such risk factors are found throughout the population, variation across regions, between urban and rural areas, and even within cities has been shown in private settings.7 Understanding such variability could help US Department of Veterans Affairs (VA) policymakers and leaders better allocate funding and resources to address these issues.

Although previous work has highlighted regional and neighborhood-level variability of SDoH, to our knowledge no study has examined the facility-level variability of commonly encountered social risk factors within the VHA.4,8 The aim of this study was to use administrative data to describe the interfacility variation of 5 common SDoH known to influence health outcomes among a national cohort of veterans hospitalized for common medical issues.

Methods

We used a national cohort of veterans aged ≥ 65 years who were hospitalized at a VHA acute care facility with a primary discharge diagnosis of acute myocardial infarction (AMI), heart failure (HF), or pneumonia in 2012. These conditions were chosen because they are publicly reported and frequently used for interfacility comparison.

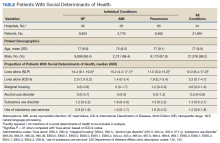

Using International Classification of Diseases–9th Revision (ICD-9) codes and VHA clinical stop codes, we calculated the median documented proportion of patients with any of the following 5 SDoH: lives alone, marginal housing, alcohol use disorder, substance use disorder, and use of substance use services, among patients presenting with HF, AMI, or pneumonia (Table). These SDoH were chosen because they are intervenable risk factors for which the VHA has several programs (eg, homeless outreach, substance abuse, and tobacco cessation). To examine the variability of these SDoH across VHA facilities, we included only hospitals with a sufficient number of admissions (≥ 50). We then examined the administratively documented, facility-level variation in the proportion of individuals with any of the 5 SDoH administrative codes and the distribution of these proportions across all qualifying facilities.
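The facility-level summary described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors’ SAS code: the `Admission` record, the flag names, and the helper functions are hypothetical, and each admission’s SDoH flags are assumed to have already been derived from the ICD-9 and stop codes listed in the Table. In the study the summary was reported separately for each condition, so the sketch would be run once per condition. Note that `statistics.quantiles` uses an “exclusive” interpolation method by default, so quartiles may differ slightly from SAS’s default percentile definition.

```python
from collections import Counter
from dataclasses import dataclass
from statistics import median, quantiles


@dataclass(frozen=True)
class Admission:
    """Hypothetical record: one index hospitalization."""
    facility_id: str
    # SDoH already identified from administrative codes (eg, "lives_alone").
    sdoh_flags: frozenset = frozenset()


MIN_ADMISSIONS = 50  # facility inclusion threshold stated in the Methods


def facility_proportions(admissions, min_admissions=MIN_ADMISSIONS):
    """Percent of admissions with any SDoH flag, per facility with >= min_admissions."""
    admissions = list(admissions)  # allow generators; we iterate twice
    totals = Counter(a.facility_id for a in admissions)
    # "Any of the 5 SDoH": a non-empty flag set counts once per admission.
    flagged = Counter(a.facility_id for a in admissions if a.sdoh_flags)
    return {
        fac: 100.0 * flagged[fac] / n  # Counter returns 0 for unflagged facilities
        for fac, n in totals.items()
        if n >= min_admissions
    }


def summarize(proportions):
    """Median and interquartile range (Q1, Q3) of facility-level percentages."""
    values = sorted(proportions.values())
    q1, _, q3 = quantiles(values, n=4)  # requires at least 2 facilities
    return median(values), q1, q3
```

Keeping the per-facility percentages as an intermediate dictionary makes it easy to plot their distribution across facilities, which is the comparison the Figure summarizes.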

Because variability may be due to regional coding differences, we also estimated the prevalence of the risk factor lives alone using a previously developed natural language processing (NLP) program and compared it with the administrative estimate.9 The NLP program is a rule-based system designed to automatically extract information that requires inference from clinical notes (eg, discharge summaries and nursing, social work, emergency department physician, primary care, and hospital admission notes). For instance, the program identifies whether there is direct or indirect evidence that the patient did or did not live alone. In addition to lives alone, the NLP program can extract information on lack of social support and living alone, 2 characteristics for which the VHA has no specific interventions and which were not examined here. The NLP program was developed and evaluated using at least 1 year of notes prior to the index hospitalization. Because it was developed and validated on a 2012 data set, we were limited to a cohort from that year.
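To make the idea of rule-based extraction concrete, the sketch below shows a toy Python classifier that looks for direct evidence (“lives alone”), negated mentions (“does not live alone”), and indirect evidence (“lives with daughter”). It is a hypothetical illustration of the general technique only. It is not the Moonstone program cited above, whose rules and lexicons are far more extensive, and the patterns, the 25-character negation window, and the output labels are all assumptions.

```python
import re

# Hypothetical, deliberately tiny rule set; not the Moonstone lexicon or rules.
ALONE = re.compile(r"\b(?:lives?|living|resides?)\s+alone\b", re.IGNORECASE)
WITH_OTHERS = re.compile(r"\b(?:lives?|living|resides?)\s+with\s+\w+", re.IGNORECASE)
NEGATION = re.compile(r"\b(?:not|no longer|denies)\b", re.IGNORECASE)


def negated(text: str, start: int, window: int = 25) -> bool:
    """True if a negation cue appears shortly before `start` in the same sentence."""
    prefix = text[max(0, start - window):start]
    prefix = re.split(r"[.;!?]", prefix)[-1]  # ignore cues from earlier sentences
    return bool(NEGATION.search(prefix))


def classify_living_situation(note: str) -> str:
    """Label one note as 'lives_alone', 'not_alone', or 'unknown'."""
    match = ALONE.search(note)
    if match:
        # Direct evidence, unless negated (eg, "does not live alone").
        return "not_alone" if negated(note, match.start()) else "lives_alone"
    if WITH_OTHERS.search(note):
        # Indirect evidence of not living alone (eg, "lives with daughter").
        return "not_alone"
    return "unknown"
```

A patient-level flag could then be derived by applying the classifier to each eligible note in the year before the index hospitalization; how conflicting notes are reconciled is a design decision this sketch leaves open.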

All analyses were conducted using SAS Version 9.4. The San Francisco VA Medical Center Institutional Review Board approved this study.

Results

In total, 21,991 patients with HF (n = 9,853), pneumonia (n = 9,362), or AMI (n = 2,776) were identified across 91 VHA facilities. Most were male (98%), and the median (SD) age was 77.0 (9.0) years. The median facility-level proportion of veterans with any SDoH risk factor identified through administrative codes was low across all conditions, ranging from 0.5% to 2.2%. Among patients admitted for HF, AMI, and pneumonia, respectively, the most prevalent factors were lives alone (2.0% [interquartile range (IQR), 1.0%-5.2%], 1.4% [IQR, 0%-3.4%], and 1.9% [IQR, 0.7%-5.4%]), substance use disorder (1.2% [IQR, 0%-2.2%], 1.6% [IQR, 0%-3.0%], and 1.3% [IQR, 0%-2.2%]), and use of substance use services (0.9% [IQR, 0%-1.6%], 1.0% [IQR, 0%-1.7%], and 1.6% [IQR, 0%-2.2%]) (Table).

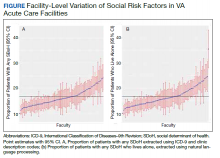

Using the NLP algorithm, the documented prevalence of lives alone in the free text of the medical record was higher than with administrative coding across all conditions (12.3% vs 2.2%; P < .01). By condition, the NLP-identified prevalence of lives alone was highest among patients with HF (14.4% vs 2.0% with administrative codes; P < .01), followed by pneumonia (11% vs 1.9%; P < .01) and AMI (10.2% vs 1.4%; P < .01). When we examined the documented facility-level variation in the proportion of individuals with any of the 5 SDoH administrative codes, or with lives alone identified by NLP, we found large variability across facilities regardless of extraction method (Figure).
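The Results report P values for NLP versus administrative prevalence but do not name the statistical test. Because both measures are obtained from the same patients, McNemar’s test for paired binary outcomes is one standard choice; the sketch below assumes that test, and the function name and its inputs are hypothetical. Only the discordant pairs (patients flagged by one method but not the other) enter the statistic.

```python
from math import erfc, sqrt


def mcnemar(b: int, c: int, continuity: bool = True) -> tuple[float, float]:
    """McNemar's chi-square test (1 df) for paired binary measurements.

    b: patients flagged as living alone by NLP but not by administrative codes.
    c: patients flagged by administrative codes but not by NLP.
    Concordant pairs do not enter the statistic.
    Returns (chi-square statistic, two-sided P value).
    """
    if b < 0 or c < 0:
        raise ValueError("counts must be non-negative")
    if b + c == 0:
        raise ValueError("McNemar's test is undefined with no discordant pairs")
    # Optional Edwards continuity correction; floored at zero when b == c.
    diff = max(abs(b - c) - (1 if continuity else 0), 0)
    stat = diff ** 2 / (b + c)
    # Survival function of a chi-square with 1 df: P(X > x) = erfc(sqrt(x / 2)).
    p_value = erfc(sqrt(stat / 2))
    return stat, p_value
```

For example, `mcnemar(b=120, c=5)` would test hypothetical discordant counts; these are not study data. If the original analysis instead treated the two estimates as independent proportions, a different test would apply.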

Discussion

Although SDoH are known to affect health outcomes, the documented presence of these risk factors in administrative data among individuals hospitalized for common medical issues is low and varies across VHA facilities. Understanding this facility-level variability may help the VHA decide how to invest time and resources, because some facilities may serve disproportionately more vulnerable individuals. Beyond the VHA, these findings offer generalizable lessons for the US health care system, which increasingly recognizes how these risk factors affect patients’ health.10

Although the proportion of individuals with any of the assessed SDoH identified by administrative data was low, our findings are consistent with recent studies showing similarly low documented prevalence of other risk factors, such as social isolation (0.65%), housing issues (0.19%), and financial strain (0.07%).8,11 Although the true prevalence of such factors remains unclear, these findings suggest that SDoH are poorly documented in administrative data. Low coding rates likely reflect the fact that SDoH administrative codes are not tied to financial reimbursement, which gives clinicians and hospital systems little incentive to use them.

In 2014, an Institute of Medicine report recommended collecting SDoH in electronic health data as a means to better empower clinicians and health care systems to address social disparities and to support SDoH research.12 Since then, data collection using SDoH screening tools has become more common across settings, but these data are not consistently translated into standardized form because of a lack of industry consensus and technical barriers.13 To improve this process, the Centers for Medicare and Medicaid Services has promoted the use of “Z codes” in the ICD-10 classification system, a subset of codes meant to better capture patients’ underlying social risk.14 It remains to be seen whether such administrative codes have improved the documentation of SDoH.

As health care systems have come to understand the impact of SDoH on health outcomes, other means of collecting these data have evolved.1,10 For example, NLP-based extraction methods and electronic screening tools have been proposed and used as alternatives for obtaining this information. Our findings suggest that some of these measures (eg, lives alone) may often be documented as part of routine care in the electronic health record, highlighting NLP as a potential tool for obtaining such data. However, other studies of NLP-based SDoH extraction have shown that this approach is often complicated by data quality issues (eg, missing data), complex methods, and poor integration with current information technology infrastructure, which limits its use in health care delivery.15-18

Variation in SDoH across a national health care system is expected, but it remains an important systems-level characteristic that health care leaders and policymakers should appreciate. As health care systems disburse financial resources and launch quality improvement initiatives to address SDoH, knowing that not all facilities are equally affected by SDoH should inform how these resources and efforts are allocated. Although previous work has highlighted regional and neighborhood-level variation within the VHA and other health care systems, to our knowledge, this is the first study to examine facility-level variability within the VHA.2,4,13,19

Limitations

There are several limitations to this study. First, although our findings are consistent with previous data from other health care systems, generalizability beyond the VA, which primarily cares for older, male patients, may be limited.8 However, as the nation’s largest health care system, the VHA can still offer useful lessons to other health care systems as they consider SDoH variation. Second, of the many SDoH previously shown to affect health, our analysis focused on only 5. Administrative and medical record documentation of other SDoH may be more common and less variable across institutions. Third, although our data suggest facility-level variation in these measures, this variation may be partly related to differences in coding across facilities. However, the single SDoH variable extracted using NLP also varied at the facility level, suggesting that coding alone may not drive the observed variation.

Conclusions

As US health care systems continue to address SDoH, our findings highlight the challenges of obtaining accurate data on patients’ social risk. Moreover, they reveal large variability among institutions within a national integrated health care system. Future work should explore the prevalence and variation of other SDoH to help guide resource allocation and prioritize spending where it is most needed.

Acknowledgments

This work was supported by NHLBI R01 HL116522-01A1. Support for VA/CMS data is provided by the US Department of Veterans Affairs, Veterans Health Administration, Office of Research and Development, Health Services Research and Development, VA Information Resource Center (Project Numbers SDR 02-237 and 98-004).

1. Social determinants of health (SDOH). NEJM Catalyst. Published December 1, 2017. Accessed December 8, 2020. https://catalyst.nejm.org/doi/full/10.1056/CAT.17.0312

2. Hatef E, Searle KM, Predmore Z, et al. The impact of social determinants of health on hospitalization in the Veterans Health Administration. Am J Prev Med. 2019;56(6):811-818. doi:10.1016/j.amepre.2018.12.012

3. Lushniak BD, Alley DE, Ulin B, Graffunder C. The National Prevention Strategy: leveraging multiple sectors to improve population health. Am J Public Health. 2015;105(2):229-231. doi:10.2105/AJPH.2014.302257

4. Nelson K, Schwartz G, Hernandez S, Simonetti J, Curtis I, Fihn SD. The association between neighborhood environment and mortality: results from a national study of veterans. J Gen Intern Med. 2017;32(4):416-422. doi:10.1007/s11606-016-3905-x

5. Gundlapalli AV, Redd A, Bolton D, et al. Patient-aligned care team engagement to connect veterans experiencing homelessness with appropriate health care. Med Care. 2017;55(suppl 9 suppl 2):S104-S110. doi:10.1097/MLR.0000000000000770

6. Rash CJ, DePhilippis D. Considerations for implementing contingency management in substance abuse treatment clinics: the Veterans Affairs initiative as a model. Perspect Behav Sci. 2019;42(3):479-499. doi:10.1007/s40614-019-00204-3

7. Ompad DC, Galea S, Caiaffa WT, Vlahov D. Social determinants of the health of urban populations: methodologic considerations. J Urban Health. 2007;84(3 Suppl):i42-i53. doi:10.1007/s11524-007-9168-4

8. Hatef E, Rouhizadeh M, Tia I, et al. Assessing the availability of data on social and behavioral determinants in structured and unstructured electronic health records: a retrospective analysis of a multilevel health care system. JMIR Med Inform. 2019;7(3):e13802. doi:10.2196/13802

9. Conway M, Keyhani S, Christensen L, et al. Moonstone: a novel natural language processing system for inferring social risk from clinical narratives. J Biomed Semantics. 2019;10(1):6. doi:10.1186/s13326-019-0198-0

10. Adler NE, Cutler DM, Fielding JE, et al. Addressing social determinants of health and health disparities: a vital direction for health and health care. Discussion Paper. NAM Perspectives. National Academy of Medicine, Washington, DC. doi:10.31478/201609t

11. Cottrell EK, Dambrun K, Cowburn S, et al. Variation in electronic health record documentation of social determinants of health across a national network of community health centers. Am J Prev Med. 2019;57(6):S65-S73. doi:10.1016/j.amepre.2019.07.014

12. Committee on the Recommended Social and Behavioral Domains and Measures for Electronic Health Records, Board on Population Health and Public Health Practice, Institute of Medicine. Capturing Social and Behavioral Domains and Measures in Electronic Health Records: Phase 2. National Academies Press (US); 2015.

13. Gottlieb L, Tobey R, Cantor J, Hessler D, Adler NE. Integrating social and medical data to improve population health: opportunities and barriers. Health Aff (Millwood). 2016;35(11):2116-2123. doi:10.1377/hlthaff.2016.0723

14. Centers for Medicare and Medicaid Services, Office of Minority Health. Z codes utilization among Medicare fee-for-service (FFS) beneficiaries in 2017. Published January 2020. Accessed December 8, 2020. https://www.cms.gov/files/document/cms-omh-january2020-zcode-data-highlightpdf.pdf

15. Kharrazi H, Wang C, Scharfstein D. Prospective EHR-based clinical trials: the challenge of missing data. J Gen Intern Med. 2014;29(7):976-978. doi:10.1007/s11606-014-2883-0

16. Weiskopf NG, Weng C. Methods and dimensions of electronic health record data quality assessment: enabling reuse for clinical research. J Am Med Inform Assoc. 2013;20(1):144-151. doi:10.1136/amiajnl-2011-000681

17. Anzaldi LJ, Davison A, Boyd CM, Leff B, Kharrazi H. Comparing clinician descriptions of frailty and geriatric syndromes using electronic health records: a retrospective cohort study. BMC Geriatr. 2017;17(1):248. doi:10.1186/s12877-017-0645-7

18. Chen T, Dredze M, Weiner JP, Kharrazi H. Identifying vulnerable older adult populations by contextualizing geriatric syndrome information in clinical notes of electronic health records. J Am Med Inform Assoc. 2019;26(8-9):787-795. doi:10.1093/jamia/ocz093

19. Raphael E, Gaynes R, Hamad R. Cross-sectional analysis of place-based and racial disparities in hospitalisation rates by disease category in California in 2001 and 2011. BMJ Open. 2019;9(10):e031556. doi:10.1136/bmjopen-2019-031556

Social determinants of health (SDoH) are social, economic, environmental, and occupational factors that are known to influence an individual’s health care utilization and clinical outcomes.1,2 Because the Veterans Health Administration (VHA) is charged to address both the medical and nonmedical needs of the veteran population, it is increasingly interested in the impact SDoH have on veteran care.3,4 To combat the adverse impact of such factors, the VHA has implemented several large-scale programs across the US that focus on prevalent SDoH, such as homelessness, substance abuse, and alcohol use disorders.5,6 While such risk factors are generally universal in their distribution, variation across regions, between urban and rural spaces, and even within cities has been shown to exist in private settings.7 Understanding such variability potentially could be helpful to US Department of Veterans Affairs (VA) policymakers and leaders to better allocate funding and resources to address such issues.

Although previous work has highlighted regional and neighborhood-level variability of SDoH, no study has examined the facility-level variability of commonly encountered social risk factors within the VHA.4,8 The aim of this study was to describe the interfacility variation of 5 common SDoH known to influence health and health outcomes among a national cohort of veterans hospitalized for common medical issues by using administrative data.

Methods

We used a national cohort of veterans aged ≥ 65 years who were hospitalized at a VHA acute care facility with a primary discharge diagnosis of acute myocardial infarction (AMI), heart failure (HF), or pneumonia in 2012. These conditions were chosen because they are publicly reported and frequently used for interfacility comparison.

Using the International Classification of Diseases–9th Revision (ICD-9) and VHA clinical stop codes, we calculated the median documented proportion of patients with any of the following 5 SDoH: lived alone, marginal housing, alcohol use disorder, substance use disorder, and use of substance use services for patients presenting with HF, MI, and pneumonia (Table). These SDoH were chosen because they are intervenable risk factors for which the VHA has several programs (eg, homeless outreach, substance abuse, and tobacco cessation). To examine the variability of these SDoH across VHA facilities, we determined the number of hospitals that had a sufficient number of admissions (≥ 50) to be included in the analyses. We then examined the administratively documented, facility-level variation in the proportion of individuals with any of the 5 SDoH administrative codes and examined the distribution of their use across all qualifying facilities.

Because variability may be due to regional coding differences, we examined the difference in the estimated prevalence of the risk factor lives alone by using a previously developed natural language processing (NLP) program.9 The NLP program is a rule-based system designed to automatically extract information that requires inferencing from clinical notes (eg, discharge summaries and nursing, social work, emergency department physician, primary care, and hospital admission notes). For instance, the program identifies whether there was direct or indirect evidence that the patient did or did not live alone. In addition to extracting data on lives alone, the NLP program has the capacity to extract information on lack of social support and living alone—2 characteristics without VHA interventions, which were not examined here. The NLP program was developed and evaluated using at least 1 year of notes prior to index hospitalization. Because this program was developed and validated on a 2012 data set, we were limited to using a cohort from this year as well.

All analyses were conducted using SAS Version 9.4. The San Francisco VA Medical Center Institutional Review Board approved this study.

Results

In total, 21,991 patients with either HF (9,853), pneumonia (9,362), or AMI (2,776) were identified across 91 VHA facilities. The majority were male (98%) and had a median (SD) age of 77.0 (9.0) years. The median facility-level proportion of veterans who had any of the SDoH risk factors extracted through administrative codes was low across all conditions, ranging from 0.5 to 2.2%. The most prevalent factors among patients admitted for HF, AMI, and pneumonia were lives alone (2.0% [Interquartile range (IQR), 1.0-5.2], 1.4% [IQR, 0-3.4], and 1.9% [IQR, 0.7-5.4]), substance use disorder (1.2% [IQR, 0-2.2], 1.6% [IQR: 0-3.0], and 1.3% [IQR, 0-2.2] and use of substance use services (0.9% [IQR, 0-1.6%], 1.0% [IQR, 0-1.7%], and 1.6% [IQR, 0-2.2%], respectively [Table]).

When utilizing the NLP algorithm, the documented prevalence of lives alone in the free text of the medical record was higher than administrative coding across all conditions (12.3% vs. 2.2%; P < .01). Among each of the 3 assessed conditions, HF (14.4% vs 2.0%, P < .01) had higher levels of lives alone compared with pneumonia (11% vs 1.9%, P < .01), and AMI (10.2% vs 1.4%, P < .01) when using the NLP algorithm. When we examined the documented facility-level variation in the proportion of individuals with any of the 5 SDoH administrative codes or NLP, we found large variability across all facilities—regardless of extraction method (Figure).

Discussion

While SDoH are known to impact health outcomes, the presence of these risk factors in administrative data among individuals hospitalized for common medical issues is low and variable across VHA facilities. Understanding the documented, facility-level variability of these measures may assist the VHA in determining how it invests time and resources—as different facilities may disproportionately serve a higher number of vulnerable individuals. Beyond the VHA, these findings have generalizable lessons for the US health care system, which has come to recognize how these risk factors impact patients’ health.10

Although the proportion of individuals with any of the assessed SDoH identified by administrative data was low, our findings are in line with recent studies that showed other risk factors such as social isolation (0.65%), housing issues (0.19%), and financial strain (0.07%) had similarly low prevalence.8,11 Although the exact prevalence of such factors remains unclear, these findings highlight that SDoH do not appear to be well documented in administrative data. Low coding rates are likely due to the fact that SDoH administrative codes are not tied to financial reimbursement—thus not incentivizing their use by clinicians or hospital systems.

In 2014, an Institute of Medicine report suggested that collection of SDoH in electronic health data as a means to better empower clinicians and health care systems to address social disparities and further support research in SDoH.12 Since then, data collection using SDoH screening tools has become more common across settings, but is not consistently translated to standardized data due to lack of industry consensus and technical barriers.13 To improve this process, the Centers for Medicare and Medicaid Services created “z-codes” for the ICD-10 classification system—a subset of codes that are meant to better capture patients’ underlying social risk.14 It remains to be seen if such administrative codes have improved the documentation of SDoH.

As health care systems have grown to understand the impact of SDoH on health outcomes,other means of collecting these data have evolved.1,10 For example, NLP-based extraction methods and electronic screening tools have been proposed and utilized as alternative for obtaining this information. Our findings suggest that some of these measures (eg, lives alone) often may be documented as part of routine care in the electronic health record, thus highlighting NLP as a tool to obtain such data. However, other studies using NLP technology to extract SDoH have shown this technology is often complicated by quality issues (ie, missing data), complex methods, and poor integration with current information technology infrastructures—thus limiting its use in health care delivery.15-18

While variance among SDoH across a national health care system is natural, it remains an important systems-level characteristic that health care leaders and policymakers should appreciate. As health care systems disperse financial resources and initiate quality improvement initiatives to address SDoH, knowing that not all facilities are equally affected by SDoH should impact allocation of such resources and energies. Although previous work has highlighted regional and neighborhood levels of variation within the VHA and other health care systems, to our knowledge, this is the first study to examine variability at the facility-level within the VHA.2,4,13,19

Limitations

There are several limitations to this study. First, though our findings are in line with previous data in other health care systems, generalizability beyond the VA, which primarily cares for older, male patients, may be limited.8 Though, as the nation’s largest health care system, lessons from the VHA can still be useful for other health care systems as they consider SDoH variation. Second, among the many SDoH previously identified to impact health, our analysis only focused on 5 such variables. Administrative and medical record documentation of other SDoH may be more common and less variable across institutions. Third, while our data suggests facility-level variation in these measures, this may be in part related to variation in coding across facilities. However, the single SDoH variable extracted using NLP also varied at the facility-level, suggesting that coding may not entirely drive the variation observed.

Conclusions

As US health care systems continue to address SDoH, our findings highlight the various challenges in obtaining accurate data on a patient’s social risk. Moreover, these findings highlight the large variability that exists among institutions in a national integrated health care system. Future work should explore the prevalence and variance of other SDoH as a means to help guide resource allocation and prioritize spending to better address SDoH where it is most needed.

Acknowledgments

This work was supported by NHLBI R01 RO1 HL116522-01A1. Support for VA/CMS data is provided by the US Department of Veterans Affairs, Veterans Health Administration, Office of Research and Development, Health Services Research and Development, VA Information Resource Center (Project Numbers SDR 02-237 and 98-004).

Social determinants of health (SDoH) are social, economic, environmental, and occupational factors that are known to influence an individual’s health care utilization and clinical outcomes.1,2 Because the Veterans Health Administration (VHA) is charged to address both the medical and nonmedical needs of the veteran population, it is increasingly interested in the impact SDoH have on veteran care.3,4 To combat the adverse impact of such factors, the VHA has implemented several large-scale programs across the US that focus on prevalent SDoH, such as homelessness, substance abuse, and alcohol use disorders.5,6 While such risk factors are generally universal in their distribution, variation across regions, between urban and rural spaces, and even within cities has been shown to exist in private settings.7 Understanding such variability potentially could be helpful to US Department of Veterans Affairs (VA) policymakers and leaders to better allocate funding and resources to address such issues.

Although previous work has highlighted regional and neighborhood-level variability of SDoH, no study has examined the facility-level variability of commonly encountered social risk factors within the VHA.4,8 The aim of this study was to describe the interfacility variation of 5 common SDoH known to influence health and health outcomes among a national cohort of veterans hospitalized for common medical issues by using administrative data.

Methods

We used a national cohort of veterans aged ≥ 65 years who were hospitalized at a VHA acute care facility with a primary discharge diagnosis of acute myocardial infarction (AMI), heart failure (HF), or pneumonia in 2012. These conditions were chosen because they are publicly reported and frequently used for interfacility comparison.

Using the International Classification of Diseases–9th Revision (ICD-9) and VHA clinical stop codes, we calculated the median documented proportion of patients with any of the following 5 SDoH: lived alone, marginal housing, alcohol use disorder, substance use disorder, and use of substance use services for patients presenting with HF, MI, and pneumonia (Table). These SDoH were chosen because they are intervenable risk factors for which the VHA has several programs (eg, homeless outreach, substance abuse, and tobacco cessation). To examine the variability of these SDoH across VHA facilities, we determined the number of hospitals that had a sufficient number of admissions (≥ 50) to be included in the analyses. We then examined the administratively documented, facility-level variation in the proportion of individuals with any of the 5 SDoH administrative codes and examined the distribution of their use across all qualifying facilities.

Because variability may be due to regional coding differences, we examined the difference in the estimated prevalence of the risk factor lives alone by using a previously developed natural language processing (NLP) program.9 The NLP program is a rule-based system designed to automatically extract information that requires inferencing from clinical notes (eg, discharge summaries and nursing, social work, emergency department physician, primary care, and hospital admission notes). For instance, the program identifies whether there was direct or indirect evidence that the patient did or did not live alone. In addition to extracting data on lives alone, the NLP program has the capacity to extract information on lack of social support and living alone—2 characteristics without VHA interventions, which were not examined here. The NLP program was developed and evaluated using at least 1 year of notes prior to index hospitalization. Because this program was developed and validated on a 2012 data set, we were limited to using a cohort from this year as well.

All analyses were conducted using SAS Version 9.4. The San Francisco VA Medical Center Institutional Review Board approved this study.

Results

In total, 21,991 patients with either HF (9,853), pneumonia (9,362), or AMI (2,776) were identified across 91 VHA facilities. The majority were male (98%) and had a median (SD) age of 77.0 (9.0) years. The median facility-level proportion of veterans who had any of the SDoH risk factors extracted through administrative codes was low across all conditions, ranging from 0.5 to 2.2%. The most prevalent factors among patients admitted for HF, AMI, and pneumonia were lives alone (2.0% [Interquartile range (IQR), 1.0-5.2], 1.4% [IQR, 0-3.4], and 1.9% [IQR, 0.7-5.4]), substance use disorder (1.2% [IQR, 0-2.2], 1.6% [IQR: 0-3.0], and 1.3% [IQR, 0-2.2] and use of substance use services (0.9% [IQR, 0-1.6%], 1.0% [IQR, 0-1.7%], and 1.6% [IQR, 0-2.2%], respectively [Table]).

When utilizing the NLP algorithm, the documented prevalence of lives alone in the free text of the medical record was higher than administrative coding across all conditions (12.3% vs. 2.2%; P < .01). Among each of the 3 assessed conditions, HF (14.4% vs 2.0%, P < .01) had higher levels of lives alone compared with pneumonia (11% vs 1.9%, P < .01), and AMI (10.2% vs 1.4%, P < .01) when using the NLP algorithm. When we examined the documented facility-level variation in the proportion of individuals with any of the 5 SDoH administrative codes or NLP, we found large variability across all facilities—regardless of extraction method (Figure).

Discussion

While SDoH are known to impact health outcomes, the presence of these risk factors in administrative data among individuals hospitalized for common medical issues is low and variable across VHA facilities. Understanding the documented, facility-level variability of these measures may assist the VHA in determining how it invests time and resources—as different facilities may disproportionately serve a higher number of vulnerable individuals. Beyond the VHA, these findings have generalizable lessons for the US health care system, which has come to recognize how these risk factors impact patients’ health.10

Although the proportion of individuals with any of the assessed SDoH identified by administrative data was low, our findings are in line with recent studies that showed other risk factors such as social isolation (0.65%), housing issues (0.19%), and financial strain (0.07%) had similarly low prevalence.8,11 Although the exact prevalence of such factors remains unclear, these findings highlight that SDoH do not appear to be well documented in administrative data. Low coding rates are likely due to the fact that SDoH administrative codes are not tied to financial reimbursement—thus not incentivizing their use by clinicians or hospital systems.

In 2014, an Institute of Medicine report recommended collecting SDoH in electronic health data as a means to better empower clinicians and health care systems to address social disparities and to further support SDoH research.12 Since then, data collection using SDoH screening tools has become more common across settings. However, these data are not consistently translated into standardized data because of a lack of industry consensus and technical barriers.13 To improve this process, the Centers for Medicare and Medicaid Services created Z codes for the ICD-10 classification system, a subset of codes meant to better capture patients’ underlying social risk.14 It remains to be seen whether such administrative codes have improved the documentation of SDoH.

As health care systems have come to understand the impact of SDoH on health outcomes, other means of collecting these data have evolved.1,10 For example, NLP-based extraction methods and electronic screening tools have been proposed and used as alternative ways of obtaining this information. Our findings suggest that some of these measures (eg, living alone) may often be documented as part of routine care in the electronic health record, highlighting NLP as a tool for obtaining such data. However, other studies using NLP to extract SDoH have shown that this technology is often complicated by data quality issues (eg, missing data), complex methods, and poor integration with current information technology infrastructures, which limits its use in health care delivery.15-18

While variation in SDoH across a national health care system is expected, it remains an important systems-level characteristic that health care leaders and policymakers should appreciate. As health care systems distribute financial resources and launch quality improvement initiatives to address SDoH, recognizing that not all facilities are equally affected by SDoH should inform how those resources and efforts are allocated. Although previous work has highlighted regional and neighborhood-level variation within the VHA and other health care systems, to our knowledge, this is the first study to examine variability at the facility level within the VHA.2,4,13,19

Limitations

There are several limitations to this study. First, although our findings are in line with previous data from other health care systems, generalizability beyond the VA, which primarily cares for older male patients, may be limited.8 However, as the nation’s largest integrated health care system, the VHA can still offer useful lessons to other health care systems as they consider SDoH variation. Second, among the many SDoH previously identified as influencing health, our analysis focused on only 5. Administrative and medical record documentation of other SDoH may be more common and less variable across institutions. Third, while our data suggest facility-level variation in these measures, this variation may partly reflect differences in coding practices across facilities. However, the single SDoH variable extracted using NLP (living alone) also varied at the facility level, suggesting that coding alone may not drive the observed variation.

Conclusions

As US health care systems continue to address SDoH, our findings highlight the challenges of obtaining accurate data on patients’ social risk. They also show the large variability that exists among institutions within a national integrated health care system. Future work should explore the prevalence and variance of other SDoH to help guide resource allocation and prioritize spending where it is most needed.

Acknowledgments

This work was supported by NHLBI R01 HL116522-01A1. Support for VA/CMS data is provided by the US Department of Veterans Affairs, Veterans Health Administration, Office of Research and Development, Health Services Research and Development, VA Information Resource Center (Project Numbers SDR 02-237 and 98-004).

1. Social determinants of health (SDOH). https://catalyst.nejm.org/doi/full/10.1056/CAT.17.0312. Published December 1, 2017. Accessed December 8, 2020.

2. Hatef E, Searle KM, Predmore Z, et al. The Impact of Social Determinants of Health on hospitalization in the Veterans Health Administration. Am J Prev Med. 2019;56(6):811-818. doi:10.1016/j.amepre.2018.12.012

3. Lushniak BD, Alley DE, Ulin B, Graffunder C. The National Prevention Strategy: leveraging multiple sectors to improve population health. Am J Public Health. 2015;105(2):229-231. doi:10.2105/AJPH.2014.302257

4. Nelson K, Schwartz G, Hernandez S, Simonetti J, Curtis I, Fihn SD. The association between neighborhood environment and mortality: results from a national study of veterans. J Gen Intern Med. 2017;32(4):416-422. doi:10.1007/s11606-016-3905-x

5. Gundlapalli AV, Redd A, Bolton D, et al. Patient-aligned care team engagement to connect veterans experiencing homelessness with appropriate health care. Med Care. 2017;55 Suppl 9 Suppl 2:S104-S110. doi:10.1097/MLR.0000000000000770

6. Rash CJ, DePhilippis D. Considerations for implementing contingency management in substance abuse treatment clinics: the Veterans Affairs initiative as a model. Perspect Behav Sci. 2019;42(3):479-499. doi:10.1007/s40614-019-00204-3

7. Ompad DC, Galea S, Caiaffa WT, Vlahov D. Social determinants of the health of urban populations: methodologic considerations. J Urban Health. 2007;84(3 Suppl):i42-i53. doi:10.1007/s11524-007-9168-4

8. Hatef E, Rouhizadeh M, Tia I, et al. Assessing the availability of data on social and behavioral determinants in structured and unstructured electronic health records: a retrospective analysis of a multilevel health care system. JMIR Med Inform. 2019;7(3):e13802. doi:10.2196/13802

9. Conway M, Keyhani S, Christensen L, et al. Moonstone: a novel natural language processing system for inferring social risk from clinical narratives. J Biomed Semantics. 2019;10(1):6. doi:10.1186/s13326-019-0198-0

10. Adler NE, Cutler DM, Fielding JE, et al. Addressing social determinants of health and health disparities: a vital direction for health and health care. Discussion Paper. NAM Perspectives. National Academy of Medicine, Washington, DC. doi:10.31478/201609t

11. Cottrell EK, Dambrun K, Cowburn S, et al. Variation in electronic health record documentation of social determinants of health across a national network of community health centers. Am J Prev Med. 2019;57(6):S65-S73. doi:10.1016/j.amepre.2019.07.014

12. Committee on the Recommended Social and Behavioral Domains and Measures for Electronic Health Records, Board on Population Health and Public Health Practice, Institute of Medicine. Capturing Social and Behavioral Domains and Measures in Electronic Health Records: Phase 2. National Academies Press (US); 2015.

13. Gottlieb L, Tobey R, Cantor J, Hessler D, Adler NE. Integrating Social And Medical Data To Improve Population Health: Opportunities And Barriers. Health Aff (Millwood). 2016;35(11):2116-2123. doi:10.1377/hlthaff.2016.0723

14. Centers for Medicare and Medicaid Services, Office of Minority Health. Z codes utilization among medicare fee-for-service (FFS) beneficiaries in 2017. Published January 2020. Accessed December 8, 2020. https://www.cms.gov/files/document/cms-omh-january2020-zcode-data-highlightpdf.pdf

15. Kharrazi H, Wang C, Scharfstein D. Prospective EHR-based clinical trials: the challenge of missing data. J Gen Intern Med. 2014;29(7):976-978. doi:10.1007/s11606-014-2883-0

16. Weiskopf NG, Weng C. Methods and dimensions of electronic health record data quality assessment: enabling reuse for clinical research. J Am Med Inform Assoc. 2013;20(1):144-151. doi:10.1136/amiajnl-2011-000681

17. Anzaldi LJ, Davison A, Boyd CM, Leff B, Kharrazi H. Comparing clinician descriptions of frailty and geriatric syndromes using electronic health records: a retrospective cohort study. BMC Geriatr. 2017;17(1):248. doi:10.1186/s12877-017-0645-7

18. Chen T, Dredze M, Weiner JP, Kharrazi H. Identifying vulnerable older adult populations by contextualizing geriatric syndrome information in clinical notes of electronic health records. J Am Med Inform Assoc. 2019;26(8-9):787-795. doi:10.1093/jamia/ocz093

19. Raphael E, Gaynes R, Hamad R. Cross-sectional analysis of place-based and racial disparities in hospitalisation rates by disease category in California in 2001 and 2011. BMJ Open. 2019;9(10):e031556. doi:10.1136/bmjopen-2019-031556

Examining the Utility of 30-day Readmission Rates and Hospital Profiling in the Veterans Health Administration

Using methodology created by the Centers for Medicare & Medicaid Services (CMS), the Department of Veterans Affairs (VA) calculates and reports hospital performance measures for several key conditions, including acute myocardial infarction (AMI), heart failure (HF), and pneumonia.1 These measures are designed to benchmark individual hospitals against the expected performance of an average hospital caring for patients with a similar case mix. Because readmissions to the hospital within 30 days of discharge are common and costly, this metric has garnered extensive attention in recent years.

To summarize the 30-day readmission metric, the VA uses the Strategic Analytics for Improvement and Learning (SAIL) system to present its findings internally to VA practitioners and leadership.2 The VA provides these data to drive quality improvement and to allow comparison of individual hospitals’ performance across measures throughout the VA healthcare system. Since 2010, the VA has used and publicly reported the CMS-derived 30-day Risk-Standardized Readmission Rate (RSRR) on the Hospital Compare website.3 Like CMS, the VA uses three years of combined data so that patients, providers, and other stakeholders can compare individual hospitals’ performance across these measures.1 In response, hospitals and healthcare organizations have implemented quality improvement and large-scale programmatic interventions in an attempt to reduce readmissions.4-6 A recent assessment of how hospitals within the Medicare fee-for-service program have responded to such reporting found large degrees of variability, with more than half of the participating institutions facing penalties for greater-than-expected readmission rates.5 Although the VA uses the same CMS-derived model in its assessments and reporting, the variability and distribution around this metric are not publicly reported. This makes it difficult to ascertain how individual VA hospitals compare with one another. Without such information, individual facilities may not know how to benchmark the quality of their care against others, nor can the VA recognize which interventions addressing readmissions are working and which are not. Although previous assessments of interinstitutional variance have been performed in Medicare populations,7 a focused analysis of such variance within the VA has yet to be performed.

In this study, we performed a multiyear assessment of the CMS-derived 30-day RSRR metric for AMI, HF, and pneumonia. Our aim was to evaluate, based on the metric's ability to detect interfacility variability, whether it is a useful measure for driving VA quality improvement or distinguishing VA facility performance.

METHODS

Data Source

We used VA administrative and Medicare claims data from 2010 to 2012. After identifying index hospitalizations to VA hospitals, we obtained patients’ respective inpatient Medicare claims data from the Medicare Provider Analysis and Review (MedPAR) and Outpatient files. All Medicare records were linked to VA records via scrambled Social Security numbers and were provided by the VA Information Resource Center. This study was approved by the San Francisco VA Medical Center Institutional Review Board.

Study Sample

Our cohort consisted of hospitalized VA beneficiaries enrolled in Medicare fee-for-service who were aged ≥65 years and were admitted to and discharged from a VA acute care center with a primary discharge diagnosis of AMI, HF, or pneumonia. These conditions were chosen because they are publicly reported and frequently used for interfacility comparisons. Because prior work has found that including secondary payer data (ie, CMS data) may affect hospital-profiling outcomes, we included Medicare data on all available patients.8 We excluded hospitalizations that resulted in a transfer to another acute care facility and those admitted to observation status at their index admission. To ensure a full year of data for risk adjustment, beneficiaries were included only if they were enrolled in Medicare for the 12 months before and including the date of the index admission.

Index hospitalizations were first identified using VA-only inpatient data, similar to methods outlined by CMS and endorsed by the National Quality Forum for hospital profiling.9 An index hospitalization was defined as an acute inpatient discharge between 2010 and 2012 in which the principal diagnosis was AMI, HF, or pneumonia. We excluded in-hospital deaths, discharges against medical advice, and, for the AMI cohort only, discharges on the same day as admission. Patients could have multiple admissions per year, but only admissions occurring more than 30 days after discharge from an index admission were eligible to be included as additional index admissions.
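The index-admission rules above can be sketched as a single pass over each patient's admissions in date order. This is a minimal sketch that rests on several assumptions:

- The record fields are hypothetical, not VA or CMS variable names.
- The 30-day window is read as "admission date more than 30 days after the prior index discharge date."
- The window is applied across all three conditions for a given patient. A strictly condition-specific cohort would key on patient and condition instead.
- Transfers and observation stays, which the Study Sample excludes, are assumed to have been removed upstream.

```python
from dataclasses import dataclass
from datetime import date, timedelta


# Hypothetical record layout; field names are illustrative only.
@dataclass(frozen=True)
class Admission:
    patient_id: str
    admit: date
    discharge: date
    principal_dx: str              # "AMI", "HF", or "PNA" (illustrative labels)
    died_in_hospital: bool = False
    left_ama: bool = False         # discharged against medical advice


STUDY_START, STUDY_END = date(2010, 1, 1), date(2012, 12, 31)
TARGET_DX = {"AMI", "HF", "PNA"}
WINDOW = timedelta(days=30)


def is_candidate(a: Admission) -> bool:
    """Apply the cohort exclusions described above to a single admission."""
    if a.principal_dx not in TARGET_DX:
        return False
    if not (STUDY_START <= a.discharge <= STUDY_END):
        return False
    if a.died_in_hospital or a.left_ama:
        return False
    if a.principal_dx == "AMI" and a.admit == a.discharge:
        return False  # same-day AMI discharges are excluded
    return True


def select_index_admissions(admissions):
    """Keep candidate admissions that start more than 30 days after the
    previous index discharge for the same patient."""
    index, last_index_discharge = [], {}
    for a in sorted(admissions, key=lambda x: (x.patient_id, x.admit)):
        if not is_candidate(a):
            continue
        previous = last_index_discharge.get(a.patient_id)
        if previous is not None and a.admit <= previous + WINDOW:
            continue  # inside the 30-day window of an earlier index stay
        index.append(a)
        last_index_discharge[a.patient_id] = a.discharge
    return index
```

An admission skipped because it falls inside the window does not reset the window. The next eligible index stay is therefore measured from the last *index* discharge, not from the most recent discharge.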

Outcomes

A readmission was defined as any unplanned rehospitalization to either non-VA or VA acute care facilities for any cause within 30 days of discharge from the index hospitalization. Readmissions to observation status or to nonacute or rehabilitation units, such as skilled nursing facilities, were not included. Planned readmissions for elective procedures, such as elective chemotherapy and revascularization following an AMI index admission, were not counted as outcome events.
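The outcome definition can be expressed as a small predicate. This is a minimal sketch, assuming that each later stay already carries flags for acute care, observation status, and planned status. Identifying planned readmissions is itself a separate algorithm and is not reproduced here. Counting readmissions from day 0 through day 30 after discharge is an assumption about the window boundary, and the `Stay` layout is hypothetical.

```python
from dataclasses import dataclass
from datetime import date


# Hypothetical layout for a later stay. VA and non-VA stays are treated
# identically, because the outcome counts readmissions to either setting.
@dataclass(frozen=True)
class Stay:
    admit: date
    acute_care: bool    # False for skilled nursing, rehabilitation, other nonacute units
    observation: bool   # True for observation-status stays
    planned: bool       # True if flagged as planned (eg, elective chemotherapy)


def readmitted_within_30_days(index_discharge: date, later_stays, window_days: int = 30) -> bool:
    """Return True if any later stay is an unplanned acute readmission within the window."""
    for stay in later_stays:
        days_after = (stay.admit - index_discharge).days
        if not 0 <= days_after <= window_days:
            continue  # outside the 30-day window
        if stay.acute_care and not stay.observation and not stay.planned:
            return True
    return False
```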

Risk Standardization for 30-day Readmission

Using approaches developed by CMS,10-12 we calculated hospital-specific 30-day RSRRs for each VA hospital. Briefly, the RSRR is the ratio of the number of predicted readmissions within 30 days of discharge to the expected number of readmissions within 30 days of discharge, multiplied by the national unadjusted 30-day readmission rate. The measure is calculated using hierarchical logistic regression models, which account for the clustering of patients within hospitals and risk-adjust for differences in case mix during the assessed time periods.13 This approach simultaneously models two levels (patient and hospital) to account for variance in patient outcomes within and between hospitals.14 At the patient level, the model uses the log odds of readmission as the dependent variable and age and selected comorbidities as the independent variables. The second level models the hospital-specific intercepts. In accordance with CMS guidelines, the analysis was limited to facilities with at least 25 patient admissions annually for each condition. All readmissions were attributed to the hospital that initially discharged the patient to a nonacute setting.
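The predicted-to-expected ratio can be illustrated with a short sketch. Assume a hierarchical logistic model has already been fitted, so that each index admission i at hospital j has a covariate score β·x_ij (age and comorbidities, excluding the intercept). Predicted readmissions then sum the inverse-logit of α_j + β·x_ij using the hospital's own intercept α_j. Expected readmissions sum the inverse-logit of μ + β·x_ij using the average hospital intercept μ. The RSRR is predicted / expected × the national unadjusted rate. Model fitting and interval estimation are not shown, and the function and argument names are illustrative.

```python
import math


def inv_logit(z: float) -> float:
    """Convert a log-odds value to a probability."""
    return 1.0 / (1.0 + math.exp(-z))


def rsrr(covariate_scores, hospital_intercept, average_intercept, national_rate):
    """Risk-standardized readmission rate for one hospital.

    covariate_scores: beta'x for each index admission at this hospital
    (the fixed-effect part of the linear predictor, excluding the intercept).
    """
    # "Predicted": this hospital's patients, treated at this hospital.
    predicted = sum(inv_logit(hospital_intercept + s) for s in covariate_scores)
    # "Expected": the same patients, treated at an average hospital.
    expected = sum(inv_logit(average_intercept + s) for s in covariate_scores)
    return predicted / expected * national_rate


scores = [0.2, -0.4, 0.9, 0.0]  # hypothetical covariate scores
print(round(rsrr(scores, -1.2, -1.4, 0.20), 3))
```

Because both sums use the same patients, case mix cancels in the ratio. A hospital whose intercept equals the average intercept receives an RSRR equal to the national rate.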

Analysis

We examined and reported the distribution of patient and clinical characteristics at the hospital level. For each condition, we determined the number of hospitals that had a sufficient number of admissions (n ≥ 25) to be included in the analyses. We calculated the mean, median, and interquartile range for the observed unadjusted readmission rates across all included hospitals.
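The volume check and descriptive summary can be sketched as below. The hospital names and yearly counts are hypothetical. The sketch pools admissions over one, two, or three years before applying the 25-admission threshold. This is one way to see how combining years changes the number of hospitals eligible for profiling.

```python
from statistics import mean, median

MIN_ADMISSIONS = 25  # CMS volume threshold used in this study

# Hypothetical (index admissions, 30-day readmissions) per hospital and year, one condition.
VOLUMES = {
    "H1": {2010: (30, 6), 2011: (28, 5), 2012: (31, 7)},
    "H2": {2010: (9, 2), 2011: (10, 1), 2012: (12, 3)},
    "H3": {2010: (15, 3), 2011: (11, 2), 2012: (14, 2)},
}


def pooled_counts(by_year, years):
    """Sum admissions and readmissions over the requested years."""
    admissions = sum(by_year.get(y, (0, 0))[0] for y in years)
    readmissions = sum(by_year.get(y, (0, 0))[1] for y in years)
    return admissions, readmissions


def observed_rates(volumes, years, min_admissions=MIN_ADMISSIONS):
    """Unadjusted readmission rate for each hospital meeting the volume threshold."""
    rates = {}
    for hospital, by_year in volumes.items():
        admissions, readmissions = pooled_counts(by_year, years)
        if admissions >= min_admissions:
            rates[hospital] = readmissions / admissions
    return rates


for years in ([2012], [2011, 2012], [2010, 2011, 2012]):
    rates = observed_rates(VOLUMES, years)
    values = list(rates.values())
    print(f"{len(years)} year(s): {len(rates)} eligible hospital(s), "
          f"mean {mean(values):.3f}, median {median(values):.3f}")
```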

Using methods similar to those of CMS, we assessed hospital quality and variation in facility performance in the VA. First, we calculated the 30-day RSRRs using one year (2012) of data. To assess how variability changed with higher facility volume (ie, more years included in the analysis), we also calculated the 30-day RSRRs using two and three years of data. For each period, we identified and quantified the number of hospitals whose RSRRs were above or below the national VA average (mean ± 95% CI). Specifically, we calculated the number and percentage of hospitals classified as either above (+95% CI) or below (−95% CI) the national average for all three time periods. All analyses were conducted using SAS Enterprise Guide, Version 7.1. The SAS statistical packages made available by the CMS Measure Team were used to calculate RSRRs.
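One reading of the above/below-average criterion is that a hospital counts as an outlier only when its entire 95% interval estimate lies on one side of the national rate. The sketch below assumes the interval bounds are already available (how they are estimated is not shown). The hospital labels are hypothetical.

```python
from collections import Counter
from enum import Enum


class Performance(Enum):
    WORSE = "worse than national average"
    NO_DIFFERENT = "no different from national average"
    BETTER = "better than national average"


def classify(lower: float, upper: float, national_rate: float) -> Performance:
    """Classify a hospital from the bounds of its RSRR 95% interval estimate."""
    if lower > national_rate:
        return Performance.WORSE         # whole interval lies above the national rate
    if upper < national_rate:
        return Performance.BETTER        # whole interval lies below the national rate
    return Performance.NO_DIFFERENT      # interval overlaps the national rate


def tally(intervals, national_rate):
    """Count and percentage of hospitals in each category.

    intervals: dict of hospital -> (lower, upper) interval bounds.
    """
    counts = Counter(classify(lo, hi, national_rate) for lo, hi in intervals.values())
    total = len(intervals)
    return {cat: (counts[cat], 100.0 * counts[cat] / total) for cat in Performance}
```

Under this rule, a hospital with a wide interval (as low-volume hospitals tend to have) almost always overlaps the national rate. It is then classified as no different, whatever its point estimate.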

RESULTS

Patient Characteristics

Patients were predominantly older men (98.3% male). Among those hospitalized for AMI, most had a history of coronary artery bypass grafting (CABG; 69.1%), acute coronary syndrome (ACS; 66.2%), or documented coronary atherosclerosis (89.8%). Similarly, patients admitted for HF had high rates of prior CABG (71.3%) and HF (94.6%), in addition to cardiac arrhythmias (69.3%) and diabetes (60.8%). Patients admitted with pneumonia had high rates of prior CABG (61.9%), chronic obstructive pulmonary disease (COPD; 58.1%), and previous pneumonia (78.8%; Table 1). Patient characteristics for two and three years of data are presented in Supplementary Table 1.

VA Hospitals with Sufficient Volume to Be Included in Profiling Assessments

There were 146 acute care hospitals in the VA. In 2012, 56 (38%) VA hospitals had at least 25 admissions for AMI, 102 (70%) had at least 25 admissions for HF, and 106 (73%) had at least 25 admissions for pneumonia (Table 1). These hospitals therefore qualified for analysis under CMS criteria for 30-day RSRR calculation. The study sample included 3,571 patients with AMI, 10,609 patients with HF, and 10,191 patients with pneumonia.

30-Day Readmission Rates

The mean observed readmission rates in 2012 were 20% (95% CI, 19%-21%) among patients admitted for AMI, 20% (95% CI, 19%-20%) among those admitted for HF, and 15% (95% CI, 15%-16%) among those admitted for pneumonia. Risk standardization did not reveal significant variation from these rates across hospitals (Table 2). Observed and risk-standardized rates were also calculated using two and three years of data (Supplementary Table 2). They did not differ substantially from those calculated using a single year of data.

In 2012, two hospitals (2%) exhibited HF RSRRs worse than the national average (+95% CI), whereas no hospital demonstrated worse-than-average rates (+95% CI) for AMI or pneumonia (Table 3, Figure 1). Similarly, in 2012, only three hospitals had RSRRs better than the national average (−95% CI) for HF and pneumonia.

We combined data from three years to increase the volume of admissions per hospital. Even after combining three years of data across all three conditions, only four hospitals (range: 3.5%-5.3%) had RSRRs worse than the national average (+95% CI). However, four (5.3%), eight (7.1%), and 11 (9.7%) VA hospitals had RSRRs better than the national average (−95% CI).

DISCUSSION

We found that the CMS-derived 30-day risk-standardized readmission metric for AMI, HF, and pneumonia showed little variation among VA hospitals. The low admission volume at individual institutions appears to be a fundamental limitation, one that requires multiple years of data before this metric becomes clinically meaningful. As the largest integrated healthcare system in the United States, the VA relies on such performance data to make large-scale programmatic decisions. The inability to detect meaningful interhospital variation in a timely manner suggests that the CMS-derived 30-day RSRR may not be a sensitive metric for distinguishing facility performance or driving quality improvement initiatives within the VA.

First, we found it notable that among the 146 VA medical centers available for analysis,15 only 38% to 77% of hospitals qualified for evaluation under CMS-based participation criteria, which exclude institutions with fewer than 25 episodes per year. This low rate of qualification for profiling was most dramatic with one year of data (range: 38%-72%), and it did not improve substantially when we combined three years of data (range: 52%-77%). These findings highlight the population and system differences between CMS and VA populations16 and further support the idea that CMS-derived models may not be optimized for use in the VA healthcare system.

Our findings are particularly relevant within the VA given that these data are reported quarterly in the VA SAIL scorecard.2 The VA designed SAIL for internal benchmarking, to spotlight successful strategies of top-performing institutions and to promote high-quality, value-based care. Our analysis using one year of data, the minimum required to apply CMS models, showed that few hospitals could be distinguished from the mean (±95% CI). This suggests that quarterly feedback (ie, three months of data) may not be informative or useful. The capacity to distinguish between high and low performers improves when hospital admissions are combined over three years, but this is not a reasonable timeline for institutions to wait for quality comparisons. Furthermore, although the VA presents its data on CMS’s Hospital Compare website using three years of combined data, the variability and distribution of these results are not supplied.3

This lack of discriminability raises concerns about the ability to compare hospital performance between low- and high-volume institutions. These models function well in CMS settings with large patient volumes, in which greater variability exists,5 but they lose their capacity to discriminate when applied to low-volume settings such as the VA. Given that many hospitals in the US are small community hospitals with low patient volumes,17 this issue likely also occurs in non-VA settings. Although our study focused on the VA, others have compared variation and distribution in VA and non-VA settings. For example, Nuti et al. examined 30-day RSRRs among patients hospitalized with AMI, HF, and pneumonia. They similarly found little variation, narrow distributions, and few outliers in the VA setting compared with the non-VA setting.14 For institutions with small patient volumes, including the VA, a focus on high-volume services, outcomes, and measures (eg, blood pressure control, medication reconciliation) may offer more discriminability between high- and low-performing facilities. For example, Patel et al. found that VA process measures in patients with HF (ie, beta-blocker and ACE-inhibitor use) can serve as valid quality measures. These measures exhibited consistent reliability over time and validity with respect to adjusted mortality rates, whereas the 30-day RSRR did not.18
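The volume argument can be made concrete with simple arithmetic. As a rough sketch only, the normal-approximation (Wald) 95% interval for an observed 20% readmission rate has a half-width of 1.96 × sqrt(0.2 × 0.8 / n). This is not the hierarchical-model interval used for RSRRs. Hierarchical models also tend to shrink low-volume hospitals' estimates toward the overall mean, which makes outliers even harder to detect. At n = 25, 100, and 400 admissions, the half-width is about 15.7, 7.8, and 3.9 percentage points, so quadrupling volume only halves the uncertainty.

```python
import math


def wald_half_width(rate: float, n: int, z: float = 1.96) -> float:
    """Half-width of a normal-approximation 95% interval for a proportion."""
    return z * math.sqrt(rate * (1.0 - rate) / n)


# Hypothetical volumes; 25 is the CMS minimum used in this study.
for n in (25, 100, 400):
    half = wald_half_width(0.20, n)
    print(f"n={n}: 20% +/- {100 * half:.1f} percentage points")
```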

Our findings may have substantial financial, resource, and policy implications. Automatically developing and reporting measures created for the Medicare program may not be a good use of VA resources. In addition, facilities may react to these reported outcomes by expending local resources and funds on interventions. Those interventions would target a performance outcome that is statistically no different from that of the vast majority of their comparators. Such events have been highlighted in the public media, which has pointed out that small changes in quality, or statistical errors themselves, can have large ramifications within the VA’s hospital rating system.19

These findings may also add to the discussion on whether public reporting of health and quality outcomes improves patient care. Since CMS began publicly reporting RSRRs in 2009, these rates have fallen for all three examined conditions (AMI, HF, and pneumonia),7,20,21 in addition to several other health outcomes.17 Recent studies have suggested that these decreases have been driven by the CMS-sponsored Hospital Readmissions Reduction Program (HRRP).22 Others have suggested that they are consistent with ongoing secular trends toward fewer readmissions and may not be fully explained by public reporting alone.23 Moreover, prior work has found that readmissions may be strongly influenced by factors external to the hospital setting, such as patients’ social circumstances (eg, household income, social isolation), that are not currently captured in risk-prediction models.24 Given the small variability in our data, public reporting within the VA is probably not beneficial, as only a small number of facilities are outliers based on RSRR.

Our study has several limitations. First, although we adapted the CMS model to the VA, we did not include gender in the model because >99% of all patient admissions were male. Second, we assessed only three medical conditions that were tracked by both CMS and the VA during this time period. These outcomes may not be representative of other aspects of care and cannot be generalized to other medical conditions. Finally, more contemporary data could lead to different results, although we note that no large-scale structural or policy changes addressing readmission rates have been implemented within the VA since our study period.

The results of this study suggest that the CMS-derived 30-day risk-standardized readmission metric for AMI, HF, and pneumonia may lack the capacity to detect interfacility variance and thus may not be an optimal quality indicator within the VA. As the VA and other healthcare systems strive to improve the quality of care they provide, they will require more accurate and timely metrics against which to measure their performance.

Disclosures

The authors have nothing to disclose.

1. Centers for Medicare & Medicaid Services. VA Data. https://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/HospitalQualityInits/VA-Data.html. Published October 19, 2016. Accessed July 15, 2018.

2. Strategic Analytics for Improvement and Learning (SAIL) - Quality of Care. https://www.va.gov/QUALITYOFCARE/measure-up/Strategic_Analytics_for_Improvement_and_Learning_SAIL.asp. Accessed July 15, 2018.

3. Snapshot. https://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/HospitalQualityInits/VA-Data.html. Accessed September 10, 2018.

4. Bradley EH, Curry L, Horwitz LI, et al. Hospital strategies associated with 30-day readmission rates for patients with heart failure. Circ Cardiovasc Qual Outcomes. 2013;6(4):444-450. doi: 10.1161/CIRCOUTCOMES.111.000101. PubMed

5. Desai NR, Ross JS, Kwon JY, et al. Association between hospital penalty status under the hospital readmission reduction program and readmission rates for target and nontarget conditions. JAMA. 2016;316(24):2647-2656. doi: 10.1001/jama.2016.18533. PubMed

6. McIlvennan CK, Eapen ZJ, Allen LA. Hospital readmissions reduction program. Circulation. 2015;131(20):1796-1803. doi: 10.1161/CIRCULATIONAHA.114.010270. PubMed

7. Suter LG, Li S-X, Grady JN, et al. National patterns of risk-standardized mortality and readmission after hospitalization for acute myocardial infarction, heart failure, and pneumonia: update on publicly reported outcomes measures based on the 2013 release. J Gen Intern Med. 2014;29(10):1333-1340. doi: 10.1007/s11606-014-2862-5. PubMed

8. O’Brien WJ, Chen Q, Mull HJ, et al. What is the value of adding Medicare data in estimating VA hospital readmission rates? Health Serv Res. 2015;50(1):40-57. doi: 10.1111/1475-6773.12207. PubMed

9. NQF: All-Cause Admissions and Readmissions 2015-2017 Technical Report. https://www.qualityforum.org/Publications/2017/04/All-Cause_Admissions_and_Readmissions_2015-2017_Technical_Report.aspx. Accessed August 2, 2018.

10. Keenan PS, Normand S-LT, Lin Z, et al. An administrative claims measure suitable for profiling hospital performance on the basis of 30-day all-cause readmission rates among patients with heart failure. Circ Cardiovasc Qual Outcomes. 2008;1(1):29-37. doi: 10.1161/CIRCOUTCOMES.108.802686.

11. Krumholz HM, Lin Z, Drye EE, et al. An administrative claims measure suitable for profiling hospital performance based on 30-day all-cause readmission rates among patients with acute myocardial infarction. Circ Cardiovasc Qual Outcomes. 2011;4(2):243-252. doi: 10.1161/CIRCOUTCOMES.110.957498.

12. Lindenauer PK, Normand S-LT, Drye EE, et al. Development, validation, and results of a measure of 30-day readmission following hospitalization for pneumonia. J Hosp Med. 2011;6(3):142-150. doi: 10.1002/jhm.890.

13. Centers for Medicare & Medicaid Services. Outcome Measures. https://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/HospitalQualityInits/OutcomeMeasures.html. Published October 13, 2017. Accessed July 19, 2018.

14. Nuti SV, Qin L, Rumsfeld JS, et al. Association of admission to Veterans Affairs hospitals vs non-Veterans Affairs hospitals with mortality and readmission rates among older men hospitalized with acute myocardial infarction, heart failure, or pneumonia. JAMA. 2016;315(6):582-592. doi: 10.1001/jama.2016.0278.

15. VA Web Solutions. Veterans Health Administration - Locations. https://www.va.gov/directory/guide/division.asp?dnum=1. Accessed September 13, 2018.

16. Duan-Porter W, Martinson BC, Taylor B, et al. Evidence Review: Social Determinants of Health for Veterans. Washington (DC): Department of Veterans Affairs (US); 2017. http://www.ncbi.nlm.nih.gov/books/NBK488134/. Accessed June 13, 2018.

17. Fast Facts on U.S. Hospitals, 2018. American Hospital Association. https://www.aha.org/statistics/fast-facts-us-hospitals. Accessed September 5, 2018.

18. Patel J, Sandhu A, Parizo J, Moayedi Y, Fonarow GC, Heidenreich PA. Validity of performance and outcome measures for heart failure. Circ Heart Fail. 2018;11(9):e005035.

19. Philipps D. Canceled Operations. Unsterile Tools. The V.A. Gave This Hospital 5 Stars. The New York Times. https://www.nytimes.com/2018/11/01/us/veterans-hospitals-rating-system-star.html. Published November 3, 2018. Accessed November 19, 2018.

20. DeVore AD, Hammill BG, Hardy NC, Eapen ZJ, Peterson ED, Hernandez AF. Has public reporting of hospital readmission rates affected patient outcomes?: Analysis of Medicare claims data. J Am Coll Cardiol. 2016;67(8):963-972. doi: 10.1016/j.jacc.2015.12.037.

21. Wasfy JH, Zigler CM, Choirat C, Wang Y, Dominici F, Yeh RW. Readmission rates after passage of the hospital readmissions reduction program: a pre-post analysis. Ann Intern Med. 2017;166(5):324-331. doi: 10.7326/M16-0185.

22. Centers for Medicare & Medicaid Services. Hospital Readmission Reduction Program. https://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/Value-Based-Programs/HRRP/Hospital-Readmission-Reduction-Program.html. Published March 26, 2018. Accessed July 19, 2018.

23. Radford MJ. Does public reporting improve care? J Am Coll Cardiol. 2016;67(8):973-975. doi: 10.1016/j.jacc.2015.12.038.

24. Barnett ML, Hsu J, McWilliams JM. Patient characteristics and differences in hospital readmission rates. JAMA Intern Med. 2015;175(11):1803-1812. doi: 10.1001/jamainternmed.2015.4660.

Using methodology created by the Centers for Medicare & Medicaid Services (CMS), the Department of Veterans Affairs (VA) calculates and reports hospital performance measures for several key conditions, including acute myocardial infarction (AMI), heart failure (HF), and pneumonia.1 These measures are designed to benchmark individual hospitals against the performance of an average hospital caring for patients with a similar case mix. Because readmissions within 30 days of hospital discharge are common and costly, the 30-day readmission metric has garnered extensive attention in recent years.

To summarize the 30-day readmission metric, the VA uses the Strategic Analytics for Improvement and Learning (SAIL) system to present its findings internally to VA practitioners and leadership.2 The VA provides these data to drive quality improvement and to allow comparison of individual hospitals’ performance across measures throughout the VA healthcare system. Since 2010, the VA has used and publicly reported the CMS-derived 30-day risk-standardized readmission rate (RSRR) on the Hospital Compare website.3 Similar to CMS, the VA uses three years of combined data so that patients, providers, and other stakeholders can compare individual hospitals’ performance across these measures.1 In response, hospitals and healthcare organizations have implemented quality improvement and large-scale programmatic interventions in an attempt to improve readmission outcomes.4-6 A recent assessment of how hospitals in the Medicare fee-for-service program have responded to such reporting found a large degree of variability, with more than half of the participating institutions facing penalties for greater-than-expected readmission rates.5 Although the VA uses the same CMS-derived model in its assessments and reporting, the variability and distribution of this metric are not publicly reported, making it difficult to ascertain how individual VA hospitals compare with one another. Without such information, individual facilities cannot readily benchmark the quality of their care against that of others, nor can the VA recognize which interventions addressing readmissions are working and which are not. Although interinstitutional variance has previously been assessed in Medicare populations,7 a focused analysis of such variance within the VA has yet to be performed.

In this study, we performed a multiyear assessment of the CMS-derived 30-day RSRR metric for AMI, HF, and pneumonia to evaluate, based on its ability to detect interfacility variability, whether it is a useful measure for driving VA quality improvement or distinguishing VA facility performance.

METHODS

Data Source

We used VA administrative and Medicare claims data from 2010 to 2012. After identifying index hospitalizations at VA hospitals, we obtained the corresponding Medicare claims for these patients from the Medicare Provider Analysis and Review (MedPAR) and Outpatient files. All Medicare records were linked to VA records via scrambled Social Security numbers and were provided by the VA Information Resource Center. This study was approved by the San Francisco VA Medical Center Institutional Review Board.
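The record linkage described above amounts to an exact join on a shared, already-scrambled identifier. The Python sketch below illustrates that step under stated assumptions: the class and field names (`VaStay`, `MedicareClaim`, `scrambled_id`) are hypothetical, and no actual VA Information Resource Center file layout is implied.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class VaStay:
    scrambled_id: str  # hypothetical: scrambled SSN supplied with the VA record
    facility: str
    admit_date: str


@dataclass(frozen=True)
class MedicareClaim:
    scrambled_id: str  # hypothetical: the same scrambled SSN supplied with the claim
    source_file: str   # e.g. "MedPAR" or "Outpatient"
    service_date: str


def link_claims(stays, claims):
    """Pair each VA stay with every Medicare claim that shares its scrambled identifier."""
    claims_by_id = defaultdict(list)
    for claim in claims:
        claims_by_id[claim.scrambled_id].append(claim)
    # .get() does not trigger the default factory, so unmatched patients get a fresh empty list
    return [(stay, claims_by_id.get(stay.scrambled_id, [])) for stay in stays]


stays = [VaStay("x91", "VA-A", "2012-03-01"), VaStay("k07", "VA-B", "2012-05-10")]
claims = [MedicareClaim("x91", "MedPAR", "2012-03-20")]
linked = link_claims(stays, claims)
```

Patients without any Medicare claim are kept with an empty list rather than dropped, so the later enrollment and readmission checks can decide their eligibility explicitly.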

Study Sample

Our cohort consisted of hospitalized patients who were VA beneficiaries enrolled in Medicare fee-for-service, were aged ≥65 years, and were admitted to and discharged from a VA acute care center with a primary discharge diagnosis of AMI, HF, or pneumonia. These conditions were chosen because they are publicly reported and frequently used for interfacility comparisons. Because studies have found that inclusion of secondary payer data (ie, CMS data) may affect hospital-profiling outcomes, we included Medicare data on all available patients.8 We excluded hospitalizations that resulted in a transfer to another acute care facility and those admitted to observation status at their index admission. To ensure a full year of data for risk adjustment, beneficiaries were included only if they were enrolled in Medicare for the 12 months prior to and including the date of the index admission.
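The inclusion and exclusion criteria above can be read as a single predicate over each hospitalization. The sketch below is a hedged illustration, not the study's code: it assumes the principal ICD-9 diagnosis has already been mapped to a condition label, and the field `ffs_months_through_admit` (months of continuous fee-for-service enrollment up to and including the admission) is hypothetical.

```python
from dataclasses import dataclass

TARGET_CONDITIONS = {"AMI", "HF", "pneumonia"}


@dataclass
class Hospitalization:
    age: int
    principal_condition: str       # assumed already mapped from the principal ICD-9 diagnosis
    transferred_to_acute: bool     # discharged to another acute care facility
    observation_status: bool       # index stay was an observation admission
    ffs_months_through_admit: int  # hypothetical: continuous Medicare FFS months through admission


def in_cohort(h: Hospitalization) -> bool:
    """Apply the age, diagnosis, transfer, observation, and enrollment criteria."""
    return (
        h.age >= 65
        and h.principal_condition in TARGET_CONDITIONS
        and not h.transferred_to_acute
        and not h.observation_status
        and h.ffs_months_through_admit >= 12
    )
```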

Index hospitalizations were first identified using VA-only inpatient data, similar to methods outlined by CMS and endorsed by the National Quality Forum for hospital profiling.9 An index hospitalization was defined as an acute inpatient discharge between 2010 and 2012 in which the principal diagnosis was AMI, HF, or pneumonia. We excluded in-hospital deaths, discharges against medical advice, and, for the AMI cohort only, discharges on the same day as admission. Patients could have multiple admissions per year, but only admissions occurring more than 30 days after discharge from an index admission were eligible to be included as additional index admissions.
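The index-selection rules above can be sketched as a single pass over each patient's stays in date order. This is an illustration under assumptions: the `Stay` fields are hypothetical, and the 30-day window is tracked separately per patient and condition on the assumption that each condition cohort is built independently.

```python
from dataclasses import dataclass
from datetime import date, timedelta

STUDY_START, STUDY_END = date(2010, 1, 1), date(2012, 12, 31)


@dataclass
class Stay:
    patient_id: str
    condition: str  # "AMI", "HF", or "pneumonia" (principal diagnosis)
    admit_date: date
    discharge_date: date
    died_in_hospital: bool = False
    left_ama: bool = False  # discharged against medical advice


def passes_exclusions(s: Stay) -> bool:
    if s.died_in_hospital or s.left_ama:
        return False
    if s.condition == "AMI" and s.discharge_date == s.admit_date:
        return False  # same-day discharges are excluded for the AMI cohort only
    return STUDY_START <= s.discharge_date <= STUDY_END


def select_index_stays(stays):
    """Keep eligible stays; a later stay is a new index only if admitted >30 days after the prior index discharge."""
    index = []
    last_index_discharge = {}  # (patient_id, condition) -> discharge date of the latest index stay
    for s in sorted(stays, key=lambda x: (x.patient_id, x.condition, x.admit_date)):
        if not passes_exclusions(s):
            continue
        key = (s.patient_id, s.condition)
        prev = last_index_discharge.get(key)
        if prev is not None and s.admit_date - prev <= timedelta(days=30):
            continue  # falls inside an earlier index stay's 30-day window
        index.append(s)
        last_index_discharge[key] = s.discharge_date
    return index
```

Only index discharges anchor a window; a stay skipped because it fell inside a window does not start a new one.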

Outcomes

A readmission was defined as any unplanned, all-cause rehospitalization to a VA or non-VA acute care facility within 30 days of discharge from the index hospitalization. Readmissions to observation status or to nonacute or rehabilitation units, such as skilled nursing facilities, were not included. Planned readmissions for elective procedures, such as elective chemotherapy and revascularization following an AMI index admission, were not counted as outcome events.
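The outcome definition above can be expressed as a small check against each later admission. In this sketch, the `planned` flag is assumed to be set upstream (the text does not specify how planned procedures were identified). Counting admissions that begin 1 to 30 days after discharge, and so leaving same-day returns out, is also an assumption.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class LaterAdmission:
    admit_date: date
    acute_care: bool   # VA or non-VA acute care hospital (False for SNF or rehabilitation units)
    observation: bool  # observation stays do not count
    planned: bool      # assumed flagged upstream, e.g. elective chemotherapy or revascularization


def is_readmitted(index_discharge: date, later_admissions) -> bool:
    """True if any unplanned, acute, non-observation admission starts 1-30 days after discharge."""
    for a in later_admissions:
        days_after = (a.admit_date - index_discharge).days
        if 1 <= days_after <= 30 and a.acute_care and not a.observation and not a.planned:
            return True
    return False
```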

Risk Standardization for 30-day Readmission

Using approaches developed by CMS,10-12 we calculated hospital-specific 30-day RSRRs for each VA hospital. Briefly, the RSRR is the ratio of the number of predicted readmissions within 30 days of discharge to the expected number of readmissions within 30 days of discharge, multiplied by the national unadjusted 30-day readmission rate. This measure calculates hospital-specific RSRRs using hierarchical logistic regression models, which account for the clustering of patients within hospitals and adjust for differences in case mix during the assessed time periods.13 This approach simultaneously models two levels (patient and hospital) to account for the variance in patient outcomes within and between hospitals.14 At the patient level, the model uses the log odds of readmission as the dependent variable and age and selected comorbidities as the independent variables. The second level models the hospital-specific intercepts. Consistent with CMS guidelines, the analysis was limited to facilities with at least 25 patient admissions annually for each condition. All readmissions were attributed to the hospital that initially discharged the patient to a nonacute setting.
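The predicted-to-expected ratio above can be made concrete once the hierarchical model has been fitted. In the CMS approach, the predicted count for hospital j sums each patient's modeled readmission probability using that hospital's own estimated intercept. The expected count uses the average intercept across hospitals instead. Their ratio is then scaled by the national unadjusted rate. The Python sketch below assumes the fitted coefficients and intercepts are already available (the study obtained them with the CMS SAS packages). The function and parameter names are hypothetical, and any numbers passed in are purely illustrative.

```python
import math


def inv_logit(z: float) -> float:
    """Convert log odds to a probability."""
    return 1.0 / (1.0 + math.exp(-z))


def rsrr(patient_covariates, beta, hospital_intercept, mean_intercept, national_rate):
    """
    patient_covariates: covariate vectors (age, comorbidities) for one hospital's index stays
    beta: fitted patient-level coefficients
    hospital_intercept: this hospital's estimated intercept (alpha_j)
    mean_intercept: average intercept across all hospitals (mu)
    national_rate: national unadjusted 30-day readmission rate
    """
    linear = [sum(b * x for b, x in zip(beta, xs)) for xs in patient_covariates]
    predicted = sum(inv_logit(hospital_intercept + lp) for lp in linear)  # this hospital's effect
    expected = sum(inv_logit(mean_intercept + lp) for lp in linear)       # average hospital's effect
    return predicted / expected * national_rate
```

A hospital whose estimated intercept equals the average intercept receives an RSRR equal to the national rate. A higher intercept pushes the ratio, and therefore the RSRR, above it.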

Analysis

We examined and reported the distribution of patient and clinical characteristics at the hospital level. For each condition, we determined the number of hospitals that had a sufficient number of admissions (n ≥ 25) to be included in the analyses. We calculated the mean, median, and interquartile range for the observed unadjusted readmission rates across all included hospitals.
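The facility-level summary above can be sketched as follows. The input format (a mapping from hospital to index-admission and readmission counts) is an assumption. Quartiles come from Python's `statistics.quantiles` with its default method, which may differ slightly from the percentile definition SAS uses.

```python
import statistics

MIN_ADMISSIONS = 25  # CMS volume threshold per condition


def observed_rate_summary(counts):
    """counts: dict hospital -> (index_admissions, readmissions). Summarizes eligible hospitals only."""
    rates = [r / n for n, r in counts.values() if n >= MIN_ADMISSIONS]
    q1, _, q3 = statistics.quantiles(rates, n=4)  # first and third quartile cut points
    return {
        "hospitals": len(rates),
        "mean": statistics.mean(rates),
        "median": statistics.median(rates),
        "q1_q3": (q1, q3),
    }
```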

Similar to methods used by CMS, we used one year of data in the VA to assess hospital quality and variation in facility performance. First, we calculated the 30-day RSRRs using one year (2012) of data. To assess how variability changed with higher facility volume (ie, more years included in the analysis), we also calculated the 30-day RSRRs using two and three years of data. For each analysis, we identified the hospitals whose RSRRs were above or below the national VA average (mean ± 95% CI). Specifically, we calculated the number and percentage of hospitals classified as either above (+95% CI) or below (−95% CI) the national average using data from all three time periods. All analyses were conducted using SAS Enterprise Guide, Version 7.1. The SAS statistical packages made available by the CMS Measure Team were used to calculate RSRRs.
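The above/below classification can be illustrated as follows. The text describes the comparison only as mean ± 95% CI, so this sketch assumes the CMS-style rule: a hospital is flagged only when its entire RSRR 95% interval estimate lies on one side of the national rate. How the interval estimates are produced is not shown, and the function names are hypothetical.

```python
from collections import Counter


def classify(lower: float, upper: float, national_rate: float) -> str:
    """Compare a hospital's RSRR 95% interval estimate with the national rate."""
    if lower > national_rate:
        return "worse"   # entire interval lies above the national average
    if upper < national_rate:
        return "better"  # entire interval lies below the national average
    return "no different"


def tally(intervals, national_rate):
    """intervals: dict hospital -> (lower, upper). Returns (count, percent of hospitals) per category."""
    counts = Counter(classify(lo, hi, national_rate) for lo, hi in intervals.values())
    total = len(intervals)
    return {category: (n, 100 * n / total) for category, n in counts.items()}
```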

RESULTS

Patient Characteristics

Patients were predominantly male (98.3%). Among those hospitalized for AMI, most had a history of coronary artery bypass grafting (CABG; 69.1%), acute coronary syndrome (ACS; 66.2%), or documented coronary atherosclerosis (89.8%). Similarly, patients admitted for HF had high rates of prior CABG (71.3%) and HF (94.6%), in addition to cardiac arrhythmias (69.3%) and diabetes (60.8%). Patients admitted with a diagnosis of pneumonia had high rates of prior CABG (61.9%), chronic obstructive pulmonary disease (COPD; 58.1%), and previous pneumonia (78.8%; Table 1). Patient characteristics for two and three years of data are presented in Supplementary Table 1.

VA Hospitals with Sufficient Volume to Be Included in Profiling Assessments

There were 146 acute care hospitals in the VA. In 2012, 56 (38%) VA hospitals had at least 25 admissions for AMI, 102 (70%) had at least 25 admissions for HF, and 106 (73%) had at least 25 admissions for pneumonia (Table 1), and these hospitals therefore qualified for analysis under the CMS criteria for 30-day RSRR calculation. The study sample included 3,571 patients with AMI, 10,609 patients with HF, and 10,191 patients with pneumonia.
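The percentages above follow from dividing each qualifying count by the 146 VA acute care hospitals. This short check reproduces them from the reported counts:

```python
TOTAL_VA_ACUTE_HOSPITALS = 146
qualifying = {"AMI": 56, "HF": 102, "pneumonia": 106}  # hospitals with >= 25 admissions in 2012

shares = {c: round(100 * n / TOTAL_VA_ACUTE_HOSPITALS) for c, n in qualifying.items()}
print(shares)  # {'AMI': 38, 'HF': 70, 'pneumonia': 73}
```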

30-Day Readmission Rates

The mean observed readmission rates in 2012 were 20% (95% CI 19%-21%) among patients admitted for AMI, 20% (95% CI 19%-20%) among patients admitted with HF, and 15% (95% CI 15%-16%) among patients admitted with pneumonia. After risk standardization, rates across hospitals did not vary significantly from these values (Table 2). Observed and risk-standardized rates were also calculated for two and three years of data (Supplementary Table 2) and did not differ substantially from those calculated using a single year of data.

In 2012, two hospitals (2%) had HF RSRRs worse than the national average (+95% CI), whereas no hospital had worse-than-average rates (+95% CI) for AMI or pneumonia (Table 3, Figure 1). Likewise, in 2012, only three hospitals had RSRRs better than the national average (−95% CI) for HF and pneumonia.

We combined data from three years to increase the volume of admissions per hospital. Even after combining three years of data, no more than four hospitals per condition (range: 3.5%-5.3%) had RSRRs worse than the national average (+95% CI). By contrast, four (5.3%), eight (7.1%), and 11 (9.7%) VA hospitals had RSRRs better than the national average (−95% CI) for AMI, HF, and pneumonia, respectively.

DISCUSSION

We found that the CMS-derived 30-day risk-standardized readmission metric for AMI, HF, and pneumonia showed little variation among VA hospitals. Low facility-level 30-day readmission volume appears to be a fundamental limitation, requiring multiple years of data to make this metric clinically meaningful. As the largest integrated healthcare system in the United States, the VA relies upon such performance data and makes large-scale programmatic decisions based on them. The inability to detect meaningful interhospital variation in a timely manner suggests that the CMS-derived 30-day RSRR may not be a sensitive metric for distinguishing facility performance or driving quality improvement initiatives within the VA.
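To see why low volume limits this metric, a rough calculation helps. The sketch below uses a simple binomial (Wald) approximation, which is not the hierarchical model behind the RSRR. It also ignores that model's shrinkage of low-volume hospitals toward the overall mean. The 20% rate mirrors the observed 2012 AMI and HF rates, and 25 admissions is the CMS minimum. Treating three pooled years as exactly triple that volume is an illustrative assumption.

```python
import math


def wald_half_width(rate: float, n: int, z: float = 1.96) -> float:
    """Half-width of a normal-approximation 95% CI for an observed proportion."""
    return z * math.sqrt(rate * (1 - rate) / n)


one_year = wald_half_width(0.20, 25)     # smallest volume eligible for profiling
three_years = wald_half_width(0.20, 75)  # the same annual volume pooled over three years
```

At 25 admissions, the interval spans roughly ±16 percentage points around a 20% rate. Tripling the volume narrows it only by a factor of √3, to about ±9 points. Pooling years therefore helps, but slowly.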