
The Virtual Hospitalist: The Future is Now

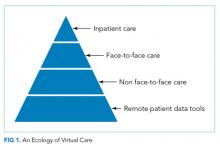

Compared with other industries, medicine has been slow to embrace the digital age. Electronic health records have only recently become ubiquitous, and only after governmental prodding through Meaningful Use legislation. Other digital tools, such as video and remote sensor technologies, have been available for decades but were not introduced into routine medical care until recently, for reasons ranging from cost to security to reimbursement rules. However, we are now in the midst of a paradigm shift in medicine toward virtual care, as exemplified by the 2017 announcement by Kaiser Permanente’s CEO that this capitated care system had moved over half of its 100 million annual patient encounters to the virtual environment.1

Regulation, at both the state and federal levels, has been the largest barrier to the adoption of virtual care. State licensure regulations for practicing medicine hamper virtual visits, which could otherwise easily be conducted without regard to geography. Although the Centers for Medicare & Medicaid Services (CMS) has had provisions for telehealth billing, these have been largely limited to rural areas. However, regulations are constantly evolving. The Interstate Medical Licensure Compact (www.imlcc.org) is an agreement among 24 states that permits licensed physicians to practice medicine across state lines. CMS has also recently proposed adding payments for virtual check-in visits, which would not be subject to the prior limitations on Medicare telehealth services.2 These and future regulatory changes will likely spur the rapid adoption and evolution of virtual services.

In this context, the article by Kuperman et al.3 offers a welcome view of the future of hospital medicine. The authors demonstrated the feasibility of using a “virtual hospitalist” to manage patients admitted to a small rural hospital that lacked the patient volume and resources to justify on-site hospitalist staffing. Patients benefited from the clinical expertise of an experienced inpatient provider while staying near their homes. This article adds to the growing literature on the use of these technologies in hospital settings, ranging from the management of patients in the intensive care unit4 to the care of stroke patients in the emergency department (ED)5 and inpatient psychiatric consultation.6

What are the implications for hospitalists? We need to prepare current and future generations of hospitalists to practice in an evolving digital environment. “Choosing Wisely®: Things We Do For No Reason” is one of the most popular segments of JHM for good reason: many things in medicine are done simply because “that’s the way we always did it.” The capabilities unleashed by digital technologies will require hospitalists to rethink how we manage patients in acute and subacute settings and after discharge. Although these tools show substantial promise for helping us achieve the Triple Aim, considerably more research is needed to understand the costs and effectiveness of these new digital technologies and approaches.7,8 We also need new payment models that recognize their value. Finally, we need to be aware that essential elements of doctoring, such as human touch, physical presence, and emotional connection, can be encumbered rather than enhanced by digital technologies.9

Disclosures

Dr. Ong and Dr. Brotman have nothing to disclose.

1. Why digital transformations are hard. Wall Street Journal. March 7, 2017.

2. Medicare Program: Revisions to Payment Policies under the Physician Fee Schedule and Other Revisions to Part B for CY 2019; Medicare Shared Savings Program Requirements; etc. In: Centers for Medicare & Medicaid Services, ed: Federal Register; 2018:1472.

3. Kuperman EF, Linson EL, Klefstad K, Perry E, Glenn K. The virtual hospitalist: a single-site implementation bringing hospitalist coverage to critical access hospitals. J Hosp Med. 2018. In press.

4. Lilly CM, Cody S, Zhao H, et al. Hospital mortality, length of stay, and preventable complications among critically ill patients before and after tele-ICU reengineering of critical care processes. JAMA. 2011;305(21):2175-2183. doi: 10.1001/jama.2011.697.

5. Meyer BC, Raman R, Hemmen T, et al. Efficacy of site-independent telemedicine in the STRokE DOC trial: a randomised, blinded, prospective study. Lancet Neurol. 2008;7(9):787-795. doi: 10.1016/S1474-4422(08)70171-6.

6. Arevian AC, Jeffrey J, Young AS, Ong MK. Opportunities for flexible, on-demand care delivery through telemedicine. Psychiatr Serv. 2018;69(1):5-8. doi: 10.1176/appi.ps.201600589.

7. Ashwood JS, Mehrotra A, Cowling D, Uscher-Pines L. Direct-to-consumer telehealth may increase access to care but does not decrease spending. Health Aff (Millwood). 2017;36(3):485-491. doi: 10.1377/hlthaff.2016.1130.

8. Ong MK, Romano PS, Edgington S, et al. Effectiveness of remote patient monitoring after discharge of hospitalized patients with heart failure: the Better Effectiveness After Transition -- Heart Failure (BEAT-HF) randomized clinical trial. JAMA Intern Med. 2016;176(3):310-318. doi: 10.1001/jamainternmed.2015.7712.

9. Verghese A. Culture shock--patient as icon, icon as patient. N Engl J Med. 2008;359(26):2748-2751. doi: 10.1056/NEJMp0807461.

© 2018 Society of Hospital Medicine

Improving Patient Satisfaction

INTRODUCTION

Patient experience and satisfaction are intrinsically valued, as strong physician‐patient communication, empathy, and patient comfort require little justification. Beyond this intrinsic value, studies have shown that patient satisfaction is associated with better health outcomes and greater compliance.[1, 2, 3] A systematic review of studies linking patient satisfaction to outcomes found that patient experience is positively associated with patient safety, clinical effectiveness, health outcomes, adherence, and lower resource utilization.[4] Of the 378 associations between patient experience and health outcomes examined in that review, 312 were positive.[4] However, not all studies have shown a positive association between patient satisfaction and outcomes.

Regardless of the strength of this evidence, hospitals now have a financial incentive to improve patient satisfaction, because the Centers for Medicare & Medicaid Services (CMS) has introduced Hospital Value‐Based Purchasing. CMS began withholding a share of Medicare Severity Diagnosis‐Related Group payments, starting at 1.0% in 2013 and 1.25% in 2014 and increasing to 2.0% in 2017. This money is redistributed based on performance on core quality measures, including patient satisfaction as measured by the Hospital Consumer Assessment of Healthcare Providers and Systems (HCAHPS) survey.[5]

Various studies have evaluated interventions to improve patient satisfaction, but to our knowledge, none published in a peer‐reviewed research journal has shown a significant improvement in HCAHPS scores.[6, 7, 8, 9, 10, 11, 12] Levinson et al. argue that physician communication skills should be taught during residency and that individualized feedback is an effective way for physicians to track their progress over time and relative to their peers.[13] We therefore aimed to evaluate an intervention to improve patient satisfaction that was designed by the Patient Affairs Department of Ronald Reagan University of California, Los Angeles (UCLA) Medical Center (RRUCLAMC) and the UCLA Department of Medicine.

METHODOLOGY

Design Overview

The intervention for internal medicine (IM) residents consisted of an educational conference on improving physician‐patient communication, frequent individualized patient feedback, and an incentive program, all in addition to existing patient satisfaction training. Its effect was measured by comparing the change in HCAHPS scores from before to after the intervention in the Department of Medicine with the corresponding change in the rest of the hospital and in national averages.

Setting and Participants

The study setting was RRUCLAMC, a large university‐affiliated academic medical center. The internal medicine (IM) residents and patients in the Department of Medicine formed the intervention cohort. Residents in all other departments involved in direct adult patient care, and their patients, formed the control cohort. Our intervention targeted resident physicians because they provided the majority of direct patient care at RRUCLAMC. Residents are in house 24 hours a day, are the first line of contact for nurses and patients, and provide the most continuity: attendings often rotate every 1 to 2 weeks, whereas residents are on service for at least 2 to 4 weeks per rotation. IM residents staff all inpatient general medicine, critical care, and cardiology services at RRUCLAMC, which does not have a nonteaching service for adult IM patients.

Interventions

Since 2006, RRUCLAMC has run a program called Assessing Residents' CICARE (ARC). CICARE is an acronym for UCLA's patient communication model and training elements (Connect with patients, Introduce yourself and role, Communicate, Ask and anticipate, Respond, Exit courteously). In the ARC program, trained undergraduate student volunteers administer an optional, anonymous survey to hospitalized patients about the communication skills of specific resident physicians (see Supporting Information, Appendix A, in the online version of this article). Patients were randomly selected for the ARC and HCAHPS surveys, but selection was done separately for each survey, so some patients may have completed both. On average, residents received feedback from 7 to 10 patients a year.

The volunteers show patients a picture of each resident physician assigned to their care to confirm the resident's identity. The volunteer then asks 18 multiple‐choice questions about the resident's physician‐patient communication skills, and patients are also asked for general comments about the resident.[14] Patients were interviewed in private hospital rooms by ARC volunteers. No information linking the patient to the survey is recorded. Survey data are entered into a database, and each resident is assigned a code that links them to their patient feedback. These survey results and comments are sent weekly to the residency program directors. However, a review of this practice revealed that residents reviewed the results with their program director only semiannually.

Starting in December 2011, ARC survey results were e‐mailed directly to interns and residents in the Department of Medicine in real time while they were on the general medicine wards and the cardiology inpatient service at RRUCLAMC. Residents in other departments at RRUCLAMC continued to review patient feedback with their program directors at most semiannually. Real‐time feedback continued through June 2012 and had to be stopped in July 2012 because many of the CICARE volunteers were away on summer break.

Starting in January 2012, IM residents who stood out on the ARC survey received a Commendation of Excellence. Each month, 3 residents were selected for this award based on their patient comments, provided they had over 90% overall satisfaction on the survey questions. These residents received department‐wide recognition via e‐mail and a movie package (2 movie tickets, popcorn, and a drink) as a reward.

In January 2012, a 1‐hour lunchtime conference was held for IM residents to discuss best practices in physician‐patient communication, upcoming changes under Hospital Value‐Based Purchasing, and the Department of Medicine's strengths and weaknesses in patient communication. About 50% of the IM residents in the intervention arm were unable to attend this session, so the educational component did not reach all residents.

Outcomes

We analyzed the impact of the intervention by comparing HCAHPS results before and after it. HCAHPS is a standardized national survey of patients' perspectives on their hospital care, administered after discharge. The survey addresses communication with doctors and nurses, responsiveness of hospital staff, pain management, communication about medicines, discharge information, and the cleanliness and quietness of the hospital environment. It also includes demographic questions.[15]

Our analysis focused on the following questions: "Would you recommend this hospital to your friends and family?" and "During this hospital stay, how often did doctors (1) treat you with courtesy and respect, (2) listen carefully to you, and (3) explain things in a way you could understand?" Respondents who did not answer all of these questions were excluded.
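To make the outcome definitions concrete, the Python sketch below shows one way a single HCAHPS response could be coded into the binary outcomes reported later: "always" on each doctor item, "always" on all 3 items (the composite), and "definitely yes" for recommending the hospital, with incomplete respondents dropped. The field names (`courtesy`, `listen`, `explain`, `recommend`) are hypothetical stand‐ins, not the actual HCAHPS variable names, and the study's own data‐processing code is not described in the text.

```python
from typing import Optional

# Hypothetical field names for the analyzed items; real HCAHPS files differ.
DOCTOR_ITEMS = ("courtesy", "listen", "explain")
RECOMMEND_ITEM = "recommend"
ALL_ITEMS = DOCTOR_ITEMS + (RECOMMEND_ITEM,)


def code_outcomes(response: dict) -> Optional[dict]:
    """Return 0/1 outcome indicators, or None if any analyzed item is unanswered."""
    # Listwise exclusion: respondents missing any analyzed question are dropped.
    if any(response.get(item) is None for item in ALL_ITEMS):
        return None
    # Doctor items use Never/Sometimes/Usually/Always; only "Always" counts.
    outcomes = {item: int(response[item] == "Always") for item in DOCTOR_ITEMS}
    # Composite outcome: "Always" on all 3 physician-related questions.
    outcomes["all_three"] = int(all(outcomes[item] for item in DOCTOR_ITEMS))
    # Recommendation item: only "Definitely yes" counts as a positive response.
    outcomes["recommend"] = int(response[RECOMMEND_ITEM] == "Definitely yes")
    return outcomes


responses = [
    {"courtesy": "Always", "listen": "Always", "explain": "Always", "recommend": "Definitely yes"},
    {"courtesy": "Always", "listen": "Usually", "explain": "Always", "recommend": "Probably yes"},
    {"courtesy": "Always", "listen": None, "explain": "Always", "recommend": "Definitely yes"},
]
# Keep only complete respondents (the third one is excluded).
coded = [c for c in (code_outcomes(r) for r in responses) if c is not None]
print(coded)
```

Coding each outcome as 0/1 per respondent lets the cohort percentages, and the regression models described next, treat every outcome as a binary variable.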

Our outcomes were the changes from January to June 2011 to January to June 2012, the period during which the intervention was ongoing. We did not include data from July 2012 onward in the primary outcome, because the intervention was suspended while the volunteers were away for summer break. In addition, July marks the time when third‐year IM residents graduate and new interns start; thus, after June 2012, one‐third of the residents in the IM department had never been exposed to the intervention.

Statistical Analysis

We used a difference‐in‐differences regression analysis (DDRA) for these outcomes and controlled for other covariates in the patient populations to predict adjusted probabilities for each of the outcomes studied. The key predictors in the models were indicator variables for year (2011, 2012) and service (IM, all others) and an interaction between year and service. We controlled for perceived patient health, admission through emergency room (ER), age, race, patient education level, intensive care unit (ICU) stay, length of stay, and gender.[16] We calculated adjusted probabilities for each level of the interaction between service and year, holding all controls at their means. The 95% confidence intervals for these predictions were generated using the delta method.
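The analysis described above can be written out explicitly. What follows is one plausible formalization, not the authors' stated specification. The text does not name the link function, so a logistic link is assumed here. The symbols are introduced only for illustration: $t_i$ indicates 2012, $s_i$ indicates the IM service, and $\mathbf{x}_i$ holds the listed covariates.

$$
\operatorname{logit} \Pr(Y_i = 1) = \beta_0 + \beta_1 t_i + \beta_2 s_i + \beta_3 s_i t_i + \boldsymbol{\gamma}^\top \mathbf{x}_i
$$

Holding the covariates at their means $\bar{\mathbf{x}}$, the adjusted probability for service $s$ and year $t$, and the difference in differences on the probability scale, are

$$
\hat p_{s,t} = \operatorname{logit}^{-1}\!\left(\mathbf{a}_{s,t}^\top \hat{\boldsymbol\theta}\right), \qquad \mathbf{a}_{s,t} = (1,\ t,\ s,\ st,\ \bar{\mathbf{x}}), \qquad \hat{\boldsymbol\theta} = (\hat\beta_0,\ \hat\beta_1,\ \hat\beta_2,\ \hat\beta_3,\ \hat{\boldsymbol\gamma}), \qquad s, t \in \{0, 1\}
$$

$$
\widehat{\text{DiD}} = (\hat p_{1,1} - \hat p_{1,0}) - (\hat p_{0,1} - \hat p_{0,0})
$$

By the delta method, with $\hat\Sigma$ the estimated covariance matrix of $\hat{\boldsymbol\theta}$ and $\nabla_{\boldsymbol\theta}\, \hat p_{s,t} = \hat p_{s,t}(1 - \hat p_{s,t})\, \mathbf{a}_{s,t}$,

$$
\widehat{\operatorname{Var}}(\hat p_{s,t}) \approx \hat p_{s,t}^2 (1 - \hat p_{s,t})^2\, \mathbf{a}_{s,t}^\top \hat\Sigma\, \mathbf{a}_{s,t}, \qquad \text{95\% CI} = \hat p_{s,t} \pm 1.96 \sqrt{\widehat{\operatorname{Var}}(\hat p_{s,t})}
$$

Under this assumed model, $\beta_3$ measures the difference in differences on the log‐odds scale. The reported changes in adjusted percentages correspond to the probability‐scale contrast.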

We compared the changes in HCAHPS results for RRUCLAMC Department of Medicine patients with those for patients in all other RRUCLAMC departments and with national averages. Because we had access only to national average point estimates, not to individual responses from the national sample, we could not perform statistical tests involving the national cohort. The prespecified significance level was P<0.05. Stata 13 (StataCorp, College Station, TX) was used for statistical analysis. The study received institutional review board exempt status.

RESULTS

Sample Size and Excluded Cases

There were initially 3637 HCAHPS patient cases. We dropped all HCAHPS cases that were missing values for outcome or demographic/explanatory variables. We dropped 226 cases due to 1 or more missing outcome variables, and we dropped 322 cases due to 1 or more missing demographic/explanatory variables. This resulted in 548 total dropped cases and a final sample size of 3089 (see Supporting Information, Appendix B, in the online version of this article). Of the 548 dropped cases, 228 cases were in the IM cohort and 320 cases from the rest of the hospital. There were 993 patients in the UCLA IM cohort and 2096 patients in the control cohort from all other UCLA adult departments. Patients excluded due to missing data were similar to the patients included in the final analysis except for 2 differences. Patients excluded were older (63 years vs 58 years, P<0.01) and more likely to have been admitted from the ER (57.4% vs 39.6%, P<0.01) than the patients we had included.
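The exclusion counts above should reconcile with one another and with the cohort sizes in Table 1. The Python sketch below restates the reported numbers and checks the sums. It assumes, as the totals imply, that each dropped case was counted under only one exclusion reason. The variable names are informal labels chosen here.

```python
# Counts as reported in the text.
initial_cases = 3637
dropped_missing_outcome = 226
dropped_missing_covariates = 322
dropped_im, dropped_control = 228, 320
final_im, final_control = 993, 2096
# Cohort sizes from Table 1 as (2011, 2012).
table1_im = (465, 528)
table1_control = (865, 1231)

dropped_total = dropped_missing_outcome + dropped_missing_covariates
final_sample = initial_cases - dropped_total

checks = {
    "dropped cases by reason sum to 548": dropped_total == 548,
    "final sample is 3089": final_sample == 3089,
    "dropped cases by cohort match total dropped": dropped_im + dropped_control == dropped_total,
    "cohorts sum to final sample": final_im + final_control == final_sample,
    "IM cohort matches Table 1": sum(table1_im) == final_im,
    "control cohort matches Table 1": sum(table1_control) == final_control,
}
for name, ok in checks.items():
    print(f"{name}: {'ok' if ok else 'MISMATCH'}")
```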

Patient Characteristics

Table 1 displays the demographics of all patients discharged from RRUCLAMC who completed HCAHPS surveys in January to June of 2011 and 2012. In both years, patients in the IM cohort were significantly older, were more likely to be male, had lower perceived health, and were more likely to be admitted through the emergency room than HCAHPS respondents in all other UCLA adult departments. In 2011, a lower percentage of patients in the IM cohort than in the non‐IM cohort required an ICU stay (8.0% vs 20.5%, P<0.01), but there was no statistically significant difference in 2012 (20.6% vs 20.8%, P=0.9). Apart from ICU stay, the demographic characteristics of the intervention and control cohorts did not change from 2011 to 2012. UCLA's HCAHPS response rate during the study period was 29%, consistent with national results.[17, 18]

Table 1. Characteristics of HCAHPS Respondents, January to June 2011 and 2012

| Characteristic | 2011, UCLA Internal Medicine | 2011, All Other UCLA Adult Departments | 2011, P | 2012, UCLA Internal Medicine | 2012, All Other UCLA Adult Departments | 2012, P |
|---|---|---|---|---|---|---|
| Total no. | 465 | 865 | | 528 | 1,231 | |
| Age, y | 62.8 | 55.3 | <0.01 | 65.1 | 54.9 | <0.01 |
| Length of stay, d | 5.7 | 5.7 | 0.94 | 5.8 | 4.9 | 0.19 |
| Gender, male, % | 56.6 | 44.1 | <0.01 | 55.3 | 41.4 | <0.01 |
| Education, 4 years of college or greater, % | 47.3 | 49.3 | 0.5 | 47.3 | 51.3 | 0.13 |
| Patient‐perceived overall health, very good or excellent, % | 30.5 | 55.0 | <0.01 | 27.5 | 58.2 | <0.01 |
| Admission through emergency room, % | 75.5 | 23.8 | <0.01 | 72.4 | 23.1 | <0.01 |
| Intensive care unit stay, % | 8.0 | 20.5 | <0.01 | 20.6 | 20.8 | 0.9 |
| Ethnicity, non‐Hispanic white, % | 63.2 | 61.4 | 0.6 | 62.5 | 60.9 | 0.5 |

Difference‐in‐Differences Regression Analysis

The adjusted DDRA results for the physician‐related HCAHPS questions are presented in Table 2. The adjusted percentage of patients responding "always" to all 3 physician‐related HCAHPS questions increased by 8.1 percentage points in the IM cohort (from 65.7% to 73.8%) and by 1.5 percentage points in the control cohort (from 64.4% to 65.9%) (P=0.04). The adjusted percentage responding "always" to "How often did doctors treat you with courtesy and respect?" increased by 5.1 percentage points in the IM cohort (from 83.8% to 88.9%) and by 1.0 percentage point in the control cohort (from 83.3% to 84.3%) (P=0.09). The adjusted percentage responding "always" to "How often did doctors listen carefully to you?" increased by 6.0 percentage points in the IM cohort (from 75.6% to 81.6%) and by 1.2 percentage points in the control cohort (from 75.2% to 76.4%) (P=0.1). The adjusted percentage responding "always" to "How often did doctors explain things in a way you could understand?" increased by 7.8 percentage points in the IM cohort (from 72.1% to 79.9%) and by 1.0 percentage point in the control cohort (from 72.2% to 73.2%) (P=0.03). The national average changed by no more than 3.1 percentage points on any of the 4 individual questions. There was also a significant improvement in the percentage of patients who would definitely recommend this hospital to their friends and family: the adjusted percentage increased by 7.1 percentage points in the IM cohort (from 82.7% to 89.8%) and by 1.5 percentage points in the control cohort (from 84.1% to 85.6%) (P=0.02).

| UCLA IM | All Other UCLA Adult Departments | National Average | |

|---|---|---|---|

| |||

| % Patients responding that their doctors always treated them with courtesy and respect | | | |
| January to June 2011, preintervention (95% CI) | 83.8 (80.5–87.1) | 83.3 (80.7–85.9) | 82.4 |
| January to June 2012, postintervention (95% CI) | 88.9 (86.3–91.4) | 84.3 (82.1–86.5) | 85.5 |
| Change from 2011 to 2012, January to June | 5.1 | 1.0 | 3.1 |
| Change in UCLA IM minus change in all other UCLA adult departments, difference in differences | 4.1 | | |
| P value of difference in differences between IM and the rest of the hospital | 0.09 | | |
| % Patients responding that their doctors always listened carefully | | | |
| January to June 2011, preintervention (95% CI) | 75.6 (71.7–79.5) | 75.2 (72.2–78.1) | 76.4 |
| January to June 2012, postintervention (95% CI) | 81.6 (78.4–84.8) | 76.4 (73.9–78.9) | 73.7 |
| Change from 2011 to 2012, January to June | 6.0 | 1.2 | 2.7 |
| Change in UCLA IM minus change in all other UCLA adult departments, difference in differences | 4.6 | | |
| P value of difference in differences between IM and the rest of the hospital | 0.1 | | |
| % Patients responding that their doctors always explained things in a way they could understand | | | |
| January to June 2011, preintervention (95% CI) | 72.1 (68–76.1) | 72.2 (69.2–75.4) | 70.1 |
| January to June 2012, postintervention (95% CI) | 79.9 (76.6–83.1) | 73.2 (70.6–75.8) | 72.2 |
| Change from 2011 to 2012, January to June | 7.8 | 1.0 | 2.1 |
| Change in UCLA IM minus change in all other UCLA adult departments, difference in differences | 6.8 | | |
| P value of difference in differences between IM and the rest of the hospital | 0.03 | | |
| % Patients responding "always" for all 3 physician‐related HCAHPS questions | | | |
| January to June 2011, preintervention (95% CI) | 65.7 (61.3–70.1) | 64.4 (61.2–67.7) | 80.1 |
| January to June 2012, postintervention (95% CI) | 73.8 (70.1–77.5) | 65.9 (63.1–68.6) | 87.8 |
| Change from 2011 to 2012, January to June | 8.1 | 1.5 | 7.7 |
| Change in UCLA IM minus change in all other UCLA adult departments, difference in differences | 6.6 | | |
| P value of difference in differences between IM and the rest of the hospital | 0.04 | | |
| % Patients who would definitely recommend this hospital to their friends and family | | | |
| January to June 2011, preintervention (95% CI) | 82.7 (79.3–86.1) | 84.1 (81.5–86.6) | 68.8 |
| January to June 2012, postintervention (95% CI) | 89.8 (87.3–92.3) | 85.6 (83.5–87.7) | 71.2 |
| Change from 2011 to 2012, January to June | 7.1 | 1.5 | 2.4 |
| Change in UCLA IM minus change in all other UCLA adult departments, difference in differences | 5.6 | | |
| P value of difference in differences between IM and the rest of the hospital | 0.02 | | |
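As an arithmetic check on the table above, the difference in differences is the change in the UCLA IM cohort minus the change in the control cohort. The minimal Python sketch below recomputes it from the rounded adjusted probabilities shown in the table. The outcome labels and dictionary layout are illustrative only; the sketch does not reproduce the regression or its P values, and because it works from rounded values its results can differ slightly from the reported ones (for "listened carefully" it gives 4.8 rather than the reported 4.6).

```python
# Adjusted probabilities (%) copied from the table above, as
# (January-June 2011, January-June 2012) pairs for each cohort.
# The outcome labels and dictionary layout are illustrative, not from the study.
ADJUSTED = {
    "courtesy and respect": {"im": (83.8, 88.9), "control": (83.3, 84.3)},
    "listened carefully": {"im": (75.6, 81.6), "control": (75.2, 76.4)},
    "explained understandably": {"im": (72.1, 79.9), "control": (72.2, 73.2)},
    "always on all 3 questions": {"im": (65.7, 73.8), "control": (64.4, 65.9)},
    "definitely recommend": {"im": (82.7, 89.8), "control": (84.1, 85.6)},
}


def change(pre_post: tuple[float, float]) -> float:
    """Postintervention minus preintervention, in percentage points."""
    pre, post = pre_post
    return post - pre


def difference_in_differences(row: dict) -> float:
    """Change in the IM cohort minus change in the control cohort."""
    return change(row["im"]) - change(row["control"])


if __name__ == "__main__":
    for outcome, row in ADJUSTED.items():
        print(f"{outcome}: {difference_in_differences(row):+.1f} percentage points")
```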

DISCUSSION

Our intervention, which included real‐time feedback to physicians on the results of the patient survey, monthly recognition of physicians who stood out on this survey, and an educational conference, was associated with a clear improvement in patient satisfaction with physician‐patient communication and in overall recommendation of the hospital. These results are important because they suggest a low‐cost intervention that academic hospitals across the country could adopt using nonmedically trained volunteers, such as the undergraduate volunteers in our program. The only costs associated with the intervention were staff time to manage the volunteers and the movie package awards ($20). To our knowledge, this is the first study published in a peer‐reviewed research journal to demonstrate an intervention associated with significant improvements in HCAHPS scores, the standard that will affect CMS reimbursement.

The improvements associated with this intervention could be valuable both to hospitals and to patient care. Because higher patient satisfaction is correlated with improved outcomes, this intervention may have additional benefits.[4] Finally, improved HCAHPS patient‐satisfaction scores could minimize losses in hospital revenue, because hospitals with low patient‐satisfaction scores will be penalized.

There were statistically significant improvements in the adjusted scores for the question "Did your physicians explain things understandably?", in the percentage of patients responding always to all 3 physician‐related HCAHPS questions, and for the question "Would you recommend this hospital to friends and family?" The results for the 2 other physician‐related questions ("Did your doctor treat you with courtesy and respect?" and "Did your doctor listen carefully?") showed a trend toward significance (P=0.09 and P=0.1, respectively), and a larger study might have had the power to detect a statistically significant difference. The improvement in the adjusted scores for "Did your physicians explain things understandably?" was the primary driver of the improvement in the adjusted percentage of patients who responded always to all 3 physician‐related HCAHPS questions. This was likely because this was the IM cohort's lowest‐scoring physician question before the intervention, so the feedback to the residents may have helped address this area of weakness. Because UCLA IM HCAHPS scores had consistently been lower than those of other programs at UCLA before 2012, rather than being only transiently low, we do not believe the change was due to regression to the mean.

We believe the intervention had a positive effect on patient satisfaction for several reasons. The regular e‐mails with survey results may have reminded residents that patient satisfaction was being monitored and linked to them. The immediate, individualized feedback may also have allowed residents to adjust their clinical practice in real time, and residents could compare their own scores and comments with the anonymous results of their peers. The monthly department‐wide recognition of residents who excelled in patient communication may have created an incentive and fostered competition among the residents. Part of the improvement in HCAHPS scores may also reflect the Hawthorne effect. However, all of the residents in the departments studied were already being measured through the ARC survey; the primary change was more frequent reporting of ARC survey results, so we believe the perception of being measured was less likely to have driven the results. These findings are similar to those from provider‐specific report cards, which have shown that outcomes can be improved by creating greater accountability and competition among physicians.[19]

Brown et al. found that two 4‐hour physician communication workshops had no impact on patient satisfaction,[20] so we believe that our single 1‐hour workshop, attended by only about 50% of residents, had minimal impact on the improvement in patient satisfaction scores in our study. Our intervention also coincided with the Accreditation Council for Graduate Medical Education (ACGME) work‐hour restrictions implemented in July 2011, between the preintervention and postintervention periods. These restrictions limited residents to 80 hours per week, limited duty periods to 16 hours for interns and 28 hours for residents, and required interns and residents to have 8 to 10 hours free of duty between scheduled duty periods.[21] One of the biggest effects of the restrictions was that interns worked more day and night shifts instead of 28‐hour calls. However, these restrictions applied equally to all specialties and therefore are unlikely to explain the improvement in patient satisfaction associated with our intervention.

Our study has limitations. It was a nonrandomized pre‐post study. We attempted to control for differences between the cohorts with multivariable regression analysis, but there may be unmeasured differences that we could not control for. Because the data were deidentified, we could adjust for patient health only through patient‐perceived health. In addition, the percentage of IM patients requiring ICU care was higher in 2012 than in 2011 (20.6% vs 8.0%), although analyses stratified by ICU and non‐ICU patients did not identify differences in outcomes. Patients excluded because of missing data were also older and more likely to have been admitted through the ER than included patients. Further investigation is needed to determine whether these findings extend to other clinical settings.

In conclusion, we found that an intervention program was associated with a significant improvement in patient satisfaction in the intervention cohort, even after adjusting for differences in the patient population, whereas the control group showed little change. This intervention can serve as a model for academic hospitals seeking to improve patient satisfaction, avoid revenue loss in the era of Hospital Value‐Based Purchasing, and train the next generation of physicians to provide patient‐centered care.

Disclosure

This work was supported by the Beryl Institute and UCLA QI Initiative.

- Relationship between patient satisfaction with inpatient care and hospital readmission within 30 days. Am J Manag Care. 2011;17:41–48.

- Patients' perception of hospital care in the United States. N Engl J Med. 2008;359:1921–1931.

- Patient satisfaction and its relationship with clinical quality and inpatient mortality in acute myocardial infarction. Circ Cardiovasc Qual Outcomes. 2010;3:188–195.

- A systematic review of evidence on the links between patient experience and clinical safety and effectiveness. BMJ Open. 2013;3(1).

- Centers for Medicare 70:729–732.

- Emergency department patient satisfaction: customer service training improves patient satisfaction and ratings of physician and nurse skill. J Healthc Manag. 1998;43:427–440; discussion 441–442.

- Emergency department information: does it effect patients' perception and satisfaction about the care given in an emergency department? Eur J Emerg Med. 1999;6:245–248.

- Can communication skills workshops for emergency department doctors improve patient satisfaction? J Accid Emerg Med. 2000;17:251–253.

- Effects of a physician communication intervention on patient care outcomes. J Gen Intern Med. 1996;11:147–155.

- Health‐related quality‐of‐life assessments and patient‐physician communication: a randomized controlled trial. JAMA. 2002;288:3027–3034.

- Modification of residents' behavior by preceptor feedback of patient satisfaction. J Gen Intern Med. 1986;1:394–398.

- Developing physician communication skills for patient‐centered care. Health Aff (Millwood). 2010;29:1310–1318.

- ARC Medical Program @ UCLA. Available at: http://Arcmedicalprogram.Wordpress.com. Accessed July 1, 2013.

- Hospital Consumer Assessment of Healthcare Providers 12:151–162.

- Summary of HCAHPS survey results January 2010 to December 2010 discharges. Available at: http://Www.Hcahpsonline.Org/Files/Hcahps survey results table %28report_Hei_October_2011_States%29.Pdf. Accessed October 18, 2013.

- A randomized experiment investigating the suitability of speech‐enabled IVR and web modes for publicly reported surveys of patients' experience of hospital care. Med Care Res Rev. 2013;70:165–184.

- Provider‐specific report cards: a tool for health sector accountability in developing countries. Health Policy Plan. 2006;21:101–109.

- Effect of clinician communication skills training on patient satisfaction: a randomized, controlled trial. Ann Intern Med. 1999;131:822–829.

- Frequently asked questions: ACGME common duty hour requirements. Available at: http://www.Acgme.Org/Acgmeweb/Portals/0/Pdfs/Dh‐Faqs2011.Pdf. Accessed January 3, 2015.

INTRODUCTION

Patient experience and satisfaction are intrinsically valued, as strong physician‐patient communication, empathy, and patient comfort require little justification. Beyond this intrinsic value, studies have shown that patient satisfaction is associated with better health outcomes and greater compliance.[1, 2, 3] A systematic review of studies linking patient satisfaction to outcomes found that patient experience is positively associated with patient safety, clinical effectiveness, health outcomes, adherence, and lower resource utilization; of the 378 associations studied between patient experience and health outcomes, 312 were positive.[4] However, not all studies have shown a positive association between patient satisfaction and outcomes.

Nevertheless, hospitals now must strive to improve patient satisfaction, because the Centers for Medicare & Medicaid Services (CMS) has introduced Hospital Value‐Based Purchasing. Under this program, CMS withholds a percentage of Medicare Severity Diagnosis‐Related Group payments, starting at 1.0% in 2013, rising to 1.25% in 2014, and increasing to 2.0% in 2017. The withheld money is redistributed based on performance on core quality measures, including patient satisfaction as measured by the Hospital Consumer Assessment of Healthcare Providers and Systems (HCAHPS) survey.[5]
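The withholding schedule is simple percentage arithmetic. The Python sketch below, assuming a hypothetical hospital with $100 million in base Medicare Severity Diagnosis‐Related Group payments, computes only the amount withheld in the years listed in the text; it does not model how CMS redistributes the pool, which depends on performance‐scoring rules not described here.

```python
# Withhold rates for the years stated in the text; the intermediate years
# (2015, 2016) are not given in the text and are deliberately omitted.
WITHHOLD_RATE = {2013: 0.010, 2014: 0.0125, 2017: 0.020}


def withheld_amount(base_drg_payments: float, year: int) -> float:
    """Dollars withheld from a hospital's base MS-DRG payments in `year`.

    Raises KeyError for years whose rate is not listed above.
    """
    return base_drg_payments * WITHHOLD_RATE[year]


if __name__ == "__main__":
    base = 100_000_000.0  # hypothetical annual base payments, in dollars
    for year in sorted(WITHHOLD_RATE):
        print(year, f"${withheld_amount(base, year):,.0f}")
```

For this hypothetical hospital the withheld pool doubles from $1,000,000 in 2013 to $2,000,000 in 2017.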

Various studies have evaluated interventions to improve patient satisfaction, but to our knowledge, no study published in a peer‐reviewed research journal has shown a significant improvement in HCAHPS scores.[6, 7, 8, 9, 10, 11, 12] Levinson et al. argue that physician communication skills should be taught during residency and that individualized feedback is an effective way for physicians to track their progress over time and compare themselves with their peers.[13] We therefore evaluated an intervention to improve patient satisfaction that was designed by the Patient Affairs Department of Ronald Reagan University of California, Los Angeles (UCLA) Medical Center (RRUCLAMC) and the UCLA Department of Medicine.

METHODOLOGY

Design Overview

The intervention for the internal medicine (IM) residents consisted of education on improving physician‐patient communication at a conference, frequent individualized patient feedback, and an incentive program, all in addition to existing patient satisfaction training. We measured its effect by comparing the change in HCAHPS scores in the Department of Medicine with the change in the rest of the hospital and in national averages.

Setting and Participants

The study setting was RRUCLAMC, a large university‐affiliated academic medical center. The internal medicine (IM) residents and patients in the Department of Medicine formed the intervention cohort. Residents in all other departments involved in direct adult patient care, and their patients, formed the control cohort. Our intervention targeted resident physicians because they provide the majority of direct patient care at RRUCLAMC. Residents are in house 24 hours a day, are the first line of contact for nurses and patients, and provide the most continuity: attendings often rotate every 1 to 2 weeks, whereas residents remain on service for at least 2 to 4 weeks per rotation. IM residents staff all inpatient general medicine, critical care, and cardiology services at RRUCLAMC, and RRUCLAMC does not have a nonteaching service for adult IM patients.

Interventions

Since 2006, RRUCLAMC has run a program called Assessing Residents' CICARE (ARC). CICARE is an acronym for UCLA's patient communication model and training elements (Connect with patients, Introduce yourself and role, Communicate, Ask and anticipate, Respond, Exit courteously). In the ARC program, trained undergraduate student volunteers give hospitalized patients an optional, anonymous survey about a specific resident physician's communication skills (see Supporting Information, Appendix A, in the online version of this article). Patients were randomly selected for the ARC and HCAHPS surveys, but selection was done separately for each survey, so some patients may have been selected for both. On average, each resident received feedback from 7 to 10 patients a year.

The volunteers show patients a picture of each resident physician assigned to their care to confirm the resident's identity. The volunteer then asks 18 multiple‐choice questions about that resident's physician‐patient communication skills, and patients are asked to provide general comments about the resident.[14] Patients were interviewed in private hospital rooms by ARC volunteers, and no information linking a patient to the survey is recorded. Survey data are entered into a database in which each resident is assigned a code that links the resident to the corresponding patient feedback. The survey results and comments are sent weekly to the residency program directors; however, a review of the practice revealed that residents reviewed the results with their program director only semiannually.

Starting in December 2011, the results of the ARC survey were e‐mailed directly to interns and residents in the Department of Medicine in real time while they were on the general medicine wards and the cardiology inpatient service at RRUCLAMC. Residents in other RRUCLAMC departments continued to review patient feedback with their program directors at most semiannually. The real‐time feedback continued until June 2012 and was stopped in July 2012 because many of the ARC volunteers were away on summer break.

Starting in January 2012, IM residents who stood out in the ARC survey received a Commendation of Excellence. Each month, 3 residents were selected for this award based on their patient comments and an overall satisfaction score above 90% on the survey questions. These residents received department‐wide recognition via e‐mail and a movie package (2 movie tickets, popcorn, and a drink) as a reward.
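The numeric part of the Commendation of Excellence criterion can be sketched as a filter. In the Python sketch below, the record fields, the 0 to 1 satisfaction scale, and the ranking by score are all hypothetical. The study also weighed patient comments, a judgment this sketch does not attempt to model, so what it returns is at most a candidate shortlist.

```python
from dataclasses import dataclass


@dataclass
class ResidentMonth:
    resident_code: str           # hypothetical ARC code linking a resident to feedback
    overall_satisfaction: float  # hypothetical: share of favorable ARC answers, 0 to 1


def eligible(records: list[ResidentMonth], threshold: float = 0.90) -> list[ResidentMonth]:
    """Residents whose overall satisfaction is strictly above the threshold ("over 90%")."""
    return [r for r in records if r.overall_satisfaction > threshold]


def shortlist(records: list[ResidentMonth], awards: int = 3) -> list[ResidentMonth]:
    """Highest-scoring eligible residents (hypothetical ranking; comments are not modeled)."""
    ranked = sorted(eligible(records), key=lambda r: r.overall_satisfaction, reverse=True)
    return ranked[:awards]
```

Ranking by score is only a stand‐in so the sketch returns a fixed number of names; in practice the comments would decide among eligible residents.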

In January 2012, a 1‐hour lunchtime conference was held for IM residents to discuss best practices in physician‐patient communication, upcoming changes with Hospital Value‐Based Purchasing, and the Department of Medicine's strengths and weaknesses in patient communication. About 50% of the IM residents in the study arm were unable to attend, so the training did not reach all residents.

Outcomes

We analyzed HCAHPS results before and after the intervention. HCAHPS is a standardized national survey that measures the perspectives of patients after they are discharged from hospitals across the nation. The survey addresses communication with doctors and nurses, responsiveness of hospital staff, pain management, communication about medicines, discharge information, cleanliness of the hospital environment, and quietness of the hospital environment. It also includes demographic questions.[15]

Our analysis focused on the following questions: "Would you recommend this hospital to your friends and family?" and "During this hospital stay, how often did doctors (1) treat you with courtesy and respect, (2) listen carefully to you, and (3) explain things in a way you could understand?" Respondents who did not answer all of these questions were excluded.
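The complete‐case rule and the composite outcome (always on all 3 physician questions) can be expressed as a few small functions. This Python sketch assumes a hypothetical record format, one dict per respondent with the field names shown, and computes unadjusted percentages only; the figures reported in this study are regression‐adjusted probabilities.

```python
# Hypothetical field names for the 4 HCAHPS items analyzed in the study.
DOCTOR_ITEMS = ("courtesy_respect", "listen_carefully", "explain_understandably")
ALL_ITEMS = DOCTOR_ITEMS + ("recommend",)


def is_complete(response: dict) -> bool:
    """True if the respondent answered every analyzed question."""
    return all(response.get(item) is not None for item in ALL_ITEMS)


def always_on_all_doctor_items(response: dict) -> bool:
    """Composite outcome: 'always' on each of the 3 physician-related items."""
    return all(response[item] == "always" for item in DOCTOR_ITEMS)


def unadjusted_summary(responses: list[dict]) -> dict:
    """Complete-case counts and unadjusted percentages for two outcomes."""
    complete = [r for r in responses if is_complete(r)]
    n = len(complete)
    if n == 0:
        raise ValueError("no complete responses")
    return {
        "n": n,
        "excluded": len(responses) - n,
        "pct_always_all_3": 100 * sum(always_on_all_doctor_items(r) for r in complete) / n,
        "pct_definitely_recommend": 100 * sum(r["recommend"] == "definitely yes" for r in complete) / n,
    }
```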

Our outcomes focused on the change from January to June 2011 (preintervention) to January to June 2012, when the intervention was ongoing. We did not include data from July 2012 onward in the primary outcome because the intervention was suspended while the volunteers were away on summer break. In addition, July marks the time when third‐year IM residents graduate and new interns start; thus, from July 2012 onward, one‐third of the residents in the IM department had never been exposed to the intervention.
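The two analysis windows can be encoded as a small date filter. This Python sketch assumes each case is assigned by its discharge date (an assumption; the text does not say which date defines the window) and returns None for cases outside both windows, including everything from July 2012 onward.

```python
from datetime import date
from typing import Optional


def study_period(discharge: date) -> Optional[str]:
    """Return 'pre' (Jan-Jun 2011), 'post' (Jan-Jun 2012), or None if excluded."""
    if discharge.month > 6:
        return None  # July-December falls outside both windows
    if discharge.year == 2011:
        return "pre"
    if discharge.year == 2012:
        return "post"
    return None
```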

Statistical Analysis

We used a difference‐in‐differences regression analysis (DDRA) for these outcomes, controlling for other covariates in the patient populations, to predict adjusted probabilities for each outcome. The key predictors in the models were indicator variables for year (2011, 2012) and service (IM, all others) and an interaction between year and service. We controlled for perceived patient health, admission through the emergency room (ER), age, race, patient education level, intensive care unit (ICU) stay, length of stay, and gender.[16] We calculated adjusted probabilities for each combination of service and year, holding all controls at their means. The 95% confidence intervals for these predictions were generated using the delta method.
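The model described above can be sketched as follows. The text does not name the regression family; this Python sketch assumes a logistic model, a common choice for binary outcomes. It builds the design row (intercept, year, service, year by service interaction, then controls held at their means), converts the linear predictor to a predicted probability, applies the delta method (for the logistic case the gradient with respect to the coefficients is p(1 - p)x) to get a 95% confidence interval, and forms the difference in differences on the probability scale. The coefficient vector and covariance matrix would come from a fitted model; any values passed in here are hypothetical, and the sketch does not compute the P values reported in the study.

```python
import math


def expit(z: float) -> float:
    """Inverse logit."""
    return 1.0 / (1.0 + math.exp(-z))


def design_row(year_2012: int, im: int, covariate_means: list[float]) -> list[float]:
    """Intercept, year, service, year x service interaction, then controls at their means."""
    return [1.0, float(year_2012), float(im), float(year_2012 * im), *covariate_means]


def predicted_probability(beta: list[float], x: list[float]) -> float:
    return expit(sum(b * xi for b, xi in zip(beta, x)))


def delta_method_ci(beta, cov, x, z: float = 1.96) -> tuple[float, float, float]:
    """Predicted probability with a delta-method confidence interval.

    For p = expit(x'beta), the gradient with respect to beta is p(1 - p) x,
    so Var(p) is approximately g' Cov(beta) g with g = p(1 - p) x.
    """
    p = predicted_probability(beta, x)
    g = [p * (1.0 - p) * xi for xi in x]
    var = sum(g[i] * cov[i][j] * g[j] for i in range(len(g)) for j in range(len(g)))
    se = math.sqrt(var)
    return p, p - z * se, p + z * se


def difference_in_differences(beta: list[float], covariate_means: list[float]) -> float:
    """(IM 2012 - IM 2011) - (control 2012 - control 2011) on the probability scale."""
    p = {
        (year, im): predicted_probability(beta, design_row(year, im, covariate_means))
        for year in (0, 1)
        for im in (0, 1)
    }
    return (p[1, 1] - p[0, 1]) - (p[1, 0] - p[0, 0])
```

Holding controls at their means yields one predicted probability per service-year cell, which is what the adjusted percentages in Table 2 represent.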

We compared the changes in HCAHPS results for RRUCLAMC Department of Medicine patients with those for patients in all other RRUCLAMC departments and with national averages. Because we had access only to national average point estimates, not individual responses from the national sample, we could not perform statistical analyses involving the national cohort. The prespecified significance threshold was P<0.05. Stata 13 (StataCorp, College Station, TX) was used for statistical analysis. The study received exempt status from the institutional review board.

RESULTS

Sample Size and Excluded Cases

There were initially 3637 HCAHPS patient cases. We dropped all cases with missing values for outcome or demographic/explanatory variables: 226 cases for 1 or more missing outcome variables and 322 cases for 1 or more missing demographic/explanatory variables. This resulted in 548 dropped cases and a final sample size of 3089 (see Supporting Information, Appendix B, in the online version of this article). Of the 548 dropped cases, 228 were in the IM cohort and 320 were from the rest of the hospital. The final sample included 993 patients in the UCLA IM cohort and 2096 patients in the control cohort from all other UCLA adult departments. Patients excluded because of missing data were similar to the included patients except in 2 respects: they were older (63 years vs 58 years, P<0.01) and more likely to have been admitted from the ER (57.4% vs 39.6%, P<0.01).
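The case counts above are internally consistent, which a few lines of arithmetic can confirm. The Python sketch below assumes, as the stated total implies, that each dropped case was counted in only one exclusion category; the 2011 and 2012 cohort sizes come from Table 1.

```python
def exclusion_flow() -> dict:
    """Recompute the sample-size bookkeeping reported in the text."""
    initial = 3637
    missing_outcome, missing_covariate = 226, 322  # assumed non-overlapping
    dropped_im, dropped_other = 228, 320
    final_im, final_other = 993, 2096
    table1_im = 465 + 528       # IM patients, 2011 + 2012 (Table 1)
    table1_other = 865 + 1231   # other departments, 2011 + 2012 (Table 1)

    dropped = missing_outcome + missing_covariate
    return {
        "dropped": dropped,
        "final": initial - dropped,
        "dropped_by_cohort": dropped_im + dropped_other,
        "final_by_cohort": final_im + final_other,
        "table1_matches": (table1_im, table1_other) == (final_im, final_other),
        # cases per cohort before exclusions
        "initial_by_cohort": (final_im + dropped_im, final_other + dropped_other),
    }
```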

Patient Characteristics

Table 1 shows the demographics of all patients discharged from RRUCLAMC who completed HCAHPS surveys in January to June of 2011 and 2012. In both years, patients in the IM cohort were significantly older, more likely to be male, and more likely to be admitted through the emergency room, and they reported lower perceived health than HCAHPS patients in all other UCLA adult departments. In 2011, a lower percentage of patients in the IM cohort than in the non‐IM cohort required an ICU stay (8.0% vs 20.5%, P<0.01), but there was no statistically significant difference in 2012 (20.6% vs 20.8%, P=0.9). Apart from ICU stay, demographic characteristics did not change from 2011 to 2012 in either the intervention or the control cohort. The UCLA HCAHPS response rate during the study period was 29%, consistent with national results.[17, 18]

| Characteristic | 2011, UCLA Internal Medicine | 2011, All Other UCLA Adult Departments | 2011, P | 2012, UCLA Internal Medicine | 2012, All Other UCLA Adult Departments | 2012, P |
|---|---|---|---|---|---|---|
| Total no. | 465 | 865 | | 528 | 1,231 | |
| Age, y | 62.8 | 55.3 | <0.01 | 65.1 | 54.9 | <0.01 |
| Length of stay, d | 5.7 | 5.7 | 0.94 | 5.8 | 4.9 | 0.19 |
| Gender, male, % | 56.6 | 44.1 | <0.01 | 55.3 | 41.4 | <0.01 |
| Education (4 years of college or greater), % | 47.3 | 49.3 | 0.5 | 47.3 | 51.3 | 0.13 |
| Patient‐perceived overall health (responding very good or excellent), % | 30.5 | 55.0 | <0.01 | 27.5 | 58.2 | <0.01 |
| Admission through emergency room, % | 75.5 | 23.8 | <0.01 | 72.4 | 23.1 | <0.01 |
| Intensive care unit stay, % | 8.0 | 20.5 | <0.01 | 20.6 | 20.8 | 0.9 |
| Ethnicity (non‐Hispanic white), % | 63.2 | 61.4 | 0.6 | 62.5 | 60.9 | 0.5 |

Difference‐in‐Differences Regression Analysis

The adjusted results of the DDRA for the physician‐related HCAHPS questions are presented in Table 2. The adjusted percentage of patients responding always to all 3 physician‐related HCAHPS questions increased by 8.1 percentage points in the IM cohort (from 65.7% to 73.8%) and by 1.5 percentage points in the control cohort (from 64.4% to 65.9%) (P=0.04). The adjusted percentage of patients responding always to "How often did doctors treat you with courtesy and respect?" increased by 5.1 percentage points in the IM cohort (from 83.8% to 88.9%) and by 1.0 percentage point in the control cohort (from 83.3% to 84.3%) (P=0.09). For "Does your doctor listen carefully to you?", the adjusted percentage responding always increased by 6.0 percentage points in the IM cohort (from 75.6% to 81.6%) and by 1.2 percentage points in the control cohort (from 75.2% to 76.4%) (P=0.1). For "Does your doctor explain things in a way you could understand?", it increased by 7.8 percentage points in the IM cohort (from 72.1% to 79.9%) and by 1.0 percentage point in the control cohort (from 72.2% to 73.2%) (P=0.03). The national average increased by no more than 3.1 percentage points on any of the 4 individual questions. There was also a significant improvement in the percentage of patients who would definitely recommend this hospital to their friends and family: the adjusted percentage increased by 7.1 percentage points in the IM cohort (from 82.7% to 89.8%) and by 1.5 percentage points in the control group (from 84.1% to 85.6%) (P=0.02).



INTRODUCTION

Patient experience and satisfaction are intrinsically valued, as strong physician‐patient communication, empathy, and patient comfort require little justification. However, studies have also shown that patient satisfaction is associated with better health outcomes and greater compliance.[1, 2, 3] A systematic review of studies linking patient satisfaction to outcomes found that patient experience is positively associated with patient safety, clinical effectiveness, health outcomes, adherence, and lower resource utilization.[4] Of the 378 associations studied between patient experience and health outcomes, 312 were positive.[4] However, not all studies have shown a positive association between patient satisfaction and outcomes.

Nevertheless, hospitals must now strive to improve patient satisfaction, because the Centers for Medicare & Medicaid Services (CMS) has introduced Hospital Value‐Based Purchasing. CMS began withholding a portion of Medicare Severity Diagnosis‐Related Group payments: 1.0% in 2013 and 1.25% in 2014, increasing to 2.0% in 2017. The withheld money is redistributed based on performance on core quality measures, including patient satisfaction as measured by the Hospital Consumer Assessment of Healthcare Providers and Systems (HCAHPS) survey.[5]

Various studies have evaluated interventions to improve patient satisfaction, but to our knowledge, no study published in a peer‐reviewed research journal has shown a significant improvement in HCAHPS scores.[6, 7, 8, 9, 10, 11, 12] Levinson et al. argue that physician communication skills should be taught during residency and that individualized feedback is an effective way for physicians to track their progress over time and compare themselves with their peers.[13] We therefore aimed to evaluate an intervention to improve patient satisfaction designed by the Patient Affairs Department of Ronald Reagan University of California, Los Angeles (UCLA) Medical Center (RRUCLAMC) and the UCLA Department of Medicine.

METHODOLOGY

Design Overview

The intervention for the IM residents consisted of education on improving physician‐patient communication provided at a conference, frequent individualized patient feedback, and an incentive program in addition to existing patient satisfaction training. The results of the intervention were measured by comparing the postintervention HCAHPS scores in the Department of Medicine versus the rest of the hospital and the national averages.

Setting and Participants

The study setting was RRUCLAMC, a large university‐affiliated academic center. The internal medicine (IM) residents and patients in the Department of Medicine formed the intervention cohort. Residents in all other departments involved in direct adult patient care, and their patients, formed the control cohort. Our intervention targeted resident physicians because they provide the majority of direct patient care at RRUCLAMC. Residents are in house 24 hours a day, are the first line of contact for nurses and patients, and provide the most continuity: attendings often rotate every 1 to 2 weeks, whereas residents are on service for at least 2 to 4 weeks per rotation. IM residents staff all inpatient general medicine, critical care, and cardiology services at RRUCLAMC. RRUCLAMC does not have a nonteaching service for adult IM patients.

Interventions

Since 2006, RRUCLAMC has had a program called Assessing Residents' CICARE (ARC). CICARE is an acronym for UCLA's patient communication model and training elements (Connect with patients, Introduce yourself and role, Communicate, Ask and anticipate, Respond, Exit courteously). In the ARC program, trained undergraduate student volunteers survey hospitalized patients with an optional, anonymous survey about a specific resident physician's communication skills (see Supporting Information, Appendix A, in the online version of this article). Patients were randomly selected for the ARC and HCAHPS surveys, with separate selection for each survey, so some patients may have been selected for both. Residents received feedback from 7 to 10 patients a year on average.

The volunteers show each patient a picture of the individual resident physicians assigned to their care to confirm the resident's identity. The volunteer then asks 18 multiple‐choice questions about the resident's physician‐patient communication skills. Patients are also asked to provide general comments about the resident physician.[14] Patients were interviewed in private hospital rooms by ARC volunteers. No information linking the patient to the survey is recorded. Survey data are entered into a database, and each resident is assigned a code that links them to their patient feedback. These survey results and comments are sent weekly to the residency program directors. However, a review of the practice revealed that residents reviewed the results with their program director only semiannually.

Starting December 2011, ARC survey results were e‐mailed directly to interns and residents in the Department of Medicine in real time while they were on the general medicine wards and the cardiology inpatient service at RRUCLAMC. Residents in other departments at RRUCLAMC continued to review patient feedback with their program directors at most semiannually. Real‐time feedback continued until June 2012 and was suspended in July 2012 because many of the ARC volunteers were away on summer break.

Starting January 2012, IM residents who stood out in the ARC survey received a Commendation of Excellence. Each month, 3 residents were selected for this award based on their patient comments and an overall satisfaction rate above 90% on the survey questions. These residents received department‐wide recognition via e‐mail and a movie package (2 movie tickets, popcorn, and a drink) as a reward.
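The ARC data flow described above (anonymous responses keyed only by a resident code, aggregated per resident, and screened against a 90% overall satisfaction cutoff for the monthly commendation) can be sketched as follows. This is a minimal illustration under stated assumptions, not the program's actual software: the `ArcResponse` record, the function names, and the definition of "overall satisfaction" as the share of favorable answers across all items are hypothetical. The final choice of 3 residents also weighed patient comments, which this sketch does not model.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ArcResponse:
    # Hypothetical record: keyed only by the resident's code and deliberately
    # carrying no patient identifier, mirroring the anonymous ARC design.
    resident_code: str
    favorable: tuple[bool, ...]  # one flag per multiple-choice item (18 in ARC)
    comment: str = ""


def satisfaction_by_resident(responses):
    """Return {resident_code: share of favorable answers across all items}.

    Assumption: "overall satisfaction" is pooled over every item of every
    response for that resident; the source does not define it precisely.
    """
    favorable = defaultdict(int)
    total = defaultdict(int)
    for r in responses:
        favorable[r.resident_code] += sum(r.favorable)
        total[r.resident_code] += len(r.favorable)
    return {code: favorable[code] / total[code] for code in total if total[code]}


def eligible_for_commendation(responses, threshold=0.90):
    """Resident codes whose overall satisfaction exceeds the threshold.

    Only the numeric cutoff is applied; the comment-based final pick of
    3 residents per month is left to human reviewers.
    """
    scores = satisfaction_by_resident(responses)
    return sorted(code for code, score in scores.items() if score > threshold)
```

Keeping the patient out of the record entirely, rather than stripping identifiers afterward, is one simple way to reflect the survey's anonymity in the data structure itself.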

In January 2012, a 1‐hour lunchtime conference was held for IM residents to discuss best practices in physician‐patient communication, upcoming changes with Hospital Value‐Based Purchasing, and the Department of Medicine's strengths and weaknesses in patient communication. About 50% of the IM residents in the study arm were unable to attend the session, so the training did not reach all residents.

Outcomes

We analyzed the impact of the intervention on HCAHPS results before and after implementation. HCAHPS is a standardized national survey that measures patients' perspectives on their hospital care after discharge. The survey addresses communication with doctors and nurses, responsiveness of hospital staff, pain management, communication about medicines, discharge information, and cleanliness and quietness of the hospital environment. It also includes demographic questions.[15]

Our analysis focused on the following specific questions: Would you recommend this hospital to your friends and family? During this hospital stay, how often did doctors (1) treat you with courtesy and respect, (2) listen carefully to you, and (3) explain things in a way you could understand? Respondents who did not answer all of these questions were excluded.
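To illustrate how these questions can be turned into binary outcomes, the sketch below codes one respondent's answers, assuming top‐box scoring ("Always" for the doctor items and "Definitely yes" for the recommendation item, the response levels used in the Results) and dropping any respondent with a missing item. The dictionary keys are hypothetical field names, not official HCAHPS variable names.

```python
# Hypothetical field names; top-box levels are an assumption based on the
# "always" / "definitely recommend" outcomes reported in the Results.
DOCTOR_ITEMS = ("courtesy_respect", "listen_carefully", "explain_understandably")
RECOMMEND_ITEM = "recommend_hospital"


def code_outcomes(survey):
    """Return binary outcomes for one respondent, or None if any item is missing."""
    items = DOCTOR_ITEMS + (RECOMMEND_ITEM,)
    if any(survey.get(k) is None for k in items):
        return None  # respondent excluded, as described above
    outcomes = {k: survey[k] == "Always" for k in DOCTOR_ITEMS}
    # Composite: positive only if all 3 doctor items were answered "Always".
    outcomes["all_three_doctor_items"] = all(outcomes[k] for k in DOCTOR_ITEMS)
    outcomes[RECOMMEND_ITEM] = survey[RECOMMEND_ITEM] == "Definitely yes"
    return outcomes


def analytic_sample(surveys):
    """Coded outcomes for complete respondents only."""
    coded = (code_outcomes(s) for s in surveys)
    return [c for c in coded if c is not None]
```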

Our outcomes focused on the change from January to June 2011 to January to June 2012, the period during which the intervention was ongoing. We did not include data from July 2012 onward in the primary outcome because the intervention was suspended while volunteers were away for summer break. In addition, July marks the time when third‐year IM residents graduate and new interns start; thus, after June 2012, one‐third of the residents in the IM department had never been exposed to the intervention.

Statistical Analysis

We used a difference‐in‐differences regression analysis (DDRA) for these outcomes and controlled for other covariates in the patient populations to predict adjusted probabilities for each of the outcomes studied. The key predictors in the models were indicator variables for year (2011, 2012) and service (IM, all others) and an interaction between year and service. We controlled for perceived patient health, admission through emergency room (ER), age, race, patient education level, intensive care unit (ICU) stay, length of stay, and gender.[16] We calculated adjusted probabilities for each level of the interaction between service and year, holding all controls at their means. The 95% confidence intervals for these predictions were generated using the delta method.
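The model code is not published, so the following is only a sketch of the quantities described above under stated assumptions: a logistic link (the text says adjusted probabilities were predicted but does not name the model), a design vector laid out as intercept, year 2012, IM, year × IM, followed by the controls held at their means, and a hypothetical coefficient vector and covariance matrix. It computes an adjusted probability with a delta‐method 95% CI and the difference in differences on the probability scale.

```python
import math


def predict_prob(beta, x):
    """Logistic prediction p = 1 / (1 + exp(-x'beta)) (assumed link)."""
    eta = sum(b * xi for b, xi in zip(beta, x))
    return 1.0 / (1.0 + math.exp(-eta))


def prob_gradient(beta, x):
    """Gradient of p with respect to beta for a logistic model: p(1 - p) * x."""
    p = predict_prob(beta, x)
    return [p * (1.0 - p) * xi for xi in x]


def quad_form(g, cov):
    """g' * cov * g, the delta-method variance."""
    n = len(g)
    return sum(g[i] * cov[i][j] * g[j] for i in range(n) for j in range(n))


def adjusted_prob_ci(beta, cov, x, z=1.96):
    """Adjusted probability at covariate profile x with a delta-method 95% CI."""
    p = predict_prob(beta, x)
    se = math.sqrt(quad_form(prob_gradient(beta, x), cov))
    return p, p - z * se, p + z * se


def did_with_se(beta, cov, profiles):
    """Difference in differences of adjusted probabilities and its delta-method SE.

    profiles maps ("IM" | "other", 2011 | 2012) to a design vector x
    (hypothetical layout: [1, year2012, IM, year2012*IM, control means...]).
    """
    signs = {("IM", 2012): 1, ("IM", 2011): -1, ("other", 2012): -1, ("other", 2011): 1}
    did = sum(s * predict_prob(beta, profiles[k]) for k, s in signs.items())
    grad = [0.0] * len(beta)
    for k, s in signs.items():
        for i, gi in enumerate(prob_gradient(beta, profiles[k])):
            grad[i] += s * gi
    return did, math.sqrt(quad_form(grad, cov))
```

Because the contrast is a linear combination of 4 predicted probabilities, its gradient is the same signed combination of their gradients, which is what `did_with_se` accumulates. In Stata, `margins` with the `atmeans` option produces comparable delta‐method predictions.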

We compared the changes in HCAHPS results for RRUCLAMC Department of Medicine patients with those for patients in all other RRUCLAMC departments and with the national averages. Because we had access only to national average point estimates, not to individual responses from the national sample, we could not perform statistical tests involving the national cohort. The prespecified significance threshold was a P value of 0.05. Stata 13 (StataCorp, College Station, TX) was used for statistical analysis. The study received institutional review board exempt status.

RESULTS

Sample Size and Excluded Cases

There were initially 3637 HCAHPS patient cases. We dropped all HCAHPS cases that were missing values for outcome or demographic/explanatory variables. We dropped 226 cases due to 1 or more missing outcome variables, and we dropped 322 cases due to 1 or more missing demographic/explanatory variables. This resulted in 548 total dropped cases and a final sample size of 3089 (see Supporting Information, Appendix B, in the online version of this article). Of the 548 dropped cases, 228 cases were in the IM cohort and 320 cases from the rest of the hospital. There were 993 patients in the UCLA IM cohort and 2096 patients in the control cohort from all other UCLA adult departments. Patients excluded due to missing data were similar to the patients included in the final analysis except for 2 differences. Patients excluded were older (63 years vs 58 years, P<0.01) and more likely to have been admitted from the ER (57.4% vs 39.6%, P<0.01) than the patients we had included.
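The case counts above can be cross‐checked against each other and against the yearly cohort sizes in Table 1. The sketch below simply restates the reported figures and confirms that they reconcile; the constant and function names are illustrative.

```python
# Figures as reported in the Results and Table 1; nothing here is new data.
INITIAL_CASES = 3637
DROPPED_MISSING_OUTCOME = 226
DROPPED_MISSING_COVARIATE = 322
DROPPED_BY_COHORT = {"IM": 228, "other": 320}
FINAL_BY_COHORT = {"IM": 993, "other": 2096}
TABLE1_N = {("IM", 2011): 465, ("IM", 2012): 528, ("other", 2011): 865, ("other", 2012): 1231}


def reconcile():
    """Return (dropped, final, checks) where checks maps each test to True/False."""
    dropped = DROPPED_MISSING_OUTCOME + DROPPED_MISSING_COVARIATE
    final = INITIAL_CASES - dropped
    checks = {
        "dropped by reason = dropped by cohort": dropped == sum(DROPPED_BY_COHORT.values()),
        "final = IM + control": final == sum(FINAL_BY_COHORT.values()),
        "IM = 2011 + 2012": FINAL_BY_COHORT["IM"] == TABLE1_N[("IM", 2011)] + TABLE1_N[("IM", 2012)],
        "control = 2011 + 2012": FINAL_BY_COHORT["other"] == TABLE1_N[("other", 2011)] + TABLE1_N[("other", 2012)],
    }
    return dropped, final, checks


dropped, final, checks = reconcile()
print(dropped, final, all(checks.values()))
```

Running it prints `548 3089 True`: the 548 exclusions split as 228 IM and 320 control cases, and the 993 IM and 2,096 control patients match the 2011 plus 2012 totals in Table 1.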

Patient Characteristics

The demographics of all patients discharged from RRUCLAMC who completed HCAHPS surveys from January to June 2011 and 2012 are displayed in Table 1. In both 2011 and 2012, patients in the IM cohort were significantly older, more likely to be male, had lower perceived health, and were more likely to be admitted through the emergency room than HCAHPS patients in all other UCLA adult departments. In 2011, a lower percentage of patients in the IM cohort than in the non‐IM cohort required an ICU stay (8.0% vs 20.5%, P<0.01), but there was no statistically significant difference in 2012 (20.6% vs 20.8%, P=0.9). Apart from ICU stay, the demographic characteristics of the intervention and control cohorts did not change from 2011 to 2012. The UCLA HCAHPS response rate during the study period was 29%, consistent with national results.[17, 18]

| | UCLA Internal Medicine, 2011 | All Other UCLA Adult Departments, 2011 | P (2011) | UCLA Internal Medicine, 2012 | All Other UCLA Adult Departments, 2012 | P (2012) |
|---|---|---|---|---|---|---|
| Total no. | 465 | 865 | | 528 | 1,231 | |
| Age, y | 62.8 | 55.3 | <0.01 | 65.1 | 54.9 | <0.01 |
| Length of stay, d | 5.7 | 5.7 | 0.94 | 5.8 | 4.9 | 0.19 |
| Gender, male, % | 56.6 | 44.1 | <0.01 | 55.3 | 41.4 | <0.01 |
| Education (4 years of college or greater), % | 47.3 | 49.3 | 0.5 | 47.3 | 51.3 | 0.13 |
| Patient‐perceived overall health (responding very good or excellent), % | 30.5 | 55.0 | <0.01 | 27.5 | 58.2 | <0.01 |
| Admission through emergency room, % | 75.5 | 23.8 | <0.01 | 72.4 | 23.1 | <0.01 |
| Intensive care unit stay, % | 8.0 | 20.5 | <0.01 | 20.6 | 20.8 | 0.9 |
| Ethnicity (non‐Hispanic white), % | 63.2 | 61.4 | 0.6 | 62.5 | 60.9 | 0.5 |

Difference‐in‐Differences Regression Analysis

The adjusted results of the DDRA for the physician‐related HCAHPS questions are presented in Table 2. The adjusted percentage of patients responding positively to all 3 physician‐related HCAHPS questions increased by 8.1 percentage points in the IM cohort (from 65.7% to 73.8%) and by 1.5 percentage points in the control cohort (from 64.4% to 65.9%) (P=0.04). The adjusted percentage of patients responding that doctors always treated them with courtesy and respect increased by 5.1 percentage points in the IM cohort (from 83.8% to 88.9%) and by 1.0 percentage point in the control cohort (from 83.3% to 84.3%) (P=0.09). The adjusted percentage responding that doctors always listened carefully to them increased by 6.0 percentage points in the IM cohort (from 75.6% to 81.6%) and by 1.2 percentage points in the control cohort (from 75.2% to 76.4%) (P=0.1). The adjusted percentage responding that doctors always explained things in a way they could understand increased by 7.8 percentage points in the IM cohort (from 72.1% to 79.9%) and by 1.0 percentage point in the control cohort (from 72.2% to 73.2%) (P=0.03). The national average increased by no more than 3.1 percentage points on any of the 4 questions. There was also a significant improvement in the percentage of patients who would definitely recommend this hospital to their friends and family: the adjusted percentage increased by 7.1 percentage points in the IM cohort (from 82.7% to 89.8%) and by 1.5 percentage points in the control group (from 84.1% to 85.6%) (P=0.02).
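The P values above refer to the difference in differences (as Table 2 labels them): the change in the IM cohort minus the change in the control cohort. The point estimates can be recovered from the adjusted percentages reported in the text with simple arithmetic, as in the sketch below. It reproduces only the point estimates, not the P values, which come from the regression model; the dictionary keys are illustrative labels.

```python
# Adjusted percentages (2011, 2012) as reported in the text.
ADJUSTED = {
    "all 3 doctor items": {"IM": (65.7, 73.8), "control": (64.4, 65.9)},
    "courtesy and respect": {"IM": (83.8, 88.9), "control": (83.3, 84.3)},
    "listened carefully": {"IM": (75.6, 81.6), "control": (75.2, 76.4)},
    "explained understandably": {"IM": (72.1, 79.9), "control": (72.2, 73.2)},
    "definitely recommend": {"IM": (82.7, 89.8), "control": (84.1, 85.6)},
}


def diff_in_diff(outcome):
    """(IM 2012 - IM 2011) - (control 2012 - control 2011), in percentage points."""
    im_pre, im_post = ADJUSTED[outcome]["IM"]
    c_pre, c_post = ADJUSTED[outcome]["control"]
    # Round to 1 decimal to match the precision of the reported inputs.
    return round((im_post - im_pre) - (c_post - c_pre), 1)


for name in ADJUSTED:
    print(f"{name}: {diff_in_diff(name):+.1f} points")
```

The 5 estimates come out to 6.6, 4.1, 4.8, 6.8, and 5.6 percentage points; the 4.1 for courtesy and respect matches the difference in differences shown in Table 2.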

| | UCLA IM | All Other UCLA Adult Departments | National Average |
|---|---|---|---|
| % Patients responding that their doctors always treated them with courtesy and respect | | | |
| January to June 2011, preintervention (95% CI) | 83.8 (80.5–87.1) | 83.3 (80.7–85.9) | 82.4 |
| January to June 2012, postintervention (95% CI) | 88.9 (86.3–91.4) | 84.3 (82.1–86.5) | 85.5 |
| Change from 2011 to 2012, January to June | 5.1 | 1.0 | 3.1 |
| Change in UCLA IM minus change in all other UCLA adult departments, difference in differences | 4.1 | | |
| P value of difference in differences between IM and the rest of the hospital | 0.09 | | |
| % Patients responding that their doctors always listened carefully | | | |