
Sepsis and heart failure are two common, costly, and deadly conditions. Among hospitalized Medicare patients, these conditions rank as the first and second most frequent principal diagnoses accounting for over $33 billion in spending across all payers.1 One-third to one-half of all hospital deaths are estimated to occur in patients with sepsis,2 and heart failure is listed as a contributing factor in over 10% of deaths in the United States.3

Previous research shows that evidence-based care decisions can impact the outcomes for these patients. For example, sepsis patients receiving intravenous fluids, blood cultures, broad-spectrum antibiotics, and lactate measurement within three hours of presentation have lower mortality rates.4 In heart failure, key interventions such as the appropriate use of ACE inhibitors, beta blockers, and referral to disease management programs reduce morbidity and mortality.5

However, rapid dissemination and adoption of evidence-based guidelines remain a challenge.6,7 Policy makers have introduced incentives and penalties to support adoption, with varying levels of success. After four years of Centers for Medicare and Medicaid Services (CMS) penalties for hospitals with excess heart failure readmissions, only 21% performed well enough to avoid a penalty in 2017.8 CMS has been tracking sepsis bundle adherence as a core measure, but the rate in 2018 sat at just 54%.9 It is clear that new solutions are needed.10

AdventHealth (formerly Adventist Health System) is a growing, faith-based health system with hospitals across nine states. AdventHealth is a national leader in quality, safety, and patient satisfaction but is not immune to the challenges of delivering consistent, evidence-based care across an extensive network. To accelerate system-wide practice change, AdventHealth’s Office of Clinical Excellence (OCE) partnered with QURE Healthcare and Premier, Inc., to implement a physician engagement and care standardization collaboration involving nearly 100 hospitalists at eight facilities across five states.

This paper describes the results of the Adventist QURE Quality Project (AQQP), which used QURE’s validated, simulation-based measurement and feedback approach to engage hospitalists and standardize evidence-based practices for patients with sepsis and heart failure. We documented specific areas of variation identified in the simulations, how those practices changed through serial feedback, and the impact of those changes on real-world outcomes and costs.

METHODS

Setting

AdventHealth is headquartered in Altamonte Springs, Florida, and operates facilities in nine states, including 48 hospitals. The OCE, which comprises physician leaders, project managers, and data analysts, sponsored the project from July 2016 through July 2018.

Study Participants

AdventHealth hospitals were invited to enroll their hospitalists in AQQP; eight AdventHealth hospitals across five states, representing 91 physicians and 16 nurse practitioners/physician assistants (APPs), agreed to participate. Participants included both AdventHealth-employed providers and contracted hospitalist groups. Provider participation was voluntary and not tied to financial incentives; however, participants received Continuing Medical Education credit and, if applicable, Maintenance of Certification points through the American Board of Internal Medicine.

Quasi-experimental Design

We used AdventHealth hospitals not participating in AQQP as a quasi-experimental control group. We leveraged this to measure the impact of concurrent secular effects, such as order sets and other system-wide training, that could also improve practice and outcomes in our study.

Study Objectives and Approach

The explicit goals of AQQP were to (1) measure how sepsis and heart failure patients are cared for across AdventHealth using Clinical Performance and Value (CPV) case simulations, (2) provide a forum for hospitalists to discuss clinical variation, and (3) reduce unneeded variation to improve quality and reduce cost. QURE developed 12 CPV simulated patient cases (six sepsis and six heart failure cases) with case-specific, evidence-based scoring criteria tied to national and AdventHealth guidelines. AdventHealth order sets were embedded in the cases and accessible by participants as they cared for their patients.

CPV vignettes are simulated patient cases administered online, and have been validated as an accurate and responsive measure of clinical decision-making in both ambulatory11-13 and inpatient settings.14,15 Cases take 20-30 minutes each to complete and simulate a typical clinical encounter: taking the medical history, performing a physical examination, ordering tests, making the diagnosis, implementing initial treatment, and outlining a follow-up plan. Each case has predefined, evidence-based scoring criteria for each care domain. Cases and scoring criteria were reviewed by AdventHealth hospitalist program leaders and physician leaders in OCE. Provider responses were double-scored by trained physician abstractors. Scores range from 0%-100%, with higher scores reflecting greater alignment with best practice recommendations.

In each round of the project, AQQP participants completed two CPV cases, received personalized online feedback reports on their care decisions, and met (at the various sites and via web conference) for a facilitated group discussion on areas of high group variation. The personal feedback reports included the participant’s case score compared to the group average, a list of high-priority personalized improvement opportunities, a summary of the cost of unneeded care items, and links to relevant references. The group discussions focused on six items of high variation. Six total rounds of CPV measurement and feedback were held, one every four months.

At the study’s conclusion, we administered a brief satisfaction survey, asking providers to rate various aspects of the project on a five-point Likert scale.

Data

The study used two primary data sources: (1) care decisions made in the CPV simulated cases and (2) patient-level utilization data from Premier Inc.’s QualityAdvisor™ (QA) data system. QA integrates quality, safety, and financial data from AdventHealth’s electronic medical record, claims data, charge master, and other resources. QA also calculates expected performance for critical measures, including cost per case and length of stay (LOS), based on a proprietary algorithm that uses DRG classification, severity of illness, risk of mortality, and other patient risk factors. We pulled patient-level observed and expected data for AQQP qualifying physicians, defined as physicians participating in a majority of CPV measurement rounds. Of the 107 total hospitalists who participated, six providers did not participate in enough CPV rounds, and 22 providers left AdventHealth and could not be included in a patient-level impact analysis. These providers were replaced with 21 new hospitalists who were enrolled in the study and included in the CPV analysis but who did not have patient-level data before AQQP enrollment. Overall, 58 providers met the qualifying criteria to be included in the impact analysis. We compared their performance to a group of 96 hospitalists at facilities that were not participating in the project. Comparator facilities were selected based on quantitative measures of size and demographics matching those of the AQQP facilities, while ensuring that both sets of hospitals (comparator and AQQP) exhibited similar levels of engagement with AdventHealth quality activities such as quality dashboard performance and order set usage. Baseline patient-level cost and LOS data covered October 2015 to June 2016, and these measures were re-measured annually throughout the project, from July 2016 to June 2018.

Statistical Analyses

We analyzed three primary outcomes: (1) general CPV-measured improvements in each round (scored against evidence-based scoring criteria); (2) disease-specific CPV improvements over each round; and (3) changes in patient-level outcomes and economic savings among AdventHealth pneumonia/sepsis and heart failure patients from the aforementioned improvements. We used Student’s t-test to analyze continuous outcome variables (including CPV, cost of care, and length of stay data) and Fisher’s exact test for binary outcome data. All statistical analyses were performed using Stata 14.2 (StataCorp LLC, College Station, Texas).
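As an illustration of the test used for binary outcomes, the following is a minimal sketch of a two-sided Fisher’s exact test on a 2×2 table, written in standard-library Python rather than the Stata used in the study. The function name and the example counts are hypothetical and are not study data; the two-sided rule shown (summing all tables no more probable than the observed one) is a common convention and is assumed here.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]]."""
    row1, row2 = a + b, c + d
    col1 = a + c
    denom = comb(row1 + row2, col1)

    def prob(x):
        # Hypergeometric probability of x in the top-left cell, margins fixed.
        return comb(row1, x) * comb(row2, col1 - x) / denom

    p_obs = prob(a)
    lowest = max(0, col1 - row2)
    highest = min(row1, col1)
    # Sum the probabilities of every table no more likely than the observed one.
    # The small relative tolerance guards against floating-point ties.
    return sum(
        p
        for p in (prob(x) for x in range(lowest, highest + 1))
        if p <= p_obs * (1 + 1e-9)
    )


# Hypothetical counts (not study data): a care item ordered / not ordered
# in early rounds (first row) versus late rounds (second row).
p_value = fisher_exact_two_sided(8, 40, 25, 24)
print(f"two-sided p = {p_value:.4g}")
```

The exact test is a natural fit for item-level adherence data of this kind because it does not rely on large-sample approximations when cell counts are small.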

RESULTS

Baseline Characteristics and Assessment

A total of 107 AdventHealth hospitalists participated in this study (Appendix Table 1). Of these providers, 78.1% rated the organization’s focus on quality and lowering unnecessary costs as either “good” or “excellent,” but 78.8% also reported that variation in care provided by the group was “moderate” to “very high.”

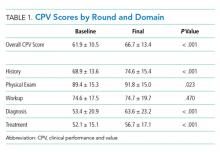

At baseline, we observed high variability in the care of pneumonia patients with sepsis (pneumonia/sepsis) and heart failure patients as measured by the care decisions obtained in the CPV cases. The overall quality score, which is a weighted average across all domains, averaged 61.9% ± 10.5% for the group (Table 1). Disaggregating scores by condition, we found an average overall score of 59.4% ± 10.9% for pneumonia/sepsis and 64.4% ± 9.4% for heart failure. The diagnosis and treatment domains, which require the most clinical judgment, had the lowest average domain scores of 53.4% ± 20.9% and 51.6% ± 15.1%, respectively.

Changes in CPV Scores

To determine the impact of serial measurement and feedback, we compared performance in the first two rounds of the project with the last two rounds. We found that overall CPV quality scores showed a 4.8%-point absolute improvement (P < .001; Table 1). We saw improvements in all care domains, and those increases were significant in all but the workup domain (P = .470); the largest increase was in diagnostic accuracy (+19.1%; P < .001).

By condition, scores showed similar, statistically significant overall improvements: +4.4%-points for pneumonia/sepsis (P = .001) and +5.5%-points for heart failure (P < .001) driven by increases in the diagnosis and treatment domains. For example, providers increased appropriate identification of HF severity by 21.5%-points (P < .001) and primary diagnosis of pneumonia/sepsis by 3.6%-points (P = .385).

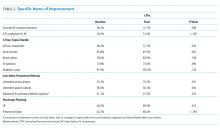

In the treatment domain, which included clinical decisions related to initial management and follow-up care, there were several specific improvements. For HF, we found that performing all the essential treatment elements—prescribing diuretics, ACE inhibitors and beta blockers for appropriate patients—improved by 13.9%-points (P = .038); ordering VTE prophylaxis increased more than threefold, from 16.6% to 51.0% (P < .001; Table 2). For pneumonia/sepsis patients, absolute adherence to all four elements of the 3-hour sepsis bundle improved by 11.7%-points (P = .034). We also saw a decrease in low-value diagnostic workup items for patient cases in which the guidelines suggest they are not needed, such as urinary antigen testing, which declined by 14.6%-points (P = .001) and sputum cultures, which declined 26.4%-points (P = .004). In addition, outlining an evidence-based discharge plan including a follow-up visit, patient education and medication reconciliation improved, especially for pneumonia/sepsis patients by 24.3%-points (P < .001).

Adherence to AdventHealth-preferred, evidence-based empiric antibiotic regimens was only 41.1% at baseline, but by the third round, adherence to preferred antibiotics had increased by 37% (P = .047). In the summer of 2017, after the third round, we updated scoring criteria for the cases to align with new AdventHealth-preferred antibiotic regimens. Not surprisingly, once the new regimens were introduced, CPV-measured adherence regressed to nearly baseline levels (42.4%) as providers adjusted to the new recommendations. However, by the end of the final round, orders for AdventHealth-preferred antibiotics had improved by 12%.

Next, we explored whether the improvements seen were due to the best performers getting better, which was not the case. At baseline, the bottom-half performers scored 10.7%-points lower than top-half performers, but over the course of the study, the bottom-half performers had an absolute improvement nearly twice that of the top half (+5.7%-points vs +2.9%-points; P = .006), indicating that these bottom performers were able to close the gap in quality of care provided. In particular, these bottom performers improved the accuracy of their primary diagnosis by 16.7%-points, compared to a 2.0%-point improvement for the top-half performers.

Patient-Level Impact on LOS and Cost Per Case

We took advantage of the quasi-experimental design, in which only a portion of AdventHealth facilities participated in the project, to compare patient-level results from AQQP-participating physicians against the engagement-matched cohort of hospitalists at nonparticipating AdventHealth facilities. We adjusted for potential differences in patient-level case mix between the two groups by comparing the observed/expected (O/E) LOS and cost per case ratios for pneumonia/sepsis and heart failure patients.
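To make the case-mix adjustment concrete, here is a minimal Python sketch of a geometric mean observed/expected ratio. It assumes the ratio is computed per patient and then averaged geometrically; the text does not specify the exact aggregation, and the expected values in the study come from Premier’s proprietary algorithm, so the function name and the numbers below are purely illustrative.

```python
from statistics import geometric_mean


def geometric_mean_oe(observed, expected):
    """Geometric mean of per-patient observed/expected ratios.

    Values above 1.0 mean patients used more than the risk-adjusted
    expectation; values below 1.0 mean they used less.
    """
    if len(observed) != len(expected):
        raise ValueError("observed and expected must have the same length")
    ratios = [o / e for o, e in zip(observed, expected)]
    return geometric_mean(ratios)


# Hypothetical LOS values in days (not study data).
observed_los = [4.0, 6.0, 3.0, 9.0]
expected_los = [4.0, 5.0, 3.5, 7.5]
print(f"O/E LOS = {geometric_mean_oe(observed_los, expected_los):.2f}")
```

A geometric mean is less sensitive than an arithmetic mean to a few very long or very costly stays, which is one plausible reason to prefer it for skewed LOS and cost data.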

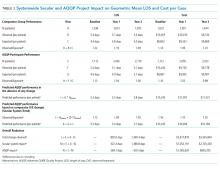

At baseline, AQQP-hospitalists performed better than the comparator group on geometric mean LOS (O/E of 1.13 vs 1.22; P = .006) but about the same on cost per case (O/E of 1.16 vs 1.14; P = .390). Throughout the project, as patient volumes and expected per-patient costs rose for both groups, O/E ratios improved among both AQQP and non-AQQP providers.

To set apart the contribution of system-wide improvements from the AQQP project-specific impacts, we applied the O/E improvement rates seen in the comparator group to the AQQP group baseline performance. We then compared that to the actual changes seen in the AQQP throughout the project to see if there was any additional benefit from the simulation measurement and feedback (Figure).

From baseline through year one of the project, the O/E LOS ratio decreased by 8.0% in the AQQP group (1.13 to 1.04; P = .004) and only 2.5% in the comparator group (1.22 to 1.19; P = .480), which is an absolute difference-in-difference of 0.06 LOS O/E. In year one, these improvements represent a reduction of 892 patient days among patients cared for by AQQP-hospitalists, of which 570 appear to be driven by the AQQP intervention and 322 are attributable to secular system-wide improvements (Table 3). In year two, both groups continued to improve, with the comparator group catching up to the AQQP group.
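The year-one LOS arithmetic can be sketched in a few lines of Python using only the O/E ratios quoted in the text. The function and key names are hypothetical. The sketch does not reproduce the patient-day split (570 vs 322), which depends on patient volumes and expected LOS values not reported here.

```python
def did_summary(treated_base, treated_follow, comp_base, comp_follow):
    """Difference-in-difference summary for O/E ratios (lower is better)."""
    treated_change = treated_base - treated_follow
    comp_change = comp_base - comp_follow
    comp_rate = comp_change / comp_base
    # Where the treated group would be if it had improved only at the
    # comparator group's relative rate (the secular trend).
    expected_from_secular = treated_base * (1 - comp_rate)
    return {
        "absolute_did": treated_change - comp_change,
        "treated_pct_improvement": treated_change / treated_base,
        "comparator_pct_improvement": comp_rate,
        "expected_from_secular_trend": expected_from_secular,
        "additional_improvement": expected_from_secular - treated_follow,
    }


# O/E LOS ratios from the text: AQQP 1.13 -> 1.04, comparator 1.22 -> 1.19.
los = did_summary(1.13, 1.04, 1.22, 1.19)
for name, value in los.items():
    print(f"{name}: {value:.3f}")
```

Applying the comparator group’s relative improvement to the AQQP baseline separates the portion of the gain that would be expected from system-wide trends from the portion beyond it.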

Geometric mean O/E cost per case also decreased for both AQQP (1.16 Baseline vs 0.98 Year 2; P < .001) and comparator physicians (1.14 Baseline vs 1.01 Year 2; P = .002), for an absolute difference-in-difference of 0.05 cost O/E. However, the AQQP-hospitalists showed greater improvement (15% vs 12%; P = .346; Table 3). As in the LOS analysis, the AQQP-specific impact on cost was markedly accelerated in year one, accounting for $1.6 million of the estimated $2.6 million total savings that year. Over the two-year project, these combined improvements drove an estimated $6.2 million in total savings among AQQP-hospitalists: $3.8 million of this appears to be driven by secular system effects and, based upon our quasi-experimental design, an additional $2.4 million is attributable to participation in AQQP.

A Levene’s test for equality of variances on the log-transformed costs and LOS shows that the AQQP reductions in costs and LOS come from reduced variation among providers. Throughout the project, the standard deviation in LOS was reduced by 4.3%, from 3.8 days to 3.6 days (P = .046), and in costs by 27.7%, from $9,391 to $6,793 (P < .001). The non-AQQP group saw a smaller but still significant 14.6% reduction in cost variation (from $9,928 to $8,482) but saw variation in LOS increase significantly by 20.6%, from 4.1 days to 5.0 days (P < .001).
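For readers unfamiliar with the test, the following is a minimal standard-library Python sketch of the classic, mean-centered Levene W statistic applied to log-transformed values. Which centering the study used is not stated, so mean centering is an assumption, and the cost figures are hypothetical. The sketch returns only the statistic; a P value would additionally require the F distribution with k − 1 and N − k degrees of freedom.

```python
from math import log
from statistics import fmean


def levene_w(*groups):
    """Mean-centered Levene W statistic for two or more groups of values."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    # Absolute deviation of each value from its own group's mean.
    z = []
    for g in groups:
        center = fmean(g)
        z.append([abs(x - center) for x in g])
    z_group_means = [fmean(zi) for zi in z]
    z_grand_mean = fmean([v for zi in z for v in zi])
    between = sum(
        len(zi) * (m - z_grand_mean) ** 2 for zi, m in zip(z, z_group_means)
    )
    within = sum((v - m) ** 2 for zi, m in zip(z, z_group_means) for v in zi)
    # Note: undefined (division by zero) if every deviation equals its group mean.
    return (n_total - k) / (k - 1) * between / within


# Hypothetical per-case costs in dollars (not study data).
baseline_costs = [6200, 9100, 15400, 7800, 22000, 5100]
followup_costs = [6900, 8200, 10100, 7600, 11800, 6400]
w = levene_w(
    [log(c) for c in baseline_costs],
    [log(c) for c in followup_costs],
)
print(f"Levene W on log costs = {w:.3f}")
```

Larger values of W indicate a greater difference in spread between the groups; equal spreads give a value of zero.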

Provider Satisfaction

At the project conclusion, we administered a brief survey. Participants were asked to rate aspects of the project on a five-point Likert scale (five being the highest), and 24 responded. The mean ratings of the relevance of the project to their practice and the overall quality of the material were 4.5 and 4.2, respectively. Providers found the individual feedback reports (3.9) slightly more helpful than the webcast group discussions (3.7; Appendix Table 2).

DISCUSSION

As health systems expand, the opportunity to standardize clinical practice within a system has the potential to enhance patient care and lower costs. However, achieving these goals is challenging when providers are dispersed across geographically separated sites and clinical decision-making is difficult to measure in a standardized way.16,17 We brought together over 100 physicians and APPs from eight different-sized hospitals in five different states to prospectively determine if we could improve care using a standardized measurement and feedback system. At baseline, we found that care varied dramatically among providers. Care varied in terms of diagnostic accuracy and treatment, which directly relate to care quality and outcomes.4 After serial measurement and feedback, we saw reductions in unnecessary testing, more guideline-based treatment decisions, and better discharge planning in the clinical vignettes.

We confirmed that changes in CPV-measured practice translated into lower costs and shorter LOS at the patient level. We further validated the improvements through a quasi-experimental design that compared these changes to those at nonparticipating AdventHealth facilities. We saw more significant cost reductions and decreases in LOS in the simulation-based measurement and feedback cohort with the biggest impact early on. The overall savings to the system, attributable specifically to the AQQP approach, is estimated to be $2.4 million.

One advantage of the online case simulation approach is the ability to bring geographically remote sites together in a shared quality-of-care discussion. The interventions specifically sought to remove barriers between facilities. For example, individual feedback reports allowed providers to see how they compared with providers at other AdventHealth facilities, and webcast results discussions enabled providers across facilities to discuss specific care decisions.

There were several limitations to the study. While the quasi-experimental design allowed us to make informative comparisons between AQQP-participating facilities and nonparticipating facilities, the assignments were not random, and participants were generally from higher performing hospital medicine groups. The determination of secular versus CPV-related improvement is confounded by other system improvement initiatives that may have impacted cost and LOS results. This is mitigated by the observation that facilities that opted to participate performed better at baseline in risk-adjusted LOS but slightly worse in cost per case, indicating that baseline differences were not dramatic. While both groups improved over time, the QURE measurement and feedback approach led to larger and more rapid gains than those seen in the comparator group. However, we could not exclude the potential that project participation at the site level was biased to those groups disposed to performance improvement. In addition, our patient-level data analysis was limited to the metrics available and did not allow us to directly compare patient-level performance across the plethora of clinically relevant CPV data that showed improvement. Our inpatient cost per case analysis showed significant savings for the system but did not include all potentially favorable economic impacts such as lower follow-up care costs for patients, more accurate reimbursement through better coding or fewer lost days of productivity.

With continued consolidation in healthcare and broader health systems spanning multiple geographies, new tools are needed to support standardized, evidence-based care across sites. This standardization is especially important, both clinically and financially, for high-volume, high-cost diseases such as sepsis and heart failure. However, changing practice cannot happen without collaborative engagement with providers. Standardized patient vignettes are an opportunity to measure and provide feedback in a systematic way that engages providers and is particularly well-suited to large systems and common clinical conditions. This analysis, from a real-world study, shows that an approach that standardizes care and lowers costs may be particularly helpful for large systems needing to bring disparate sites together as they concurrently move toward value-based payment.

Disclosures

QURE, LLC, whose intellectual property was used to prepare the cases and collect the data, was contracted by AdventHealth. Otherwise, none of the study authors report any potential conflicts to disclose.

Funding

This work was funded by a contract between AdventHealth (formerly Adventist Health System) and QURE, LLC.

1. Torio C, Moore B. National inpatient hospital costs: the most expensive conditions by payer, 2013. HCUP Statistical Brief #204. Published May 2016. http://www.hcup-us.ahrq.gov/reports/statbriefs/sb204-Most-Expensive-Hospital-Conditions.pdf. Accessed December 2018.

2. Liu V, Escobar GJ, Greene JD, et al. Hospital deaths in patients with sepsis from 2 independent cohorts. JAMA. 2014;312(1):90-92. https://doi.org/10.1001/jama.2014.5804.

3. Mozaffarian D, Benjamin EJ, Go AS, et al. Heart disease and stroke statistics—2016 update: a report from the American Heart Association. Circulation. 2016;133(4):e38-e360. https://doi.org/10.1161/CIR.0000000000000350.

4. Seymour CW, Gesten F, Prescott HC, et al. Time to treatment and mortality during mandated emergency care for sepsis. N Engl J Med. 2017;376(23):2235-2244. https://doi.org/10.1056/NEJMoa1703058.

5. Yancy CW, Jessup M, Bozkurt B, et al. 2016 ACC/AHA/HFSA focused update on new pharmacological therapy for heart failure: an update of the 2013 ACCF/AHA guideline for the management of heart failure: a report of the American College of Cardiology/American Heart Association Task Force on Clinical Practice Guidelines and the Heart Failure Society of America. Circulation. 2016;134(13):e282-e293. https://doi.org/10.1161/CIR.0000000000000460.

6. Warren JI, McLaughlin M, Bardsley J, et al. The strengths and challenges of implementing EBP in healthcare systems. Worldviews Evid Based Nurs. 2016;13(1):15-24. https://doi.org/10.1111/wvn.12149.

7. Hisham R, Ng CJ, Liew SM, Hamzah N, Ho GJ. Why is there variation in the practice of evidence-based medicine in primary care? A qualitative study. BMJ Open. 2016;6(3):e010565. https://doi.org/10.1136/bmjopen-2015-010565.

8. Boccuti C, Casillas G. Aiming for Fewer Hospital U-turns: The Medicare Hospital Readmission Reduction Program, The Henry J. Kaiser Family Foundation. https://www.kff.org/medicare/issue-brief/aiming-for-fewer-hospital-u-turns-the-medicare-hospital-readmission-reduction-program/. Accessed Mar 10, 2017.

9. Venkatesh AK, Slesinger T, Whittle J, et al. Preliminary performance on the new CMS sepsis-1 national quality measure: early insights from the emergency quality network (E-QUAL). Ann Emerg Med. 2018;71(1):10-15. https://doi.org/10.1016/j.annemergmed.2017.06.032.

10. Braithwaite J. Changing how we think about healthcare improvement. BMJ. 2018;361:k2014. https://doi.org/10.1136/bmj.k2014.

11. Peabody JW, Luck J, Glassman P, Dresselhaus TR, Lee M. Comparison of vignettes, standardized patients, and chart abstraction: a prospective validation study of 3 methods for measuring quality. JAMA. 2000;283(13):1715-1722.

12. Peabody JW, Luck J, Glassman P, et al. Measuring the quality of physician practice by using clinical vignettes: a prospective validation study. Ann Intern Med. 2004;141(10):771-780. https://doi.org/10.7326/0003-4819-141-10-200411160-00008.

13. Peabody JW, Shimkhada S, Quimbo S, Solon O, Javier X, McCulloch C. The impact of performance incentives on health outcomes: results from a cluster randomized controlled trial in the Philippines. Health Policy Plan. 2014;29(5):615-621. https://doi.org/10.1093/heapol/czt047.

14. Weems L, Strong J, Plummer D, et al. A quality collaboration in heart failure and pneumonia inpatient care at Novant Health: standardizing hospitalist practices to improve patient care and system performance. Jt Comm J Qual Patient Saf. 2019;45(3):199-206. https://doi.org/10.1016/j.jcjq.2018.09.005.

15. Bergmann S, Tran M, Robison K, et al. Standardizing hospitalist practice in sepsis and COPD care. BMJ Qual Safety. 2019. https://doi.org/10.1136/bmjqs-2018-008829.

16. Chassin MR, Galvin RM; the National Roundtable on Health Care Quality. The urgent need to improve health care quality: Institute of Medicine National Roundtable on Health Care Quality. JAMA. 1998;280(11):1000-1005. https://doi.org/10.1001/jama.280.11.1000.

17. Gupta DM, Boland RJ, Aron DC. The physician’s experience of changing clinical practice: a struggle to unlearn. Implementation Sci. 2017;12(1):28. https://doi.org/10.1186/s13012-017-0555-2.

Sepsis and heart failure are two common, costly, and deadly conditions. Among hospitalized Medicare patients, these conditions rank as the first and second most frequent principal diagnoses accounting for over $33 billion in spending across all payers.1 One-third to one-half of all hospital deaths are estimated to occur in patients with sepsis,2 and heart failure is listed as a contributing factor in over 10% of deaths in the United States.3

Previous research shows that evidence-based care decisions can impact the outcomes for these patients. For example, sepsis patients receiving intravenous fluids, blood cultures, broad-spectrum antibiotics, and lactate measurement within three hours of presentation have lower mortality rates.4 In heart failure, key interventions such as the appropriate use of ACE inhibitors, beta blockers, and referral to disease management programs reduce morbidity and mortality.5

However, rapid dissemination and adoption of evidence-based guidelines remain a challenge.6,7 Policy makers have introduced incentives and penalties to support adoption, with varying levels of success. After four years of Centers for Medicare and Medicaid Services (CMS) penalties for hospitals with excess heart failure readmissions, only 21% performed well enough to avoid a penalty in 2017.8 CMS has been tracking sepsis bundle adherence as a core measure, but the rate in 2018 sat at just 54%.9 It is clear that new solutions are needed.10

AdventHealth (formerly Adventist Health System) is a growing, faith-based health system with hospitals across nine states. AdventHealth is a national leader in quality, safety, and patient satisfaction but is not immune to the challenges of delivering consistent, evidence-based care across an extensive network. To accelerate system-wide practice change, AdventHealth’s Office of Clinical Excellence (OCE) partnered with QURE Healthcare and Premier, Inc., to implement a physician engagement and care standardization collaboration involving nearly 100 hospitalists at eight facilities across five states.

This paper describes the results of the Adventist QURE Quality Project (AQQP), which used QURE’s validated, simulation-based measurement and feedback approach to engage hospitalists and standardize evidence-based practices for patients with sepsis and heart failure. We documented specific areas of variation identified in the simulations, how those practices changed through serial feedback, and the impact of those changes on real-world outcomes and costs.

METHODS

Setting

AdventHealth has its headquarters in Altamonte Springs, Florida. It has facilities in nine states, which includes 48 hospitals. The OCE is comprised of physician leaders, project managers, and data analysts who sponsored the project from July 2016 through July 2018.

Study Participants

AdventHealth hospitals were invited to enroll their hospitalists in AQQP; eight AdventHealth hospitals across five states, representing 91 physicians and 16 nurse practitioners/physician’s assistants (APPs), agreed to participate. Participants included both AdventHealth-employed providers and contracted hospitalist groups. Provider participation was voluntary and not tied to financial incentives; however, participants received Continuing Medical Education credit and, if applicable, Maintenance of Certification points through the American Board of Internal Medicine.

Quasi-experimental Design

We used AdventHealth hospitals not participating in AQQP as a quasi-experimental control group. We leveraged this to measure the impact of concurrent secular effects, such as order sets and other system-wide training, that could also improve practice and outcomes in our study.

Study Objectives and Approach

The explicit goals of AQQP were to (1) measure how sepsis and heart failure patients are cared for across AdventHealth using Clinical Performance and Value (CPV) case simulations, (2) provide a forum for hospitalists to discuss clinical variation, and (3) reduce unneeded variation to improve quality and reduce cost. QURE developed 12 CPV simulated patient cases (six sepsis and six heart failure cases) with case-specific evidenced-based scoring criteria tied to national and AdventHealth evidence-based guidelines. AdventHealth order sets were embedded in the cases and accessible by participants as they cared for their patients.

CPV vignettes are simulated patient cases administered online, and have been validated as an accurate and responsive measure of clinical decision-making in both ambulatory11-13 and inpatient settings.14,15 Cases take 20-30 minutes each to complete and simulate a typical clinical encounter: taking the medical history, performing a physical examination, ordering tests, making the diagnosis, implementing initial treatment, and outlining a follow-up plan. Each case has predefined, evidence-based scoring criteria for each care domain. Cases and scoring criteria were reviewed by AdventHealth hospitalist program leaders and physician leaders in OCE. Provider responses were double-scored by trained physician abstractors. Scores range from 0%-100%, with higher scores reflecting greater alignment with best practice recommendations.

In each round of the project, AQQP participants completed two CPV cases, received personalized online feedback reports on their care decisions, and met (at the various sites and via web conference) for a facilitated group discussion on areas of high group variation. The personal feedback reports included the participant’s case score compared to the group average, a list of high-priority personalized improvement opportunities, a summary of the cost of unneeded care items, and links to relevant references. The group discussions focused on six items of high variation. Six total rounds of CPV measurement and feedback were held, one every four months.

At the study’s conclusion, we administered a brief satisfaction survey, asking providers to rate various aspects of the project on a five-point Likert scale.

Data

The study used two primary data sources: (1) care decisions made in the CPV simulated cases and (2) patient-level utilization data from Premier Inc.’s QualityAdvisorTM (QA) data system. QA integrates quality, safety, and financial data from AdventHealth’s electronic medical record, claims data, charge master, and other resources. QualityAdvisor also calculates expected performance for critical measures, including cost per case and length of stay (LOS), based on a proprietary algorithm, which uses DRG classification, severity-of-illness, risk-of-mortality, and other patient risk factors. We pulled patient-level observed and expected data from AQQP qualifying physicians, defined as physicians participating in a majority of CPV measurement rounds. Of the 107 total hospitalists who participated, six providers did not participate in enough CPV rounds, and 22 providers left AdventHealth and could not be included in a patient-level impact analysis. These providers were replaced with 21 new hospitalists who were enrolled in the study and included in the CPV analysis but who did not have patient-level data before AQQP enrollment. Overall, 58 providers met the qualifying criteria to be included in the impact analysis. We compared their performance to a group of 96 hospitalists at facilities that were not participating in the project. Comparator facilities were selected based on quantitative measures of size and demographic matching the AQQP-facilities ensuring that both sets of hospitals (comparator and AQQP) exhibited similar levels of engagement with Advent- Health quality activities such as quality dashboard performance and order set usage. Baseline patient-level cost and LOS data covered from October 2015 to June 2016 and were re-measured annually throughout the project, from July 2016 to June 2018.

Statistical Analyses

We analyzed three primary outcomes: (1) general CPV-measured improvements in each round (scored against evidence-based scoring criteria); (2) disease-specific CPV improvements over each round; and (3) changes in patient-level outcomes and economic savings among AdventHealth pneumonia/sepsis and heart failure patients from the aforementioned improvements. We used Student’s t-test to analyze continuous outcome variables (including CPV, cost of care, and length of stay data) and Fisher’s exact test for binary outcome data. All statistical analyses were performed using Stata 14.2 (StataCorp LLC, College Station, Texas).
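As a rough illustration of the two tests named above, the sketch below computes a pooled-variance (Student's) t statistic and a two-sided Fisher's exact P value in plain Python. It is not the authors' Stata code; the function names and all input values are hypothetical and chosen only to show the mechanics. Converting the t statistic to a P value requires the t distribution, which is omitted here.

```python
from math import comb, sqrt
from statistics import mean, variance


def student_t_statistic(a, b):
    """Pooled-variance (Student's) t statistic and degrees of freedom."""
    n1, n2 = len(a), len(b)
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / df
    t = (mean(a) - mean(b)) / sqrt(pooled_var * (1 / n1 + 1 / n2))
    return t, df


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact P value for the 2x2 table [[a, b], [c, d]]."""
    row1, row2, col1, n = a + b, c + d, a + c, a + b + c + d

    def prob(k):
        # Hypergeometric probability of k in the top-left cell, margins fixed.
        return comb(row1, k) * comb(row2, col1 - k) / comb(n, col1)

    p_obs = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # Sum every table that is no more likely than the observed one.
    return sum(prob(k) for k in range(lo, hi + 1) if prob(k) <= p_obs * (1 + 1e-9))


# Hypothetical CPV scores (%) in early vs late rounds.
t, df = student_t_statistic([58.0, 62.0, 60.0, 64.0], [66.0, 63.0, 69.0, 70.0])
# Hypothetical counts: item ordered / not ordered, early vs late rounds.
p = fisher_exact_two_sided(3, 1, 1, 3)
print(f"t = {t:.2f} on {df} df; Fisher two-sided P = {p:.3f}")
```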

RESULTS

Baseline Characteristics and Assessment

A total of 107 AdventHealth hospitalists participated in this study (Appendix Table 1). Of these providers, 78.1% rated the organization’s focus on quality and lowering unnecessary costs as either “good” or “excellent,” but 78.8% also reported that variation in care provided by the group was “moderate” to “very high.”

At baseline, we observed high variability in the care of pneumonia patients with sepsis (pneumonia/sepsis) and heart failure patients as measured by the care decisions obtained in the CPV cases. The overall quality score, which is a weighted average across all domains, averaged 61.9% ± 10.5% for the group (Table 1). Disaggregating scores by condition, we found an average overall score of 59.4% ± 10.9% for pneumonia/sepsis and 64.4% ± 9.4% for heart failure. The diagnosis and treatment domains, which require the most clinical judgment, had the lowest average domain scores of 53.4% ± 20.9% and 51.6% ± 15.1%, respectively.

Changes in CPV Scores

To determine the impact of serial measurement and feedback, we compared performance in the first two rounds of the project with the last two rounds. We found that overall CPV quality scores showed a 4.8%-point absolute improvement (P < .001; Table 1). We saw improvements in all care domains, and those increases were significant in all but the workup domain (P = .470); the largest increase was in diagnostic accuracy (+19.1%; P < .001).

By condition, scores showed similar, statistically significant overall improvements: +4.4%-points for pneumonia/sepsis (P = .001) and +5.5%-points for heart failure (P < .001) driven by increases in the diagnosis and treatment domains. For example, providers increased appropriate identification of HF severity by 21.5%-points (P < .001) and primary diagnosis of pneumonia/sepsis by 3.6%-points (P = .385).

In the treatment domain, which included clinical decisions related to initial management and follow-up care, there were several specific improvements. For HF, we found that performing all the essential treatment elements (prescribing diuretics, ACE inhibitors, and beta blockers for appropriate patients) improved by 13.9%-points (P = .038); ordering VTE prophylaxis increased more than threefold, from 16.6% to 51.0% (P < .001; Table 2). For pneumonia/sepsis patients, absolute adherence to all four elements of the 3-hour sepsis bundle improved by 11.7%-points (P = .034). We also saw a decrease in low-value diagnostic workup items for patient cases in which the guidelines suggest they are not needed, such as urinary antigen testing, which declined by 14.6%-points (P = .001), and sputum cultures, which declined by 26.4%-points (P = .004). In addition, outlining an evidence-based discharge plan that included a follow-up visit, patient education, and medication reconciliation improved, most notably for pneumonia/sepsis patients, by 24.3%-points (P < .001).

Adherence to AdventHealth-preferred, evidence-based empiric antibiotic regimens was only 41.1% at baseline, but by the third round, adherence to preferred antibiotics had increased by 37% (P = .047). In the summer of 2017, after the third round, we updated scoring criteria for the cases to align with new AdventHealth-preferred antibiotic regimens. Not surprisingly, when the new antibiotic regimens were introduced, CPV-measured adherence to the new guidelines regressed to nearly baseline levels (42.4%) as providers adjusted to the new recommendations. However, by the end of the final round, orders for AdventHealth-preferred antibiotics had improved by 12%.

Next, we explored whether the improvements seen were due to the best performers getting better, which was not the case. At baseline, the bottom-half performers scored 10.7%-points lower than top-half performers, but over the course of the study, the bottom-half performers had an absolute improvement nearly twice that of the top half (+5.7%-points vs +2.9%-points; P = .006), indicating that these bottom performers were able to close the gap in the quality of care provided. In particular, these bottom performers improved the accuracy of their primary diagnosis by 16.7%-points, compared to a 2.0%-point improvement for the top-half performers.

Patient-Level Impact on LOS and Cost Per Case

We took advantage of the quasi-experimental design, in which only a portion of AdventHealth facilities participated in the project, to compare patient-level results from AQQP-participating physicians against the engagement-matched cohort of hospitalists at nonparticipating AdventHealth facilities. We adjusted for potential differences in patient-level case mix between the two groups by comparing the observed/expected (O/E) LOS and cost per case ratios for pneumonia/sepsis and heart failure patients.

At baseline, AQQP-hospitalists performed better on geometric LOS than the comparator group (O/E of 1.13 vs 1.22; P = .006) but about the same on cost per case (O/E of 1.16 vs 1.14; P = .390). Throughout the project, as patient volumes and expected per-patient costs rose for both groups, O/E ratios improved among both AQQP and non-AQQP providers.

To set apart the contribution of system-wide improvements from the AQQP project-specific impacts, we applied the O/E improvement rates seen in the comparator group to the AQQP group baseline performance. We then compared that to the actual changes seen in the AQQP throughout the project to see if there was any additional benefit from the simulation measurement and feedback (Figure).

From baseline through year one of the project, the O/E LOS ratio decreased by 8.0% in the AQQP group (1.13 to 1.04; P = .004) and only 2.5% in the comparator group (1.22 to 1.19; P = .480), which is an absolute difference-in-difference of 0.06 LOS O/E. In year one, these improvements represent a reduction of 892 patient days among patients cared for by AQQP-hospitalists, of which 570 appear to be driven by the AQQP intervention and 322 appear attributable to secular system-wide improvements (Table 3). In year two, both groups continued to improve, with the comparator group catching up to the AQQP group.
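The arithmetic behind this comparison can be sketched as follows, using only the rounded O/E LOS ratios reported in this paragraph. The function name and the decomposition into a counterfactual ratio are illustrative assumptions rather than the authors' exact procedure, and because the inputs are rounded ratios rather than patient-level data, the output will not reproduce the patient-day figures in Table 3.

```python
def did_summary(aqqp_base, aqqp_follow, comp_base, comp_follow):
    """Summarize O/E ratio changes for the AQQP and comparator groups."""
    aqqp_change = aqqp_base - aqqp_follow      # absolute drop, AQQP group
    comp_change = comp_base - comp_follow      # absolute drop, comparator group
    # Counterfactual: AQQP baseline improving at the comparator's relative rate.
    counterfactual = aqqp_base * (comp_follow / comp_base)
    return {
        "aqqp_pct_drop": 100 * aqqp_change / aqqp_base,
        "comp_pct_drop": 100 * comp_change / comp_base,
        "abs_did": aqqp_change - comp_change,
        "counterfactual": counterfactual,
        "excess_over_secular": counterfactual - aqqp_follow,
    }


# Rounded O/E LOS ratios from the text: baseline -> year one.
los = did_summary(aqqp_base=1.13, aqqp_follow=1.04, comp_base=1.22, comp_follow=1.19)
for name, value in los.items():
    print(f"{name}: {value:.3f}")
```

With these inputs the absolute difference-in-difference comes to about 0.06, in line with the figure reported above.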

Geometric mean O/E cost per case also decreased for both AQQP (1.16 Baseline vs 0.98 Year 2; P < .001) and comparator physicians (1.14 Baseline vs 1.01 Year 2; P = .002), for an absolute difference-in-difference of 0.05 cost O/E. The AQQP-hospitalists showed a greater improvement, although the difference was not statistically significant (15% vs 12%; P = .346; Table 3). As in the LOS analysis, the AQQP-specific impact on cost was markedly accelerated in year one, accounting for $1.6 million of the estimated $2.6 million total savings that year. Over the two-year project, these combined improvements drove an estimated $6.2 million in total savings among AQQP-hospitalists: $3.8 million appears to be driven by secular system effects and, based upon our quasi-experimental design, an additional $2.4 million is attributable to participation in AQQP.

A Levene’s test for equality of variances on the log-transformed costs and LOS shows that the AQQP reductions in costs and LOS come from reduced variation among providers. Throughout the project, the standard deviation in LOS was reduced by 4.3%, from 3.8 days to 3.6 days (P = .046), and the standard deviation in costs by 27.7%, from $9,391 to $6,793 (P < .001). The non-AQQP group saw a smaller but still significant 14.6% reduction in cost variation (from $9,928 to $8,482), but its variation in LOS increased significantly by 20.6%, from 4.1 days to 5.0 days (P < .001).
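For readers unfamiliar with Levene's test, the sketch below computes its W statistic on log-transformed values in plain Python. It uses the original mean-centered form of the test; the source does not say which centering was used, so that choice, the function name, and the sample values are all assumptions. Converting W to a P value requires the F distribution with (k − 1, N − k) degrees of freedom, which is omitted here.

```python
from math import log
from statistics import fmean


def levene_w(*groups):
    """Levene's W statistic (mean-centered) for two or more groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    # Absolute deviation of each value from its own group mean.
    z = []
    for g in groups:
        center = fmean(g)
        z.append([abs(y - center) for y in g])
    z_group_means = [fmean(zi) for zi in z]
    z_grand_mean = fmean([v for zi in z for v in zi])
    # Between-group and within-group sums of squares of the deviations.
    between = sum(len(zi) * (m - z_grand_mean) ** 2 for zi, m in zip(z, z_group_means))
    within = sum((v - m) ** 2 for zi, m in zip(z, z_group_means) for v in zi)
    return (n_total - k) / (k - 1) * between / within


# Hypothetical per-case costs (USD) at baseline and at follow-up.
baseline = [4200.0, 9800.0, 15500.0, 31000.0, 7600.0, 22000.0]
follow_up = [6100.0, 8900.0, 11200.0, 14800.0, 7400.0, 12500.0]
w = levene_w([log(x) for x in baseline], [log(x) for x in follow_up])
print(f"Levene W on log costs = {w:.2f}")
```

A larger W indicates a bigger difference in spread between the groups; groups with identical spread give a W of zero.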

Provider Satisfaction

At the project conclusion, we administered a brief survey. Participants were asked to rate aspects of the project on a five-point Likert scale (with five being the highest), and 24 responded. The mean ratings of the relevance of the project to their practice and the overall quality of the material were 4.5 and 4.2, respectively. Providers found the individual feedback reports (3.9) slightly more helpful than the webcast group discussions (3.7; Appendix Table 2).

DISCUSSION

As health systems expand, the opportunity to standardize clinical practice within a system has the potential to enhance patient care and lower costs. However, achieving these goals is challenging when providers are dispersed across geographically separated sites and clinical decision-making is difficult to measure in a standardized way.16,17 We brought together over 100 physicians and APPs from eight different-sized hospitals in five different states to prospectively determine if we could improve care using a standardized measurement and feedback system. At baseline, we found that care varied dramatically among providers. Care varied in terms of diagnostic accuracy and treatment, which directly relate to care quality and outcomes.4 After serial measurement and feedback, we saw reductions in unnecessary testing, more guideline-based treatment decisions, and better discharge planning in the clinical vignettes.

We confirmed that changes in CPV-measured practice translated into lower costs and shorter LOS at the patient level. We further validated the improvements through a quasi-experimental design that compared these changes to those at nonparticipating AdventHealth facilities. We saw larger cost reductions and decreases in LOS in the simulation-based measurement and feedback cohort, with the biggest impact early on. The overall savings to the system attributable specifically to the AQQP approach are estimated to be $2.4 million.

One advantage of the online case simulation approach is the ability to bring geographically remote sites together in a shared quality-of-care discussion. The interventions specifically sought to remove barriers between facilities. For example, individual feedback reports allowed providers to see how they compared with providers at other AdventHealth facilities, and webcast results discussions enabled providers across facilities to discuss specific care decisions.

There were several limitations to the study. While the quasi-experimental design allowed us to make informative comparisons between AQQP-participating facilities and nonparticipating facilities, the assignments were not random, and participants were generally from higher performing hospital medicine groups. The determination of secular versus CPV-related improvement is confounded by other system improvement initiatives that may have impacted cost and LOS results. This is mitigated by the observation that facilities that opted to participate performed better at baseline in risk-adjusted LOS but slightly worse in cost per case, indicating that baseline differences were not dramatic. While both groups improved over time, the QURE measurement and feedback approach led to larger and more rapid gains than those seen in the comparator group. However, we could not exclude the possibility that project participation at the site level was biased toward those groups disposed to performance improvement. In addition, our patient-level data analysis was limited to the metrics available and did not allow us to directly compare patient-level performance across the plethora of clinically relevant CPV data that showed improvement. Our inpatient cost per case analysis showed significant savings for the system but did not include all potentially favorable economic impacts, such as lower follow-up care costs for patients, more accurate reimbursement through better coding, or fewer lost days of productivity.

With continued consolidation in healthcare and broader health systems spanning multiple geographies, new tools are needed to support standardized, evidence-based care across sites. This standardization is especially important, both clinically and financially, for high-volume, high-cost diseases such as sepsis and heart failure. However, changing practice cannot happen without collaborative engagement with providers. Standardized patient vignettes are an opportunity to measure and provide feedback in a systematic way that engages providers and is particularly well-suited to large systems and common clinical conditions. This analysis, from a real-world study, shows that an approach that standardizes care and lowers costs may be particularly helpful for large systems needing to bring disparate sites together as they concurrently move toward value-based payment.

Disclosures

QURE, LLC, whose intellectual property was used to prepare the cases and collect the data, was contracted by AdventHealth. Otherwise, the study authors report no potential conflicts to disclose.

Funding

This work was funded by a contract between AdventHealth (formerly Adventist Health System) and QURE, LLC.

In each round of the project, AQQP participants completed two CPV cases, received personalized online feedback reports on their care decisions, and met (at the various sites and via web conference) for a facilitated group discussion on areas of high group variation. The personal feedback reports included the participant’s case score compared to the group average, a list of high-priority personalized improvement opportunities, a summary of the cost of unneeded care items, and links to relevant references. The group discussions focused on six items of high variation. Six total rounds of CPV measurement and feedback were held, one every four months.

At the study’s conclusion, we administered a brief satisfaction survey, asking providers to rate various aspects of the project on a five-point Likert scale.

Data

The study used two primary data sources: (1) care decisions made in the CPV simulated cases and (2) patient-level utilization data from Premier Inc.’s QualityAdvisorTM (QA) data system. QA integrates quality, safety, and financial data from AdventHealth’s electronic medical record, claims data, charge master, and other resources. QualityAdvisor also calculates expected performance for critical measures, including cost per case and length of stay (LOS), based on a proprietary algorithm, which uses DRG classification, severity-of-illness, risk-of-mortality, and other patient risk factors. We pulled patient-level observed and expected data from AQQP qualifying physicians, defined as physicians participating in a majority of CPV measurement rounds. Of the 107 total hospitalists who participated, six providers did not participate in enough CPV rounds, and 22 providers left AdventHealth and could not be included in a patient-level impact analysis. These providers were replaced with 21 new hospitalists who were enrolled in the study and included in the CPV analysis but who did not have patient-level data before AQQP enrollment. Overall, 58 providers met the qualifying criteria to be included in the impact analysis. We compared their performance to a group of 96 hospitalists at facilities that were not participating in the project. Comparator facilities were selected based on quantitative measures of size and demographic matching the AQQP-facilities ensuring that both sets of hospitals (comparator and AQQP) exhibited similar levels of engagement with Advent- Health quality activities such as quality dashboard performance and order set usage. Baseline patient-level cost and LOS data covered from October 2015 to June 2016 and were re-measured annually throughout the project, from July 2016 to June 2018.

Statistical Analyses

We analyzed three primary outcomes: (1) general CPV-measured improvements in each round (scored against evidence-based scoring criteria); (2) disease-specific CPV improvements over each round; and (3) changes in patient-level outcomes and economic savings among AdventHealth pneumonia/sepsis and heart failure patients from the aforementioned improvements. We used Student’s t-test to analyze continuous outcome variables (including CPV, cost of care, and length of stay data) and Fisher’s exact test for binary outcome data. All statistical analyses were performed using Stata 14.2 (StataCorp LLC, College Station, Texas).

RESULTS

Baseline Characteristics and Assessment

A total of 107 AdventHealth hospitalists participated in this study (Appendix Table 1). 78.1% of these providers rated the organization’s focus on quality and lowering unnecessary costs as either “good” or “excellent,” but 78.8% also reported that variation in care provided by the group was “moderate” to “very high”.

At baseline, we observed high variability in the care of pneumonia patients with sepsis (pneumonia/sepsis) and heart failure patients as measured by the care decisions obtained in the CPV cases. The overall quality score, which is a weighted average across all domains, averaged 61.9% ± 10.5% for the group (Table 1). Disaggregating scores by condition, we found an average overall score of 59.4% ± 10.9% for pneumonia/sepsis and 64.4% ± 9.4% for heart failure. The diagnosis and treatment domains, which require the most clinical judgment, had the lowest average domain scores of 53.4% ± 20.9% and 51.6% ± 15.1%, respectively.

Changes in CPV Scores

To determine the impact of serial measurement and feedback, we compared performance in the first two rounds of the project with the last two rounds. We found that overall CPV quality scores showed a 4.8%-point absolute improvement (P < .001; Table 1). We saw improvements in all care domains, and those increases were significant in all but the workup (P = .470); the most significant increase was in diagnostic accuracy (+19.1%; P < .001).

By condition, scores showed similar, statistically significant overall improvements: +4.4%-points for pneumonia/sepsis (P = .001) and +5.5%-points for heart failure (P < .001) driven by increases in the diagnosis and treatment domains. For example, providers increased appropriate identification of HF severity by 21.5%-points (P < .001) and primary diagnosis of pneumonia/sepsis by 3.6%-points (P = .385).

In the treatment domain, which included clinical decisions related to initial management and follow-up care, there were several specific improvements. For HF, we found that performing all the essential treatment elements—prescribing diuretics, ACE inhibitors and beta blockers for appropriate patients—improved by 13.9%-points (P = .038); ordering VTE prophylaxis increased more than threefold, from 16.6% to 51.0% (P < .001; Table 2). For pneumonia/sepsis patients, absolute adherence to all four elements of the 3-hour sepsis bundle improved by 11.7%-points (P = .034). We also saw a decrease in low-value diagnostic workup items for patient cases in which the guidelines suggest they are not needed, such as urinary antigen testing, which declined by 14.6%-points (P = .001) and sputum cultures, which declined 26.4%-points (P = .004). In addition, outlining an evidence-based discharge plan including a follow-up visit, patient education and medication reconciliation improved, especially for pneumonia/sepsis patients by 24.3%-points (P < .001).

Adherence to AdventHealth-preferred, evidence-based empiric antibiotic regimens was only 41.1% at baseline, but by the third round, adherence to preferred antibiotics had increased by 37% (P = .047). In the summer of 2017, after the third round, we updated scoring criteria for the cases to align with new AdventHealth-preferred antibiotic regimens. Not surprisingly, when the new antibiotic regimens were introduced, CPV-measured adherence to the new guidelines then regressed to nearly baseline levels (42.4%) as providers adjusted to the new recommendations. However, by the end of the final round, AdventHealth-preferred antibiotics orders improved by 12%.

Next, we explored whether the improvements seen were due to the best performers getting better, which was not the case. At baseline the bottom-half performers scored 10.7%-points less than top-half performers but, over the course of the study, we found that the bottom half performers had an absolute improvement nearly two times of those in the top half (+5.7%-points vs +2.9%-points; P = .006), indicating that these bottom performers were able to close the gap in quality-of-care provided. In particular, these bottom performers improved the accuracy of their primary diagnosis by 16.7%-points, compared to a 2.0%-point improvement for the top-half performers.

Patient-Level Impact on LOS and Cost Per Case

We took advantage of the quasi-experimental design, in which only a portion of AdventHealth facilities participated in the project, to compare patient-level results from AQQP-participating physicians against the engagement-matched cohort of hospitalists at nonparticipating AdventHealth facilities. We adjusted for potential differences in patient-level case mix between the two groups by comparing the observed/expected (O/E) LOS and cost per case ratios for pneumonia/sepsis and heart failure patients.

At baseline, AQQP-hospitalists performed better on geometric LOS versus the comparator group (O/E of 1.13 vs 1.22; P = .006) but at about the same on cost per case (O/E of 1.16 vs 1.14; P = .390). Throughout the project, as patient volumes and expected per patient costs rose for both groups, O/E ratios improved among both AQQP and non-AQQP providers.

To set apart the contribution of system-wide improvements from the AQQP project-specific impacts, we applied the O/E improvement rates seen in the comparator group to the AQQP group baseline performance. We then compared that to the actual changes seen in the AQQP throughout the project to see if there was any additional benefit from the simulation measurement and feedback (Figure).

From baseline through year one of the project, the O/E LOS ratio decreased by 8.0% in the AQQP group (1.13 to 1.04; P = .004) and only 2.5% in the comparator group (1.22 to 1.19; P = .480), which is an absolute difference-in-difference of 0.06 LOS O/E. In year 1, these improvements represent a reduction in 892 patient days among patients cared for by AQQP-hospitalists of which 570 appear to be driven by the AQQP intervention and 322 attributable to secular system-wide improvements (Table 3). In year two, both groups continued to improve with the comparator group catching up to the AQQP group.

Geometric mean O/E cost per case also decreased for both AQQP (1.16 Baseline vs 0.98 Year 2; P < .001) and comparator physicians (1.14 Baseline vs 1.01 Year 2; P = .002), for an absolute difference-in-difference of 0.05 cost O/E. However, the AQQP-hospitalists showed greater improvement (15% vs 12%; P = .346; Table 3). As in the LOS analysis, the AQQP-specific impact on cost was markedly accelerated in year one, accounting for $1.6 million of the estimated $2.6 million total savings that year. Over the two-year project, these combined improvements drove an estimated $6.2 million in total savings among AQQP-hospitalists: $3.8 million of this appear to be driven by secular system effects and, based upon our quasi-experimental design, an additional $2.4 million of which are attributable to participation in AQQP.

A Levene’s test for equality of variances on the log-transformed costs and LOS shows that the AQQP reductions in costs and LOS come from reduced variation among providers. Throughout the project, the standard deviation in LOS was reduced by 4.3%, from 3.8 days to 3.6 days (P = .046) and costs by 27.7%, from $9,391 to $6,793 (P < .001). The non-AQQP group saw a smaller, but still significant 14.6% reduction in cost variation (from $9,928 to $8,482), but saw a variation in LOS increase significantly by 20.6%, from 4.1 days to 5.0 days (P < .001).

Provider Satisfaction

At the project conclusion, we administered a brief survey. Participants were asked to rate aspects of the project (a five-point Likert scale with five being the highest), and 24 responded. The mean ratings of the relevance of the project to their practice and the overall quality of the material were 4.5 and 4.2, respectively. Providers found the individual feedback reports (3.9) slightly more helpful than the webcast group discussions (3.7; Appendix Table 2 ).

DISCUSSION

As health systems expand, the opportunity to standardize clinical practice within a system has the potential to enhance patient care and lower costs. However, achieving these goals is challenging when providers are dispersed across geographically separated sites and clinical decision-making is difficult to measure in a standardized way.16,17 We brought together over 100 physicians and APPs from eight different-sized hospitals in five different states to prospectively determine if we could improve care using a standardized measurement and feedback system. At baseline, we found that care varied dramatically among providers. Care varied in terms of diagnostic accuracy and treatment, which directly relate to care quality and outcomes.4 After serial measurement and feedback, we saw reductions in unnecessary testing, more guideline-based treatment decisions, and better discharge planning in the clinical vignettes.

We confirmed that changes in CPV-measured practice translated into lower costs and shorter LOS at the patient level. We further validated the improvements through a quasi-experimental design that compared these changes to those at nonparticipating AdventHealth facilities. We saw more significant cost reductions and decreases in LOS in the simulation-based measurement and feedback cohort with the biggest impact early on. The overall savings to the system, attributable specifically to the AQQP approach, is estimated to be $2.4 million.

One advantage of the online case simulation approach is the ability to bring geographically remote sites together in a shared quality-of-care discussion. The interventions specifically sought to remove barriers between facilities. For example, individual feedback reports allowed providers to see how they compare with providers at other AdventHealth facilities and webcast results discussions enable providers across facilities to discuss specific care decisions.

There were several limitations to the study. While the quasi-experimental design allowed us to make informative comparisons between AQQP-participating facilities and nonparticipating facilities, the assignments were not random, and participants were generally from higher performing hospital medicine groups. The determination of secular versus CPV-related improvement is confounded by other system improvement initiatives that may have impacted cost and LOS results. This is mitigated by the observation that facilities that opted to participate performed better at baseline in risk-adjusted LOS but slightly worse in cost per case, indicating that baseline differences were not dramatic. While both groups improved over time, the QURE measurement and feedback approach led to larger and more rapid gains than those seen in the comparator group. However, we could not exclude the potential that project participation at the site level was biased to those groups disposed to performance improvement. In addition, our patient-level data analysis was limited to the metrics available and did not allow us to directly compare patient-level performance across the plethora of clinically relevant CPV data that showed improvement. Our inpatient cost per case analysis showed significant savings for the system but did not include all potentially favorable economic impacts such as lower follow-up care costs for patients, more accurate reimbursement through better coding or fewer lost days of productivity.

With continued consolidation in healthcare and broader health systems spanning multiple geographies, new tools are needed to support standardized, evidence-based care across sites. This standardization is especially important, both clinically and financially, for high-volume, high-cost diseases such as sepsis and heart failure. However, changing practice cannot happen without collaborative engagement with providers. Standardized patient vignettes are an opportunity to measure and provide feedback in a systematic way that engages providers and is particularly well-suited to large systems and common clinical conditions. This analysis, from a real-world study, shows that an approach that standardizes care and lowers costs may be particularly helpful for large systems needing to bring disparate sites together as they concurrently move toward value-based payment.

Disclosures

QURE, LLC, whose intellectual property was used to prepare the cases and collect the data, was contracted by AdventHealth. Otherwise, none of the study authors report any potential conflicts to disclose.

Funding

This work was funded by a contract between AdventHealth (formerly Adventist Health System) and QURE, LLC.

1. Torio C, Moore B. National inpatient hospital costs: the most expensive conditions by payer, 2013. HCUP Statistical Brief #204. Published May 2016. http://www.hcup-us.ahrq.gov/reports/statbriefs/sb204-Most-Expensive-Hospital-Conditions.pdf. Accessed December 2018.

2. Liu V, Escobar GJ, Greene JD, et al. Hospital deaths in patients with sepsis from 2 independent cohorts. JAMA. 2014;312(1):90-92. https://doi.org/10.1001/jama.2014.5804.

3. Mozaffarian D, Benjamin EJ, Go AS, et al. Heart disease and stroke statistics—2016 update: a report from the American Heart Association. Circulation. 2016;133(4):e38-e360. https://doi.org/10.1161/CIR.0000000000000350.

4. Seymour CW, Gesten F, Prescott HC, et al. Time to treatment and mortality during mandated emergency care for sepsis. N Engl J Med. 2017;376(23):2235-2244. https://doi.org/10.1056/NEJMoa1703058.

5. Yancy CW, Jessup M, Bozkurt B, et al. 2016 ACC/AHA/HFSA focused update on new pharmacological therapy for heart failure: an update of the 2013 ACCF/AHA guideline for the management of heart failure: a report of the American College of Cardiology/American Heart Association Task Force on Clinical Practice Guidelines and the Heart Failure Society of America. Circulation. 2016;134(13):e282-e293. https://doi.org/10.1161/CIR.0000000000000460.

6. Warren JI, McLaughlin M, Bardsley J, et al. The strengths and challenges of implementing EBP in healthcare systems. Worldviews Evid Based Nurs. 2016;13(1):15-24. https://doi.org/10.1111/wvn.12149.

7. Hisham R, Ng CJ, Liew SM, Hamzah N, Ho GJ. Why is there variation in the practice of evidence-based medicine in primary care? A qualitative study. BMJ Open. 2016;6(3):e010565. https://doi.org/10.1136/bmjopen-2015-010565.

8. Boccuti C, Casillas G. Aiming for Fewer Hospital U-turns: The Medicare Hospital Readmission Reduction Program, The Henry J. Kaiser Family Foundation. https://www.kff.org/medicare/issue-brief/aiming-for-fewer-hospital-u-turns-the-medicare-hospital-readmission-reduction-program/. Accessed Mar 10, 2017.

9. Venkatesh AK, Slesinger T, Whittle J, et al. Preliminary performance on the new CMS sepsis-1 national quality measure: early insights from the emergency quality network (E-QUAL). Ann Emerg Med. 2018;71(1):10-15. https://doi.org/10.1016/j.annemergmed.2017.06.032.

10. Braithwaite J. Changing how we think about healthcare improvement. BMJ. 2018;361:k2014. https://doi.org/10.1136/bmj.k2014.

11. Peabody JW, Luck J, Glassman P, Dresselhaus TR, Lee M. Comparison of vignettes, standardized patients, and chart abstraction: a prospective validation study of 3 methods for measuring quality. JAMA. 2000;283(13):1715-1722.

12. Peabody JW, Luck J, Glassman P, et al. Measuring the quality of physician practice by using clinical vignettes: a prospective validation study. Ann Intern Med. 2004;141(10):771-780. https://doi.org/10.7326/0003-4819-141-10-200411160-00008.

13. Peabody JW, Shimkhada S, Quimbo S, Solon O, Javier X, McCulloch C. The impact of performance incentives on health outcomes: results from a cluster randomized controlled trial in the Philippines. Health Policy Plan. 2014;29(5):615-621. https://doi.org/10.1093/heapol/czt047.

14. Weems L, Strong J, Plummer D, et al. A quality collaboration in heart failure and pneumonia inpatient care at Novant Health: standardizing hospitalist practices to improve patient care and system performance. Jt Comm J Qual Patient Saf. 2019;45(3):199-206. https://doi.org/10.1016/j.jcjq.2018.09.005.

15. Bergmann S, Tran M, Robison K, et al. Standardizing hospitalist practice in sepsis and COPD care. BMJ Qual Saf. 2019. https://doi.org/10.1136/bmjqs-2018-008829.

16. Chassin MR, Galvin RM; the National Roundtable on Health Care Quality. The urgent need to improve health care quality: Institute of Medicine National Roundtable on Health Care Quality. JAMA. 1998;280(11):1000-1005. https://doi.org/10.1001/jama.280.11.1000.

17. Gupta DM, Boland RJ, Aron DC. The physician’s experience of changing clinical practice: a struggle to unlearn. Implement Sci. 2017;12(1):28. https://doi.org/10.1186/s13012-017-0555-2.

© 2019 Society of Hospital Medicine