
“July Phenomenon” Revisited

The July Phenomenon is a commonly used term referring to poor hospital‐patient outcomes when inexperienced house‐staff start their postgraduate training in July. Beyond being an interesting observation, the validity of the July Phenomenon has policy implications for teaching hospitals and residency training programs.

Twenty‐three published studies have tried to determine whether the arrival of new house‐staff is associated with increased patient mortality (see Supporting Appendix A in the online version of this article).1–23 Although these studies are important attempts to test the validity of the July Phenomenon, they have notable limitations. All but four of them2, 4, 6, 16 limited their analysis to patients with a specific diagnosis, patients within a particular hospital unit, or patients treated by a particular specialty. Many used data from a single hospital.1, 3, 4, 10, 11, 14, 15, 20, 22 Nine studies did not include data from the entire year in their analyses,4, 6, 7, 10, 13, 15–17, 23 and one did not include data from multiple years.22 One study analyzed death counts alone and did not account for the number of hospitalized people at risk.6 Finally, several studies controlled for no markers of illness severity,6, 10, 21 whereas several other studies contained only crude measures of comorbidity and severity of illness.14

In this study, we analyzed data from our teaching hospital to determine whether there is evidence of the July Phenomenon at our center. We used a highly discriminative and well‐calibrated multivariate model to calculate each patient's risk of dying in hospital, and used these risks to quantify the ratio of observed to expected hospital deaths. Using this ratio as our outcome statistic, we determined whether our hospital experiences a July Phenomenon.

METHODS

This study was approved by The Ottawa Hospital (TOH) Research Ethics Board.

Study Setting

TOH is a tertiary‐care teaching hospital with two inpatient campuses. The hospital operates within a publicly funded health care system, serves a population of approximately 1.5 million people in Ottawa and Eastern Ontario, treats all major trauma patients for the region, and provides most of the oncological care in the region.

TOH is the primary medical teaching hospital at the University of Ottawa. In 2010, there were 197 residents starting their first year of postgraduate training in one of 29 programs.

Inclusion Criteria

The study period extended from April 15, 2004 to December 31, 2008. We chose this start date because our hospital switched to new coding systems for procedures and diagnoses in April 2002. Since these coding systems contribute to our outcome statistic, we allowed a long period (ie, two years) for coding patterns to stabilize, so that any changes we observed would not reflect evolving coding practices. We ended the study in December 2008 because this was the last date with complete data when we started the analysis.

We included all medical, surgical, and obstetrical patients admitted to TOH during this time except those who were younger than 15 years old, were transferred to or from another acute care hospital, or were obstetrical patients hospitalized for routine childbirth. These patients were excluded because the multivariate model that we used to calculate the risk of death in hospital (discussed below) does not apply to them.24 These exclusions accounted for 25.4% of all admissions during the study period (36,820 patients younger than 15 years old; 12,931 transferred to or from another hospital; and 44,220 uncomplicated admissions for childbirth).
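The three exclusion criteria amount to a simple per-admission filter. The sketch below is illustrative only: the `Admission` record and its field names are hypothetical and do not reflect the actual TOHDW schema.

```python
from dataclasses import dataclass


@dataclass
class Admission:
    """Hypothetical admission record; field names are illustrative, not TOHDW's."""
    age_years: int
    transferred_from_acute_hospital: bool
    transferred_to_acute_hospital: bool
    routine_childbirth: bool


def is_eligible(adm: Admission) -> bool:
    """Return True unless the admission meets one of the three exclusion criteria."""
    if adm.age_years < 15:  # younger than 15 years old
        return False
    if adm.transferred_from_acute_hospital or adm.transferred_to_acute_hospital:
        return False  # transferred to or from another acute care hospital
    if adm.routine_childbirth:  # obstetrical admission for routine childbirth
        return False
    return True


admissions = [
    Admission(72, False, False, False),  # eligible
    Admission(0, False, False, False),   # newborn: excluded
    Admission(55, True, False, False),   # transfer in: excluded
    Admission(29, False, False, True),   # routine childbirth: excluded
]
cohort = [a for a in admissions if is_eligible(a)]
print(len(cohort))
```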

All data used in this study came from The Ottawa Hospital Data Warehouse (TOHDW). This is a repository of clinical, laboratory, and administrative data originating from the hospital's major operational information systems. TOHDW contains information on patient demographics and diagnoses, as well as procedures and patient transfers between different units or hospital services during the admission.

Primary Outcome: Ratio of Observed to Expected Number of Deaths per Week

For each study day, we counted the number of hospital deaths using the patient registration table in TOHDW. These daily counts were totaled for each week to ensure numeric stability, especially in our subgroup analyses.

We calculated the weekly expected number of hospital deaths using an extension of the Escobar model.24 The Escobar model is a logistic regression model that estimates the probability of death in hospital; it was derived and internally validated on almost 260,000 hospitalizations at 17 hospitals in the Kaiser Permanente Health Plan. It includes six covariates that are measurable at admission: patient age; patient sex; admission urgency (ie, elective or emergent) and service (ie, medical or surgical); admission diagnosis; severity of acute illness, as measured by the Laboratory‐based Acute Physiology Score (LAPS); and chronic comorbidities, as measured by the COmorbidity Point Score (COPS). Hospitalizations were grouped by admission diagnosis. The final model had excellent discrimination (c‐statistic 0.88) and calibration (P value of the Hosmer–Lemeshow statistic for the entire cohort, 0.66). The model was externally validated at our center with a c‐statistic of 0.901.25
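The c‐statistic reported for the Escobar model measures discrimination: it is the probability that a randomly chosen patient who died was assigned a higher predicted risk than a randomly chosen survivor, with ties counted as one half. A minimal pairwise sketch of that definition follows. The risks and outcomes are made‐up illustrative values, not study data, and a cohort of hundreds of thousands of hospitalizations would call for a rank‐based formula rather than this loop over every pair.

```python
from itertools import product


def c_statistic(risks, died):
    """Concordance (c) statistic: share of (death, survivor) pairs in which the
    patient who died had the higher predicted risk; ties count as 1/2."""
    cases = [r for r, d in zip(risks, died) if d]
    controls = [r for r, d in zip(risks, died) if not d]
    if not cases or not controls:
        raise ValueError("need at least one death and one survivor")
    score = 0.0
    for r_case, r_control in product(cases, controls):
        if r_case > r_control:
            score += 1.0
        elif r_case == r_control:
            score += 0.5
    return score / (len(cases) * len(controls))


# Hypothetical predicted risks and outcomes (1 = died in hospital).
risks = [0.02, 0.20, 0.40, 0.05, 0.70, 0.15]
died = [0, 0, 1, 0, 1, 1]
print(c_statistic(risks, died))  # 8 of 9 pairs are concordant
```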

We extended the Escobar model in several ways (Wong et al., Derivation and validation of a model to predict the daily risk of death in hospital, 2010, unpublished work). First, we converted it into a survival (rather than a logistic) model so that it could estimate a daily probability of death in hospital. Second, we included the same covariates as the Escobar model, except that we expressed LAPS as a time‐dependent covariate (meaning that the model accounted for changes in its value during the hospitalization). Finally, we added other time‐dependent covariates: admission to the intensive care unit, undergoing significant procedures, and awaiting long‐term care. This model had excellent discrimination (concordance probability 0.895, 95% confidence interval [CI] 0.889–0.902) and calibration.
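The exact specification of the extended model is unpublished, so the following is only a sketch of the general idea under stated assumptions: a proportional‐hazards form with a hypothetical constant baseline daily hazard and hypothetical coefficients, in which covariates such as LAPS and ICU status are re‐evaluated each day. It converts each day's covariate values into a probability of dying that day, and then combines the daily probabilities into a probability of dying during the stay.

```python
import math

# Hypothetical values for illustration only; NOT the coefficients of the study's model.
BASELINE_DAILY_HAZARD = 0.001
COEFS = {"laps": 0.03, "in_icu": 1.2, "awaiting_ltc": 0.5}


def daily_death_probability(covariates):
    """P(death during one day), assuming a proportional-hazards form with a hazard
    that is constant within the day: 1 - exp(-h0 * exp(linear predictor))."""
    lp = sum(COEFS[name] * value for name, value in covariates.items())
    return 1.0 - math.exp(-BASELINE_DAILY_HAZARD * math.exp(lp))


# One hypothetical stay; the time-dependent covariates are updated each day.
stay = [
    {"laps": 40, "in_icu": 1, "awaiting_ltc": 0},
    {"laps": 25, "in_icu": 1, "awaiting_ltc": 0},
    {"laps": 10, "in_icu": 0, "awaiting_ltc": 0},
]
daily_risks = [daily_death_probability(day) for day in stay]

survival = 1.0
for p in daily_risks:
    survival *= 1.0 - p  # the patient must survive each successive day
stay_risk = 1.0 - survival
print([round(p, 5) for p in daily_risks], round(stay_risk, 5))
```

Because LAPS falls and the patient leaves the ICU, the predicted daily risk drops over the stay, which is exactly the kind of change an admission‐only model cannot capture.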

We used this survival model to estimate the daily risk of death for every patient in hospital on each study day. Summing these risks over all patients in hospital on a given day gave the expected number of hospital deaths for that day, and these daily values were totaled for each week.
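Summing daily risks and then totaling them by week can be sketched as follows. The input format (one `(date, predicted risk)` pair per patient per hospital day) and the use of ISO calendar weeks are assumptions for illustration; the article does not state how weeks were delimited.

```python
from collections import defaultdict
from datetime import date


def expected_deaths_by_week(patient_days):
    """patient_days: iterable of (day, predicted risk of death that day),
    with one entry for each patient in hospital on that day."""
    daily = defaultdict(float)
    for day, risk in patient_days:
        daily[day] += risk  # expected deaths on this day
    weekly = defaultdict(float)
    for day, expected in daily.items():
        iso = day.isocalendar()  # (ISO year, ISO week, ISO weekday)
        weekly[(iso[0], iso[1])] += expected
    return dict(weekly)


patient_days = [
    (date(2008, 1, 7), 0.25),    # Monday of ISO week 2, 2008
    (date(2008, 1, 7), 0.50),    # a second patient on the same day
    (date(2008, 1, 8), 0.25),
    (date(2008, 1, 14), 0.125),  # Monday of ISO week 3, 2008
]
print(expected_deaths_by_week(patient_days))
```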

The outcome statistic for this study was the ratio of the observed to expected weekly number of hospital deaths. Ratios exceeding 1 indicate that more deaths were observed than expected, given the distribution of important covariates among patients in hospital during that week. This outcome statistic has several advantages. First, it accounts for the number of patients in hospital each day. This is important because the number of hospital deaths increases as the number of people in hospital increases. Second, it accounts for each patient's severity of illness on each hospital day. Because the expected number of deaths per day was calculated with a multivariate survival model that included time‐dependent covariates, each individual's predicted hazard of death (which was summed over the entire hospital to give the total expected number of deaths each day) reflected the latest values of these covariates. Previous analyses accounted only for the risk of death at admission.
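The ratio itself is a simple division, but a small example shows why it adjusts for hospital census: a week with twice as many patients, and therefore twice as many expected deaths, yields the same ratio when it also has twice as many observed deaths. The week labels and counts below are hypothetical.

```python
def observed_to_expected(observed, expected):
    """Weekly O/E ratios. `observed` maps week -> death count (weeks without
    deaths may be omitted); `expected` maps week -> summed predicted risk."""
    ratios = {}
    for week, exp_deaths in sorted(expected.items()):
        if exp_deaths <= 0:
            raise ValueError(f"expected deaths must be positive (week {week})")
        ratios[week] = observed.get(week, 0) / exp_deaths
    return ratios


observed = {"week A": 20, "week B": 40, "week C": 33}
expected = {"week A": 20.0, "week B": 40.0, "week C": 30.0}
print(observed_to_expected(observed, expected))
```

Weeks A and B both give a ratio of 1.0 despite the doubled death count, while week C, with more deaths than predicted, gives about 1.1.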

Expressing Physician Experience

The latent measure26 in all July Phenomenon studies is collective house‐staff physician experience. It is quantified by a surrogate date variable in which July 1 (the date that new house‐staff start their training in North America) represents minimal experience and June 30 represents maximal experience. We expressed collective physician experience on a scale from 0 (minimum experience) on July 1 to 1 (maximum experience) on June 30. A similar approach has been used previously13 and has advantages over the other methods used to capture collective house‐staff experience. In the stratified, incomplete approach,4–7, 9–11, 13, 15–17 periods with inexperienced house‐staff (eg, July and August) are grouped together and compared to periods with experienced house‐staff (eg, May and June), while all other data are ignored. The cut‐points for this stratification are arbitrary, and the method discards large amounts of data. In the stratified, complete approach, periods with inexperienced house‐staff (eg, July and August) are grouped together and compared to all other times of the year.8, 12, 14, 18–20, 22 This is potentially less biased because no data are lost. However, the cut‐point at which house‐staff transition from inexperienced to experienced is still arbitrary, and the model assumes that the transition is sudden. This is suboptimal because experience is acquired gradually and continuously.
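One way to implement the 0‐to‐1 experience scale is to measure the elapsed fraction of the academic year that began on the most recent July 1. This sketch rests on our own assumptions: the scale rises linearly in calendar days, and a weekly outcome would be paired with one representative day (for example, the week's midpoint). Neither detail is specified above.

```python
from datetime import date


def academic_year_fraction(d):
    """0.0 on July 1, rising linearly in calendar days to 1.0 on the following June 30."""
    start_year = d.year if d.month >= 7 else d.year - 1
    start = date(start_year, 7, 1)
    end = date(start_year + 1, 6, 30)
    return (d - start).days / (end - start).days


for d in (date(2005, 7, 1), date(2006, 1, 1), date(2006, 6, 30)):
    print(d, round(academic_year_fraction(d), 3))
```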

The pattern by which collective physician experience changes between July 1 and June 30 is unknown. We therefore expressed this evolution using five different patterns (linear, square, square root, cubic, and natural logarithm; see Supporting Appendix B in the online version of this article).
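The five patterns named in Table 2 (linear, square, square root, cubic, and natural logarithm) can be written as transformations of the linear 0‐to‐1 experience value x. Supporting Appendix B is not reproduced here, so the formulas below are assumptions: x, x², √x, and x³ follow from the pattern names, and for the natural logarithm (ln 0 is undefined) we assume the rescaled form ln(1 + (e − 1)x), which also runs from 0 to 1.

```python
import math

# Assumed forms; each maps 0 -> 0 and 1 -> 1 (to floating-point precision).
PATTERNS = {
    "linear": lambda x: x,
    "square": lambda x: x ** 2,       # slow early gain, rapid late gain
    "square_root": math.sqrt,         # rapid early gain, slow late gain
    "cubic": lambda x: x ** 3,
    "natural_log": lambda x: math.log1p((math.e - 1.0) * x),  # hypothetical rescaling
}

for name, f in PATTERNS.items():
    print(f"{name:12s}", [round(f(x), 3) for x in (0.0, 0.25, 0.5, 1.0)])
```

Each transformed value would be used in turn as the single predictor in the regression described under Analysis; because every pattern spans 0 to 1, each fitted coefficient can be read as the change in the outcome from July 1 to June 30.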

Analysis

We first tested our outcome variable for autocorrelation using Ljung‐Box statistics at lags 6 and 12 in PROC ARIMA (SAS 9.2, SAS Institute, Cary, NC). If significant autocorrelation was absent, we planned to use linear regression to associate the ratio of the observed to expected number of weekly deaths (the outcome variable) with collective first‐year physician experience (the predictor variable); if significant autocorrelation was present, we planned to use time‐series methods.
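The analysis was run in SAS; purely as an illustration, the sketch below computes the Ljung–Box statistic, Q = n(n+2) Σₖ ρₖ²/(n−k) summed over lags k = 1…h, and an ordinary least‐squares slope with an approximate 95% CI. Two simplifications are our own assumptions: the chi‐square tail probability uses a closed form that is valid only for even degrees of freedom (sufficient for lags 6 and 12), and the CI uses the normal critical value 1.96 in place of the t quantile, a close approximation with a few hundred weekly observations.

```python
import math


def chi2_sf_even(q, df):
    """P(X > q) for X ~ chi-square with an even number of degrees of freedom."""
    if df <= 0 or df % 2:
        raise ValueError("df must be a positive even integer")
    half = q / 2.0
    return math.exp(-half) * sum(half ** i / math.factorial(i) for i in range(df // 2))


def ljung_box(series, lag):
    """Ljung-Box Q statistic and p-value for autocorrelation at lags 1..lag."""
    n = len(series)
    mean = sum(series) / n
    dev = [x - mean for x in series]
    denom = sum(d * d for d in dev)
    q = 0.0
    for k in range(1, lag + 1):
        rho_k = sum(dev[t] * dev[t - k] for t in range(k, n)) / denom  # lag-k autocorrelation
        q += rho_k ** 2 / (n - k)
    q *= n * (n + 2)
    return q, chi2_sf_even(q, lag)


def ols_slope_ci(x, y, z=1.96):
    """OLS slope of y on x with an approximate (normal-theory) 95% CI."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    rss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    se = math.sqrt(rss / (n - 2) / sxx)
    return slope, (slope - z * se, slope + z * se)


# Illustrative checks with made-up numbers (not study data).
alternating = [1.2, 0.8] * 50        # strongly autocorrelated at lag 1
print(ljung_box(alternating, 6)[1])  # p-value is essentially 0
x = [i / 9 for i in range(10)]
y = [1.0 + 0.1 * xi for xi in x]     # an exact line, so the CI collapses onto the slope
print(ols_slope_ci(x, y))
```

In the workflow described above, `ljung_box` would be applied to the weekly O/E ratios at lags 6 and 12, and `ols_slope_ci` would be used only if neither test indicated autocorrelation.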

In our baseline analysis, we included all hospitalizations together. In stratified analyses, we categorized hospitalizations by admission status (emergent vs elective) and admission service (medicine vs surgery).

RESULTS

Between April 15, 2004 and December 31, 2008, The Ottawa Hospital had a total of 152,017 inpatient admissions and 107,731 same day surgeries (an annual rate of 32,222 and 22,835, respectively; an average daily rate of 88 and 63, respectively) that met our study's inclusion criteria. These 259,748 encounters included 164,318 people. Table 1 provides an overall description of the study population.
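The annual and daily rates in the preceding paragraph can be reproduced with simple arithmetic. The sketch assumes that both the first and last study days are counted (1,722 days) and that a year has 365 days; these conventions are our assumption, chosen because they reproduce the reported figures.

```python
from datetime import date

start, end = date(2004, 4, 15), date(2008, 12, 31)
study_days = (end - start).days + 1  # count both the first and the last day
study_years = study_days / 365       # assumed 365-day year

counts = {"inpatient admissions": 152_017, "same-day surgeries": 107_731}
for label, n in counts.items():
    print(f"{label}: {n / study_years:,.0f} per year, {n / study_days:.0f} per day")
print("total encounters:", sum(counts.values()))
```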

| Characteristic | Value |
|---|---|
| Patients/hospitalizations, n | 164,318/259,748 |
| Deaths in‐hospital, n (%) | 7,679 (3.0) |
| Length of admission in days, median (IQR) | 2 (1–6) |
| Male, n (%) | 124,848 (48.1) |
| Age at admission, median (IQR) | 60 (46–74) |
| Admission type, n (%) | |
| Elective surgical | 136,406 (52.5) |
| Elective nonsurgical | 20,104 (7.7) |
| Emergent surgical | 32,046 (12.3) |
| Emergent nonsurgical | 71,192 (27.4) |
| Elixhauser score, median (IQR) | 0 (0–4) |
| LAPS at admission, median (IQR) | 0 (0–15) |
| At least one admission to intensive care unit, n (%) | 7,779 (3.0) |
| At least one alternative level of care episode, n (%) | 6,971 (2.7) |
| At least one PIMR procedure, n (%) | 47,288 (18.2) |
| First PIMR score,* median (IQR) | 2 (52) |

Weekly Deaths: Observed, Expected, and Ratio

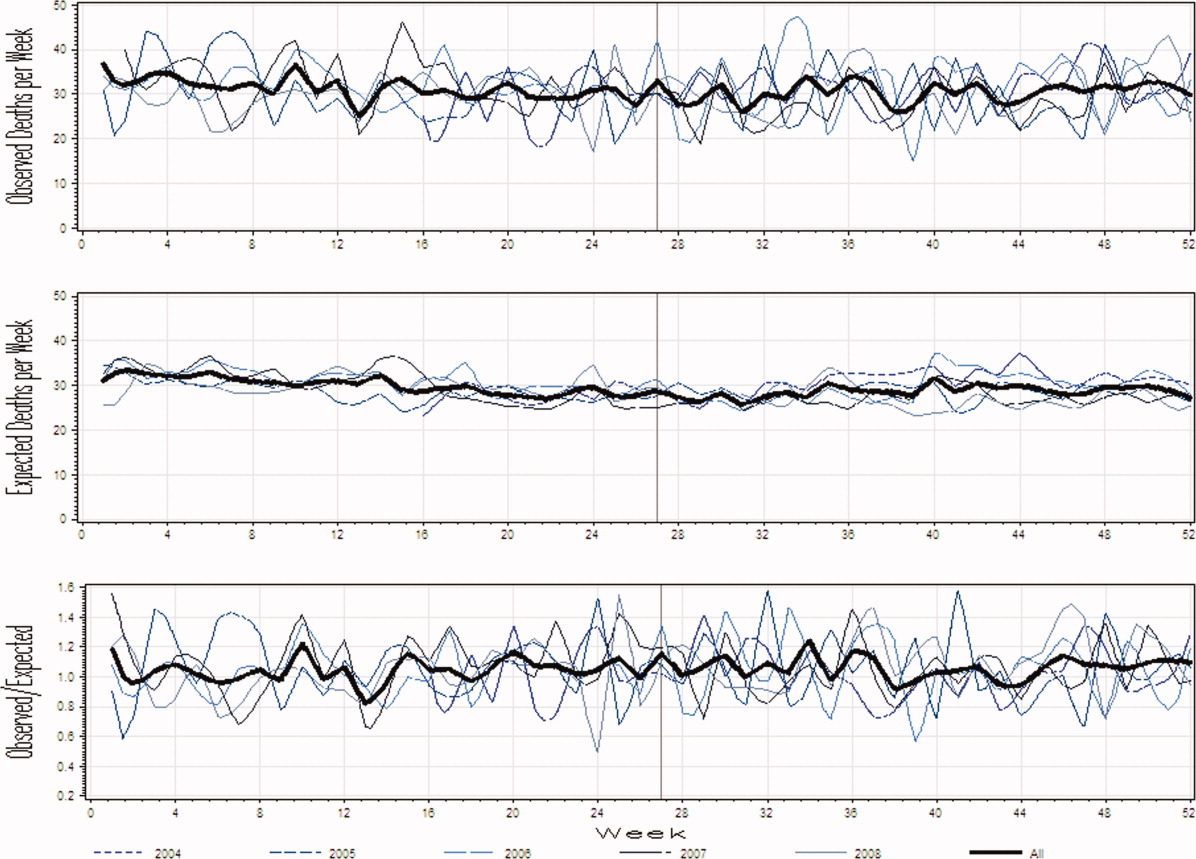

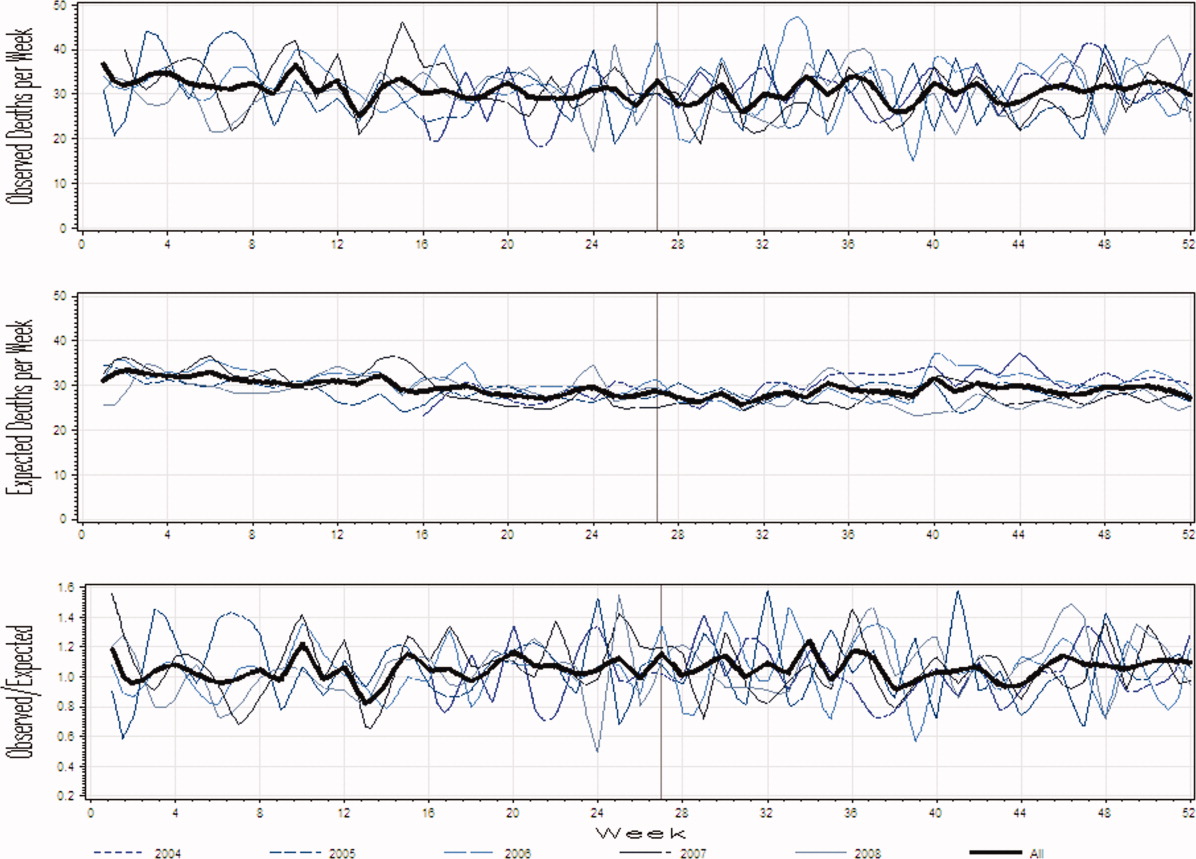

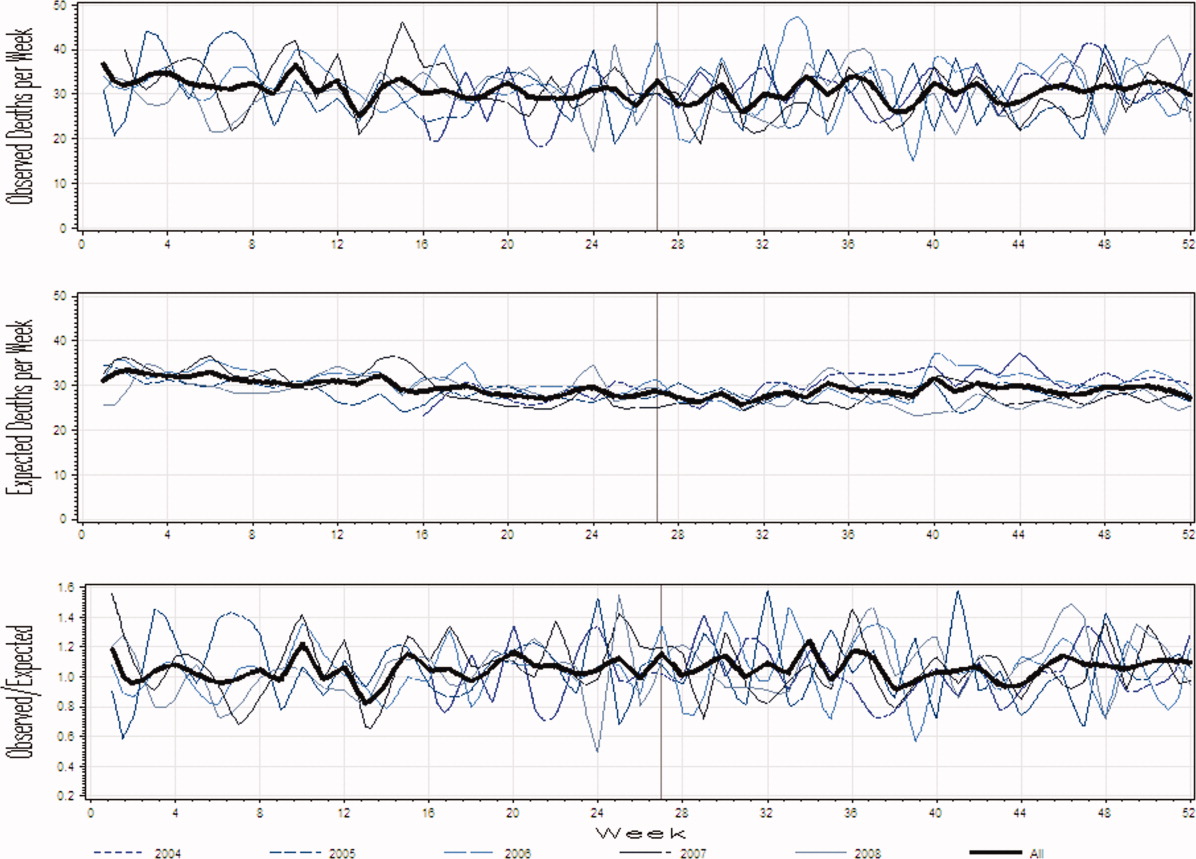

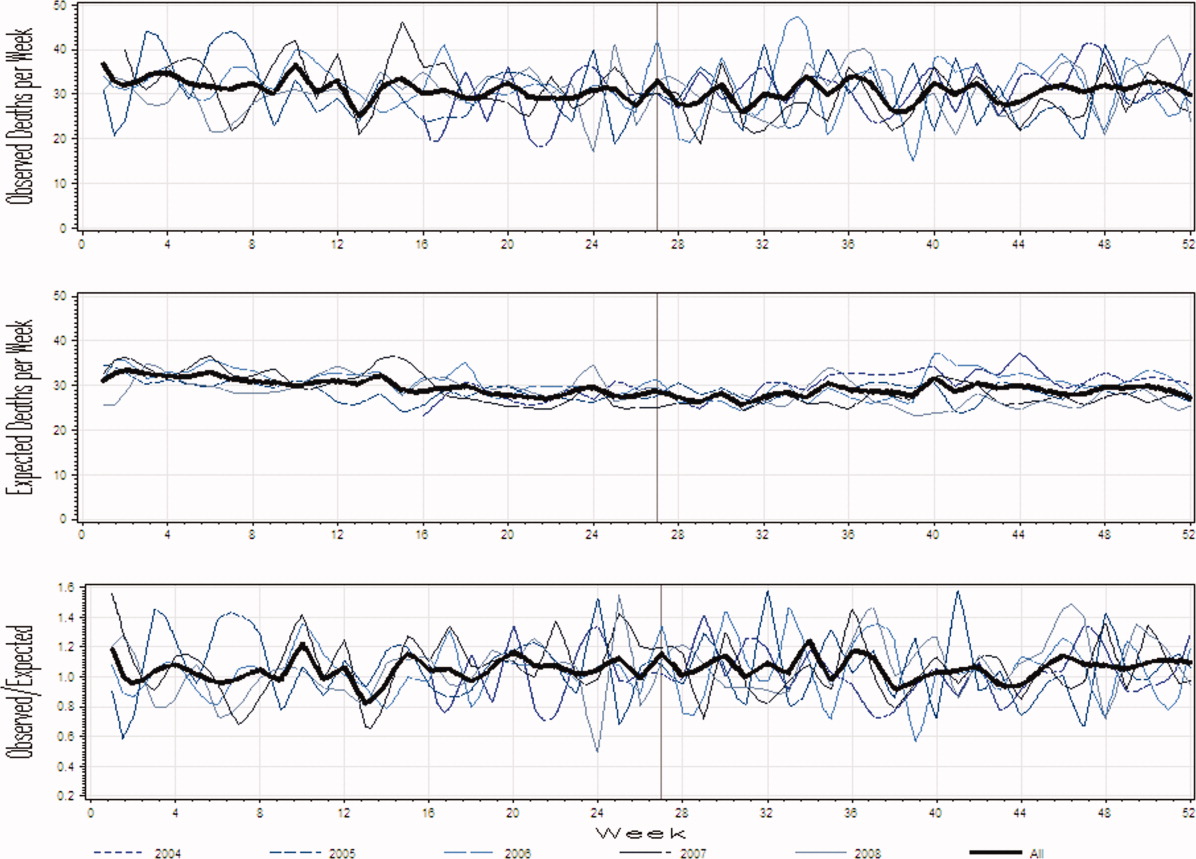

Figure 1A presents the observed weekly number of deaths during the study period. There was an average of 31 deaths per week (range 15–51). Some large fluctuations in the weekly number of deaths were seen; in 2007, for example, the number of observed deaths rose from 21 in week 13 to 46 in week 15. However, there were no obvious seasonal trends in the observed weekly number of deaths (Figure 1A, heavy line), nor were trends between years obvious.

Figure 1B presents the expected weekly number of deaths during the study period. The expected weekly number of deaths averaged 29.6 (range 22.2–38.7). The expected weekly number of deaths was notably less variable than the observed number of deaths. However, important variations in the expected number of deaths were seen; for example, in 2005, the expected number of deaths increased from 24.1 in week 41 to 29.6 in week 44. Again, we saw no obvious seasonal trends in the expected weekly number of deaths (Figure 1B, heavy line).

Figure 1C illustrates the ratio of observed to expected weekly number of deaths. The average observed to expected ratio slightly exceeded unity (1.05) and ranged from 0.488 (week 24, in 2008) to 1.821 (week 51, in 2008). We saw no obvious seasonal trends in the ratio of the observed to expected number of weekly deaths, nor was there any obvious trend in this ratio over the study period.

Association Between House‐Staff Experience and Death in Hospital

We found no evidence of autocorrelation in the ratio of observed to expected weekly number of deaths. The ratio of observed to expected number of hospital deaths was not significantly associated with house‐staff physician experience (Table 2). This conclusion held regardless of which house‐staff physician experience pattern was used in the linear model (Table 2). In addition, we found no significant association between physician experience and patient mortality when analyses were stratified by admission service or admission status (Table 2).

| Patient Population / House‐Staff Experience Pattern (95% CI) | Linear | Square | Square Root | Cubic | Natural Logarithm |
|---|---|---|---|---|---|
| All | −0.03 (−0.11, 0.06) | −0.02 (−0.10, 0.07) | −0.04 (−0.15, 0.07) | −0.01 (−0.10, 0.08) | −0.05 (−0.16, 0.07) |
| Admitting service | | | | | |
| Medicine | 0.0004 (−0.09, 0.10) | 0.01 (−0.08, 0.10) | −0.01 (−0.13, 0.11) | 0.02 (−0.07, 0.11) | −0.03 (−0.15, 0.09) |
| Surgery | −0.10 (−0.30, 0.10) | −0.11 (−0.30, 0.08) | −0.12 (−0.37, 0.14) | −0.11 (−0.31, 0.08) | −0.09 (−0.35, 0.17) |
| Admission status | | | | | |
| Elective | −0.09 (−0.53, 0.35) | −0.10 (−0.51, 0.32) | −0.11 (−0.66, 0.44) | −0.10 (−0.53, 0.33) | −0.11 (−0.68, 0.45) |
| Emergent | −0.02 (−0.11, 0.07) | −0.01 (−0.09, 0.08) | −0.03 (−0.14, 0.08) | 0.003 (−0.09, 0.09) | −0.04 (−0.16, 0.08) |

DISCUSSION

It is natural to suspect that physician experience influences patient outcomes. The commonly discussed July Phenomenon explores changes in teaching‐hospital patient outcomes by time of the academic year, which serves as an ecological surrogate for the latent variable of overall house‐staff experience. Our study used a detailed outcome (the ratio of observed to expected weekly hospital deaths) that adjusted for patient severity of illness. We also modeled collective physician experience using a broad range of patterns. We found no significant variation in mortality during the academic year; therefore, the risk of death in hospital does not vary with house‐staff experience at our hospital. There is no evidence of a July Phenomenon for mortality at our center.

We were not surprised that the arrival of inexperienced house‐staff did not significantly change patient mortality, for several reasons. First‐year residents are but one group of treating physicians in a teaching hospital. They are surrounded by many other, more experienced physicians who also contribute to patient care and outcomes. Given these other physicians, the influence of the relatively small number of first‐year residents on patient outcomes is minimized. In addition, the role that these more experienced physicians play in patient care varies with the experience and ability of the residents. The influence of new and inexperienced house‐staff in July will therefore be blunted by the larger role played by staff physicians, fellows, and more experienced house‐staff at that time.

Our study was a methodologically rigorous examination of the July Phenomenon. We used a reliable outcome statistic (the ratio of observed to expected weekly hospital deaths) that was created with a validated, discriminative, and well‐calibrated model that predicts the risk of death in hospital (Wong et al., Derivation and validation of a model to predict the daily risk of death in hospital, 2010, unpublished work). This statistic is easy to understand and controls for patient severity of illness. In addition, our study included a very broad and inclusive group of patients over almost five years at two hospitals.

Twenty‐three other studies have quantitatively sought a July Phenomenon for patient mortality (see Supporting Appendix A in the online version of this article). These studies used a broad assortment of research designs, patient populations, and analytical methods. Nineteen of them (83%) found no evidence of a July Phenomenon for teaching‐hospital mortality. In contrast, two found notable adjusted odds ratios for death in hospital (1.41 and 1.34) in patients undergoing general surgery13 and complex cardiovascular surgery,19 respectively. Blumberg22 also found an increased risk of death in surgical patients in July, but used indirect standardized mortality ratios as the outcome statistic and based the analysis on only 139 cases at Maryland teaching hospitals in 1984. Only Jen et al.16 showed an increased risk of hospital death with new house‐staff in a broad patient population. However, that study was restricted to two arbitrarily chosen days (one before and one after the house‐staff changeover), and the increased risk it found (adjusted OR 1.05, 95% CI 1.00–1.15) was of borderline statistical significance, which could have been driven by the study's large sample size (n = 299,741).

Therefore, the vast majority of data, including those presented in our analyses, show that the risk of teaching‐hospital death does not significantly increase with the arrival of new house‐staff. This raises the question of why the July Phenomenon is commonly presented in the popular media as a proven fact.27–33 We believe this is likely because the concept of the July Phenomenon is easy to understand and has a rather morbid attraction for people both inside and outside the medical profession. Given the large amount of data refuting the existence of a July Phenomenon for patient mortality (see Supporting Appendix A in the online version of this article), we believe that this term should be used only as an example of an interesting idea that is refuted by a proper analysis of the data.

Several limitations of our study are notable. First, our analysis is limited to a single center, albeit with two hospitals. However, ours is one of the largest teaching centers in Canada, with many new residents each year. Second, we examined only the association of physician experience with hospital mortality. While physician experience may significantly influence other patient outcomes, mortality is an important and reliably tallied statistic that is used as the primary outcome in most July Phenomenon studies. Third, we excluded approximately a quarter of all hospitalizations from the study. These exclusions were necessary because the Escobar model does not apply to these people and therefore cannot be used to predict their risk of death in hospital. However, the vast majority of excluded patients (those younger than 15 years old, and women admitted for routine childbirth) have a very low risk of death: the former because they are almost exclusively newborns, and the latter because the risk of maternal death during childbirth is very low. Since these people contribute very little to either the expected or the observed number of deaths, their exclusion does little to threaten the study's validity. The remaining excluded patients, who were transferred to or from other hospitals (n = 12,931), make up a small proportion of the total sampling frame (5% of admissions). Fourth, our study did not identify any significant association between house‐staff experience and patient mortality (Table 2). However, the confidence intervals around our estimates are wide enough, especially in some subgroups such as patients admitted electively, that important changes in patient mortality with house‐staff experience cannot be excluded. For example, although our study found that a decrease of more than 30% in the ratio of observed to expected deaths is very unlikely, a decrease of up to 30% remains possible (the lower limit of the confidence interval in Table 2). By the same logic, the ratio could also increase by up to 10% (Table 2). Finally, we did not directly measure individual physician experience. New residents can vary extensively in their individual experience and ability, and incorporating individual measures of experience and ability would let us measure the association of new residents with patient outcomes more reliably. Without such measures, we had to rely on an ecological measure of physician experience, namely calendar date. This method is nonetheless standard, since all studies quantify physician experience ecologically by date (see Supporting Appendix A in the online version of this article).

In summary, our data, like those of most studies on this topic, show that the risk of death in teaching hospitals does not change with the arrival of new house‐staff.

- The effects of scheduled intern rotation on the cost and quality of teaching hospital care. Eval Health Prof. 1994;17:259–272.

- Specialty differences in the “July Phenomenon” for Twin Cities teaching hospitals. Med Care. 1993;31:73–83.

- The relationship of house staff experience to the cost and quality of inpatient care. JAMA. 1990;263:953–957.

- Indirect costs for medical education. Is there a July phenomenon? Arch Intern Med. 1989;149:765–768.

- The impact of accreditation council for graduate medical education duty hours, the July phenomenon, and hospital teaching status on stroke outcomes. J Stroke Cerebrovasc Dis. 2009;18:232–238.

- The killing season—Fact or fiction. BMJ. 1994;309:1690.

- The July effect: Impact of the beginning of the academic cycle on cardiac surgical outcomes in a cohort of 70,616 patients. Ann Thorac Surg. 2009;88:70–75.

- Is there a July phenomenon? The effect of July admission on intensive care mortality and length of stay in teaching hospitals. J Gen Intern Med. 2003;18:639–645.

- Neonatal mortality among low birth weight infants during the initial months of the academic year. J Perinatol. 2008;28:691–695.

- The “July Phenomenon” and the care of the severely injured patient: Fact or fiction? Surgery. 2001;130:346–353.

- The July effect and cardiac surgery: The effect of the beginning of the academic cycle on outcomes. Am J Surg. 2008;196:720–725.

- Mortality in Medicare patients undergoing surgery in July in teaching hospitals. Ann Surg. 2009;249:871–876.

- Seasonal variation in surgical outcomes as measured by the American College of Surgeons–National Surgical Quality Improvement Program (ACS‐NSQIP). Ann Surg. 2007;246:456–465.

- Mortality rate and length of stay of patients admitted to the intensive care unit in July. Crit Care Med. 2004;32:1161–1165.

- July—As good a time as any to be injured. J Trauma‐Injury Infect Crit Care. 2009;67:1087–1090.

- Early in‐hospital mortality following trainee doctors' first day at work. PLoS ONE. 2009;4.

- Effect of critical care medicine fellows on patient outcome in the intensive care unit. Acad Med. 2006;81:S1–S4.

- The “July Phenomenon”: Is trauma the exception? J Am Coll Surg. 2009;209:378–384.

- Impact of cardiothoracic resident turnover on mortality after cardiac surgery: A dynamic human factor. Ann Thorac Surg. 2008;86:123–131.

- Is there a “July Phenomenon” in pediatric neurosurgery at teaching hospitals? J Neurosurg Pediatr. 2006;105:169–176.

- Mortality and morbidity by month of birth of neonates admitted to an academic neonatal intensive care unit. Pediatrics. 2008;122:E1048–E1052.

- Measuring surgical quality in Maryland: A model. Health Aff. 1988;7:62–78.

- Complications and death at the start of the new academic year: Is there a July phenomenon? J Trauma‐Injury Infect Crit Care. 2010;68(1):19–22.

- Risk‐adjusting hospital inpatient mortality using automated inpatient, outpatient, and laboratory databases. Med Care. 2008;46:232–239.

- The Kaiser Permanente inpatient risk adjustment methodology was valid in an external patient population. J Clin Epidemiol. 2010;63:798–803.

- Introduction: The logic of latent variables. In: Latent Class Analysis. Newbury Park, CA: Sage; 1987:5–10.

- July Effect. Wikipedia. Available at: http://en.wikipedia.org/wiki/July_effect. Accessed April 1, 2011.

- Study proves “killing season” occurs as new doctors start work. September 23, 2010. Herald Scotland. Available at: http://www.heraldscotland.com/news/health/study‐proves‐killing‐season‐occurs‐as‐new‐doctors‐start‐work‐1.921632. Accessed April 1, 2011.

- The “July effect”: Worst month for fatal hospital errors, study finds. June 3, 2010. ABC News. Available at: http://abcnews.go.com/WN/WellnessNews/july‐month‐fatal‐hospital‐errors‐study‐finds/story?id=10819652. Accessed April 1, 2011.

- “Deaths rise” with junior doctors. September 22, 2010. BBC News. Available at: http://news.bbc.co.uk/2/hi/health/8269729.stm. Accessed April 1, 2011.

- July: When not to go to the hospital. June 2, 2010. Science News. Available at: http://www.sciencenews.org/view/generic/id/59865/title/July_When_not_to_go_to_the_hospital. Accessed April 1, 2011.

- July: A deadly time for hospitals. July 5, 2010. National Public Radio. Available at: http://www.npr.org/templates/story/story.php?storyId=128321489. Accessed April 1, 2011.

- Medical errors and patient safety: Beware the “July effect.” June 4, 2010. Better Health. Available at: http://getbetterhealth.com/medical‐errors‐and‐patient‐safety‐beware‐of‐the‐july‐effect/2010.06.04. Accessed April 1, 2011.

The July Phenomenon is a commonly used term referring to poor hospital‐patient outcomes when inexperienced house‐staff start their postgraduate training in July. In addition to being an interesting observation, the validity of July Phenomenon has policy implications for teaching hospitals and residency training programs.

Twenty‐three published studies have tried to determine whether the arrival of new house‐staff is associated with increased patient mortality (see Supporting Appendix A in the online version of this article).123 While those studies make an important attempt to determine the validity of the July Phenomenon, they have some notable limitations. All but four of these studies2, 4, 6, 16 limited their analysis to patients with a specific diagnosis, within a particular hospital unit, or treated by a particular specialty. Many studies limited data to those from a single hospital.1, 3, 4, 10, 11, 14, 15, 20, 22 Nine studies did not include data from the entire year in their analyses,4, 6, 7, 10, 13, 1517, 23 and one did not include data from multiple years.22 One study conducted its analysis on death counts alone and did not account for the number of hospitalized people at risk.6 Finally, the analysis of several studies controlled for no severity of illness markers,6, 10, 21 whereas that from several other studies contained only crude measures of comorbidity and severity of illness.14

In this study, we analyzed data at our teaching hospital to determine if evidence exists for the July Phenomenon at our center. We used a highly discriminative and well‐calibrated multivariate model to calculate the risk of dying in hospital, and quantify the ratio of observed to expected number of hospital deaths. Using this as our outcome statistic, we determined whether or not our hospital experiences a July Phenomenon.

METHODS

This study was approved by The Ottawa Hospital (TOH) Research Ethics Board.

Study Setting

TOH is a tertiary‐care teaching hospital with two inpatient campuses. The hospital operates within a publicly funded health care system, serves a population of approximately 1.5 million people in Ottawa and Eastern Ontario, treats all major trauma patients for the region, and provides most of the oncological care in the region.

TOH is the primary medical teaching hospital at the University of Ottawa. In 2010, there were 197 residents starting their first year of postgraduate training in one of 29 programs.

Inclusion Criteria

The study period extended from April 15, 2004 to December 31, 2008. We used this start time because our hospital switched to new coding systems for procedures and diagnoses in April 2002. Since these new coding systems contributed to our outcome statistic, we used a very long period (ie, two years) for coding patterns to stabilize to ensure that any changes seen were not a function of coding patterns. We ended our study in December 2008 because this was the last date of complete data at the time we started the analysis.

We included all medical, surgical, and obstetrical patients admitted to TOH during this time except those who were: younger than 15 years old; transferred to or from another acute care hospital; or obstetrical patients hospitalized for routine childbirth. These patients were excluded because they were not part of the multivariate model that we used to calculate risk of death in hospital (discussed below).24 These exclusions accounted for 25.4% of all admissions during the study period (36,820less than 15 years old; 12,931transferred to or from the hospital; and 44,220uncomplicated admission for childbirth).

All data used in this study came from The Ottawa Hospital Data Warehouse (TOHDW). This is a repository of clinical, laboratory, and administrative data originating from the hospital's major operational information systems. TOHDW contains information on patient demographics and diagnoses, as well as procedures and patient transfers between different units or hospital services during the admission.

Primary OutcomeRatio of Observed to Expected Number of Deaths per Week

For each study day, we measured the number of hospital deaths from the patient registration table in TOHDW. This statistic was collated for each week to ensure numeric stability, especially in our subgroup analyses.

We calculated the weekly expected number of hospital deaths using an extension of the Escobar model.24 The Escobar is a logistic regression model that estimated the probability of death in hospital that was derived and internally validated on almost 260,000 hospitalizations at 17 hospitals in the Kaiser Permanente Health Plan. It included six covariates that were measurable at admission including: patient age; patient sex; admission urgency (ie, elective or emergent) and service (ie, medical or surgical); admission diagnosis; severity of acute illness as measured by the Laboratory‐based Acute Physiology Score (LAPS); and chronic comorbidities as measured by the COmorbidity Point Score (COPS). Hospitalizations were grouped by admission diagnosis. The final model had excellent discrimination (c‐statistic 0.88) and calibration (P value of Hosmer Lemeshow statistic for entire cohort 0.66). This model was externally validated in our center with a c‐statistic of 0.901.25

We extended the Escobar model in several ways (Wong et al., Derivation and validation of a model to predict the daily risk of death in hospital, 2010, unpublished work). First, we modified it into a survival (rather than a logistic) model so it could estimate a daily probability of death in hospital. Second, we included the same covariates as Escobar except that we expressed LAPS as a time‐dependent covariate (meaning that the model accounted for changes in its value during the hospitalization). Finally, we included other time‐dependent covariates including: admission to intensive care unit; undergoing significant procedures; and awaiting long‐term care. This model had excellent discrimination (concordance probability of 0.895, 95% confidence interval [CI] 0.8890.902) and calibration.

We used this survival model to estimate the daily risk of death for all patients in the hospital each day. Summing these risks over hospital patients on each day returned the daily number of expected hospital deaths. This was collated per week.

The outcome statistic for this study was the ratio of the observed to expected weekly number of hospital deaths. Ratios exceeding 1 indicate that more deaths were observed than were expected, given the distribution of important covariates among patients in hospital that week. This outcome statistic has several advantages. First, it accounts for the number of patients in hospital each day, which matters because the number of hospital deaths increases as the number of people in hospital increases. Second, it accounts for each patient's severity of illness on each hospital day. Because the expected number of deaths per day was calculated with a multivariate survival model that included time‐dependent covariates, each individual's predicted hazard of death (summed over the entire hospital to give the total expected number of deaths each day) reflected the latest values of these covariates. Previous analyses accounted only for the risk of death at admission.
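The ratio itself is a per‐week division. The Python sketch below, using made‐up weekly counts, also illustrates the first advantage described above: a week with twice the census, and in this example twice the observed and expected deaths, yields the same ratio.

```python
def observed_to_expected(observed, expected):
    """Ratio of observed to expected deaths for each week.

    observed: {week: deaths counted}; expected: {week: summed predicted risk}.
    A ratio above 1 means more deaths than the covariates predicted.
    """
    ratios = {}
    for week, exp_deaths in expected.items():
        if exp_deaths <= 0:
            raise ValueError(f"expected deaths must be positive (week {week})")
        ratios[week] = observed.get(week, 0) / exp_deaths
    return ratios


# Hypothetical weeks: week 2 has twice the census (and here twice the observed
# and expected deaths) of week 1, yet the same ratio.
observed = {1: 15, 2: 30, 3: 24}
expected = {1: 14.0, 2: 28.0, 3: 30.0}
print(observed_to_expected(observed, expected))
```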

Expressing Physician Experience

The latent measure26 in all July Phenomenon studies is collective house‐staff physician experience. This is quantified by a surrogate date variable in which July 1 (the date that new house‐staff start their training in North America) represents minimal experience and June 30 represents maximal experience. We expressed collective physician experience on a scale from 0 (minimum experience) on July 1 to 1 (maximum experience) on June 30. A similar approach has been used previously13 and has advantages over the other methods used to capture collective house‐staff experience. In the stratified, incomplete approach,4–7, 9–11, 13, 15–17 periods with inexperienced house‐staff (eg, July and August) are grouped together and compared with periods with experienced house‐staff (eg, May and June), while all other data are ignored. The cut‐points for this stratification are arbitrary, and the method discards large amounts of data. In the stratified, complete approach, periods with inexperienced house‐staff (eg, July and August) are grouped together and compared with all other times of the year.8, 12, 14, 18–20, 22 This approach is potentially less biased because no data are discarded. However, the cut‐point at which house‐staff are deemed to change from inexperienced to experienced is still arbitrary, and the model assumes that this transition is sudden. This is suboptimal because experience is acquired gradually and continuously.
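The 0‐to‐1 experience scale can be computed from a calendar date. The Python sketch below assumes that intermediate dates are placed linearly by day count between July 1 and the following June 30; that convention is an illustration, not a detail stated in the text.

```python
from datetime import date


def house_staff_experience(day):
    """Map a calendar date to collective house-staff experience on [0, 1].

    July 1 (start of the academic year) -> 0 and the following June 30 -> 1.
    Intermediate dates are scaled linearly by day count, an assumed
    convention for placing each date on the 0-1 scale.
    """
    start_year = day.year if day.month >= 7 else day.year - 1
    start = date(start_year, 7, 1)
    end = date(start_year + 1, 6, 30)
    return (day - start).days / (end - start).days


for d in (date(2006, 7, 1), date(2007, 1, 1), date(2007, 6, 30)):
    print(d, round(house_staff_experience(d), 3))
```

Dividing by the length of each academic year, rather than a fixed 364, keeps June 30 at exactly 1 in years that contain February 29.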

The pattern by which collective physician experience changes between July 1 and June 30 is unknown. We therefore expressed this evolution using five different patterns (linear, square, square root, cubic, and natural logarithm; see Supporting Appendix B in the online version of this article).
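Supporting Appendix B is not reproduced here, so the exact formulas are unknown. The Python sketch below uses one hypothetical set of transformations of the 0‐to‐1 experience scale carrying the five names used in Table 2. Each maps 0 to 0 and 1 to 1; the logarithmic form ln(1 + (e − 1)x) is an assumed rescaling chosen to satisfy that property.

```python
import math

# Hypothetical versions of the five experience patterns named in Table 2.
# Each maps x = 0 (July 1) to 0 and x = 1 (June 30) to 1; the study's exact
# definitions are in Supporting Appendix B and may differ.
PATTERNS = {
    "linear": lambda x: x,
    "square": lambda x: x ** 2,            # experience accrues slowly at first
    "square_root": math.sqrt,              # experience accrues quickly at first
    "cubic": lambda x: x ** 3,
    "natural_log": lambda x: math.log1p((math.e - 1.0) * x),  # ln(1 + (e-1)x)
}

for name, f in PATTERNS.items():
    print(f"{name:12s}", [round(f(x), 3) for x in (0.0, 0.25, 0.5, 0.75, 1.0)])
```

Under this sketch, each transformed value would replace the raw date‐based value as the predictor in the regression.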

Analysis

We first tested our outcome variable for autocorrelation using Ljung‐Box statistics at lags 6 and 12 in PROC ARIMA (SAS 9.2, SAS Institute, Cary, NC). If there was no significant autocorrelation, we used linear regression to relate the ratio of observed to expected weekly deaths (the outcome variable) to collective first‐year physician experience (the predictor variable); if significant autocorrelation had been present, we would have used time‐series methods instead.
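The Ljung‐Box check can be written out directly. The Python sketch below computes Q = n(n + 2) Σ r_k² / (n − k) over lags 1 to h and its chi‐square p‐value on h degrees of freedom (no fitted ARMA terms), the kind of white‐noise check that PROC ARIMA's IDENTIFY statement reports. It is an illustration, not the SAS implementation: to stay within the standard library it uses the closed‐form chi‐square tail that holds only for even degrees of freedom, which covers lags 6 and 12. The input series is made up.

```python
import math


def ljung_box(series, lag):
    """Ljung-Box Q statistic and p-value for autocorrelation at lags 1..lag.

    Q = n (n + 2) * sum_k r_k**2 / (n - k), referred to a chi-square
    distribution with `lag` degrees of freedom. The closed-form tail used
    below is exact only for an even number of degrees of freedom.
    """
    n = len(series)
    if lag <= 0 or lag >= n or lag % 2:
        raise ValueError("lag must be a positive even integer below len(series)")
    mean = sum(series) / n
    dev = [x - mean for x in series]
    denom = sum(d * d for d in dev)
    if denom == 0:
        raise ValueError("series is constant")
    q = 0.0
    for k in range(1, lag + 1):
        r_k = sum(dev[t] * dev[t - k] for t in range(k, n)) / denom  # lag-k autocorrelation
        q += r_k * r_k / (n - k)
    q *= n * (n + 2)
    # Chi-square survival function, even df = 2m: exp(-q/2) * sum_{i<m} (q/2)**i / i!
    half, term, tail = q / 2.0, 1.0, 1.0
    for i in range(1, lag // 2):
        term *= half / i
        tail += term
    return q, math.exp(-half) * tail


# Hypothetical weekly O/E ratios that simply alternate: strongly autocorrelated.
alternating = [1.2, 0.9] * 30
for h in (6, 12):
    q, p = ljung_box(alternating, h)
    print(h, round(q, 1), p < 0.05)
```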

In our baseline analysis, we included all hospitalizations together. In stratified analyses, we categorized hospitalizations by admission status (emergent vs elective) and admission service (medicine vs surgery).
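Without autocorrelation, the analysis reduces to an ordinary least‐squares fit of the weekly ratio R_w on a transformed experience value f(E_w), R_w = α + β f(E_w) + ε_w, repeated within each stratum; β is the modeled change in the ratio as f runs from 0 to 1. The Python sketch below fits β with an approximate 95% CI using a normal critical value rather than Student's t (a close approximation with a couple of hundred weeks). How each week was assigned its experience value is not stated here, so the stratum data are hypothetical.

```python
import math
from statistics import NormalDist


def ols_slope_ci(x, y, level=0.95):
    """Least-squares slope of y on x with an approximate confidence interval.

    Uses a normal critical value instead of Student's t, which is a close
    approximation only when the number of weekly observations is large.
    """
    n = len(x)
    if n < 3 or n != len(y):
        raise ValueError("need at least three paired observations")
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    rss = sum((b - intercept - slope * a) ** 2 for a, b in zip(x, y))
    se = math.sqrt(rss / (n - 2) / sxx)  # standard error of the slope
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return slope, (slope - z * se, slope + z * se)


# Hypothetical strata: weekly experience values and O/E ratios.
strata = {
    "emergent": ([0.0, 0.25, 0.5, 0.75, 1.0], [1.10, 0.98, 1.07, 1.01, 1.04]),
    "elective": ([0.0, 0.25, 0.5, 0.75, 1.0], [0.90, 1.30, 0.85, 1.20, 1.00]),
}
for name, (x, y) in strata.items():
    b, (lo, hi) = ols_slope_ci(x, y)
    print(f"{name}: slope {b:+.3f} (95% CI {lo:+.3f}, {hi:+.3f})")
```

Smaller or noisier strata give wider intervals, which is the pattern seen for elective admissions in Table 2.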

RESULTS

Between April 15, 2004 and December 31, 2008, The Ottawa Hospital had a total of 152,017 inpatient admissions and 107,731 same‐day surgeries that met our study's inclusion criteria (annual rates of 32,222 and 22,835, respectively; average daily rates of 88 and 63, respectively). These 259,748 encounters involved 164,318 people. Table 1 provides an overall description of the study population.
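The reported totals and rates can be checked against the counts. In the Python sketch below, the annual and daily rates are reproduced by dividing by the inclusive number of study days and, for annual rates, by that span expressed in 365‐day years; this convention is inferred from the numbers, not stated in the text. The weekly death average and in‐hospital death percentage use the 7,679 deaths from Table 1.

```python
from datetime import date

START, END = date(2004, 4, 15), date(2008, 12, 31)
days = (END - START).days + 1   # inclusive count of study days
years = days / 365              # study span in 365-day years (assumed convention)

inpatient, same_day, deaths = 152_017, 107_731, 7_679
print(days, inpatient + same_day)                          # 1722 259748
print(round(inpatient / years), round(same_day / years))   # 32222 22835
print(round(inpatient / days), round(same_day / days))     # 88 63
print(round(deaths / (days / 7)), round(100 * deaths / (inpatient + same_day), 1))  # 31 3.0
```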

| Characteristic | |
|---|---|
| Patients/hospitalizations, n | 164,318/259,748 |
| Deaths in‐hospital, n (%) | 7,679 (3.0) |
| Length of admission in days, median (IQR) | 2 (1–6) |
| Male, n (%) | 124,848 (48.1) |
| Age at admission, median (IQR) | 60 (46–74) |
| Admission type, n (%) | |
| Elective surgical | 136,406 (52.5) |
| Elective nonsurgical | 20,104 (7.7) |
| Emergent surgical | 32,046 (12.3) |
| Emergent nonsurgical | 71,192 (27.4) |
| Elixhauser score, median (IQR) | 0 (0–4) |
| LAPS at admission, median (IQR) | 0 (0–15) |
| At least one admission to intensive care unit, n (%) | 7,779 (3.0) |
| At least one alternative level of care episode, n (%) | 6,971 (2.7) |
| At least one PIMR procedure, n (%) | 47,288 (18.2) |
| First PIMR score,* median (IQR) | 2 (52) |

Weekly Deaths: Observed, Expected, and Ratio

Figure 1A presents the observed weekly number of deaths during the study period. There was an average of 31 deaths per week (range 15–51). The weekly number of deaths fluctuated considerably; in 2007, for example, it rose from 21 in week 13 to 46 in week 15. However, there was no obvious seasonal trend in the observed weekly number of deaths (Figure 1A, heavy line), nor any obvious trend between years.

Figure 1B presents the expected weekly number of deaths during the study period. The expected weekly number of deaths averaged 29.6 (range 22.2–38.7) and was notably less variable than the observed number. Nonetheless, it did vary appreciably; for example, in 2005 the expected number of deaths increased from 24.1 in week 41 to 29.6 in week 44. Again, we saw no obvious seasonal trend in the expected weekly number of deaths (Figure 1B, heavy line).

Figure 1C illustrates the ratio of observed to expected weekly deaths. The average ratio slightly exceeded unity (1.05) and ranged from 0.488 (week 24, 2008) to 1.821 (week 51, 2008). We saw no obvious seasonal trend in this ratio, nor any obvious trend over the study period.

Association Between House‐Staff Experience and Death in Hospital

We found no evidence of autocorrelation in the ratio of observed to expected weekly number of deaths. This ratio was not significantly associated with house‐staff physician experience (Table 2), regardless of which experience pattern was used in the linear model. Nor did we find a significant association between physician experience and patient mortality when analyses were stratified by admission service or admission status (Table 2).

House‐Staff Experience Pattern (95% CI)

| Patient Population | Linear | Square | Square Root | Cubic | Natural Logarithm |
|---|---|---|---|---|---|
| All | −0.03 (−0.11, 0.06) | −0.02 (−0.10, 0.07) | −0.04 (−0.15, 0.07) | −0.01 (−0.10, 0.08) | −0.05 (−0.16, 0.07) |
| Admitting service | | | | | |
| Medicine | 0.0004 (−0.09, 0.10) | 0.01 (−0.08, 0.10) | −0.01 (−0.13, 0.11) | 0.02 (−0.07, 0.11) | −0.03 (−0.15, 0.09) |
| Surgery | −0.10 (−0.30, 0.10) | −0.11 (−0.30, 0.08) | −0.12 (−0.37, 0.14) | −0.11 (−0.31, 0.08) | −0.09 (−0.35, 0.17) |
| Admission status | | | | | |
| Elective | −0.09 (−0.53, 0.35) | −0.10 (−0.51, 0.32) | −0.11 (−0.66, 0.44) | −0.10 (−0.53, 0.33) | −0.11 (−0.68, 0.45) |
| Emergent | −0.02 (−0.11, 0.07) | −0.01 (−0.09, 0.08) | −0.03 (−0.14, 0.08) | 0.003 (−0.09, 0.09) | −0.04 (−0.16, 0.08) |

DISCUSSION

It is natural to suspect that physician experience influences patient outcomes. The commonly discussed July Phenomenon concerns changes in teaching‐hospital patient outcomes by time of the academic year, which serves as an ecological surrogate for the latent variable of overall house‐staff experience. Our study used a detailed outcome (the ratio of observed to expected weekly hospital deaths) that adjusted for patient severity of illness, and we modeled collective physician experience using a broad range of patterns. We found no significant variation in mortality during the academic year; that is, the risk of death in hospital was not significantly associated with house‐staff experience at our hospital. We therefore found no evidence of a July Phenomenon for mortality at our center.

For several reasons, we were not surprised that the arrival of inexperienced house‐staff did not significantly change patient mortality. First‐year residents are only one group of treating physicians in a teaching hospital. They are surrounded by many other, more experienced physicians who also contribute to patient care and outcomes, so the influence of the relatively small number of first‐year residents on patient outcomes is likely to be limited. In addition, the role that these more experienced physicians play in patient care will vary with the experience and ability of the residents: the influence of new, inexperienced house‐staff in July will be blunted by the larger role played at that time by staff physicians, fellows, and more senior house‐staff.

Our study was a methodologically rigorous examination of the July Phenomenon. We used a reliable outcome statistic, the ratio of observed to expected weekly hospital deaths, which was created with a validated, discriminative, and well‐calibrated model that predicts the risk of death in hospital (Wong et al., Derivation and validation of a model to predict the daily risk of death in hospital, 2010, unpublished work). This statistic is intuitive and controls for patient severity of illness. In addition, our study included a very broad and inclusive group of patients over nearly five years at two hospitals.

Twenty‐three other studies have quantitatively sought a July Phenomenon for patient mortality (see Supporting Appendix A in the online version of this article). These studies used a broad assortment of designs, patient populations, and analytical methods. Nineteen of them (83%) found no evidence of a July Phenomenon for teaching‐hospital mortality. In contrast, two found notable adjusted odds ratios for death in hospital (1.41 and 1.34) in patients undergoing general surgery13 or complex cardiovascular surgery,19 respectively. Blumberg22 also found an increased risk of death in surgical patients in July, but that study used indirect standardized mortality ratios as the outcome statistic and was based on only 139 cases at Maryland teaching hospitals in 1984. Only Jen et al.16 showed an increased risk of hospital death with new house‐staff in a broad patient population. However, that study was restricted to two arbitrarily chosen days (one before and one after the house‐staff changeover), and the increase it found (adjusted OR 1.05, 95% CI 1.00–1.15) was of borderline statistical significance, which may have been driven by the study's large sample size (n = 299,741).

Therefore, the vast majority of data, including those presented here, show that the risk of teaching‐hospital death does not significantly increase with the arrival of new house‐staff. This raises the question of why the July Phenomenon is commonly presented in the popular media as a proven fact.27–33 We believe this is likely because the concept is easy to understand and holds a rather morbid attraction for people both inside and outside the medical profession. Given the large amount of data refuting the existence of a July Phenomenon for patient mortality (see Supporting Appendix A in the online version of this article), we believe that the term should be used only as an example of an interesting idea that is refuted by proper analysis of the data.

Several limitations of our study are notable. First, our analysis is limited to a single center, albeit with two hospitals; however, ours is one of the largest teaching centers in Canada, with many new residents each year. Second, we examined only the association of physician experience with hospital mortality. Physician experience may significantly influence other patient outcomes, but mortality is an important and reliably tallied statistic and is the primary outcome in most July Phenomenon studies. Third, we excluded approximately a quarter of all hospitalizations from the study. These exclusions were necessary because the Escobar model does not apply to these patients and therefore cannot be used to predict their risk of death in hospital. However, the vast majority of excluded patients (those younger than 15 years and women admitted for routine childbirth) have a very low risk of death: the former because they are almost exclusively newborns, and the latter because the risk of maternal death during childbirth is very low. Since these patients contribute very little to either the expected or the observed number of deaths, their exclusion does little to threaten the study's validity. The remaining excluded patients, those transferred to or from other hospitals (n = 12,931), make up a small proportion of the total sampling frame (5% of admissions). Fourth, our study did not identify any significant association between house‐staff experience and patient mortality (Table 2). However, the confidence intervals around our estimates are wide enough, especially in some subgroups such as patients admitted electively, that important changes in patient mortality with house‐staff experience cannot be excluded.
For example, although our study suggests that a decrease of more than 30% in the ratio of observed to expected deaths is very unlikely, a decrease of up to 30% (the lower limit of the confidence interval in Table 2) remains possible. By the same logic, the ratio could also increase by up to 10% (Table 2). Finally, we did not directly measure individual physician experience. New residents can vary extensively in their individual experience and ability, and incorporating individual measures of experience and ability would allow a more reliable assessment of the association between new residents and patient outcomes. Without such measures, we had to rely on an ecological measure of physician experience, namely calendar date. This is nonetheless the standard approach, since all studies quantify physician experience ecologically by date (see Supporting Appendix A in the online version of this article).

In summary, our data, like those of most studies on this topic, show that the risk of death in teaching hospitals does not change with the arrival of new house‐staff.

We calculated the weekly expected number of hospital deaths using an extension of the Escobar model.24 The Escobar is a logistic regression model that estimated the probability of death in hospital that was derived and internally validated on almost 260,000 hospitalizations at 17 hospitals in the Kaiser Permanente Health Plan. It included six covariates that were measurable at admission including: patient age; patient sex; admission urgency (ie, elective or emergent) and service (ie, medical or surgical); admission diagnosis; severity of acute illness as measured by the Laboratory‐based Acute Physiology Score (LAPS); and chronic comorbidities as measured by the COmorbidity Point Score (COPS). Hospitalizations were grouped by admission diagnosis. The final model had excellent discrimination (c‐statistic 0.88) and calibration (P value of Hosmer Lemeshow statistic for entire cohort 0.66). This model was externally validated in our center with a c‐statistic of 0.901.25

We extended the Escobar model in several ways (Wong et al., Derivation and validation of a model to predict the daily risk of death in hospital, 2010, unpublished work). First, we modified it into a survival (rather than a logistic) model so it could estimate a daily probability of death in hospital. Second, we included the same covariates as Escobar except that we expressed LAPS as a time‐dependent covariate (meaning that the model accounted for changes in its value during the hospitalization). Finally, we included other time‐dependent covariates including: admission to intensive care unit; undergoing significant procedures; and awaiting long‐term care. This model had excellent discrimination (concordance probability of 0.895, 95% confidence interval [CI] 0.8890.902) and calibration.

We used this survival model to estimate the daily risk of death for all patients in the hospital each day. Summing these risks over hospital patients on each day returned the daily number of expected hospital deaths. This was collated per week.

The outcome statistic for this study was the ratio of the observed to expected weekly number of hospital deaths. Ratios exceeding 1 indicate that more deaths were observed than were expected (given the distribution of important covariates in those people during that week). This outcome statistic has several advantages. First, it accounts for the number of patients in the hospital each day. This is important because the number of hospital deaths will increase as the number of people in hospital increase. Second, it accounts for the severity of illness in each patient on each hospital day. This accounts for daily changes in risk of patient death, because calculation of the expected number of deaths per day was done using a multivariate survival model that included time‐dependent covariates. Therefore, each individual's predicted hazard of death (which was summed over the entire hospital to calculate the total expected number of deaths in hospital each day) took into account the latest values of these covariates. Previous analyses only accounted for risk of death at admission.

Expressing Physician Experience

The latent measure26 in all July Phenomenon studies is collective house‐staff physician experience. This is quantified by a surrogate date variable in which July 1the date that new house‐staff start their training in North Americarepresents minimal experience and June 30 represents maximal experience. We expressed collective physician experience on a scale from 0 (minimum experience) on July 1 to 1 (maximum experience) on June 30. A similar approach has been used previously13 and has advantages over the other methods used to capture collective house‐staff experience. In the stratified, incomplete approach,47, 911, 13, 1517 periods with inexperienced house‐staff (eg, July and August) are grouped together and compared to times with experienced house‐staff (eg, May and June), while ignoring all other data. The specification of cut‐points for this stratification is arbitrary and the method ignores large amounts of data. In the stratified, complete approach, periods with inexperienced house‐staff (eg, July and August) are grouped together and compared to all other times of the year.8, 12, 14, 1820, 22 This is potentially less biased because there are no lost data. However, the cut‐point for determining when house‐staff transition from inexperienced to experienced is arbitrary, and the model assumes that the transition is sudden. This is suboptimal because acquisition of experience is a gradual, constant process.

The pattern by which collective physician experience changes between July 1st and June 30th is unknown. We therefore expressed this evolution using five different patterns varying from a linear change to a natural logarithmic change (see Supporting Appendix B in the online version of this article).

Analysis

We first examined for autocorrelation in our outcome variable using Ljung‐Box statistics at lag 6 and 12 in PROC ARIMA (SAS 9.2, Cary, NC). If significant autocorrelation was absent in our data, linear regression modeling was used to associate the ratio of the observed to expected number of weekly deaths (the outcome variable) with the collective first year physician experience (the predictor variable). Time‐series methodology was to be used if significant autocorrelation was present.

In our baseline analysis, we included all hospitalizations together. In stratified analyses, we categorized hospitalizations by admission status (emergent vs elective) and admission service (medicine vs surgery).

RESULTS

Between April 15, 2004 and December 31, 2008, The Ottawa Hospital had a total of 152,017 inpatient admissions and 107,731 same day surgeries (an annual rate of 32,222 and 22,835, respectively; an average daily rate of 88 and 63, respectively) that met our study's inclusion criteria. These 259,748 encounters included 164,318 people. Table 1 provides an overall description of the study population.

| Characteristic | |

|---|---|

| |

| Patients/hospitalizations, n | 164,318/259,748 |

| Deaths in‐hospital, n (%) | 7,679 (3.0) |

| Length of admission in days, median (IQR) | 2 (16) |

| Male, n (%) | 124,848 (48.1) |

| Age at admission, median (IQR) | 60 (4674) |

| Admission type, n (%) | |

| Elective surgical | 136,406 (52.5) |

| Elective nonsurgical | 20,104 (7.7) |

| Emergent surgical | 32,046 (12.3) |

| Emergent nonsurgical | 71,192 (27.4) |

| Elixhauser score, median (IQR) | 0 (04) |

| LAPS at admission, median (IQR) | 0 (015) |

| At least one admission to intensive care unit, n (%) | 7,779 (3.0) |

| At least one alternative level of care episode, n (%) | 6,971 (2.7) |

| At least one PIMR procedure, n (%) | 47,288 (18.2) |

| First PIMR score,* median (IQR) | 2 (52) |

Weekly Deaths: Observed, Expected, and Ratio

Figure 1A presents the observed weekly number of deaths during the study period. There was an average of 31 deaths per week (range 1551). Some large fluctuations in the weekly number of deaths were seen; in 2007, for example, the number of observed deaths went from 21 in week 13 up to 46 in week 15. However, no obvious seasonal trends in the observed weekly number of deaths were seen (Figure 1A, heavy line) nor were trends between years obvious.

Figure 1B presents the expected weekly number of deaths during the study period. The expected weekly number of deaths averaged 29.6 (range 22.238.7). The expected weekly number of deaths was notably less variable than the observed number of deaths. However, important variations in the expected number of deaths were seen; for example, in 2005, the expected number of deaths increased from 24.1 in week 41 to 29.6 in week 44. Again, we saw no obvious seasonal trends in the expected weekly number of deaths (Figure 1B, heavy line).

Figure 1C illustrates the ratio of observed to the expected weekly number of deaths. The average observed to expected ratio slightly exceeded unity (1.05) and ranged from 0.488 (week 24, in 2008) to 1.821 (week 51, in 2008). We saw no obvious seasonal trends in the ratio of the observed to expected number of weekly deaths. In addition, obvious trends in this ratio were absent over the study period.

Association Between House‐Staff Experience and Death in Hospital

We found no evidence of autocorrelation in the ratio of observed to expected weekly number of deaths. The ratio of observed to expected number of hospital deaths was not significantly associated with house‐staff physician experience (Table 2). This conclusion did not change regardless of which house‐staff physician experience pattern was used in the linear model (Table 2). In addition, our analysis found no significant association between physician experience and patient mortality when analyses were stratified by admission service or admission status (Table 2).

| Patient Population | House‐Staff Experience Pattern (95% CI) | ||||

|---|---|---|---|---|---|

| Linear | Square | Square Root | Cubic | Natural Logarithm | |

| |||||

| All | 0.03 (0.11, 0.06) | 0.02 (0.10, 0.07) | 0.04 (0.15, 0.07) | 0.01 (0.10, 0.08) | 0.05 (0.16, 0.07) |

| Admitting service | |||||

| Medicine | 0.0004 (0.09, 0.10) | 0.01 (0.08, 0.10) | 0.01 (0.13, 0.11) | 0.02 (0.07, 0.11) | 0.03 (0.15, 0.09) |

| Surgery | 0.10 (0.30, 0.10) | 0.11 (0.30, 0.08) | 0.12 (0.37, 0.14) | 0.11 (0.31, 0.08) | 0.09 (0.35, 0.17) |

| Admission status | |||||

| Elective | 0.09 (0.53, 0.35) | 0.10 (0.51, 0.32) | 0.11 (0.66, 0.44) | 0.10 (0.53, 0.33) | 0.11 (0.68, 0.45) |

| Emergent | 0.02 (0.11, 0.07) | 0.01 (0.09, 0.08) | 0.03 (0.14, 0.08) | 0.003 (0.09, 0.09) | 0.04 (0.16, 0.08) |

DISCUSSION

It is natural to suspect that physician experience influences patient outcomes. The commonly discussed July Phenomenon explores changes in teaching‐hospital patient outcomes by time of the academic year. This serves as an ecological surrogate for the latent variable of overall house‐staff experience. Our study used a detailed outcomethe ratio of observed to the expected number of weekly hospital deathsthat adjusted for patient severity of illness. We also modeled collective physician experience using a broad range of patterns. We found no significant variation in mortality rates during the academic year; therefore, the risk of death in hospital does not vary by house‐staff experience at our hospital. This is no evidence of a July Phenomenon for mortality at our center.

We were not surprised that the arrival of inexperienced house‐staff did not significantly change patient mortality for several reasons. First year residents are but one group of treating physicians in a teaching hospital. They are surrounded by many other, more experienced physicians who also contribute to patient care and their outcomes. Given these other physicians, the influence that the relatively smaller number of first year residents have on patient outcomes will be minimized. In addition, the role that these more experienced physicians play in patient care will vary by the experience and ability of residents. The influence of new and inexperienced house‐staff in July will be blunted by an increased role played by staff‐people, fellows, and more experienced house‐staff at that time.

Our study was a methodologically rigorous examination of the July Phenomenon. We used a reliable outcome statisticthe ratio of observed to expected weekly number of hospital deathsthat was created with a validated, discriminative, and well‐calibrated model which predicted risk of death in hospital (Wong et al., Derivation and validation of a model to predict the daily risk of death in hospital, 2010, unpublished work). This statistic is inherently understandable and controlled for patient severity of illness. In addition, our study included a very broad and inclusive group of patients over five years at two hospitals.

Twenty‐three other studies have quantitatively sought a July Phenomenon for patient mortality (see Supporting Appendix A in the online version of this article). The studies contained a broad assortment of research methodologies, patient populations, and analytical methodologies. Nineteen of these studies (83%) found no evidence of a July Phenomenon for teaching‐hospital mortality. In contrast, two of these studies found notable adjusted odds ratios for death in hospital (1.41 and 1.34) in patients undergoing either general surgery13 or complex cardiovascular surgery,19 respectively. Blumberg22 also found an increased risk of death in surgical patients in July, but used indirect standardized mortality ratios as the outcome statistic and was based on only 139 cases at Maryland teaching hospitals in 1984. Only Jen et al.16 showed an increased risk of hospital death with new house‐staff in a broad patient population. However, this study was restricted to two arbitrarily chosen days (one before and one after house‐staff change‐over) and showed an increased risk of hospital death (adjusted OR 1.05, 95% CI 1.001.15) whose borderline statistical significance could have been driven by the large sample size of the study (n = 299,741).

Therefore, the vast majority of data, including those presented in our analyses, show that the risk of teaching‐hospital death does not significantly increase with the arrival of new house‐staff. This raises the question of why the July Phenomenon is commonly presented in the popular media as a proven fact.27–33 We believe this is likely because the concept of the July Phenomenon is understandable and has a rather morbid attraction for people both inside and outside the medical profession. Given the large amount of data refuting the existence of a July Phenomenon for patient mortality (see Supporting Appendix A in the online version of this article), we believe that this term should be used only as an example of an interesting idea that is refuted by a proper analysis of the data.

Several limitations of our study are notable. First, our analysis is limited to a single center, albeit one with two hospitals. However, ours is one of the largest teaching centers in Canada, with many new residents each year. Second, we examined only the association of physician experience with hospital mortality. While physician experience may significantly influence other patient outcomes, mortality is an important and reliably tallied statistic that is used as the primary outcome in most July Phenomenon studies. Third, we excluded approximately a quarter of all hospitalizations from the study. These exclusions were necessary because the Escobar model does not apply to these patients and therefore cannot be used to predict their risk of death in hospital. However, the vast majority of excluded patients (those less than 15 years old, and women admitted for routine childbirth) have a very low risk of death (the former because they are almost exclusively newborns, and the latter because the risk of maternal death during childbirth is very low). Since these patients contribute very little to either the expected or the observed number of deaths, their exclusion does little to threaten the study's validity. The remaining excluded patients, those transferred to or from other hospitals (n = 12,931), make up a small proportion of the total sampling frame (5% of admissions). Fourth, our study did not identify any significant association between house‐staff experience and patient mortality (Table 2). However, the confidence intervals around our estimates are wide enough, especially in some subgroups such as patients admitted electively, that important changes in patient mortality with house‐staff experience cannot be excluded.
For example, although our study found that a decrease in the ratio of observed to expected number of deaths exceeding 30% is very unlikely, a decrease of up to 30% (the lower limit of the confidence interval in Table 2) remains possible. By the same logic, the ratio could also increase by up to 10% (Table 2). Finally, we did not directly measure individual physician experience. New residents can vary extensively in their individual experience and ability. Incorporating individual measures of physician experience and ability would let us measure the association of new residents with patient outcomes more reliably. Without such measures, we had to rely on an ecological measure of physician experience, namely calendar date. This approach is nonetheless standard, since all studies of this question quantify physician experience ecologically by date (see Supporting Appendix A in the online version of this article).
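
Because experience is measured ecologically, each admission's exposure reduces to how far its date falls from the house‐staff changeover. A minimal sketch, assuming the changeover falls on July 1 each year and that exposure is expressed in whole weeks; both are assumptions for illustration, and `weeks_since_changeover` is a hypothetical helper rather than part of the study's analysis.

```python
from datetime import date


def weeks_since_changeover(admit: date, month: int = 7, day: int = 1) -> int:
    """Whole weeks since the most recent house-staff changeover.

    The July 1 default is an assumed changeover date, used here only to
    illustrate calendar date as an ecological proxy for resident experience.
    """
    changeover = date(admit.year, month, day)
    # Admissions before this year's changeover belong to the previous
    # academic year, so measure from last year's changeover instead.
    if admit < changeover:
        changeover = date(admit.year - 1, month, day)
    return (admit - changeover).days // 7


print(weeks_since_changeover(date(2010, 7, 1)))   # 0
print(weeks_since_changeover(date(2010, 7, 15)))  # 2
print(weeks_since_changeover(date(2011, 6, 30)))  # 52
```

Every admission on the same date receives the same value regardless of which residents actually treated the patient, which is exactly the limitation described above.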

In summary, our data, like those of most studies on this topic, show that the risk of death in teaching hospitals does not change with the arrival of new house‐staff.

- The effects of scheduled intern rotation on the cost and quality of teaching hospital care. Eval Health Prof. 1994;17:259–272.

- Specialty differences in the “July Phenomenon” for Twin Cities teaching hospitals. Med Care. 1993;31:73–83.

- The relationship of house staff experience to the cost and quality of inpatient care. JAMA. 1990;263:953–957.

- Indirect costs for medical education. Is there a July phenomenon? Arch Intern Med. 1989;149:765–768.

- The impact of accreditation council for graduate medical education duty hours, the July phenomenon, and hospital teaching status on stroke outcomes. J Stroke Cerebrovasc Dis. 2009;18:232–238.

- The killing season—Fact or fiction. BMJ. 1994;309:1690.

- The July effect: Impact of the beginning of the academic cycle on cardiac surgical outcomes in a cohort of 70,616 patients. Ann Thorac Surg. 2009;88:70–75.

- Is there a July phenomenon? The effect of July admission on intensive care mortality and length of stay in teaching hospitals. J Gen Intern Med. 2003;18:639–645.

- Neonatal mortality among low birth weight infants during the initial months of the academic year. J Perinatol. 2008;28:691–695.

- The “July Phenomenon” and the care of the severely injured patient: Fact or fiction? Surgery. 2001;130:346–353.

- The July effect and cardiac surgery: The effect of the beginning of the academic cycle on outcomes. Am J Surg. 2008;196:720–725.

- Mortality in Medicare patients undergoing surgery in July in teaching hospitals. Ann Surg. 2009;249:871–876.

- Seasonal variation in surgical outcomes as measured by the American College of Surgeons–National Surgical Quality Improvement Program (ACS‐NSQIP). Ann Surg. 2007;246:456–465.

- Mortality rate and length of stay of patients admitted to the intensive care unit in July. Crit Care Med. 2004;32:1161–1165.

- July—As good a time as any to be injured. J Trauma‐Injury Infect Crit Care. 2009;67:1087–1090.

- Early in‐hospital mortality following trainee doctors' first day at work. PLoS ONE. 2009;4.

- Effect of critical care medicine fellows on patient outcome in the intensive care unit. Acad Med. 2006;81:S1–S4.

- The “July Phenomenon”: Is trauma the exception? J Am Coll Surg. 2009;209:378–384.

- Impact of cardiothoracic resident turnover on mortality after cardiac surgery: A dynamic human factor. Ann Thorac Surg. 2008;86:123–131.

- Is there a “July Phenomenon” in pediatric neurosurgery at teaching hospitals? J Neurosurg Pediatr. 2006;105:169–176.

- Mortality and morbidity by month of birth of neonates admitted to an academic neonatal intensive care unit. Pediatrics. 2008;122:E1048–E1052.

- Measuring surgical quality in Maryland: A model. Health Aff. 1988;7:62–78.

- Complications and death at the start of the new academic year: Is there a July phenomenon? J Trauma‐Injury Infect Crit Care. 2010;68(1):19–22.

- Risk‐adjusting hospital inpatient mortality using automated inpatient, outpatient, and laboratory databases. Med Care. 2008;46:232–239.

- The Kaiser Permanente inpatient risk adjustment methodology was valid in an external patient population. J Clin Epidemiol. 2010;63:798–803.

- Introduction: The logic of latent variables. Latent Class Analysis. Newbury Park, CA: Sage; 1987:5–10.

- July Effect. Wikipedia. Available at: http://en.wikipedia.org/wiki/July_effect. Accessed April 1, 2011.

- Study proves “killing season” occurs as new doctors start work. September 23, 2010. Herald Scotland. Available at: http://www.heraldscotland.com/news/health/study‐proves‐killing‐season‐occurs‐as‐new‐doctors‐start‐work‐1.921632. Accessed April 1, 2011.

- The “July effect”: Worst month for fatal hospital errors, study finds. June 3, 2010. ABC News. Available at: http://abcnews.go.com/WN/WellnessNews/july‐month‐fatal‐hospital‐errors‐study‐finds/story?id=10819652. Accessed April 1, 2011.

- “Deaths rise” with junior doctors. September 22, 2010. BBC News. Available at: http://news.bbc.co.uk/2/hi/health/8269729.stm. Accessed April 1, 2011.

- July: When not to go to the hospital. June 2, 2010. Science News. Available at: http://www.sciencenews.org/view/generic/id/59865/title/July_When_not_to_go_to_the_hospital. Accessed April 1, 2011.

- July: A deadly time for hospitals. July 5, 2010. National Public Radio. Available at: http://www.npr.org/templates/story/story.php?storyId=128321489. Accessed April 1, 2011.

- Medical errors and patient safety: Beware the “July effect.” June 4, 2010. Better Health. Available at: http://getbetterhealth.com/medical‐errors‐and‐patient‐safety‐beware‐of‐the‐july‐effect/2010.06.04. Accessed April 1, 2011.

Copyright © 2011 Society of Hospital Medicine