Current models used to predict suicide risk fall short for racialized populations including Black, Indigenous, and people of color (BIPOC), new research shows.

Investigators developed two suicide prediction models to examine whether these types of tools are accurate in their predictive abilities, or whether they are flawed.

They found both prediction models failed to identify high-risk BIPOC individuals. In the first model, nearly half of outpatient visits followed by suicide were identified in White patients versus only 7% of visits followed by suicide in BIPOC patients. The second model had a sensitivity of 41% for White patients, but just 3% for Black patients and 7% for American Indian/Alaskan Native patients.

“You don’t know whether a prediction model will be useful or harmful until it’s evaluated. The take-home message of our study is this: You have to look,” lead author Yates Coley, PhD, assistant investigator, Kaiser Permanente Washington Health Research Institute, Seattle, said in an interview.

The study was published online April 28, 2021, in JAMA Psychiatry.

Racial inequities

Suicide risk prediction models have been “developed and validated in several settings” and are now in regular use at the Veterans Health Administration, HealthPartners, and Kaiser Permanente, the authors wrote.

But the performance of suicide risk prediction models, while accurate in the overall population, “remains unexamined” in particular subpopulations, they noted.

“Health records data reflect existing racial and ethnic inequities in health care access, quality, and outcomes; and prediction models using health records data may perpetuate these disparities by presuming that past healthcare patterns accurately reflect actual needs,” Dr. Coley said.

Dr. Coley and associates “wanted to make sure that any suicide prediction model we implemented in clinical care reduced health disparities rather than exacerbated them.”

To investigate, researchers examined all outpatient mental health visits to seven large integrated health care systems by patients 13 years and older (n = 13,980,570 visits by 1,422,534 patients; 64% female, mean age, 42 years). The study spanned from Jan. 1, 2009, to Sept. 30, 2017, with follow-up through Dec. 31, 2017.

In particular, researchers looked at suicides that took place within 90 days following the outpatient visit.

Researchers used two prediction models: logistic regression with LASSO (Least Absolute Shrinkage and Selection Operator) variable selection and a random forest technique, a “tree-based method that explores interactions between predictors (including those with race and ethnicity) in estimating probability of an outcome.”

The models considered prespecified interactions between predictors, including prior diagnoses, suicide attempts, and PHQ-9 [Patient Health Questionnaire–9] responses, and race and ethnicity data.

Researchers evaluated performance of the prediction models in the overall validation set and within subgroups defined by race/ethnicity.

The area under the curve measured model discrimination, and sensitivity was estimated for global and race/ethnicity-specific thresholds.
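To make these two metrics concrete, here is a minimal Python sketch of a subgroup evaluation under a single global threshold. It is not the investigators' code: the group labels "A" and "B", the risk scores, the 0.5 threshold, and the function names are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict

def auc(scores, labels):
    """Chance that a random positive case scores above a random negative (ties count half)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        return None  # AUC is undefined without both outcomes
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def sensitivity(scores, labels, threshold):
    """Share of positive cases whose score is at or above the threshold."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    if not pos:
        return None
    return sum(s >= threshold for s in pos) / len(pos)

def evaluate_by_group(records, threshold):
    """records: iterable of (group, score, label) tuples; returns metrics per group."""
    by_group = defaultdict(lambda: ([], []))
    for group, score, label in records:
        by_group[group][0].append(score)
        by_group[group][1].append(label)
    return {
        g: {"auc": auc(s, y), "sensitivity": sensitivity(s, y, threshold)}
        for g, (s, y) in by_group.items()
    }

# Made-up toy data: (hypothetical group, predicted risk, outcome 1 = event occurred)
records = [
    ("A", 0.9, 1), ("A", 0.8, 1), ("A", 0.3, 0), ("A", 0.2, 0),
    ("B", 0.4, 1), ("B", 0.1, 1), ("B", 0.3, 0), ("B", 0.2, 0),
]
results = evaluate_by_group(records, threshold=0.5)
for group, metrics in sorted(results.items()):
    print(group, metrics)
```

In this toy data the same global threshold flags every event in group A and none in group B, which is the kind of gap that only shows up when performance is broken out by subgroup.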

‘Unacceptable’ scenario

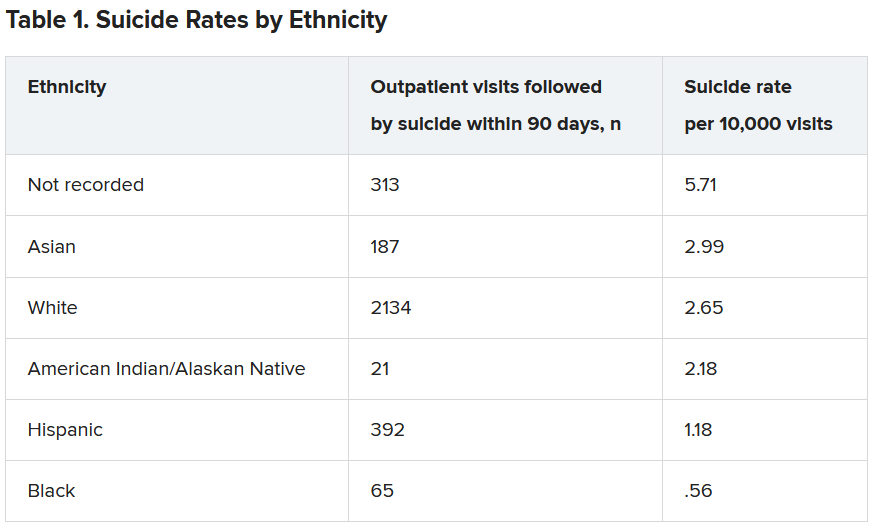

Within the total population, 3,143 visits were followed within 90 days by 768 deaths by suicide. Suicide rates were highest for visits by patients with no recorded race/ethnicity, followed by visits by Asian, White, American Indian/Alaskan Native, Hispanic, and Black patients.

Both models showed “high” AUC and sensitivity for White, Hispanic, and Asian patients but “poor” AUC and sensitivity for Black and American Indian/Alaskan Native patients and for patients without recorded race/ethnicity, the authors reported.

“Implementation of prediction models has to be considered in the broader context of unmet health care needs,” said Dr. Coley.

“In our specific example of suicide prediction, BIPOC populations already face substantial barriers in accessing quality mental health care and, as a result, have poorer outcomes, and using either of the suicide prediction models examined in our study will provide less benefit to already-underserved populations and widen existing care gaps,” a scenario Dr. Coley said is “unacceptable.”

Biased algorithms

Commenting on the study, Jonathan Singer, PhD, LCSW, associate professor at Loyola University, Chicago, described it as an “important contribution because it points to a systemic problem and also to the fact that the algorithms we create are biased, created by humans, and humans are biased.”

Although the study focused on the health care system, Dr. Singer believes the findings have implications for individual clinicians.

“If clinicians may be biased against identifying suicide risk in Black and Native American patients, they may attribute suicidal risk to something else. For example, we know that in Black Americans, expressions of intense emotions are oftentimes interpreted as aggression or being threatening, as opposed to indicators of sadness or fear,” noted Dr. Singer, who is also president of the American Academy of Suicidology and was not involved with the study.

“Clinicians who misinterpret these intense emotions are less likely to identify a Black client or patient who is suicidal,” Dr. Singer said.

The research was supported by the Mental Health Research Network from the National Institute of Mental Health. Dr. Coley has reported receiving support through a grant from the Agency for Healthcare Research and Quality. Dr. Singer reported no relevant financial relationships.

A version of this article first appeared on Medscape.com.
